Deutsche Telekom's Access 4.0 transforms the network edge

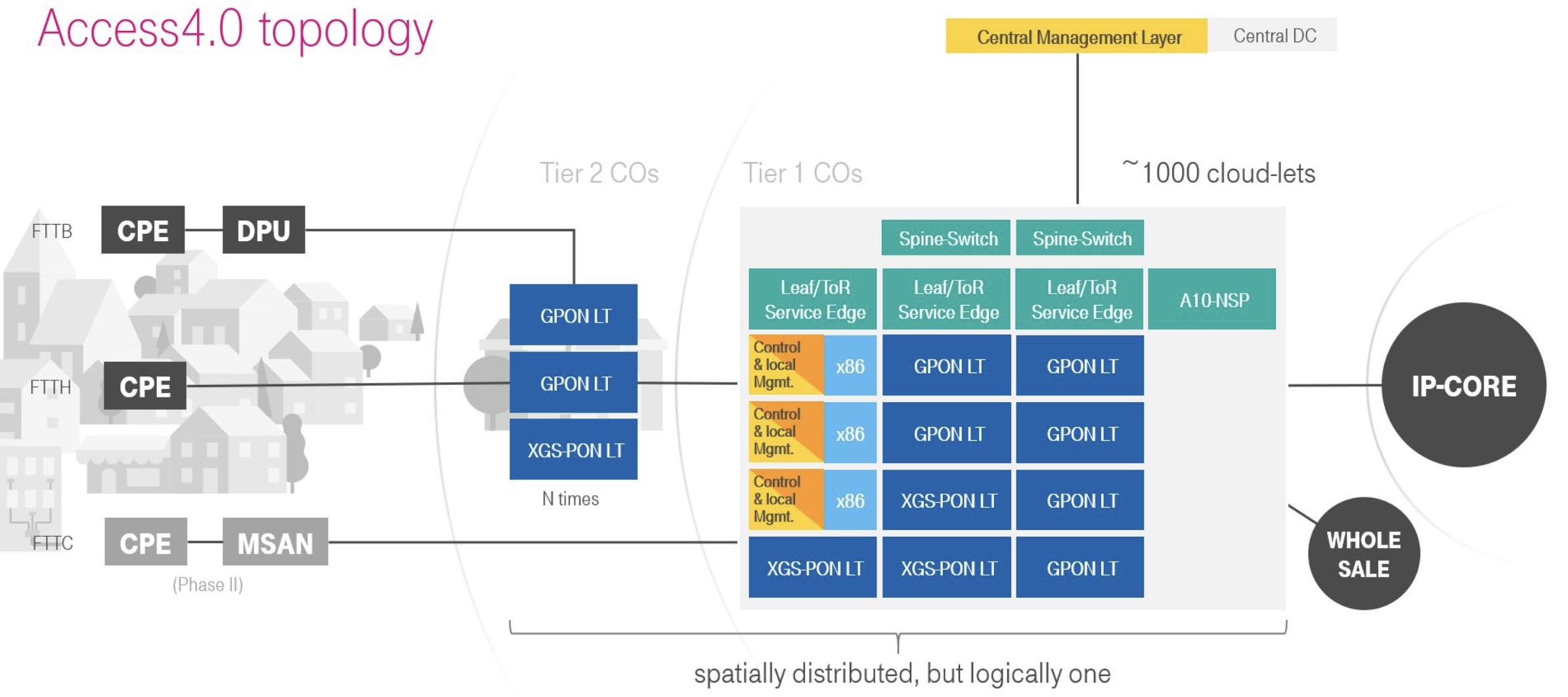

Deutsche Telekom has a working software platform for its Access 4.0 architecture that will start delivering passive optical network (PON) services to German customers later this year. The architecture will also serve as a blueprint for future edge services.

Access 4.0 is a disaggregated design comprising open-source software and platforms that use merchant chips – ‘white-boxes’ – to deliver fibre-to-the-home (FTTH) and fibre-to-the-building (FTTB) services.

“One year ago we had it all as prototypes plugged together to see if it works,” says Hans-Jörg Kolbe, chief engineer and head of SuperSquad Access 4.0. “Since the end of 2019, our target software platform – a first end-to-end system – is up and running.”

Deutsche Telekom has about 1,000 central office sites in Germany, several of which will be upgraded this year to the Access 4.0 architecture.

“Once you have a handful of sites up and running and you have proven the principle, building another 995 is rather easy,” says Robert Soukup, senior program manager at Deutsche Telekom, and another of the co-founders of the Access 4.0 programme.

Origins

The Access 4.0 programme emerged with the confluence of two developments: a detailed internal study of the costs involved in building networks and the advent of the Central Office Re-architected as a Datacentre (CORD) industry initiative.

Deutsche Telekom was scrutinising the costs involved in building its networks. “Not like removing screws here and there but looking at the end-to-end costs,” says Kolbe.

Separately, the operator took an interest in CORD, which at the time was overseen by ON.Lab.

At first, Kolbe thought CORD was an academic exercise but, on closer examination, he and his colleague Thomas Haag, the chief architect and the final co-founder of Access 4.0, decided the activity needed to be investigated internally. In particular, they wanted to assess CORD's feasibility, understand how bringing together cloud technologies with access hardware would work, and quantify the cost benefits.

“The first goal was to drive down cost in our future network,” says Kolbe. “And that was proven in the first month by a decent cost model. Then, building a prototype and looking into it, we found more [cost savings].”

Given the cost focus, the operator hadn’t considered the far-reaching changes involved in adopting white boxes and disaggregating software and hardware, nor how moving to a mainly software-based architecture could shorten the time needed to introduce new services.

“I knew both these arguments were used when people started to build up Network Functions Virtualisation (NFV) but we didn’t have this in mind; it was a plain cost calculation,” says Kolbe. “Once we started doing it, however, we found both these things.”

Cost engineering

Deutsche Telekom says it has learnt a lot from the German automotive industry when it comes to cost engineering. For some companies, cost is part of the engineering process; for others, it is part of procurement.

“The issue is not talking to a vendor and asking for a five percent discount on what we want it to deliver,” says Soukup, adding that what the operator seeks is fair prices for everybody.

“Everyone needs to make a margin to stay in business but the margin needs to be fair,” says Soukup. “If we make with our customers a margin of ‘X’, it is totally out of the blue that our vendors get a margin of ‘10X’.”

The operator’s goal with Access 4.0 has been to determine how best to deploy broadband internet access on a large scale and with carrier-grade quality. Access is an application suited to cost reduction since “the closer you come to the customer, the more capex [capital expenditure] you have to spend,” says Soukup, adding that since capex is always less than what you’d like, creativity is required.

“When you eat soup, you always grasp a spoon,” says Soukup. “But we asked ourselves: ‘Is a spoon the right thing to use?’”

Software and White Boxes

Access 4.0 uses two components from the Open Networking Foundation (ONF): Voltha and the Software Defined Networking (SDN) Enabled Broadband Access (SEBA) reference design.

Voltha provides a common control and management system for PON white boxes while making the PON network appear as a programmable switch to the SDN controller above it. “It abstracts away the [PON] optical line terminal (OLT) so we can treat it as a switch,” says Soukup.
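The sketch below is a minimal Python model of the abstraction Soukup describes: the ONUs hanging off an OLT's PON ports are presented to a controller as numbered ports on a switch, alongside an uplink port. The class and method names (`OltAsSwitch`, `attach_onu`, `switch_view`) are hypothetical and are not Voltha's API; the sketch only illustrates the idea of hiding PON specifics behind a switch-like view.

```python
from dataclasses import dataclass, field


@dataclass
class OltAsSwitch:
    """Hypothetical model: an OLT presented to a controller as a switch.

    Each ONU on a PON tree becomes a logical switch port; the uplink
    towards the aggregation network is a fixed port (port 0 here).
    """
    uplink_port: int = 0
    _ports: dict = field(default_factory=dict)  # (pon_id, onu_serial) -> port
    _next_port: int = 1

    def attach_onu(self, pon_id: int, onu_serial: str) -> int:
        """Register an ONU and return the logical port the controller sees."""
        key = (pon_id, onu_serial)
        if key not in self._ports:
            self._ports[key] = self._next_port
            self._next_port += 1
        return self._ports[key]

    def switch_view(self) -> list:
        """The controller's view: plain port numbers, no PON details."""
        return [self.uplink_port] + sorted(self._ports.values())


olt = OltAsSwitch()
p1 = olt.attach_onu(pon_id=0, onu_serial="ONU-A")
p2 = olt.attach_onu(pon_id=1, onu_serial="ONU-B")
print(olt.switch_view())  # [0, 1, 2]
```

Re-attaching a known ONU returns its existing port, so the controller's view stays stable as subscribers reconnect.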

SEBA supports a range of fixed broadband technologies that include GPON and XGS-PON. “SEBA 2.0 is a design we are using and are compliant [with],” says Soukup.

“We are bringing our technology to geographically-distributed locations – central offices – very close to the customer,” says Kolbe. Some aspects are common with the cloud technology used in large data centres but there are also differences.

For example, cloud-native technologies such as Kubernetes are shared, whereas OpenStack, which large data centres use, is not needed for Access 4.0. Both environments also use a leaf-spine switching architecture and SDN technology.

“One thing we have learned is that you can’t just take the big data centre technology and put it in distributed locations and try to run heavy-throughput access networks on them,” says Kolbe. “This is not going to work and it led us to the white box approach.”

The issue is that certain workloads cannot be tackled efficiently using x86-based server processors. An example is the Broadband Network Gateway (BNG). “You need to do significant enhancements to either run on the x86 or you offload it to a different type of hardware,” says Kolbe.

Deutsche Telekom started by running a commercial vendor’s BNG on servers. “In parallel, we did the cost calculation and it was horrible because of the throughput-per-Euro and the power-per-Euro,” says Kolbe. And this is where cost engineering comes in: looking at the system, the biggest cost driver was the servers.
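Kolbe's comparison can be expressed as two simple ratios. The Python sketch below computes throughput per euro of capex and throughput per watt for two BNG options; reading the quote's "power-per-Euro" as power efficiency is our interpretation. Every figure is a hypothetical placeholder, not Deutsche Telekom data, and the `BngOption` class is illustrative.

```python
from dataclasses import dataclass


@dataclass
class BngOption:
    name: str
    throughput_gbps: float  # forwarding capacity (hypothetical)
    capex_eur: float        # purchase cost (hypothetical)
    power_w: float          # typical power draw (hypothetical)

    def gbps_per_euro(self) -> float:
        return self.throughput_gbps / self.capex_eur

    def gbps_per_watt(self) -> float:
        return self.throughput_gbps / self.power_w


# Placeholder figures for illustration only; not measured or quoted values.
server = BngOption("x86 server BNG", throughput_gbps=100, capex_eur=20_000, power_w=800)
whitebox = BngOption("ASIC white-box BNG", throughput_gbps=1_600, capex_eur=40_000, power_w=600)

for opt in (server, whitebox):
    print(f"{opt.name}: {opt.gbps_per_euro() * 1000:.1f} Gbps per kEUR, "
          f"{opt.gbps_per_watt():.2f} Gbps per W")
```

Normalising by throughput is what lets a more expensive box come out cheaper: the comparison is per gigabit delivered, not per unit purchased.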

“We looked at the design and in the data path there are three programmable ASICs,” says Kolbe. “And this is what we did; it is not a product yet but it is working in our lab and we have done trials.” The result is that the operator has created an opportunity for a white-box design.

There are also differences in the use of switching between large data centres and access. In large data centres, the switching supports the huge east-west traffic flows while in carrier networks, especially close to the edge, this is not required.

Instead, for Access 4.0, traffic from PON trees arrives at the OLT where it is aggregated by a chipset before being passed on to a top-of-rack switch where aggregation and packet processing occur.

The leaf-and-spine architecture can also be used to provide a ‘breakout’ to support edge-cloud services such as gaming and local services. “There is a traffic capability there but we currently don’t use it,” says Kolbe. “But we are thinking that in the future we will.”

Deutsche Telekom has been public about working with such companies as Reply, RtBrick and Broadcom. Reply is a key partner, while RtBrick contributes a major part of the specialist BNG software.

Kolbe points out that there is no standard for using network processor chips: “They are all specific, which is why we need a strong partnership with Broadcom and others and build a common abstraction layer.”
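A common abstraction layer usually means writing network functions against one interface and hiding each chip's vendor-specific SDK behind an adapter. The Python sketch below shows that pattern with an abstract base class; `ForwardingChip`, `install_route` and the two vendor classes are hypothetical, and the adapters simply store routes in memory instead of programming silicon.

```python
from abc import ABC, abstractmethod


class ForwardingChip(ABC):
    """Hypothetical common interface that BNG software would program."""

    @abstractmethod
    def install_route(self, prefix: str, next_hop: str) -> None: ...

    @abstractmethod
    def routes(self) -> dict: ...


class VendorAChip(ForwardingChip):
    """Stand-in for an adapter wrapping one vendor's SDK."""

    def __init__(self) -> None:
        self._table = {}

    def install_route(self, prefix: str, next_hop: str) -> None:
        # A real adapter would translate this into vendor A's SDK calls.
        self._table[prefix] = next_hop

    def routes(self) -> dict:
        return dict(self._table)


class VendorBChip(ForwardingChip):
    """Second stand-in that keeps entries in a different internal format."""

    def __init__(self) -> None:
        self._entries = []

    def install_route(self, prefix: str, next_hop: str) -> None:
        self._entries.append((prefix, next_hop))

    def routes(self) -> dict:
        return {p: nh for p, nh in self._entries}


def provision_subscriber(chip: ForwardingChip, prefix: str, gateway: str) -> None:
    """Network-function code sees only the common interface."""
    chip.install_route(prefix, gateway)


for chip in (VendorAChip(), VendorBChip()):
    provision_subscriber(chip, "192.0.2.0/24", "10.0.0.1")
    print(type(chip).__name__, chip.routes())
```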

Deutsche Telekom also works closely with Intel, incumbent network vendors such as ADTRAN and original design manufacturers (ODMs) including EdgeCore Networks.

Challenges

About 80 percent of the design effort for Access 4.0 is software and this has been a major undertaking for Deutsche Telekom.

“The challenge is to get up to speed with software; that is not a thing that you just do,” says Kolbe. “We can’t just pretend we are all software engineers.”

Deutsche Telekom says the new players it works with – the software specialists – also have to better understand telecom. “We need to meet in the middle,” says Kolbe.

Soukup adds that mastering software takes time – years rather than weeks or months – and this is only to be expected given the network transformation operators are undertaking.

But once they master software, operators can expect all its benefits: the ability to work in an agile manner, continuous integration/continuous delivery (CI/CD), and the more rapid introduction of services and ideas.

“This is what we have discovered besides cost-savings: becoming more agile and transforming an organisation which can have an idea and realise it in days or weeks,” says Soukup. The means are there, he says: “We have just copied them from the large-scale web-service providers.”

Status

The first Access 4.0 services will be FTTH delivered from a handful of central offices in Germany later this year. FTTB services will then follow in early 2021.

“Once we are out there and we have proven that it works and it is carrier-grade, then I think we are very fast in onboarding other things,” says Soukup. “But they are [for now] not part of our case.”

Access drives a need for 10G compact aggregation boxes

Infinera has unveiled a platform to aggregate multiple 10-gigabit traffic streams originating in the access network.

The 1.6-terabit HDEA 1600G platform is designed to aggregate 80, 10-gigabit wavelengths. The use of ten-gigabit wavelengths in access continues to grow with the advent of 5G mobile backhaul and developments in cable and passive optical networking (PON).

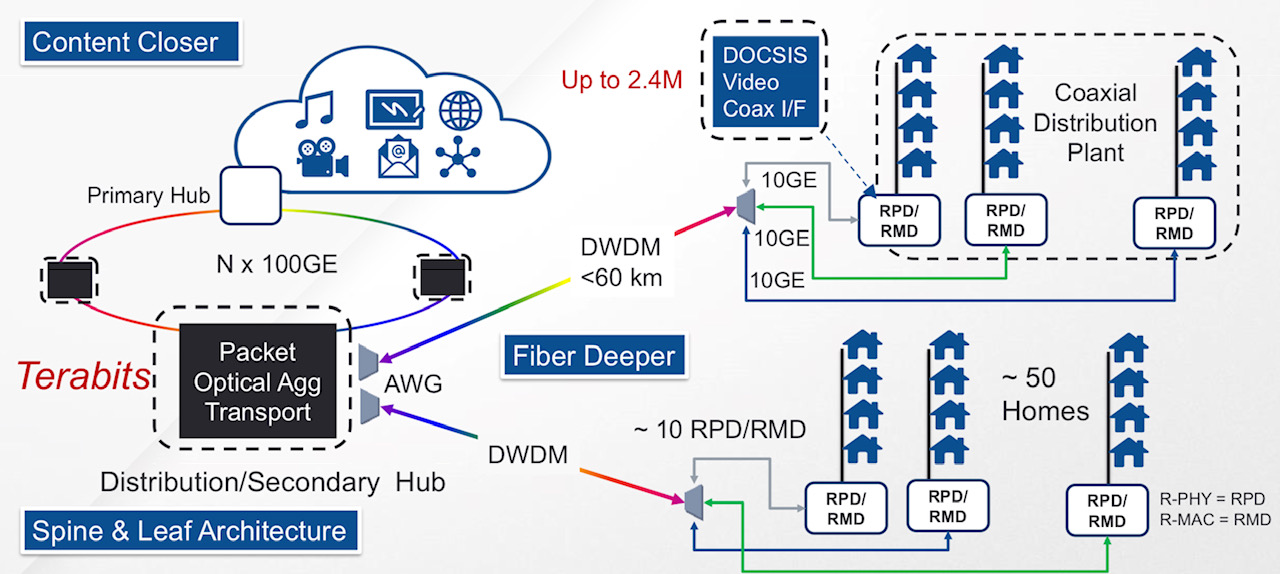

A distributed access architecture being embraced by cable operators. Shown are the remote PHY devices (RPD) or remote MAC-PHY devices (RMD), functionality moved out of the secondary hub and closer to the end user. Also shown is how DWDM technology is moved closer to the edge of the network. Source: Infinera.

Infinera has adopted a novel mechanical design for its 1 rack unit (1RU) HDEA 1600G that uses the sides of the platform to fit 80 SFP+ optical modules.

The platform also features a 1.6-terabit Ethernet switch chip that aggregates the traffic from the 10-gigabit streams to fill 100-gigabit wavelengths that are passed to other switching or transport platforms for transmission into the network.

Distributed access architecture

Jon Baldry, metro marketing director at Infinera, cites the adoption of a distributed access architecture (DAA) by cable operators as an example of where 10-gigabit links are set to proliferate in the access network.

DAA is being adopted by cable operators to compete with the telecom operators’ rollout of fibre-to-the-home (FTTH) broadband access technology.

A recent report by market research firm, Ovum, addressing DAA in the North American market, discusses how the architectural approach will free up space in cable headends, reduce the operators’ operational costs, and allow the delivery of greater bandwidth to subscribers.

Implementing DAA involves bringing fibre as well as cable network functionality closer to the user. Such functionality includes remote PHY devices and remote MAC-PHY devices. It is these devices that will use a 10-gigabit interface, says Baldry: “The traffic they will be running at first will be two or three gigabits over that 10-gigabit link.”

Julie Kunstler, principal analyst at Ovum’s Network Infrastructure and Software group, says the choice whether to deploy a remote PHY or a remote MAC-PHY architecture is an issue of an operator's ‘religion’. What is important, she says, is that both options exploit the existing hybrid fibre coax (HFC) architecture to boost the speed tiers delivered to users.

The current, pre-DAA, cable network architecture. Source: Infinera.

In the current pre-DAA architecture, the cable network comprises cable headends and secondary distribution hubs (see diagram above). It is at the secondary hub that the dense wavelength-division multiplexing (DWDM) network terminates. From there, RF over fibre is carried over the hybrid fibre-coax (HFC) plant. The HFC plant also requires amplifier chains to overcome cable attenuation and the losses resulting from the cable splits that deliver the RF signals to the homes.

Typically, an HFC node in the cable network serves up to 500 homes. With the adoption of DAA and the use of remote PHYs, the amplifier chains can be removed, with each PHY serving 50 homes (see diagram at top).

“Basically DWDM is being pushed out to the remote PHY devices,” says Baldry. The remote PHYs can be as far as 60km from the secondary hub.

“DAA is a classic example where you will have dense 10-gigabit links all coming together at one location,” says Baldry. “Worst case, you can have 600-700 remote PHY devices terminating at a secondary hub.”

The same applies to cellular.

At present 4G networks use 1-gigabit links for mobile backhaul but 5G will use 10-gigabit and 25-gigabit links in a year or two. “So the edge of the WDM network has really jumped from 1 gigabit to 10 gigabit,” says Baldry.

It is the aggregation of large numbers of 10-gigabit links that the HDEA 1600G platform is designed to address.

HDEA 1600G

Only a certain number of pluggable interfaces can fit on the front panel of a 1RU box. To accommodate 80, 10-gigabit streams, the two sides of the platform are used for the interfaces. Using the HDEA’s sides creates much more space for the 1RU’s input-output (I/O) compared to traditional transport kit, says Baldry.

The 40 SFP+ modules on each side of the platform are accessed by pulling the shelf out and this can be done while it is operational (see photo below). Such an approach is used for supercomputing but Baldry believes Infinera is the first to adopt it for a transport product.

Infinera has also adopted MPO connectors to simplify the fibre management involved in connecting 80 SFP+ modules, each requiring a fibre pair.

The HDEA 1600G has two groups of four MPO connectors on the front panel. Each group serves the 40 modules on one side of the platform, with each MPO cable having 20 fibres to connect 10 SFP+ modules.

A site terminating 400 remote PHYs, for example, requires the connection of 40 MPO cables instead of 800 individual fibres, says Baldry, simplifying installation greatly.
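Baldry's figures follow from simple arithmetic: each remote PHY is terminated by one SFP+ needing a fibre pair, and each MPO cable carries 20 fibres for 10 SFP+ modules. A small Python check, with an illustrative function name:

```python
import math

FIBRES_PER_SFP = 2   # one fibre pair per SFP+ module
FIBRES_PER_MPO = 20  # each MPO cable serves 10 SFP+ modules


def hub_cabling(remote_phys: int) -> tuple[int, int]:
    """Return (individual fibres, MPO cables) needed to terminate the PHYs."""
    fibres = remote_phys * FIBRES_PER_SFP
    mpo_cables = math.ceil(fibres / FIBRES_PER_MPO)
    return fibres, mpo_cables


print(hub_cabling(400))  # (800, 40)
```

The same function gives eight MPO cables for a fully populated 80-port HDEA 1600G, matching the two groups of four connectors on its front panel.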

The other end of the MPO cable connects to a dense multiplexer-demultiplexer (mux-demux) unit that separates the individual 10-gigabit access wavelengths received over the DWDM link.

Each mux-demux unit uses an arrayed waveguide grating (AWG) that is tailored to the cable operators’ wavelength needs. The 24-channel mux-demux design supports 20, 100GHz-wide channels for the 10-gigabit wavelengths and four wavelengths reserved for business services. Business services have become an important part of the cable operators’ revenues.

Infinera says the HDEA platform supports the extended C-band for a total of 96 wavelengths.

The company says it will develop different AWG configurations tailored for the wavelengths and channel count required for the different access applications.

In the rack, the HDEA aggregation platform takes up one shelf, while eight mux-demux units take up another 1RU. Space is left in between to house the cabling between the two.

The HDEA 1600G pulled out of the rack, showing the MPO connectors and the space to house the cabling between the HDEA and the rack of compact AWGs. Source: Infinera.

Baldry points out that the four business service wavelengths are not touched by the HDEA platform. Rather, these are routed to separate Ethernet switches dedicated to business customers. "We break those wavelengths out and hand them over to whatever system the operator is using," he says.

The HDEA 1600G also features eight 100-gigabit line-side interfaces that carry the aggregated cable access streams. Infinera is not revealing the supplier of the 1.6-terabit switch silicon it uses for the HDEA platform, whose capacity is split between 800 gigabits client-side and 800 gigabits line-side.
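The port counts balance: 80 client ports at 10 gigabits equal eight 100-gigabit line ports, and the two sides together account for the switch's 1.6 terabits. A quick Python check, with illustrative constant names:

```python
CLIENT_PORTS, CLIENT_GBPS = 80, 10  # SFP+ access ports on the two sides
LINE_PORTS, LINE_GBPS = 8, 100      # 100-gigabit line-side interfaces

client_capacity = CLIENT_PORTS * CLIENT_GBPS  # 800 Gbps
line_capacity = LINE_PORTS * LINE_GBPS        # 800 Gbps
switch_capacity = client_capacity + line_capacity

assert client_capacity == line_capacity, "client and line sides differ"
print(f"switch capacity: {switch_capacity / 1000} Tbps")  # switch capacity: 1.6 Tbps
```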

The platform supports all the software Infinera uses for its EMXP, a packet-optical switch tailored for access and aggregation that is part of Infinera’s XTM family of products. Features include multi-chassis link aggregation group (MC-LAG), ring protection, all the Metro Ethernet Forum services, and synchronisation for mobile networks, says Baldry.

Auto-Lambda

Infinera has developed what it calls its Auto-Lambda technology to simplify the wavelength management of the remote PHY devices.

Here, the optics set up the connection instead of a field engineer using a spreadsheet to determine which wavelength to use for a particular remote PHY. Tunable SFP+ modules can be used only at the remote PHY devices, with lower-cost fixed-wavelength (grey) SFP+ modules in the HDEA platform, or both ends can use tunable optics. Using tunable SFP+ modules at each end may be more expensive, but the operator gains flexibility and sparing benefits.

When fixed optics are used within the HDEA platform, the platform's SFP+ operates in a listening mode only. When a tunable SFP+ transceiver is plugged in at a remote PHY, which could be days later, it cycles through each wavelength. The blocking nature of the AWG means that such cycling does not disturb other wavelengths already in use.

Once the tunable SFP+ reaches the required wavelength, the transmitted signal is passed through the AWG to reach the listening transceiver at the switch. On receipt of the signal, the switch SFP+ turns on its transmitter and talks to the remote transceiver to establish the link.
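The single-ended set-up can be modelled as a search. The tunable module steps through the channel plan, and because the AWG port it is cabled to passes only one wavelength, light reaches the listening hub transceiver only when the sweep hits that channel. The Python sketch below is a toy model under our own assumptions (channel numbering, one channel tried per step, the function names); it is not Infinera's Auto-Lambda firmware.

```python
def awg_passes(port_channel: int, tx_channel: int) -> bool:
    """An AWG port passes light only at its own channel (blocking behaviour)."""
    return port_channel == tx_channel


def single_ended_setup(port_channel: int, channel_plan: list[int]) -> tuple[int, int]:
    """Sweep a tunable remote module until the listening hub module hears it.

    Returns (channel found, number of steps taken).
    """
    for step, tx_channel in enumerate(channel_plan, start=1):
        if awg_passes(port_channel, tx_channel):
            # The hub transceiver detects light, turns on its transmitter,
            # and the two ends complete the link handshake.
            return tx_channel, step
    raise RuntimeError("no channel reached the listening transceiver")


plan = list(range(1, 21))  # e.g. the 20 access channels of one mux-demux
print(single_ended_setup(port_channel=7, channel_plan=plan))  # (7, 7)
```

Because the hub end stays silent until it hears light, a wrong guess by the remote module costs only time and never interferes with neighbouring channels.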

For the four business wavelengths, both ends of the link use auto-tunable SFP+ modules, what is referred to as a dual-ended solution. This is because the end-point systems may not be Infinera platforms and may not know how to manage WDM wavelengths, says Baldry.

In this more complex scenario, the time taken to establish a link is theoretically much longer. The remote-end module has to cycle through all the wavelengths and, if no connection is made, the near-end transceiver changes its transmit wavelength and the remote end’s wavelength cycling is repeated.

Given that a sweep can take two minutes or more, an 80-wavelength system could take close to three hours in the worst case to establish the link; an unacceptable delay.
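The near three-hour figure is a worst-case product: in a naive dual-ended search, each of the 80 near-end transmit wavelengths may require a full remote sweep. A Python sketch of that arithmetic, with the two-minute sweep from the text as an input:

```python
def worst_case_dual_ended_minutes(wavelengths: int, sweep_minutes: float) -> float:
    """Naive dual-ended search: one full remote sweep per near-end wavelength."""
    return wavelengths * sweep_minutes


minutes = worst_case_dual_ended_minutes(wavelengths=80, sweep_minutes=2.0)
print(f"{minutes:.0f} min = {minutes / 60:.1f} h")  # 160 min = 2.7 h
```

Since a sweep can take longer than two minutes, the real worst case edges towards three hours, which is why exchanging information between the two ends, rather than blind scanning, matters.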

Infinera is not detailing how its dual-ended scheme works, but a combination of scanning and communication between the two ends is used. Infinera has shown such a dual-ended scheme setting up a link in four minutes and believes it can halve that time.

Finisar detailed its own Flextune fast-tuning technology at ECOC 2018. However, Infinera stresses its technology is different.

Infinera says it is talking to several pluggable optical module makers. “They are working on 25-gigabit optics which we are going to need for 5G,” says Baldry. “As soon as they come along, with the same firmware, we then have auto-tunable for 5G.”

System benefits

Infinera says its HDEA design delivers several benefits. Using the sides of the box means that the platform supports 80 SFP+ interfaces, twice the capacity of competing designs. In turn, using MPO connectors simplifies the fibre management, benefiting operational costs.

Infinera also believes that the platform’s overall power consumption has a competitive edge. Baldry says Infinera incorporates only the features and hardware needed. “We have deliberately not done a lot of stuff in Layer 2 to get better transport performance,” he says. The result is a more power-efficient and lower latency design. The lower latency is achieved using ‘thin buffers’ as part of the switch’s output-buffered queueing architecture, he says.

The platform supports open application programming interfaces (APIs) so that cable operators can make use of open framework developments such as the Central Office Re-architected as a Datacentre (CORD) initiative being developed by the Open Networking Foundation. CORD uses open-source software-defined networking (SDN) technology such as ONOS and the OpenFlow protocol to control the box.

An operator can also choose to use Infinera’s Digital Network Administrator (DNA) management software, SDN controller and orchestration software, which Infinera gained through its acquisition of Coriant.

The HDEA 1600G is generally available and in the hands of several customers.

Sckipio improves G.fast’s speed, reach and density

Sckipio has enhanced the performance of its G.fast chipset, demonstrating 1 gigabit data rates over 300 meters of telephone wire. The G.fast broadband standard has been specified for 100 meters only. The Israeli start-up has also demonstrated 2 gigabit performance by bonding two telephone wires.

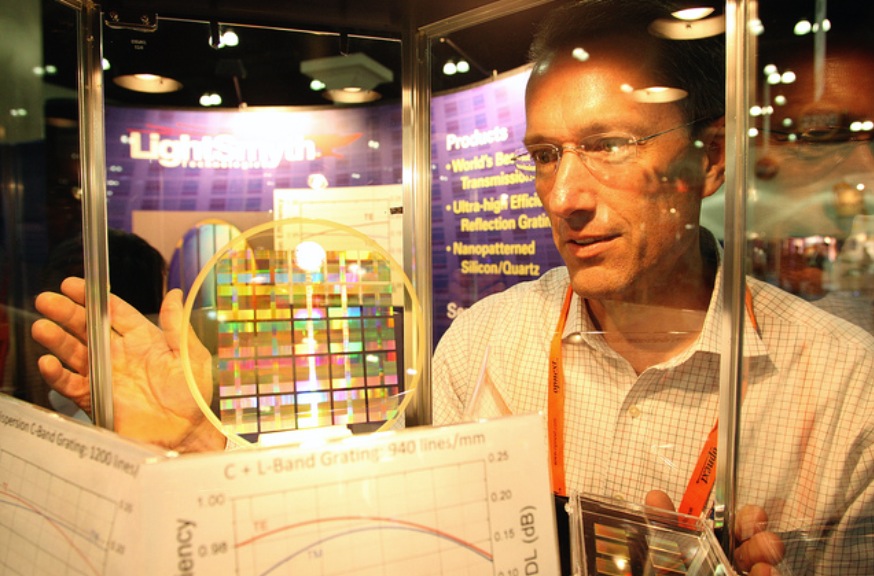

Michael Weissman

“Understand that G.fast is still immature,” says Michael Weissman, co-founder and vice president of marketing at Sckipio. “We have improved the performance of G.fast by 40 percent this summer because we haven’t had time to do the optimisation until now.”

The company also announced a 32-port distribution point unit (DPU), the aggregation unit that is fed via fibre and delivers G.fast to residences.

The 32-port design is double Sckipio’s current largest DPU design. The DPU uses eight Sckipio 4-port DP3000 distribution point chipsets, and moving to 32 lines requires more demanding processing to tackle the greater crosstalk. Vectoring uses signal processing to implement noise cancellation techniques to counter the crosstalk and is already used for VDSL2.

G.fast

“G.fast is part of the toolbox enabling faster and faster speeds, and fills an important role in the wireline broadband market,” says Julie Kunstler, principal analyst, components at market research firm, Ovum.

G.fast achieves gigabit rates over copper by expanding the usable spectrum to 106 MHz. VDSL2, currently the most advanced digital subscriber line (DSL) standard, uses 17 MHz of spectrum. But signal attenuation increases at higher frequencies, shortening the reach. VDSL2 is typically deployed over links of 1,500 meters, whereas G.fast distances will likely be 300 meters or less.

Another issue is signal leakage, or crosstalk, between copper pairs in a cable bundle that can house tens or hundreds of copper twisted pairs. Moreover, crosstalk increases with frequency. The leakage means each twisted pair carries not only its own signal but also noise, the sum of the leakage components from neighbouring pairs. Vectoring cancels this crosstalk to restore a line's data capacity.
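At its core, vectoring is linear algebra applied tone by tone. Write the downstream channel for one tone across a bundle as a matrix H, with each pair's direct gain on the diagonal and the crosstalk couplings off the diagonal. The transmitter can then precode the signals so that the crosstalk cancels at the receivers. The numpy sketch below uses a zero-forcing-style precoder, P = H⁻¹·diag(H), on three pairs with invented coupling values; real vectoring engines must estimate H from the lines and respect transmit-power limits, both of which the sketch ignores.

```python
import numpy as np

# Hypothetical 3-pair channel for a single tone: direct gains on the diagonal,
# crosstalk couplings off the diagonal (values invented for illustration).
H = np.array([
    [1.00, 0.08, 0.05],
    [0.06, 0.90, 0.07],
    [0.04, 0.09, 0.95],
])

x = np.array([1.0, -1.0, 1.0])  # symbols sent on each pair for this tone

# Without vectoring, each receiver sees its own signal plus crosstalk.
y_plain = H @ x

# Zero-forcing precoder: choose P so that H @ P equals the diagonal of H.
D = np.diag(np.diag(H))
P = np.linalg.inv(H) @ D
y_vectored = H @ (P @ x)

print("crosstalk residue without vectoring:", y_plain - D @ x)
print("crosstalk residue with vectoring:   ", y_vectored - D @ x)
```

After precoding, each receiver sees only its own pair's direct-path signal, as if the neighbouring pairs were silent.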

G.fast can be seen as the follow-on to VDSL2 but there are notable differences. Besides the wider 106 MHz spectrum, G.fast uses a different duplexing scheme. DSL uses frequency-division duplexing (FDD), where upstream (from the home) and downstream data are transmitted continuously but on different frequency bands, or tones. In contrast, G.fast uses time-division duplexing (TDD), where at any moment the whole spectrum is used either to send or to receive data.

TDD gives G.fast two features that DSL lacks: the ratio of upstream to downstream data can be adjusted, and the line can be put into a low-power mode when idle.
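Because G.fast shares one spectrum in time, the downstream/upstream split comes down to how the symbol periods in each TDD frame are allocated. The Python sketch below assumes an illustrative 36-symbol frame and a 1,000 Mb/s aggregate rate, and ignores guard times, synchronisation symbols and overhead; `tdd_split` is a hypothetical helper.

```python
def tdd_split(aggregate_mbps: float, frame_symbols: int,
              downstream_symbols: int) -> tuple[float, float]:
    """Divide an aggregate rate between directions by symbol-slot share.

    Simplified: ignores guard times, sync symbols and protocol overhead.
    """
    if not 0 < downstream_symbols < frame_symbols:
        raise ValueError("both directions need at least one symbol slot")
    down = aggregate_mbps * downstream_symbols / frame_symbols
    return down, aggregate_mbps - down


# Illustrative values: 1,000 Mb/s aggregate, 36-symbol frame.
for ds in (18, 27, 32):
    down, up = tdd_split(1000, 36, ds)
    print(f"{ds}/36 downstream -> {down:.0f} Mb/s down, {up:.0f} Mb/s up")
```

Changing a single integer re-balances the line, something an FDD system can only do by re-planning its frequency bands.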

“There are many attributes [of DSL] that are brought into this standard but, at a technical level, G.fast is quite fundamentally different,” says Weissman.

Status

Sckipio says all the largest operators are testing G.fast in their labs or are conducting field trials but few are going public.

Ovum stresses that telcos are pursuing a variety of broadband strategies with G.fast being just one.

Some operators have decided to deploy fibre, while others are deploying a variety of upgrade technologies - fibre-based and copper-based. G.fast can be a good fit for certain residential neighbourhood topologies, says Kunstler.

The economics of passive optical networking (PON) continue to improve. “The costs of building an optical distribution network have declined significantly, and the costs of PON equipment are reasonable,” says Kunstler, adding that skilled fibre technicians now exist in many countries and working with fibre is easier than ever before.

“Many operators see fibre as important for business services so why not just pull the fibre to support volume-residential and high average-revenue-per-user (ARPU) based business services,” she says. But in some regions, G.fast broadband speeds will be sufficient from a competitive perspective.

“One Tier-1 operator has already done the bake-off and will very soon select its vendors,” says Weissman. “Then the hard work of integrating this into their IT systems starts.”

And BT has announced that it delivered up to 330 megabits per second in a G.fast trial involving 2,000 homes, and has since announced further trials.

“BT has publicly announced it can achieve 500 megabits - up and down - over 300 meters running from their cabinets,” says Weissman. “If BT moves its fibre closer to the distribution point, it will likely achieve 800 or 900 megabit rates.” On that basis, he says, the average customer could benefit from 500-megabit broadband as early as 2016, and such broadband performance would be adequate for users for 8 to 10 years.

Meanwhile, Sckipio and other G.fast chip vendors, as well as equipment makers are working to ensure that their systems interoperate.

Sckipio has also shown G.fast running over coax cable within multi-dwelling units delivering speeds beyond 1 gigabit. “This allows telcos to compete with cable operators and go in places they have not historically gone,” says Weissman.

Standards work

The ITU-T is working to enhance the G.fast standard further using several techniques.

One is to increase the transmission power which promises to substantially improve performance. Another is to use more advanced modulation to carry extra bits per tone across the wire’s spectrum. The third approach is to double the wire's used spectrum from 106 MHz to 212 MHz.
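The three levers map onto a standard way of estimating a multicarrier line's rate: sum the bits loaded on each tone and multiply by the symbol rate, where each tone's bits depend on its signal-to-noise ratio (SNR) and are capped by the modulation limit. The Python sketch below is a toy model; the tone spacing, symbol rate, SNR gap and linearly falling SNR are illustrative assumptions rather than G.fast specification values, and the model ignores the extra crosstalk that more power and spectrum would bring.

```python
import math

# Illustrative assumptions, not values taken from the article or the standard:
TONE_SPACING_HZ = 51_750  # G.fast-like subcarrier spacing
SYMBOL_RATE = 48_000      # DMT symbols per second
SNR_GAP_DB = 10.0         # coding gap plus noise margin, rounded


def bits_per_tone(snr_db: float, max_bits: int) -> int:
    """Gap-approximation bit loading, capped by the modulation limit."""
    snr = 10 ** (snr_db / 10)
    gap = 10 ** (SNR_GAP_DB / 10)
    return min(max_bits, int(math.log2(1 + snr / gap)))


def line_rate_mbps(spectrum_mhz: float, max_bits: int,
                   power_boost_db: float = 0.0) -> float:
    """Aggregate (down plus up) rate for a toy channel whose SNR falls with frequency."""
    tones = int(spectrum_mhz * 1e6 // TONE_SPACING_HZ)
    total_bits = 0
    for k in range(tones):
        f_mhz = k * TONE_SPACING_HZ / 1e6
        snr_db = 55.0 - 0.3 * f_mhz + power_boost_db  # invented attenuation slope
        total_bits += bits_per_tone(snr_db, max_bits)
    return total_bits * SYMBOL_RATE / 1e6


baseline = line_rate_mbps(106, max_bits=12)
print(f"baseline          {baseline:7.0f} Mb/s")
print(f"+3 dB power       {line_rate_mbps(106, 12, power_boost_db=3):7.0f} Mb/s")
print(f"14 bits per tone  {line_rate_mbps(106, 14):7.0f} Mb/s")
print(f"212 MHz spectrum  {line_rate_mbps(212, 12):7.0f} Mb/s")
```

Each lever raises the toy model's rate: more power lifts the SNR on every tone, more bits per tone helps the strongest low-frequency tones, and a wider spectrum adds tones, though the added high-frequency tones carry few bits because of attenuation.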

All three approaches complicate transmission, however. Increasing the signal power and spectrum will increase crosstalk and require more vectoring, while more complex modulation will require advanced signal recovery, as will using more spectrum.

“The guys working in committee need to find the apex of these compromises,” says Weissman, adding that Sckipio believes it can generate a 50 to 70 percent improvement in data rate over a single pair using these enhancements. The standards work is likely to be completed next spring.

Sckipio says it has over 30 customers for its chips that are designing over 50 G.fast systems, for the home and/ or the distribution point.

So far Sckipio has announced it is working with Calix, Adtran, Chinese original design manufacturer Cambridge Industries Group (CIG) and Zyxel, and says Sckipio products are on show in over 12 booths at the Broadband World Forum show.

OFC/NFOEC 2013 to highlight a period of change

Next week's OFC/NFOEC conference and exhibition, to be held in Anaheim, California, provides an opportunity to assess developments in the network and the data centre and get an update on emerging, potentially disruptive technologies.

Source: Gazettabyte

Several networking developments suggest a period of change and opportunity for the industry. Yet the impact on optical component players will be subtle, with such players spared the full effects of any disruption. Meanwhile, they must contend with the ongoing challenges of fierce competition and price erosion while also funding much-needed innovation.

The last year has seen the rise of software-defined networking (SDN), the operator-backed Network Functions Virtualization (NFV) initiative and growing interest in silicon photonics.

SDN is already being deployed in the data centre. Large data centre operators are using OpenFlow, an open-standard SDN protocol, to control traffic flows and tackle changing workloads.
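OpenFlow's central idea is a flow table of prioritised match-action rules that a controller installs in a switch. The Python sketch below models only that lookup logic, with hypothetical field names and action strings; it is not the OpenFlow protocol or any controller's API.

```python
from dataclasses import dataclass


@dataclass
class FlowRule:
    priority: int
    match: dict  # header field -> required value; an empty dict matches all
    action: str  # e.g. "output:2" or "drop" (illustrative strings)


class FlowTable:
    def __init__(self) -> None:
        self.rules: list[FlowRule] = []

    def install(self, rule: FlowRule) -> None:
        """Controller side: add a rule, keeping the highest priority first."""
        self.rules.append(rule)
        self.rules.sort(key=lambda r: r.priority, reverse=True)

    def lookup(self, packet: dict) -> str:
        """Switch side: apply the first (highest-priority) matching rule."""
        for rule in self.rules:
            if all(packet.get(k) == v for k, v in rule.match.items()):
                return rule.action
        # Table miss; real switches make this behaviour configurable.
        return "send_to_controller"


table = FlowTable()
table.install(FlowRule(0, {}, "output:1"))                        # default path
table.install(FlowRule(100, {"ip_dst": "10.0.0.5"}, "output:2"))  # steer one flow
print(table.lookup({"ip_dst": "10.0.0.5"}))  # output:2
print(table.lookup({"ip_dst": "10.0.0.9"}))  # output:1
```

Re-routing a traffic flow then means the controller installing or replacing a rule, not reconfiguring each switch by hand.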

Telcos are also interested in SDN. They view the emerging technology as providing a more fundamental way to optimise their all-IP networks in terms of processing, storage and transport.

Carrier requirements are broader than those of data centre operators, which is unsurprising given their more complex networks. It is also unclear how open and interoperable SDN will be, given that established vendors are less keen to enable their switches and IP routers to be externally controlled. But the consensus is that the telcos and large content service providers backing SDN are too important to ignore. If traditional switch and router vendors hamper the initiative with proprietary add-ons, newer players will willingly fulfil the requirements.

Optical component players must assess how SDN will impact the optical layer and perhaps even components, a topic the OIF is already investigating, while keeping an eye on whether SDN causes market share shifts among switch and router vendors.

The operator-backed ETSI Network Functions Virtualization (NFV) initiative has received far less media attention than SDN. With NFV, telcos want to embrace IT server technology to replace the many specialist hardware boxes that take up valuable space, consume power, add to their already complex operations support systems (OSS) while requiring specialist staff. By moving functions such as firewalls, gateways, and deep packet inspection onto cheap servers scaled using Ethernet switches, operators want lower cost systems running virtualised implementations of these functions.

The two-year NFV initiative could prove disruptive for many specialist vendors, albeit ones whose equipment operates at higher layers of the network, removed from the optical layer. But the takeaway for optical component players is how pervasive virtualisation technology is becoming and the continual rise of the data centre.

Silicon photonics is one technology set to impact the data centre. The technology is already being used in active optical cables and optical engines to connect data centre equipment, and soon will appear in optical transceivers such as Cisco Systems' own 100Gbps CPAK module.

Silicon photonics promises to enable designs that disrupt existing equipment. Start-up Compass-EOS has announced a compact IP core router that is already running live operator traffic. The router makes use of a scalable chip coupled to huge-bandwidth optical interfaces based on 168, 8 Gigabit-per-second (Gbps) vertical-cavity surface-emitting lasers (VCSELs) and photodetectors. The Terabit-plus bandwidth enables all the router chips to be connected in a mesh, doing away with the need for the router's midplane and switching fabric.

The integrated silicon-optics design is not strictly silicon photonics - silicon used as a medium for light - but it shows how optics is starting to be used for short distance links to enable disruptive system designs.

Some financial analysts are beating the drum for silicon photonics. But integrated designs using VCSELs, traditional photonic integration and silicon photonics will all co-exist for years to come. And even though silicon photonics is expected to make a big impact in the data centre, the Compass-EOS router highlights how disruptive designs can also occur in telecoms.

Market status

The optical component industry continues to contend with more immediate challenges after experiencing sharp price declines in 2012.

The good news is that market research companies do not expect a repeat of the harsh price declines anytime soon. They also forecast better market prospects: The Dell'Oro Group expects optical transport to grow through 2017 at a compound annual growth rate (CAGR) of 10 percent, while LightCounting expects the optical transceiver market to grow 50 percent, to US $5.1bn in 2017. Meanwhile Ovum estimates the optical component market will grow by a mid-single-digit percent in 2013 after a contraction in 2012.
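
The forecasts can be sanity-checked with compound-growth arithmetic. The sketch below assumes both forecasts run from a 2012 base (the article does not state the base year), so that the 10 percent CAGR compounds over five years and the LightCounting figure implies a 2012 market of about $3.4bn. The two firms track different markets, so the implied rates are for illustration only.

```python
# Back-of-the-envelope checks on the forecasts quoted above.
# ASSUMPTION: both forecasts start from a 2012 base (five years to 2017).
YEARS = 2017 - 2012

def compound_growth(cagr: float, years: int) -> float:
    """Total growth multiple after `years` at a constant annual rate."""
    return (1 + cagr) ** years

def implied_base(final_value: float, total_growth: float) -> float:
    """Starting value that grows by `total_growth` (0.5 = 50%) to `final_value`."""
    return final_value / (1 + total_growth)

def implied_cagr(total_growth: float, years: int) -> float:
    """Constant annual rate equivalent to `total_growth` spread over `years`."""
    return (1 + total_growth) ** (1 / years) - 1

print(f"10% CAGR over {YEARS} years: x{compound_growth(0.10, YEARS):.2f}")
print(f"Implied transceiver market base: ${implied_base(5.1, 0.50):.1f}bn")
print(f"50% over {YEARS} years: {implied_cagr(0.50, YEARS):.1%} a year")
```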

In the last year it has become clear how high-speed optical transport will evolve. The equipment makers' latest-generation coherent ASICs use advanced modulation techniques, add flexibility by trading transport speed for reach, and use super-channels to support 400 Gigabit and 1 Terabit transmissions. Vendors are also looking longer term at techniques such as spatial-division multiplexing, as fibre spectrum usage starts to approach the theoretical limit.

Yet the emphasis on 400 Gigabit and even 1 Terabit is somewhat surprising given how 100 Gigabit deployment is still in its infancy. And if the high-speed optical transmission roadmap is now clear, issues remain.

OFC/NFOEC 2013 will highlight the progress in 100 Gigabit transponder form factors that follow the 5x7-inch MSA, 100 Gigabit pluggable coherent modules, and the uptake of 100 Gigabit direct-detection modules for shorter reach links - tens or hundreds of kilometers - to connect data centres, for example.

There is also an industry consensus regarding wavelength-selective switches (WSSes) - the key building block of ROADMs - with the industry choosing a route-and-select architecture, although that was already the case a year ago.

There will also be announcements at OFC/NFOEC regarding client-side 40 and 100 Gigabit Ethernet developments based on the CFP2 and CFP4 that promise denser interfaces and Terabit capacity blades. Oclaro has already detailed its 100GBASE-LR4 10km CFP2 while Avago Technologies has announced its 100GBASE-SR10 parallel fibre CFP2 with a reach of 150m over OM4 fibre.

The CFP2 and QSFP+ make use of integrated photonic designs. Progress in optical integration, as always, is one topic to watch for at the show.

PON and WDM-PON remain areas of interest. The focus is not so much on state-of-the-art transceivers, such as those for 10 Gigabit EPON and XG-PON1, though these are clearly of interest, but rather on enhancements of existing technologies that benefit the economics of deployment.

The article is based on a news analysis published by the organisers before this year's OFC/NFOEC event.

OFC/NFOEC 2013: Technical paper highlights

Source: The Optical Society

Network evolution strategies, state-of-the-art optical deployments, next-generation PON and data centre interconnect are just some of the technical paper highlights of the upcoming OFC/NFOEC conference and exhibition, to be held in Anaheim, California from March 17-21, 2013. Here is a selection of the papers.

Optical network applications and services

Fujitsu and AT&T Labs-Research (Paper Number: 1551236) present simulation results of shared mesh restoration in a backbone network. In the simulation, shared mesh restoration uses up to 27 percent fewer regenerators than dedicated protection while increasing capacity by some 40 percent.

KDDI R&D Laboratories and the Centre Tecnològic de Telecomunicacions de Catalunya (CTTC), Spain (Paper Number: 1553225) show results of an OpenFlow/stateless PCE integrated control plane that uses protocol extensions to enable end-to-end path provisioning and lightpath restoration in a transparent wavelength switched optical network (WSON).

In invited papers, Juniper highlights the benefits of multi-layer packet-optical transport, IBM discusses future high-performance computers and optical networking, while Verizon addresses multi-tenant data centre and cloud networking evolution.

Network technologies and applications

A paper by NEC (Paper Number: 1551818) highlights 400 Gigabit transmission using four parallel 100 Gigabit subcarriers over 3,600km. Using optical Nyquist shaping, each carrier occupies 37.5GHz, for a total bandwidth of 150GHz.
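
The NEC figures can be checked with simple spectral arithmetic: four 37.5GHz slots give 150GHz, and 400Gbps in 150GHz works out at roughly 2.67 bits per second per hertz. The sketch also places the same four carriers on a conventional 50GHz fixed grid; that comparison is our own illustration, not part of the paper.

```python
# Spectral arithmetic for a super-channel of equal subcarriers.
SUBCARRIERS = 4
RATE_PER_SUBCARRIER_GBPS = 100
NYQUIST_SLOT_GHZ = 37.5  # per-carrier occupancy quoted for the NEC paper

def superchannel(subcarriers: int, rate_gbps: float, slot_ghz: float):
    """Return (total rate in Gbps, occupied bandwidth in GHz, b/s/Hz)."""
    total_rate = subcarriers * rate_gbps
    total_bw = subcarriers * slot_ghz
    return total_rate, total_bw, total_rate / total_bw  # Gbps / GHz = b/s/Hz

rate, bw, se = superchannel(SUBCARRIERS, RATE_PER_SUBCARRIER_GBPS, NYQUIST_SLOT_GHZ)
print(f"Nyquist: {rate:.0f} Gbps in {bw:.0f} GHz -> {se:.2f} b/s/Hz")

# Hypothetical comparison: the same carriers on a conventional 50 GHz grid.
_, bw50, se50 = superchannel(SUBCARRIERS, RATE_PER_SUBCARRIER_GBPS, 50.0)
print(f"50 GHz grid: {rate:.0f} Gbps in {bw50:.0f} GHz -> {se50:.2f} b/s/Hz")
```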

In an invited paper, Andrea Bianco of the Politecnico di Torino, Italy, details energy awareness in the design of optical core networks, while Verizon's Roman Egorov discusses next-generation ROADM architecture and design.

FTTx technologies, deployment and applications

In invited papers, operators share their analysis and experiences regarding optical access. Ralf Hülsermann of Deutsche Telekom evaluates the cost and performance of WDM-based access networks, while France Telecom's Philippe Chanclou shares the lessons learnt regarding its PON deployments and details its next steps.

Optical devices for switching, filtering and interconnects

In invited papers, MIT's Vladimir Stojanovic discusses chip and board scale integrated photonic networks for next-generation computers. Alcatel-Lucent's Bell Labs' Nicholas Fontaine gives an update on devices and components for space-division multiplexing in few-mode fibres, while Acacia's Long Chen discusses silicon photonic integrated circuits for WDM and optical switches.

Optoelectronic devices

Teraxion and McGill University (Paper Number: 1549579) detail a compact (6mm x 8mm) silicon photonics-based coherent receiver. Using PM-QPSK modulation at 28 Gbaud, transmission over up to 4,800km is achieved.

Meanwhile, Intel and UC Santa Barbara (Paper Number: 1552462) discuss a hybrid silicon DFB laser array emitting over 200nm, integrated with electro-absorption modulators (EAMs) with a 3dB bandwidth of more than 30GHz. Four bandgaps spread over more than 100nm are realised using quantum well intermixing.

Transmission subsystems and network elements

In invited papers, David Plant of McGill University compares OFDM and Nyquist WDM, while AT&T's Sheryl Woodward addresses ROADM options in optical networks and whether or not to use a flexible grid.

Core networks

Orange Labs' Jean-Luc Auge asks whether flexible transponders can be used to reduce margins. In other invited papers, Rudiger Kunze of Deutsche Telekom details the operator's standardisation activities to achieve 100 Gig interoperability for metro applications, while Jeffrey He of Huawei discusses the impact of cloud, data centres and IT on transport networks.

Access networks

Roberto Gaudino of the Politecnico di Torino discusses the advantages of coherent detection in reflective PONs. In other invited papers, Hiroaki Mukai of Mitsubishi Electric details an energy efficient 10G-EPON system, Ronald Heron of Alcatel-Lucent Canada gives an update on FSAN's NG-PON2 while Norbert Keil of the Fraunhofer Heinrich-Hertz Institute highlights progress in polymer-based components for next-generation PON.

Optical interconnection networks for datacom and computercom

Use of orthogonal multipulse modulation for 64 Gigabit Fibre Channel is detailed by Avago Technologies and the University of Cambridge (Paper Number: 1551341).

IBM T.J. Watson (Paper Number: 1551747) has a paper on a 35Gbps VCSEL-based optical link using 32nm SOI CMOS circuits. IBM is claiming record optical link power efficiencies of 1pJ/b at 25Gbps and 2.7pJ/b at 35Gbps.
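
Energy per bit converts directly into link power: 1pJ/b at 1Gbps is 1mW. The sketch below applies that conversion to IBM's quoted figures; it assumes the efficiency numbers cover the whole link, as the paper summary states, and says nothing about how IBM measured them.

```python
# Link power = energy per bit x bit rate.
# 1 pJ/bit x 1 Gbit/s = 1e-12 J x 1e9 /s = 1e-3 W, i.e. 1 mW.
def link_power_mw(pj_per_bit: float, rate_gbps: float) -> float:
    """Power in milliwatts for a link at `rate_gbps` costing `pj_per_bit`."""
    return pj_per_bit * rate_gbps  # pJ/b x Gb/s = mW

for pj, rate in [(1.0, 25), (2.7, 35)]:
    print(f"{pj} pJ/b at {rate} Gbps -> {link_power_mw(pj, rate):.1f} mW")
```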

Several companies detail activities for the data centre in the invited papers.

Oracle's Ola Torudbakken has a paper on a 50Tbps optically-cabled InfiniBand data centre switch, HP's Mike Schlansker discusses configurable optical interconnects for scalable data centres, Fujitsu's Jun Matsui details a high-bandwidth optical interconnection for a densely integrated server, while Brad Booth of Dell also looks at optical interconnect for volume servers.

In other papers, Mike Bennett of Lawrence Berkeley National Lab looks at network energy efficiency issues in the data centre. Lastly, Cisco's Erol Roberts addresses data centre architecture evolution and the role of optical interconnect.

China and the global PON market

China has become the world's biggest market for passive optical network (PON) technology even though deployments there have barely begun. That is because China, with approximately a quarter of a billion households, dwarfs all other markets. Yet according to market research firm Ovum, only 7% of Chinese homes were connected by year end 2011.

"In 2012, BOSAs [board-based PON optical sub-assemblies] will represent the majority versus optical transceivers for PON ONTs and ONUs"

Julie Kunstler, Ovum

Until recently Japan and South Korea were the dominant markets. And while PON deployments continue in these two markets, the rate of deployments has slowed as these optical access markets mature.

According to Ovum, slightly more than 4 million PON optical line terminal (OLT) ports, located in the central office, were shipped in Asia Pacific in 2011, the majority of them in China. Worldwide OLT shipments for the same period totalled close to 4.5 million. In China, the number of optical network terminals (ONTs), the end terminals at the home or building, deployed per OLT is still relatively low, highlighting that the significant growth in PON end terminals there is still to come.

The strength of the Chinese market has helped local system vendors Huawei, ZTE and Fiberhome become leading global PON players, accounting for over 85% of the OLTs sold globally in 2011, says Julie Kunstler, principal analyst, optical components at Ovum. Moreover, around 60% of fibre-to-the-x deployments in Europe, the Middle East and Africa were supplied by the Chinese vendors. The strongest non-Chinese vendor is Alcatel-Lucent.

Ovum says that the State Grid Corporation of China, the largest electric utility company in China, has begun to deploy EPON for its smart grid trials. PON is preferred to wireless technology because of its perceived ability to secure the data. This raises the prospect of two separate PON lines going to each home. But it remains to be seen, says Kunstler, whether this happens or whether the telcos and utilities share the access network.

"After China the next region that will have meaningful numbers is Eastern Europe, followed by South and Central America and we have already seen it in places like Russia,” says Kunstler. Indeed FTTx deployments in Eastern Europe already exceed those in Western Europe.

EPON and GPON

In China both Ethernet PON (EPON) and Gigabit PON (GPON) are being deployed. Ovum estimates that in 2011, 65% of equipment shipments in China were EPON while GPON represented 35%.

China Telecom was the first of the large operators in China to deploy PON and began with EPON. Ovum is now seeing deployments of GPON, and in the third quarter of 2012 GPON OLT deployments overtook EPON.

China Mobile, not a landline operator, started deployments later and chose GPON. But these GPON deployments are on top of EPON, says Kunstler: "EPON is still heavily deployed by China Telecom, while China Mobile is doing GPON but it is a much smaller player." Moreover, Chinese PON vendors are also supplying OLTs that support both EPON and GPON, allowing local decisions to be made as to which PON technology is used.

One trend that is impacting the traditional PON optical transceiver market is the growing use of board-based PON optical sub-assemblies (BOSAs). Such PON optics dispense with the traditional optical module form factor in the interest of trimming costs.

“A number of the larger, established ODMs [original design manufacturers] have begun to ship BOSA-based PON CPEs,” says Kunstler. “In 2012, BOSAs will represent the majority versus optical transceivers for PON ONTs/ONUs.”

10 Gigabit PON

Ovum says that there have been very few deployments of next-generation 10G EPON and XG-PON, the 10 Gigabit version of GPON.

"There have been small amounts of 10G [EPON] in China," says Kunstler. "We are talking hundreds or thousands, not the tens of thousands [of units]."

One reason for this is the relatively high cost of 10 Gigabit PON, which is still in its infancy. Another is the growing shift in China towards fibre-to-the-home (FTTH) rather than fibre-to-the-building deployments. 10 Gigabit PON makes more sense in multi-dwelling units, where the incoming signal is split between apartments. Moving to 10G EPON boosts the incoming bandwidth by 10x, while XG-PON would increase it by 4x. "The need for 10 Gig for multi-dwelling units is not as strong as originally thought," says Kunstler.
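
The multi-dwelling argument comes down to how a PON's shared downstream capacity is divided among homes. The sketch below uses rounded nominal downstream rates and a hypothetical 32-way split with every home active at once; neither the split ratio nor the worst-case loading comes from Ovum.

```python
# Shared downstream capacity per home, before and after a 10G upgrade.
# ASSUMPTIONS (not from the article): rounded nominal downstream rates,
# a hypothetical 32-way split, and all homes active simultaneously.
NOMINAL_DOWNSTREAM_GBPS = {
    "EPON": 1.0,
    "10G EPON": 10.0,
    "GPON": 2.5,
    "XG-PON": 10.0,
}
SPLIT = 32  # hypothetical split ratio

def per_home_mbps(tech: str, split: int = SPLIT) -> float:
    """Downstream Mbps per home if `split` homes share the link equally."""
    return NOMINAL_DOWNSTREAM_GBPS[tech] * 1000 / split

for old, new in [("EPON", "10G EPON"), ("GPON", "XG-PON")]:
    gain = NOMINAL_DOWNSTREAM_GBPS[new] / NOMINAL_DOWNSTREAM_GBPS[old]
    print(f"{old} -> {new}: x{gain:.0f}, "
          f"{per_home_mbps(old):.1f} -> {per_home_mbps(new):.1f} Mbps per home")
```

Halving the split doubles the per-home figure, which is why the case for 10G depends so heavily on how many apartments share each fibre.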

It is a chicken-and-egg issue with 10G PON, says Kunstler. Demand would grow if the price of 10G optics came down, but the optical vendors will only work on bringing down cost once there is more demand. "10G GPON will happen but will take longer," says Kunstler, with volumes starting to ramp from 2014.

However, Ovum thinks that a stronger market application for 10G PON will be supporting wireless backhaul. The market research company is seeing early deployments of PON for wireless backhaul, especially for small cell sites (e.g. picocells). Small cells are typically deployed in urban areas, which is where FTTx is deployed. It is too early to forecast this market, but PON will join the list of communications technologies supporting wireless backhaul.

Challenges

Despite the huge expected growth in deployments, driven by China, challenges remain for PON optical transceiver and chip vendors.

The margins on optics and PON silicon continue to be squeezed. ODMs using BOSAs are putting pricing pressure on PON transceivers, while the vertical integration strategy of system vendors such as Huawei, which also develops some of its own components, squeezes out various independent players. Huawei has its own silicon arm, HiSilicon, and its activities in PON have affected the chip opportunity of the merchant PON suppliers.

"Depending upon who the customer is, depending upon the pricing, depending on the features and the functions, Huawei will make the decision whether they are using HiSilicon or whether they are using merchant silicon from an independent vendor, for example," says Kunstler.

There has been consolidation in the PON chip space as well as several new players. For example, Broadcom acquired Teknovus and BroadLight, while Atheros acquired Opulan and was then itself acquired by Qualcomm. Marvell acquired a very small start-up and is now competing with Atheros and Broadcom. Most recently, Realtek is rumored to have a very low-cost PON chip.

Is wireless becoming a valid alternative to fixed broadband?

Are wireless technologies such as Long Term Evolution (LTE) and WiMAX2 closing the gap on fixed broadband?

A recent blog by The Economist discussed how Long Term Evolution (LTE) is coming to the rescue of one of its US correspondents, located 5km from the DSL cabinet and struggling to get a decent broadband service.

Peak rates are rarely achieved: the mobile user needs to be very close to a base station and a large spectrum allocation is needed.

Mark Heath, Unwired Insights

The correspondent makes some interesting points:

- The DSL link offered a download speed of 700kbps at best, while Verizon's FiOS passive optical networking (PON) service was not available as an alternative.

- The correspondent upgraded to an LTE handset service that enabled up to eight PCs and laptops to achieve a 15-20x download speed improvement.
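
To put the correspondent's figures in absolute terms, the sketch below assumes the 15-20x improvement is measured against the 700kbps best-case DSL speed, and that the eight devices share the LTE link equally when all are busy. The blog states neither assumption.

```python
# ASSUMPTIONS (not stated in the blog): the 15-20x gain is relative to the
# 700kbps best-case DSL speed, and all eight devices share the link equally.
DSL_BEST_KBPS = 700
DEVICES = 8

def improved_mbps(baseline_kbps: float, factor: float) -> float:
    """Download speed in Mbps after a `factor`-times improvement on the baseline."""
    return baseline_kbps * factor / 1000

low = improved_mbps(DSL_BEST_KBPS, 15)
high = improved_mbps(DSL_BEST_KBPS, 20)
print(f"LTE link: about {low:.1f}-{high:.1f} Mbps")
print(f"Per device, all {DEVICES} busy: {low / DEVICES:.2f}-{high / DEVICES:.2f} Mbps")
```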

The blog suggests that wireless data is becoming fast enough to address users' broadband needs.

But is LTE broadband now good enough? Mark Heath, a partner at telecom consultancy Unwired Insight, is skeptical: "Is the gap between landline and wireless broadband narrowing? I'm not convinced."

Peak wireless rates, and in particular LTE, may suggest that wireless is now a substitute for fixed. But peak rates are rarely achieved: the mobile user needs to be very close to a base station and a large spectrum allocation is needed.

"While peak rates on mobile look to be increasing exponentially, average throughput per base station and base station capacities are increasing at a much more modest rate," says Heath. Hence the operator and vendor focus on LTE Advanced, as well as much bigger spectrum allocations and the use of heterogeneous networks.

The advantage of landline broadband quality, in contrast, is that it does not suffer the degradation of a busy cell. There is much less disparity between peak rates and sustainable average throughputs with fixed broadband.

If fixed has advantages, it still requires operators to make the relevant investment, particularly in rural areas. "Wireless is better than nothing in rural areas," says Heath. But the gap between fixed and mobile isn't shrinking as much as peak data rates suggest.

Yet mobile networks do have a trump card: wide area mobility. With the increasing number of people dependent on smartphones, iPads and devices like the Kindle Fire, an ever increasing value is being placed on mobile broadband.

So if fixed broadband is keeping its edge over wireless, just what future services will drive the need for fixed's higher data rates?

This is a topic to be explored as part of the upcoming next-generation PON feature.

Further reading:

broadbandtrends: The Fixed versus mobile broadband conundrum

ICT could reduce global carbon emissions by 15%

Part 1: Standards and best practices

Keith Dickerson is chair of the International Telecommunication Union's (ITU) working party on information and communications technology (ICT) and climate change.

In a Q&A with Gazettabyte, he discusses how ICT can help reduce emissions in other industries, where the power hot spots are in the network and what the ITU is doing.

"If you benchmark base stations across different countries and different operators, there is a 5:1 difference in their energy consumption"

Keith Dickerson

Q. Why is the ITU addressing power consumption reduction and will its involvement lead to standards?

KD: We are producing standards and best practices. The reason we are involved is simple: ICT (all IT and telecoms equipment) is generating 2% of [carbon] emissions worldwide. But traffic is doubling every two years and the energy consumption of data centres is doubling every five years. If we don’t watch out, we will be part of the problem. We want to reduce emissions in the ICT sector and in other sectors. We can reduce emissions in other sectors by five or six times what we emit in our own sector.
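
Doubling times translate into annual growth rates via r = 2^(1/T) - 1, where T is the doubling time in years. This minimal sketch applies that formula to the two doubling times Dickerson quotes and shows how far the two curves diverge over a decade.

```python
import math

def annual_growth_from_doubling(years_to_double: float) -> float:
    """Constant yearly growth rate that doubles a quantity in `years_to_double` years."""
    return 2 ** (1 / years_to_double) - 1

def doubling_time(annual_growth: float) -> float:
    """Inverse of the above: years needed to double at `annual_growth` a year."""
    return math.log(2) / math.log(1 + annual_growth)

def growth_multiple(years_to_double: float, horizon_years: float) -> float:
    """How many times larger a quantity becomes over `horizon_years`."""
    return 2 ** (horizon_years / years_to_double)

for label, years in [("Traffic", 2), ("Data centre energy", 5)]:
    rate = annual_growth_from_doubling(years)
    print(f"{label}: doubles every {years} years = {rate:.1%} a year, "
          f"x{growth_multiple(years, 10):.0f} over a decade")
```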

Just to understand that figure, you believe ICT can cut emissions in other industries by a factor of six?

KD: We could reduce emissions overall by 15% worldwide, by reducing things like travel and the storage of goods, and by increasing recycling. All these measures in conjunction, enabled by ICT, could reduce overall emissions by 15%. These sectors include travel, the forestry sector and waste management. The energy sector is huge and we can reduce emissions here by up to 30% using smarter grids.

What are the trends regarding ICT?

KD: ICT accounts for 2% at the moment, maybe 2.5% if you include TV, but it is growing very fast. By 2020 it could be 6% of worldwide emissions if we don’t do something. And you can see why: Broadband access rates are doubling every two years, and although the power-per-bit is coming down, overall power [consumed] is rising.
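
The point that overall power rises even as power-per-bit falls follows from total power being roughly traffic multiplied by energy per bit. The sketch below takes the quoted traffic doubling every two years and an illustrative 20 percent yearly fall in energy per bit (our assumption, not an ITU figure), projecting the eight years from 2012 to 2020.

```python
# Total power ~ traffic x energy-per-bit.
# Traffic doubling every two years means x sqrt(2) per year.
# ASSUMPTION: the 20%/year efficiency gain is purely illustrative.
TRAFFIC_GROWTH_PER_YEAR = 2 ** 0.5

def relative_power(years: int, per_bit_decline: float) -> float:
    """Power after `years`, relative to today, for a given yearly per-bit decline."""
    return (TRAFFIC_GROWTH_PER_YEAR * (1 - per_bit_decline)) ** years

def break_even_decline() -> float:
    """Yearly energy-per-bit decline at which total power stays flat."""
    return 1 - 1 / TRAFFIC_GROWTH_PER_YEAR

print(f"Power after 8 years with 20%/yr per-bit gains: x{relative_power(8, 0.20):.2f}")
print(f"Break-even per-bit decline: {break_even_decline():.1%} a year")
```

Under these assumptions power still grows about 2.7-fold by 2020; energy per bit would have to fall by roughly 29 percent every year just to hold consumption flat.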

Where are the hot spots in the network?

The areas where energy consumption is rising fastest are at the ends of the network: in home equipment and in data centres. Within the network it is still going up, but it is under control and there are clear ways of reducing it.

For example, all operators are moving to a next-generation network (NGN), as BT is doing with its 21CN, and this alone leads to a power reduction. It leads to a significant reduction in switching centres, by a factor of ten. And you can collapse different networks into a single IP network, reducing the energy consumption [associated with running multiple networks]. The equipment in the NGN doesn’t need as much cooling or air conditioning. The use of more advanced access technology such as VDSL2 and PON will by itself lead to a reduction in power-per-bit.

The EU has a broadband code of conduct which sets targets for reducing energy consumption in the access network, and that leads to technologies such as standby modes. My home hub, if I don’t use it for a while, switches to a low-power mode.

The ITU is looking at how to apply these low-power modes to VDSL2. There has also been a very recent proposal to reduce the power levels in PONs. There has been a contribution from the Chinese for a deep-sleep mode for XG-PON. The ITU-T Study Group 13 on future networks is also looking at such techniques, shutting down part of the core network when traffic levels are low, such as at night.

What about mobile networks?

If you benchmark them across different countries and different operators there is a 5:1 difference in the energy consumption of base stations. They are running the same standard but their energy efficiency is somewhat different; they have been made at different times and by different vendors.

In a base station, about half of the power is lost in the [signal] coupling to the antenna. If you can make amplifiers more efficient and reduce the amount of cooling and air conditioning required by the base station, you can reduce energy consumption by 70 or 80%. If all operators and all countries used best practices here, energy consumption in the mobile network could be reduced by 50% to 70%.

If you could get overall power consumption of a base station down to 100W, you could power it from renewable energy. That would make a huge difference; it could work without having to worry about the reliability of the electricity grid which in India and Africa is a tricky problem. And at the moment the price of diesel fuel [to power standby generators] is going through the roof.

I visited Huawei recently and they have examples of 100W base stations powered by renewable energy, making them independent of the electricity network. At the moment a base station consumes more like 1,000W, and together base stations consume over half the overall power used by a mobile operator. At 100W, that wouldn’t be the case.
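
The gap between a roughly 1,000W base station and a 100W design is easier to see as annual energy. The sketch assumes a constant, round-the-clock draw; real base stations, whose load varies with traffic, will not match it exactly.

```python
# Annual energy for a load drawing constant power all year.
# ASSUMPTION: flat consumption, 24 hours a day, 365 days a year.
HOURS_PER_YEAR = 24 * 365

def annual_kwh(watts: float) -> float:
    """Energy in kWh for a load drawing `watts` continuously for a year."""
    return watts * HOURS_PER_YEAR / 1000

for w in (1000, 100):
    print(f"{w} W base station: {annual_kwh(w):,.0f} kWh a year")
```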

Other power saving activities in mobile include sharing networks among operators such as Orange and T-Mobile in the UK. And BT has signed a contract with four out of the five UK mobile operators to provide their backhaul and core networks in the future.

What is the ITU doing with regard to energy-saving schemes?

The ITU set up the working party on ICT and climate change less than two years ago. We have work in three different areas.

One is increasing energy efficiencies in ICT which we are doing through the widespread introduction of best practices. We are relying on the EC to set targets. The ITU, because it has 193 countries involved, finds it very difficult to agree targets. So we issue best practices which show how targets can be met. This covers data centres, broadband and core networks.

Another of our areas is agreeing a common methodology for how to measure the impact of ICT on carbon emissions. We have been working on this for 18 months and the first recommendations should be consented this summer. Overall this work will be completed in the next two years. This will enable you to measure the emissions of ICT by country, or sector, or an individual product or service, or within a company. If companies don’t meet their targets in future they will be fined so it is very important companies are measured in the same way.

A third area of our activities covers things like recycling. We have produced a standard for a universal charger for mobile phones. You won’t have to buy a new charger each time you buy a new phone. At the moment thousands of tonnes of chargers go to landfill [waste sites] every year. The standard introduced by the ITU last year only covers 25% of handsets. The revised standard will raise that to 80%.

At the last meeting the Chinese also proposed a universal battery – or a range of batteries. This would mean you don’t have to throw away your old battery each time you buy a new mobile. It is all about reducing the amount of equipment that goes into landfill.

We are also doing some other activities. Most telecom equipment uses a 50V power supply. We are taking that up to 400V, so a standard power supply for a data centre or a switch would be at 400V. This would mean you would lose a lot less power in the wiring, as you would be operating at a lower current: power losses vary according to the square of the current.
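
The point about wiring losses follows from I = P/V and P_loss = I^2 R: for the same load, raising the voltage eightfold cuts the current eightfold and the resistive loss 64-fold. The 10kW load and 0.01 ohm resistance below are hypothetical values chosen only to make the ratio concrete.

```python
# Resistive (I^2 R) loss in the power wiring for the same load at 50 V and 400 V.
# ASSUMPTIONS: hypothetical 10 kW load and 0.01 ohm distribution resistance;
# only the ratio between the two cases matters.
LOAD_W = 10_000
WIRING_OHMS = 0.01

def wiring_loss_w(load_w: float, volts: float, ohms: float) -> float:
    """Power dissipated in the wiring when `load_w` is delivered at `volts`."""
    current = load_w / volts  # I = P / V
    return current ** 2 * ohms  # P_loss = I^2 R

loss_50 = wiring_loss_w(LOAD_W, 50, WIRING_OHMS)
loss_400 = wiring_loss_w(LOAD_W, 400, WIRING_OHMS)
print(f"50 V: {loss_50:.1f} W lost, 400 V: {loss_400:.2f} W lost, "
      f"ratio {loss_50 / loss_400:.0f}:1")
```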

These ITU activities, coupled with operators moving to new architectures and adopting new technologies, will all help, yet traffic is doubling every two years. What will be the overall effect?

It all depends on the targets that are set. The EU is putting in more and more severe targets. If companies have to pay a fine when they don’t meet them, they will introduce new technologies more quickly. Companies won’t pay the extra investment unless they have to, I’m afraid, especially during this difficult economic period.

Every year the EC revises the code of conduct on broadband and sets stiffer targets. They are driving the introduction of new technology into the industry, and everyone wants to sign up to show that they are using best practices.

What the ITU is doing is providing the best practices and the standards to help them do that. The rate at which they act will depend on how fast those targets are reduced.

Keith Dickerson is a director at Climate Associates.

Part 2: Operators' power efficiency strategies

Differentiation in a market that demands sameness

At first sight, optical transceiver vendors have little scope for product differentiation. Modules are defined through a multi-source agreement (MSA) and used to transport specified protocols over predefined distances.

“Their attitude is let the big guys kill themselves at 40 and 100 Gig while they beat down costs"

Vladimir Kozlov, LightCounting

“I don’t think differentiation matters so much in this industry,” says Daryl Inniss, practice leader, components, at Ovum. “Over time eventually someone always comes in; end customers constantly demand multiple suppliers.”

It is a view confirmed by Luc Ceuppens, senior director of marketing, high-end systems business unit at Juniper Networks. “We do look at the different vendors’ products - which one gives the lowest power consumption,” he says. “But overall there is very little difference.”

For vendors, developing transceivers is time-consuming and costly yet with no guarantee of a return. The very nature of pluggables means one vendor’s product can easily be swapped with a cheaper transceiver from a competitor.

Being a vendor defining the MSA is one way to steal a march as it results in a time-to-market advantage. There have even been cases where non-founder companies have been denied sight of an MSA’s specification, ensuring they can never compete, says Inniss: “If you are part of an MSA, you are very definitely at an advantage.”

Rafik Ward, vice president of marketing at Finisar, cites other examples where companies have an advantage.

One is Fibre Channel where new data rates require high-speed vertical-cavity surface-emitting lasers (VCSELs) which only a few companies have.

Another is 100 Gigabit-per-second (Gbps) for long-haul transmission which requires companies with deep pockets to meet the steep development costs. “One hundred Gigabit is a very expensive proposition whereas with the 40 Gigabit Ethernet LR4 (10km) standard, existing off-the-shelf 10Gbps technology can be used,” says Ward.

"One hundred Gigabit is a very expensive proposition"

Rafik Ward, Finisar

Ovum’s Inniss highlights how optical access is set to impact wide area networking (WAN). The optical transceivers for passive optical networking (PON) are using such high-end components as distributed feedback (DFB) lasers and avalanche photodetectors (APDs), traditionally components for the WAN. Yet with the higher volumes of PON, the cost of WAN optics will come down.

“With Gigabit Ethernet the price declines by 20% each time volumes double,” says Inniss. “For PON transceivers the decline is 40%.” As 10Gbps PON optics start to be deployed, the price benefit will migrate up to the SONET/Ethernet/WAN world, he says. Accordingly, those transceiver players that make and use their own components, and are active in PON and WAN, will benefit most.
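
Inniss's figures describe a learning curve, in which price falls by a fixed fraction each time volumes double. The sketch below compares the two decline rates he quotes over an eightfold volume increase (three doublings); the starting price index of 100 is arbitrary, not a real price.

```python
import math

# Learning curve: price falls by a fixed fraction per doubling of volume.
# The starting price index of 100 is arbitrary.
def price_after(volume_multiple: float, decline_per_doubling: float,
                start_price: float = 100.0) -> float:
    """Price index after volumes grow by `volume_multiple`."""
    doublings = math.log2(volume_multiple)
    return start_price * (1 - decline_per_doubling) ** doublings

for name, decline in [("Gigabit Ethernet", 0.20), ("PON", 0.40)]:
    print(f"{name}: 8x volume -> price index {price_after(8, decline):.1f}")
```

Three doublings leave Gigabit Ethernet optics at about half their starting price but PON optics at about a fifth, which is the gap Inniss expects to carry over into WAN pricing.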

“Differentiation is hard but possible,” says Vladimir Kozlov, CEO of optical transceiver market research firm, LightCounting. Active optical cables (AOCs) have been an area of innovation partly because vendors have freedom to design the optics that are enclosed within the cabling, he says.

AOCs, Fibre Channel and 100Gbps are all examples where technology is a differentiator, says Kozlov, but business strategy is another lever to be exploited.

On a recent visit to China, Kozlov spoke to ten local vendors. “They have jumped into the transceiver market and think a 20% margin is huge whereas in the US it is seen as nothing.”

The vendors differentiate themselves by supplying transceivers directly to the equipment vendors’ end customers. “They [the Chinese vendors] are finding ways in a business environment; nothing new here in technology, nothing new in manufacturing,” says Kozlov.

He cites one firm that supplied all the transceivers for a US telecom system vendor’s installation in Malaysia. “Doing this in the US is harder but then the US is one market in a big world,” says Kozlov.

Offshore manufacturing is no longer a differentiator. One large Chinese transceiver maker bemoaned that everyone now has manufacturing in China. As a result its focus has turned to tackling overheads: trimming costs and reducing R&D.

“Their attitude is let the big guys kill themselves at 40 and 100 Gig while they beat down costs by slashing Ph.Ds, optimising equipment and improving yields,” says Kozlov. “Is it a winning approach long term? No, but short-term quite possibly.”