Is the tunable laser market set for an upturn?

Part 2: Tunable laser market

"The tunable laser market requires a lot of patience to research." So claims Vladimir Kozlov, CEO of LightCounting Market Research. Kozlov should know; he has spent the last 15 years tracking and forecasting lasers and optical modules for the telecom and datacom markets.

Source: LightCounting, Gazettabyte

The tunable laser market is certainly sizeable; over half a million units will be shipped in 2014, says LightCounting. But the market requires care when forecasting. One subtlety is that certain optical component companies - Finisar, JDSU and Oclaro - are vertically integrated and use their own tunable lasers within the optical modules they sell. LightCounting counts these as module sales rather than tunable laser ones.

Another issue is that despite the development of advanced reconfigurable optical add/drop multiplexers (ROADMs) and tunable lasers, the uptake of agile optical networking has been limited.

"Verizon is bullish on getting the next generation of colourless, directionless and contentionless ROADMs to reconfigure the network on-the-fly," says Kozlov. "But I'm not so sure Verizon is going to be successful in convincing the industry that this is going to be a good market for [ROADM] suppliers to sell into."

Reconfigurability helps engineers decide which channels to add or drop when equipment is installed. But there is little evidence of operators other than Verizon talking about using ROADMs to change bandwidth dynamically, first in one direction and then the other, he says.

Another indicator of the reduced status of tunable lasers is NeoPhotonics's intention to purchase Emcore's tunable external cavity laser as well as its module assets for US $17.5 million. Emcore acquired the laser when it bought Intel's optical platform division for $85 million in 2007, while Intel acquired it from New Focus in 2002 for $50 million. NeoPhotonics has also spent more in the past: it bought Santur's tunable laser for $39 million in 2011.

"There was so much excitement with so many players [during the optical bubble of 1999-2000], the market was way too competitive and eventually it drove vendors to the point where they would prefer to sell the business for pennies rather than keep it running," says Kozlov. "Emcore has been losing money, it is not a highly profitable business." Yet for Kozlov, Emcore's tunable laser is probably the best in the business with its very narrow line-width compared to other devices.

Tunable laser market

Tunable lasers have failed to get into the mainstream of the industry. "If you look at DWDM, I'm guessing that 70 percent of lasers sold are still fixed wavelength or temperature-tunable over a few wavelengths," says Kozlov. System vendors such as Huawei and ZTE advertise their systems with tunable lasers. "But when we asked them how they are using tunable lasers, they admitted that the bulk of their shipments are fixed-wavelength devices because whatever little they can save on cost, they will."

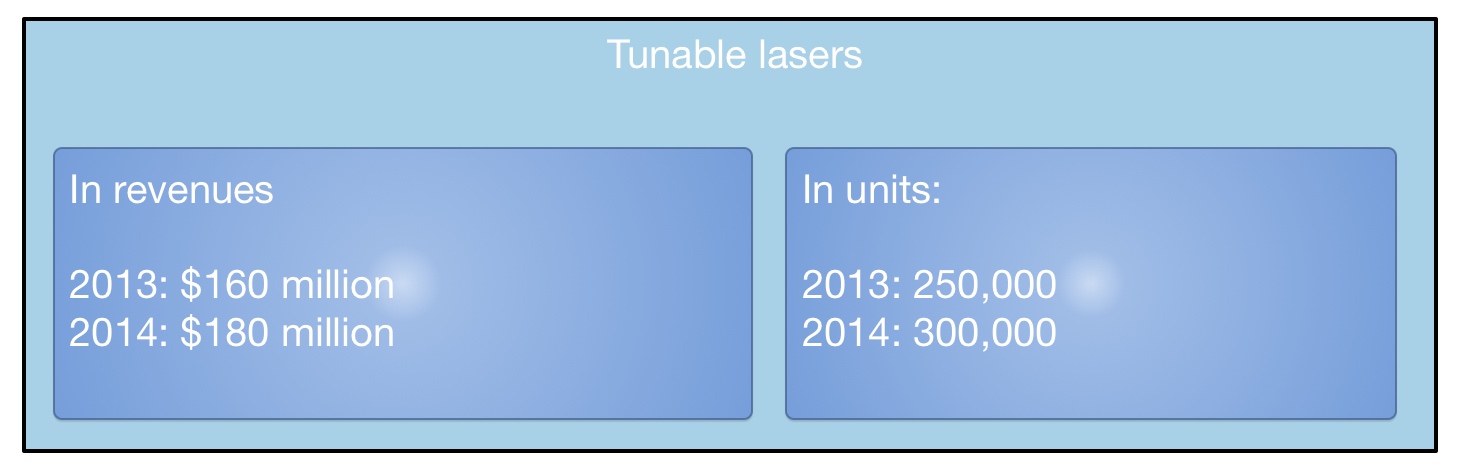

LightCounting valued the 2013 tunable laser market at $160 million, growing to $180 million in 2014. This equates to 250,000 units sold in 2013 and 300,000 units this year. "Most of these are for coherent systems," says Kozlov. The number of tunable lasers sold in modules (mainly XFPs but also SFPs and 300-pin modules) is 250,000 units. "Half a million units a year; if you look at actual shipments, it is quite a lot," says Kozlov.
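The figures above imply an average selling price and a combined unit count. The sketch below does only that arithmetic. It assumes that the 250,000 module-embedded lasers apply to each year and add to the standalone count. The article implies this but does not state it outright.

```python
# LightCounting figures as quoted above; ASPs and totals are derived, not reported.
STANDALONE = {2013: (160e6, 250_000), 2014: (180e6, 300_000)}  # year: (revenue in USD, units)
IN_MODULES_PER_YEAR = 250_000  # assumption: the module figure applies to each year


def implied_asp(revenue_usd: float, units: int) -> float:
    """Average selling price implied by dividing revenue by units shipped."""
    return revenue_usd / units


def total_units(year: int) -> int:
    """Standalone tunable lasers plus those LightCounting counts as module sales."""
    return STANDALONE[year][1] + IN_MODULES_PER_YEAR


for year, (revenue, units) in STANDALONE.items():
    print(f"{year}: ASP ~${implied_asp(revenue, units):,.0f}, "
          f"all tunable lasers ~{total_units(year):,}")
```

On these assumptions, the implied average selling price falls from about $640 in 2013 to about $600 in 2014. The 2014 total of roughly 550,000 units is consistent with the "over half a million units" LightCounting quotes.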

What next?

"I'm hoping we are reaching the low point in the tunable laser market as vendors are struggling and sales are at a very low valuation," says Kozlov.

The advent of more complex modulation schemes for 400 Gigabit and faster optical transmission, and the adoption of silicon photonics-based modulators for long haul, will require higher-power lasers. But so much progress has been made by laser designers over the last 15 years, especially during the bubble, that it will last the industry for at least another decade or two, says Kozlov: "Incremental progress will continue and hopefully greater profitability."

For Part 1, see: NeoPhotonics to expand its tunable laser portfolio

North American operators in an optical spending rethink

Optical transport spending by the North American operators dropped 13 percent year-on-year in the third quarter of 2014, according to market research firm Dell'Oro Group.

Operators are rethinking the optical vendors they buy equipment from as they consider their future networks. "Software-defined networking (SDN) and Network Functions Virtualisation (NFV) - all the futuristic next network developments, operators are considering what that entails," says Jimmy Yu, vice president of optical transport research at Dell’Oro. "Those decisions have pushed out spending."

NFV will not impact optical transport directly, says Yu, and could even benefit it with the greater signalling to central locations that it will generate. But software-defined networks will require Transport SDN. "You [as an operator] have to decide which vendors are going to commit to it [Transport SDN]," says Yu.

The result is that the North American tier-one operators reduced their spending in the third quarter of 2014. Yu highlights AT&T, which spent robustly from 2013 through to mid-2014. "What we saw growing [in that period] was WDM metro equipment, and it is that spending that has dropped off in the third quarter," says Yu. For Ciena and Fujitsu, equipment vendors that are part of AT&T's Domain 2.0 supplier programme, the reduced Q3 spending is unwelcome news. But Yu expects North American optical transport spending in 2015 to exceed 2014's. This is despite AT&T announcing that its capital expenditure will dip to US $18 billion in 2015 from $21 billion in 2014, now that its Project VIP network investment has peaked.

But Yu says AT&T has other developments that will require spending. "Even though AT&T may reduce spending on Project VIP, it is purchasing DirecTV and the Mexican mobile carrier, Iusacell," he says. "That type of stuff needs network integration." AT&T has also committed to passing two million homes with fibre once it acquires DirecTV.

Verizon is another potential reason for 2015 optical transport growth in North America. It has issued a request for proposal for metro DWDM equipment, and the only question is when the operator will start awarding contracts. Meanwhile, the large internet content providers grow their optical transport spending each year.

Asia Pacific remains one of the brighter regions for optical transport in 2014. "Partly this is because China is buying a lot of DWDM long-haul equipment, with China Mobile being one of the biggest buyers of 100 Gig," says Yu. EMEA continues to under-perform and Yu expects optical transport spending to decline in 2014. "But there seems to be a lot of activity and it's just a question of when that activity turns into revenue," he says.

Dell'Oro expects 2014 global optical transport spending to be flat compared to 2013, with 2015 forecast to experience three percent growth. "That growth is dependent on Europe starting to improve," says Yu.

One area driving optical transport growth that Yu highlights is interconnected data centres. "Whether enterprises or large companies interconnecting their data centres, internet content providers distributing their networks as they add more data centres, or telecom operators wanting to jump on the bandwagon and build their own data centres to offer services; that is one of the more interesting developments," he says.

OFC 2014 industry reflections - Part 1

T.J. Xia, distinguished member of technical staff at Verizon

The CFP2 form factor pluggable - analogue coherent optics (CFP2-ACO) at 100 and 200 Gig will become the main choice for metro core networks in the near future.

I learnt that the discrete multitone (DMT) modulation format seems the right choice for a low-cost, single-wavelength direct-detection 100 Gigabit Ethernet (GbE) interface for data ports, and a 4xDMT for 400GbE ports.
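For readers unfamiliar with DMT: it is a baseband form of multicarrier modulation, closely related to OFDM. QAM symbols are placed on subcarriers with Hermitian symmetry, so the inverse DFT yields a real-valued signal that can drive a single intensity-modulated laser.

The minimal sketch below shows only that core step, and the function names are hypothetical. Every subcarrier carries QPSK, so the sketch omits bit loading, the per-subcarrier choice of constellation matched to signal-to-noise ratio that practical DMT relies on. It also omits the cyclic prefix and any optics.

```python
import cmath

QPSK = [1 + 1j, 1 - 1j, -1 + 1j, -1 - 1j]


def idft(X):
    """Inverse DFT, written out directly (O(N^2), fine for a sketch)."""
    N = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * n / N) for k in range(N)) / N
            for n in range(N)]


def dft(x):
    """Forward DFT matching idft above."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]


def dmt_modulate(symbols, n_fft):
    """Map n_fft/2 - 1 complex symbols onto subcarriers with Hermitian symmetry.

    Subcarriers 0 (DC) and n_fft/2 (Nyquist) stay empty, and bin n_fft-k holds
    the conjugate of bin k, so the inverse DFT is real-valued.
    """
    if len(symbols) != n_fft // 2 - 1:
        raise ValueError("need n_fft/2 - 1 symbols")
    X = [0j] * n_fft
    for k, s in enumerate(symbols, start=1):
        X[k] = s
        X[n_fft - k] = s.conjugate()
    x = idft(X)
    # The imaginary parts are only floating-point rounding; drop them.
    return [v.real for v in x]


def dmt_demodulate(samples, n_fft):
    """Recover the data-carrying subcarriers 1 .. n_fft/2 - 1."""
    return dft(samples)[1:n_fft // 2]


tx = dmt_modulate([QPSK[i % 4] for i in range(7)], 16)  # 16-point DMT frame
rx = dmt_demodulate(tx, 16)
```

A 4xDMT 400GbE port would, on this reading, run four such signals in parallel, one per wavelength.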

As for developments to watch, photonic switches will play a much more important role in intra-data centre connections. As the port capacity of top-of-rack switches grows, photonic switches gain a greater cost advantage over middle-stage electrical switches.

Don McCullough, Ericsson's director of strategic communications at group function technology

The biggest trend in networking right now is software-defined networking (SDN) and Network Functions Virtualisation (NFV), and both were on display at OFC. We see that the combination of SDN and NFV in the control and software domains will directly impact optical networks. The Ericsson-Ciena partnership embodies this trend with its agreement to develop joint transport solutions for IP-optical convergence and service provider SDN.

We learnt that network transformation, both at the control layer (SDN and NFV) and at the data plane layer, including optical, is happening at the network operators. Related to that, we also saw interest at OFC in the announcement that AT&T made at Mobile World Congress about their User-Defined Network Cloud and Domain 2.0 strategy where AT&T has selected to work with Ericsson on integration and transformation services.

We will continue to watch the ongoing deployment of SDN and NFV to control wide area networks, including optical. We expect more joint development agreements to connect SDN and NFV with optical networking, like the Ericsson-Ciena one.

One new thing for 2014 is that we expect to see open source projects like OpenStack and OpenDaylight play increasingly important roles in the transformation of networks.

Brandon Collings, JDSU's CTO for communications and commercial optical products

The announcements of integrated photonics for coherent CFP2s were an important development in the 100 Gig progression. While JDSU did not make an announcement at OFC, we are similarly engaged with our customers on pluggable approaches for coherent 100 Gig.

There is a lack of appreciation of the data centre operators who aren’t big household names. While the mega data centre operators have significant influence and visibility, the needs of the numerous, smaller-sized operators are largely under-represented.

I would like to see convergence around 400 Gig client interface standards. Lots of complex technology here, challenges to solve and options to do so. But ambiguity in these areas is typically detrimental to the overall industry.

Mike Freiberger, principal member of technical staff, Verizon

The emergence of 100 Gig for metro, access and data centre reach optics generated a lot of contentious debate. The best way forward for the industry may not be settled just yet.

What did I learn? Verizon is a leader in wireless backhaul and is growing its options at a rate faster than the industry.

The two developments that caught my attention are 100 Gig short-reach and above-100-Gig research. 100 Gig short-reach matters because it will set the trigger point for when 100 Gig interfaces really start to sell in volume. Research on data rates faster than 100 Gig matters because price-per-bit always has to come down.

Xtera demonstrates 40 Terabit using Raman amplification

- Xtera's Raman amplification boosts capacity and reach

- 40 Terabit optical transmission over 1,500km in Verizon trial

- 64 Terabit over 1,500km in 2015 using a Raman module operating over 100nm of spectrum

System vendor Xtera is using all these techniques as part of its Nu-Wave Optima system but also uses Raman amplification to extend capacity and reach.

"We offer capacity and reach using a technology - Raman amplification - that we have been pioneering and working on for 15 years," says Herve Fevrier, executive vice president and chief strategy officer at Xtera.

The distributed amplification profile of Raman (blue) compared to an EDFA's point amplification. Source: Xtera

One way vendors are improving amplification for 100 Gigabit and greater deployments is to use a hybrid EDFA/Raman design. This benefits the amplifier's power efficiency and the overall transmission reach, but the spectrum width is still dictated by erbium, to around 35nm. "And Raman only helps you have spans which are a bit longer," says Fevrier.

Meanwhile, Xtera is working on programmable cards that will support the various transmission options. Xtera will offer a 100nm amplifier module this year that extends its system capacity to 24 Terabit (240 channels at 100 Gig). Also planned this year is a super-channel PM-QPSK implementation that will extend capacity to 32 Terabit using the 100nm amplifier module. In 2015 Xtera will offer PM-16-QAM, delivering 48 Terabit over 2,000km and 64 Terabit over 1,500km.
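What follows is a rough sketch of the arithmetic behind these capacity steps. It compares the ~35nm erbium window with Xtera's 100nm Raman module and expresses each announced capacity as spectral efficiency over the 100nm band. It assumes a 1550nm centre wavelength when converting nanometres to terahertz, and a 50 GHz channel grid. The article states neither.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def band_thz(width_nm: float, centre_nm: float = 1550.0) -> float:
    """Optical bandwidth of a wavelength window, using df ~= c * d_lambda / lambda^2."""
    return C * (width_nm * 1e-9) / (centre_nm * 1e-9) ** 2 / 1e12


def capacity_tbps(channels: int, gbps_per_channel: float) -> float:
    """Aggregate line capacity in Tb/s."""
    return channels * gbps_per_channel / 1000


def spectral_efficiency(capacity: float, bandwidth_thz: float) -> float:
    """Bits per second per hertz (Tb/s divided by THz)."""
    return capacity / bandwidth_thz


erbium = band_thz(35)   # the ~35nm window an erbium amplifier is limited to
raman = band_thz(100)   # Xtera's 100nm Raman module
slots_50ghz = int(raman * 1000 // 50)  # assumption: 50 GHz channel grid

print(f"erbium ~{erbium:.1f} THz, Raman ~{raman:.1f} THz, {slots_50ghz} slots at 50 GHz")
print(capacity_tbps(240, 100), "Tb/s from 240 x 100G")
for label, tbps in [("100G channels", 24), ("PM-QPSK super-channel", 32),
                    ("PM-16-QAM, 2,000km", 48), ("PM-16-QAM, 1,500km", 64)]:
    print(f"{label}: {spectral_efficiency(tbps, raman):.2f} b/s/Hz")
```

The wider window nearly triples the usable spectrum, from about 4.4 THz to about 12.5 THz, which is where the 24 Terabit figure comes from. The later 32, 48 and 64 Terabit steps instead raise the number of bits carried per hertz.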

See Part 1.

Verizon on 100G+ optical transmission developments

Source: Gazettabyte

Feature: 100 Gig and Beyond. Part 1:

Verizon's Glenn Wellbrock discusses 100 Gig deployments and higher speed optical channel developments for long haul and metro.

The number of 100 Gigabit wavelengths deployed in the network continued to grow in 2013.

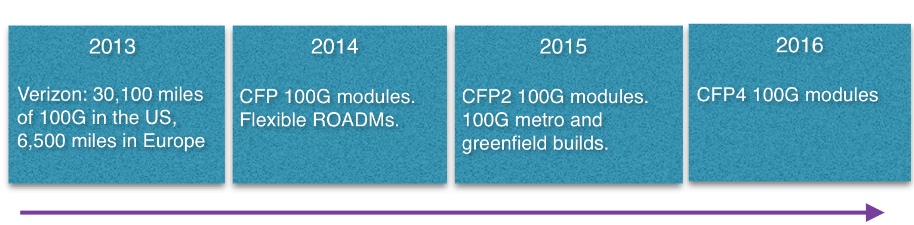

According to Ovum, 100 Gigabit has become the wavelength of choice for large wavelength-division multiplexing (WDM) systems, with spending on 100 Gigabit now exceeding 40 Gigabit spending. LightCounting forecasts that 40,000 line cards at 100 Gigabit will be shipped this year, 25,000 of them in the second half alone. Infonetics Research, meanwhile, points out that while 10 Gigabit will remain the highest-volume speed, the most dramatic growth is at 100 Gigabit. By 2016, the majority of spending in long-haul networks will be on 100 Gigabit, it says.

The market research firms' findings align with Verizon's own experience deploying 100 Gigabit. The US operator said in September that it had added 4,800 miles of 100 Gigabit to its global IP network during the first half of 2013, bringing the totals to 21,400 miles in the US network and 5,100 miles in Europe. Verizon expects to deploy another 8,700 miles of 100 Gigabit in the US and 1,400 miles more in Europe by year end.

"We expect to hit the targets; we are getting close," says Glenn Wellbrock, director of optical transport network architecture and design at Verizon.

Verizon says several factors are driving the need for greater network capacity, including its FiOS bundled home communication services, Long Term Evolution (LTE) wireless and video traffic. But what triggered Verizon to upgrade its core network to 100 Gig was converging its IP networks and the resulting growth in traffic. "We didn't do a lot of 40 Gig [deployments] in our core MPLS [Multiprotocol Label Switching] network," says Wellbrock.

The cost of 100 Gigabit was another factor: a 100 Gigabit long-haul channel is now cheaper than ten 10 Gig channels. There are also operational benefits to using 100 Gig, such as having fewer wavelengths to manage. "So it is the lower cost-per-bit plus you get all the advantages of having the higher trunk rates," says Wellbrock.
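The cost-per-bit argument reduces to a one-line test: is one 100G channel cheaper than ten 10G channels? In the minimal sketch below, the relative prices are hypothetical placeholders, not figures from Verizon or the article. One scenario is past the crossover and one is short of it.

```python
def cost_per_gbps(price: float, gbps: float) -> float:
    """Price divided by capacity: the cost-per-bit metric."""
    return price / gbps


def crossover_reached(price_100g: float, price_10g: float) -> bool:
    """True when one 100G channel is cheaper than ten 10G channels."""
    return price_100g < 10 * price_10g


# Hypothetical relative prices (one 10G channel = 1.0); not figures from the article.
scenarios = {"past crossover": 8.0, "short of crossover": 14.0}

for name, price_100g in scenarios.items():
    print(f"{name}: 100G at {cost_per_gbps(price_100g, 100):.3f}/Gbps vs "
          f"10G at {cost_per_gbps(1.0, 10):.3f}/Gbps, "
          f"crossover reached: {crossover_reached(price_100g, 1.0)}")
```

The test captures equipment cost only. It leaves out the operational benefit of managing one wavelength instead of ten.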

Verizon expects to continue deploying 100 Gigabit. Its network is large, and much of the deployment will occur in 2014. "Eventually, we hope to get a bit ahead of the curve and have some [capacity] headroom," says Wellbrock.

Super-channel trials

Operators, working with optical vendors, are trialling super-channels and advanced modulation schemes such as 16-QAM (quadrature amplitude modulation). Such trials involve links carrying data in multiples of 100 Gig: 200 Gig, 400 Gig, even a Terabit.

Super-channels are already carrying live traffic. Infinera's DTN-X system delivers 500 Gig super-channels using quadrature phase-shift keying (QPSK) modulation. Orange has a 400 Gigabit super-channel link between Lyon and Paris. The 400 Gig super-channel comprises two carriers, each carrying 200 Gig using 16-QAM, implemented using Alcatel-Lucent's 1830 photonic service switch platform and its photonic service engine (PSE) DSP-ASIC.

"We could take advantage of 200 Gig or 400 Gig or 500 Gig today," says Wellbrock. "As soon as it is cost effective, you can use it because you can put multiple 100 Gig channels on there and multiplex them."

The issue with 16-QAM, however, is its limited reach using existing fibre and line systems (500-700km), compared to QPSK's 2,500+km before regeneration. "It [16-QAM] will only work in a handful of applications - 25 percent, something of this nature," says Wellbrock. This is good for a New York to Boston link, he says, but not New York to Chicago. "From our end it is pretty simple, it is lowest cost," says Wellbrock. "If we can reduce the cost, we will use it [16-QAM]." However, if the reach requirement cannot be met, the operator will not go to the expense of putting in signal regenerators to use 16-QAM, he says.

Earlier this year Verizon conducted a trial with Ciena using 16-QAM. The goals were to test 16-QAM alongside live traffic and determine whether the same line card would work at 100 Gig using QPSK and 200 Gig using 16-QAM. "The good thing is you can use the same hardware; it is a firmware setting," says Wellbrock.
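The reach trade-off Wellbrock describes can be expressed as a simple planning rule. The sketch below is hypothetical and is not Verizon's or Ciena's logic. It uses the article's rough reach figures, with 600 km assumed as a midpoint of the 500-700 km quoted for 16-QAM and 2,500 km taken as a floor for QPSK. It also relies on the fact that 16-QAM carries four bits per symbol against QPSK's two. The same line card at the same symbol rate therefore doubles from 100 Gig to 200 Gig.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Format:
    name: str
    bits_per_symbol: int  # per polarisation
    reach_km: int         # rough reach before regeneration on existing fibre


# Reach values follow the article's rough figures; 600 km is an assumed
# midpoint of the quoted 500-700 km range for 16-QAM.
QPSK = Format("QPSK", 2, 2500)
QAM16 = Format("16-QAM", 4, 600)


def line_rate_gbps(fmt: Format, qpsk_gbps: int = 100) -> int:
    """Same line card and symbol rate: capacity scales with bits per symbol."""
    return qpsk_gbps * fmt.bits_per_symbol // QPSK.bits_per_symbol


def choose_format(link_km: float) -> Optional[Format]:
    """Hypothetical rule: the highest-capacity format that avoids regenerators."""
    for fmt in (QAM16, QPSK):  # ordered from most to least capacity
        if link_km <= fmt.reach_km:
            return fmt
    return None  # no format reaches; regeneration would be needed
```

The format this sketch returns for a given route length corresponds to the firmware setting Wellbrock mentions on the shared line card.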

100 Gig in the metro

Verizon says there is already sufficient traffic pressure in its metro networks to justify 100 Gig deployments. Some of Verizon's bigger metro locations comprise up to 200 reconfigurable optical add/drop multiplexer (ROADM) nodes. Each node is typically a central office connected to the network via a ROADM, varying from a two-degree to an eight-degree design.

"Not all the 200 nodes would need multiple 100 Gig channels but in the core of the network, there is a significant amount of capacity that needs to be moved around," says Wellbrock. "100 Gig will be used as soon as it is cost-effective."

Unlike long-haul, 100 Gigabit in the metro remains costlier than ten 10 Gig channels. That said, Verizon has deployed metro 100 Gig where it is absolutely necessary, for example to link two router locations that must be connected at 100 Gig. For such links, Verizon is willing to pay extra.

"By 2015 we are really hoping that the [metro] crossover point will be reached, that 100 Gig will be more cost effective in the metro than ten times 10 [Gig]," says Wellbrock. Rather than adding 100 Gig to existing networks based on conventional 10 Gig links, Verizon will build a new generation of metro networks based on 100 Gig, 200 Gig or 400 Gig using coherent receivers.

"We feel that 2015 is when we can justify a new, greenfield network and that 100 Gig or versions of that - 200 Gig or 400 Gig - will be cheap enough to make sense."

Data Centres

The build-out of data centres is not a significant factor driving 100 Gig demand. The largest content service providers do use tens of 100 Gigabit wavelengths to link their mega data centres but they typically have their own networks that connect relatively few sites.

"If you have lots of data centres, the traffic itself is more distributed, as are the bandwidth requirements," says Wellbrock.

Verizon has over 220 data centres, most being hosting centres. The data demand between many of the sites is relatively small and is served with 10 Gigabit links. "We are seeing the same thing with most of our customers," says Wellbrock.

Technologies

System vendors continue to develop cheaper line cards to meet the cost-conscious metro requirements. Module developments include smaller 100 Gig 4x5-inch MSA transponders, 100 Gig CFP modules and component developments for line side interfaces that fit within CFP2 and CFP4 modules.

"They are all good," says Wellbrock when asked which of these 100 Gigabit metro technologies are important for the operator. "We would like to get there as soon as possible."

The CFP4 may be available by late 2015 but more likely in 2016, and will significantly reduce the cost of 100 Gig. "We are assuming they are going to be there and basing our timelines on that," he says.

Greater line card port density is another benefit once 100 Gig CFP2 and CFP4 line-side modules become available. "Lower power and greater density, which is allowing us to get more bandwidth on and off the card," says Wellbrock.

Existing switches and routers are bandwidth-constrained: they have more traffic capability than the faceplate can provide. "The CFPs, the way they are today, you can only get four on a card, and a lot of the cards will support twice that much capacity," says Wellbrock.

With the smaller CFP2 and CFP4 form factors, 1.2 and 1.6 Terabit cards will become possible from 2015. Another possible development is a 400 Gigabit CFP, which would achieve similar overall capacity gains.
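The card capacities quoted follow from the number of ports multiplied by the rate per port. The article gives only the totals. The port counts below for CFP2 (12) and CFP4 (16) cards are assumptions, inferred from the 1.2 and 1.6 Terabit totals at 100 Gig per module.

```python
def card_capacity_tbps(ports: int, gbps_per_port: int) -> float:
    """Faceplate capacity of a line card in Tb/s."""
    return ports * gbps_per_port / 1000


# Only the four-CFP card is stated in the article; the other port counts are assumptions.
configs = {
    "4 x CFP (100G)": (4, 100),
    "12 x CFP2 (100G)": (12, 100),
    "16 x CFP4 (100G)": (16, 100),
    "4 x 400G CFP": (4, 400),
}
for name, (ports, gbps) in configs.items():
    print(f"{name}: {card_capacity_tbps(ports, gbps):.1f} Tb/s")
```

Four 400 Gigabit CFPs reach the same 1.6 Tb/s as sixteen 100 Gig CFP4s. This is the "similar overall capacity gains" the text refers to.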

Coherent, not just greater capacity

Verizon is looking for greater system integration and continues to encourage industry commonality in optical component building blocks to drive down cost and promote scale.

Indeed Verizon believes that industry developments such as MSAs and standards are working well. Wellbrock prefers standardisation to custom designs like 100 Gigabit direct detection modules or company-specific optical module designs.

Wellbrock stresses the importance of coherent receiver technology not only in enabling higher capacity links but also a dynamic optical layer. The coherent receiver adds value when it comes to colourless, directionless, contentionless (CDC) and flexible grid ROADMs.

"If you are going to have a very cost-effective 100 Gigabit because the ecosystem is working towards similar solutions, then you can say: 'Why don't I add in this agile photonic layer?' and then I can really start to do some next-generation networking things." This is only possible, says Wellbrock, because of the tunable filter offered by a coherent receiver, unlike direct detection technology with its fixed-filter design.

"Today, if you want to move from one channel to the next - wavelength 1 to wavelength 2 - you have to physically move the patch cord to another filter," says Wellbrock. "Now, the [coherent] receiver can simply tune the local oscillator to channel 2; the transmitter is full-band tunable, and now the receiver is full-band tunable as well." This tunability can be enabled remotely rather than requiring an on-site engineer.
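To illustrate remote channel selection, the sketch below models a coherent receiver whose channel is set by its local-oscillator frequency on the ITU-T G.694.1 fixed DWDM grid. That grid is anchored at 193.1 THz, and 50 GHz spacing is used here. The `CoherentReceiver` class and its `tune` method are hypothetical and ignore everything a real receiver does after mixing. The point is only that changing channel becomes a frequency setting rather than a patch-cord move.

```python
from typing import Optional

C = 299_792_458.0  # speed of light in vacuum, m/s


def grid_frequency_thz(n: int, spacing_ghz: float = 50.0) -> float:
    """ITU-T G.694.1 fixed DWDM grid: 193.1 THz + n x spacing."""
    return 193.1 + n * spacing_ghz / 1000


def wavelength_nm(freq_thz: float) -> float:
    """Vacuum wavelength for an optical frequency."""
    return C / (freq_thz * 1e12) * 1e9


class CoherentReceiver:
    """Hypothetical model: the channel is picked by the LO frequency, not a fixed filter."""

    def __init__(self) -> None:
        self.lo_thz: Optional[float] = None

    def tune(self, n: int, spacing_ghz: float = 50.0) -> float:
        """Retune remotely: set the local oscillator to grid channel n."""
        self.lo_thz = grid_frequency_thz(n, spacing_ghz)
        return self.lo_thz


rx = CoherentReceiver()
for channel in (1, 2):  # "wavelength 1 to wavelength 2", with no patch-cord move
    f = rx.tune(channel)
    print(f"channel {channel}: {f:.2f} THz ({wavelength_nm(f):.2f} nm)")
```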

Such wavelength agility promises greater network optimisation.

"How do we perhaps change some of our sparing policy? How do we change some of our restoration policies so that we can take advantage of that agile photonic layer?" says Wellbrock. "That is something that is only becoming available because of the coherent 100 Gigabit receivers."

Continued in Part 2.

FSAN adds WDM for next-generation PON standard

The Full Service Access Network (FSAN) group has chosen wavelength division multiplexing (WDM) to complement PON's traditional time-sharing scheme for the NG-PON2 standard.
The TWDM-PON scheme for NG-PON2 will enable operators to run several services over one network: residential broadband access, business services and mobile backhaul. In addition, NG-PON2 will support dedicated point-to-point links, via a WDM overlay, to meet more demanding service requirements.

FSAN will work through the International Telecommunication Union (ITU) to turn NG-PON2 into a standard. Standards-compliant NG-PON2 equipment is expected to become available by 2014 and be deployed by operators from 2015. But much work remains to flesh out the many details and ensure that the standard meets the operators' varied requirements.

Significance

The choice of TWDM-PON represents a pragmatic approach by FSAN. TWDM-PON has been chosen to avoid having to make changes to the operators' outside plant. Instead, changes will be confined to the PON's end equipment: the central office's optical line terminal (OLT) and the home or building's optical networking unit (ONU).

Operators yet to adopt PON technology may use NG-PON2's extended reach to consolidate their networks by reducing the number of central offices they manage. Operators that have already deployed PON may use NG-PON2 to boost broadband capacity while consolidating business and residential services onto one network.

US operator Verizon has deployed GPON and says the adoption of NG-PON2 will enable it to avoid the intermediate upgrade stage of XGPON (10Gbps GPON).

"The [NG-PON2] technology choice allows us to have a single platform supporting both business and residential services," says Vincent O'Byrne, director of technology, wireline access at Verizon. "With the TWDM wavelengths, we can split them: We could have a 10G/10G service or ten individual 1G/1G services and, in time, have also residential customers."

The technology choice for NG-PON2 is also good news for system vendors such as Huawei and Alcatel-Lucent that have already done detailed work on TWDM-PON systems.

Specification

NG-PON2's basic configuration will use four wavelengths, resulting in a 40Gbps PON. Support for eight (80G) and 16 (160G) wavelengths is also being considered.

Each wavelength will support 10Gbps downstream (from the central office to the end users) and 2.5Gbps upstream (XGPON) or 10Gbps symmetrical services for business users.

"The idea is to reuse as much as possible the XGPON protocol in TWDM-PON, and carry that protocol on multiple wavelengths," says Derek Nesset, co-chair of FSAN's NGPON task group.

The PON's OLT will support the 4, 8 or 16 wavelengths using lasers and photo-detectors as well as optical multiplexing, while the ONU will require a tunable laser and a tunable filter, to set the ONU to the PON's particular wavelengths.
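Because TWDM-PON carries the XGPON protocol on several wavelengths, its headline capacities are multiples of the per-wavelength rates. The sketch below reproduces the 40G, 80G and 160G figures. It also computes the average downstream share per ONU for a hypothetical 32 ONUs, spread evenly over four wavelengths and all busy at once. Real PONs allocate bandwidth dynamically, so this average is only a rough guide.

```python
DOWN_GBPS = 10.0                                      # per wavelength, downstream
UP_GBPS = {"XGPON-style": 2.5, "symmetrical": 10.0}   # per wavelength, upstream


def aggregate_gbps(wavelengths: int, per_wavelength: float) -> float:
    """TWDM capacity: each wavelength behaves as its own TDM PON."""
    return wavelengths * per_wavelength


def average_share_gbps(wavelengths: int, onus: int,
                       per_wavelength: float = DOWN_GBPS) -> float:
    """Mean downstream rate per ONU if ONUs are spread evenly and all are busy."""
    return aggregate_gbps(wavelengths, per_wavelength) / onus


for n in (4, 8, 16):
    print(f"{n} wavelengths: {aggregate_gbps(n, DOWN_GBPS):.0f}G down, "
          f"{aggregate_gbps(n, UP_GBPS['XGPON-style']):.0f}G up (XGPON-style)")
print(average_share_gbps(4, 32))  # hypothetical 32 ONUs on a 4-wavelength PON
```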

Other NG-PON2 specifications include the support of at least 1Gbps services per ONU and a target reach of 40km. NG-PON2 will also support 60-100km links but that will require technologies such as optical amplification.
What next?

"The big challenge and the first challenge is the wavelength plan [for NG-PON2]," says O'Byrne.

One proposal is for TWDM-PON's wavelengths to replace XGPON's. Alternatively, new unallocated spectrum could be assigned to ensure co-existence with existing GPON, RF video and XGPON. However, such a scheme will leave little spectrum available for NG-PON2. Some element of spectral flexibility will be required to accommodate the various co-existence scenarios in operator networks. That said, Verizon expects that FSAN will look for fresh wavelengths for NG-PON2.

"FSAN is a sum of operators' opinions and requirements, and it is getting hard," says O'Byrne. "Our preference would be to reuse XGPON wavelengths but, at the last meeting, some operators want to use XGPON in the coming years and aren't too favourable to recharacterising that band."

Another factor regarding spectrum is how widely the wavelengths will be spaced; 50GHz, 100GHz or the most relaxed 200GHz spacing are all being considered. The tradeoff here is hardware design complexity and cost versus spectral efficiency.
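The spacing trade-off shows up when counting how many wavelengths fit in a fixed window. The 800 GHz window below is hypothetical, since the wavelength plan is still undecided. Halving the spacing doubles the channel count, but it also demands tighter wavelength control from the ONU's tunable laser and filter.

```python
def channels_in_window(window_ghz: float, spacing_ghz: float) -> int:
    """Whole channels that fit in a spectral window at a given spacing."""
    return int(window_ghz // spacing_ghz)


WINDOW_GHZ = 800  # hypothetical window; the NG-PON2 wavelength plan is still open

for spacing in (200, 100, 50):
    print(f"{spacing} GHz spacing: {channels_in_window(WINDOW_GHZ, spacing)} channels")
```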

There is still work to be done to define the 10Gbps symmetrical rate. "Some folks are also looking for slightly different rates and these are also under discussion," says O'Byrne.

Another challenge is that TWDM-PON will also require the development of tunable optical components. "The ONUs should be something like the cost of a VDSL or a GPON modem, so there is a challenge there for the [tunable] laser manufacturers," says Nesset.

Tunable laser technology is widely used in optical transport, and high access volumes will help the economics, but this is not the case for tunable filters, he says.

The size and power consumption of PON silicon pose further challenges. NG-PON2 will have at least four times the capacity, yet operators will want the OLT to be the same size as for GPON.

Meanwhile, FSAN has several documents in preparation to help progress ITU activities relating to NG-PON2's standardisation.

FSAN has an established record of working effectively through the ITU to define PON standards, from Broadband PON (BPON) and Gigabit PON (GPON) through to XGPON, which operators are now planning to deploy.

FSAN members have already submitted an NG-PON2 requirements document to the ITU. "This sets the framework: what is it this system needs to do?" says Nesset. "This includes what client services it needs to support - Gigabit Ethernet and 10 Gigabit Ethernet, mobile backhaul latency requirements - high level things that the specification will then meet."

In June 2012 a detailed requirements document was submitted as was a preliminary specification for the physical layer. These will be followed by documents covering the NG-PON2 protocol and how the management of the PON end points will be implemented.

If rapid progress continues to be made, the standard could be ratified as early as 2013, says O'Byrne.

FSAN close to choosing the next generation of PON

Briefing: Next-gen PON

Part 1: NG-PON2

The next-generation passive optical network (PON) will mark a departure from existing PON technologies. Some operators want systems based on the emerging standard for deployment by 2015.

The Full Service Access Network (FSAN) industry group is close to finalising the next optical access technology that will follow on from 10 Gigabit GPON.

FSAN - the pre-standards forum consisting of telecommunications service providers, testing labs and equipment manufacturers - crafted what became the International Telecommunication Union's (ITU) standards for GPON (Gigabit PON) and 10 Gigabit GPON (XGPON1). In the past year FSAN has been working on NG-PON2, the PON technology that comes next.

“One of the goals in FSAN is to converge on one solution that can serve all the markets - residential users, enterprise and mobile backhaul," says Derek Nesset, co-chair of FSAN's NGPON task group.

Some mobile operators are talking about backhaul demands that will require multiple 10 Gigabit-per-second (Gbps) links to carry the common public radio interface (CPRI), for example. The key design goal, however, is that NG-PON2 retains the capability to serve residential users cost-effectively, stresses Nesset.

FSAN says it has a good description of each of the candidate technologies: what each system looks like and its associated power consumption. "We are trying to narrow down the solutions and the ideal is to get down to one,” says Nesset.

The power consumption of the proposed access scheme is of key interest for many operators, he says. Another consideration is the risk associated with moving to a novel architecture rather than adopting an approach that builds on existing PON schemes.

Operators such as NTT of Japan and Verizon in the USA have a huge installed base of PON and want to avoid having to amend their infrastructure for any next-generation PON scheme that cannot re-use power splitters. Other operators, such as former European incumbents, are in the early phases of their PON rollouts and have greenfield sites that could use other passive infrastructure such as arrayed waveguide gratings (AWGs).

"The ideal is we select a system that operates with both types of infrastructure," says Nesset. "Certain flavours of WDM-PON (wavelength division multiplexing PON) don't need the wavelength splitting device at the splitter node; some form of wavelength-tuning can be installed at the customer premises." That said, the power loss of existing optical splitters is higher than AWGs which impacts PON reach – one of several trade-offs that need to be considered.

Once FSAN has concluded its studies, member companies will generate 'contributions' for the ITU, intended for standardisation. The ITU has started work on defining high-level requirements for NG-PON2 through contributions from FSAN operators. Once the NG-PON2 technology is chosen, more contributions describing the physical layer, the media access controller and the management requirements of the customer premises equipment will follow.

Nesset says the target is to get such documents into the ITU by September 2012 but achieving wide consensus is the priority rather than meeting this deadline. "Once we select something in FSAN, we expect to see the industry ramp up its contributions based on that selected technology to the ITU," says Nesset. FSAN will select the NG-PON2 technology before September.

NG-PON2 technologies

Candidate technologies include an extension to the existing GPON and XGPON1 based on time-division multiplexing (TDM). Already vendors such as Huawei have demonstrated prototype 40 Gigabit capacity PON systems that also support hybrid TDM and WDM-PON (TWDM-PON). Other schemes include WDM-PON, ultra-dense WDM-PON and orthogonal frequency division multiplexing (OFDM).

Nesset says there are several OFDM variants being proposed. He views OFDM as ‘DSL in the optical domain’: sub-carriers finely spaced in the frequency domain, each carrying low-bit-rate signals.

One advantage of OFDM, says Nesset, is that narrowband components can deliver a broadband signal: a narrowband 10Gbps transmitter and receiver can achieve 40Gbps using sub-carriers, each carrying quadrature amplitude modulation (QAM). "All the clever work is done in CMOS - the digital signal processing and the analogue-to-digital conversion," he says. The DSP executes the fast Fourier transform (FFT) and the inverse FFT.
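To make the ‘DSL in the optical domain’ idea concrete, the Python sketch below maps bits onto QAM symbols, places one symbol on each sub-carrier with an inverse transform, and recovers the bits with a forward transform. It illustrates the OFDM principle only and is not any FSAN candidate. The 16-QAM constellation, the 16 sub-carriers, the noiseless channel, the absence of a cyclic prefix and all function names are assumptions. A naive discrete Fourier transform stands in for the FFT: it gives the same result, just more slowly.

```python
import cmath

# Assumption: 16-QAM, i.e. 4 bits per sub-carrier per OFDM symbol.
LEVELS = (-3, -1, 1, 3)

def qam16_map(bits):
    """Map each group of 4 bits to one 16-QAM point (2 bits for I, 2 for Q)."""
    if len(bits) % 4:
        raise ValueError("bit count must be a multiple of 4")
    return [complex(LEVELS[2 * bits[k] + bits[k + 1]],
                    LEVELS[2 * bits[k + 2] + bits[k + 3]])
            for k in range(0, len(bits), 4)]

def qam16_demap(symbols):
    """Pick the nearest amplitude level on I and Q and recover the bits."""
    bits = []
    for s in symbols:
        for v in (s.real, s.imag):
            idx = min(range(4), key=lambda n: abs(LEVELS[n] - v))
            bits += [idx >> 1, idx & 1]
    return bits

def idft(X):
    """Inverse DFT: one symbol per sub-carrier -> time-domain samples."""
    N = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * n / N) for k in range(N)) / N
            for n in range(N)]

def dft(x):
    """Forward DFT: time-domain samples -> one complex symbol per sub-carrier."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]

def ofdm_transmit(bits):
    # Each QAM symbol modulates its own sub-carrier; the IDFT sums them into one block.
    return idft(qam16_map(bits))

def ofdm_receive(samples):
    # The DFT separates the sub-carriers again, then each symbol is sliced to bits.
    return qam16_demap(dft(samples))

if __name__ == "__main__":
    bits = [int(b) for b in format(0xDEADBEEFCAFEF00D, "064b")]  # 64 bits -> 16 sub-carriers
    print(ofdm_receive(ofdm_transmit(bits)) == bits)
```

A real receiver would equalise each sub-carrier after the forward FFT. Here the channel is ideal, so the symbols come back unchanged.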

"We are trying to narrow down the solutions and the ideal is to get down to one"

Another candidate technology is WDM-PON, including an ultra-dense variant that promises a reach of up to 100km and 1,000 wavelengths. This variant uses a coherent receiver to tune to the finely spaced wavelengths.

In addition to compatibility with existing infrastructure, FSAN is also considering compatibility with existing PON standards. The aim is to avoid a wholesale upgrade of users. For example, the XGPON1 optical line terminal (OLT) uses an additional pair of wavelengths, known as a wavelength overlay, and sits alongside the existing GPON OLT. “The same principle is desirable for NG-PON2,” says Nesset.

However, each generation of PON is gobbling up spectrum. PON systems have been designed to be low cost, and their transmit lasers are not wavelength-locked, so they drift with ambient temperature. As a result, each PON wavelength occupies a wide band similar to a coarse WDM channel. Some operators, such as Verizon and NTT, also have a large installed base of analogue video overlay at 1550nm.

“So in the 1500 band you've got 1490nm for GPON, 1550nm for RF (radio frequency) video, and 1577nm for XGPON; there are only a few small gaps,” says Nesset. A technology that can exploit such gaps is desirable, but developing one is a challenge. “This is where ultra-dense WDM-PON could come into play,” he says. This technology could fit tens of channels in the small remaining spectrum gaps.
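Ultra-dense WDM-PON suits these small gaps because of how much bandwidth even a narrow wavelength gap contains. One rough way to show this is to convert the gap into optical bandwidth using the standard approximation Δf ≈ cΔλ/λ², then divide by a channel spacing. The Python sketch below does this. The 2nm gap, the 1560nm centre wavelength and the channel spacings are hypothetical values chosen for illustration, because the article does not give gap widths.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s

def gap_ghz(gap_nm, centre_nm):
    """Approximate optical bandwidth of a small wavelength gap: df = c * dl / l**2."""
    return C * (gap_nm * 1e-9) / (centre_nm * 1e-9) ** 2 / 1e9

def channels_in_gap(gap_nm, centre_nm, spacing_ghz):
    """Number of whole channels at a fixed spacing that fit inside the gap."""
    return math.floor(gap_ghz(gap_nm, centre_nm) / spacing_ghz)

if __name__ == "__main__":
    # Hypothetical 2nm gap near 1560nm; the spacings are illustrative values.
    print(f"gap width: {gap_ghz(2.0, 1560.0):.0f} GHz")
    for spacing in (100.0, 50.0, 12.5):
        print(f"{spacing:>5} GHz spacing: {channels_in_gap(2.0, 1560.0, spacing)} channels")
```

Under these assumptions the gap is roughly 246GHz wide. That leaves room for only two channels at 100GHz spacing but 19 at 12.5GHz spacing, and finer spacings would fit proportionally more.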

Operators are also concerned about the technological challenge of implementing advanced WDM-PON systems, which will likely require photonic integration. “The message from the vendors is that ‘when you tell us what to do, we have got the technology to do it’,” says Nesset. “But they need to see the volume applications to justify the investment.” Operators, however, must weigh the technological risks of developing these new technologies against the chance that the expected cost reductions never materialise.

Timetable

Nesset points out that each generation of PON has built on previous generations: GPON built on BPON and XGPON on GPON. But NG-PON2 will inevitably be based on new approaches. These include TWDM-PON, which is an evolution of XG-PON into the wavelength domain; virtual point-to-point approaches such as WDM-PON, which may also use an AWG; and the use of digital signal processing with OFDM or coherent ultra-dense WDM-PON. “It is quite a challenge to weigh up such diverse technological approaches,” says Nesset.

If all goes smoothly it will take two ITU plenary meetings, held every nine months, to finalise the bulk of the NG-PON2 standard. That could mean mid-2013 at the earliest.

FSAN's timetable is based on operators wanting systems deployable in 2015. That requires systems to be ready for testing in 2014.

“[Once deployed] we want NG-PON2 to last quite a while and be scalable and flexible enough to meet future applications and markets as they emerge,” says Nesset.

100 Gigabit: An operator view

Gazettabyte spoke with BT, Level 3 Communications and Verizon about their 100 Gigabit optical transmission plans and the challenges they see regarding the technology.

Briefing: 100 Gigabit

Part 1: Operators

Operators will use 100 Gigabit-per-second (Gbps) coherent technology for their next-generation core networks. For metro, operators favour coherent and have differing views regarding the alternative, 100Gbps direct-detection schemes. All the operators agree that the 100Gbps interfaces - line-side and client-side - must become cheaper before 100Gbps technology is more widely deployed.

"It is clear that you absolutely need 100 Gig in large parts of the network"

Steve Gringeri, Verizon

100 Gigabit status

Verizon is already deploying 100Gbps wavelengths in its European and US networks, and will complete its US nationwide 100Gbps backbone in the next two years.

"We are at the stage of building a new-generation network because our current network is quite full," says Steve Gringeri, a principal member of the technical staff at Verizon Business.

The operator first deployed 100Gbps coherent technology in late 2009, linking Paris and Frankfurt. Verizon's focus is on 100Gbps, having deployed a limited amount of 40Gbps technology. "We can also support 40 Gig coherent where it makes sense, based on traffic demands," says Gringeri.

Level 3 Communications and BT, meanwhile, have yet to deploy 100Gbps technology.

"We have not [made any public statements regarding 100 Gig]," says Monisha Merchant, Level 3’s senior director of product management. "We have had trials but nothing formal for our own development." Level 3 started deploying 40Gbps technology in March 2009.

BT expects to deploy new high-speed line rates before the year end. "The first place we are actively pursuing the deployment of initially 40G, but rapidly moving on to 100G, is in the core,” says Steve Hornung, director, transport, timing and synch at BT.

Operators are looking to deploy 100Gbps to meet growing traffic demands.

"If I look at cloud applications, video distribution applications and what we are doing for wireless (Long Term Evolution) - the sum of all the traffic - that is what is putting the strain on the network," says Gringeri.

Verizon is also transitioning its legacy networks onto its core IP-MPLS backbone, requiring the operator to grow its base infrastructure significantly. "When we look at demands there, it is clear that you absolutely need 100 Gig in large parts of the network," says Gringeri.

Level 3 points out that its network between any two cities has long carried far more than 100Gbps, so the demand has been there for years. The issue is the economics of the technology. "Right now, going to 100Gbps is significantly a higher cost than just deploying 10x 10Gbps," says Level 3's Merchant.

BT's core network comprises 106 nodes: 20 in a fully-meshed inner core, surrounded by an outer 86-node core. The core carries the bulk of BT's IP, business and voice traffic.

"We are taking specific steps and have business cases developed to deploy 40G and 100G technology: alternative line cards into the same rack," says Hornung.

Coherent and direct detection

Coherent has become the default optical transmission technology for operators' next-generation core networks.

BT says it is a 'no-brainer' that 400Gbps and 1 Terabit-per-second light paths will eventually be deployed in the network to accommodate growing traffic. "Rather than keep all your options open, we need to make the assumption that technology will essentially be coherent going forward because it will be the bandwidth that drives it," says Hornung.

Beyond BT's 106-node core is a backhaul network that links 1,000 points-of-presence (PoPs). It is in this part of the network that BT will consider 40Gbps and perhaps 100Gbps direct-detection technology. "If it [such technology] became commercially available, we would look at the price, the demand and use it, or not, as makes sense," says Hornung. "I would not exclude at this stage looking at any technology that becomes available." ADVA Optical Networking and MultiPhy are already promoting such direct-detection 100Gbps solutions.

However, Verizon believes coherent will also be needed for the metro. "If I look at my metro systems, you have even lower quality amplifiers, and generally worse signal-to-noise," says Gringeri. “Based on the performance required, I have no idea how you are going to implement a solution that isn't coherent."

Verizon believes coherent will be the implementation even for shorter-reach metro systems of 200 or 300km. This includes expanding existing deployments that carry 10Gbps light paths over dispersion-compensated fibre.

Level 3 says it is not wedded to a technology but rather a cost point. As a result it will assess a technology if it believes it will address the operator's needs and has a cost performance advantage.

100 Gig deployment stages

The cost of 100Gbps technology remains a key challenge impeding wider deployment. This is not surprising since 100Gbps technology is still immature and systems shipping are first-generation designs.

Operators are willing to pay a premium to deploy 100Gbps light paths at network pinch-points because doing so is cheaper than lighting a new fibre.

Metro deployments of new technology such as 100Gbps generally occur once the long-haul network has been upgraded. By then the technology is more mature and better suited to the cost-conscious metro.

Applications that will drive metro 100Gbps include linking data centres and enterprises. But Level 3 expects enterprises to take another five years to move from requesting 10 Gigabit services to 100 Gigabit ones.

Verizon highlights two 100Gbps priorities. The first is high-performance dense WDM systems. The second is client-side 'grey' (non-WDM) optics, which connect equipment over distances ranging from 100m on ribbon cable to 2km or 10km and beyond on single-mode fibre.

"I would not exclude at this stage looking at any technology that becomes available"

Steve Hornung, BT

"Grey optics are very costly, especially if I’m going to stitch the network and have routers and other client devices and potential long-haul and metro networks, all of these interconnect optics come into play," says Gringeri.

Verizon is a strong proponent of a new 100Gbps serial interface over 2km or 10km. At present there are the 100 Gigabit interface and the 10x10 MSA. However Gringeri says it will be 2-3 years before such a serial interface becomes available. "Getting the price-performance on the grey optics is my number one priority after the DWDM long haul optics," says Gringeri.

Once 100Gbps client-side interfaces do come down in price, operators' PoPs will be used to link other locations in the metro to carry the higher-capacity services, he says.

The final stage of the rollout of 100Gbps will be single point-to-point connections. This is where grey 100Gbps comes in, says Gringeri, based on 40 or 80km optical interfaces.

Source: Gazettabyte

Tackling costs

Operators are confident regarding the vendors’ cost-reduction roadmaps. "We are talking to our clients about second, third, even fourth generation of coherent," says Gringeri. "There are ways of making extremely significant price reductions."

Gringeri points to further photonic integration and reducing the sampling rate of the coherent receiver ASIC's analogue-to-digital converters. "With the DSP [ASIC], you can look to lower the sampling rate," says Gringeri. "A lot of the systems do 2x sampling and you don't need 2x sampling."

The filtering used for dispersion compensation can also be simpler for shorter-reach spans. "The filter can be shorter - you don't need as many [digital filter] taps," says Gringeri. "There are a lot of optimisations and no one has made them yet."
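Both of Gringeri's points appear in a widely quoted rule of thumb from the coherent-receiver literature. It estimates the length of a time-domain FIR filter that compensates chromatic dispersion as N = 2⌊|D|λ²z/(2cT²)⌋ + 1, where D is the fibre dispersion, z the span length and T the sample period. The tap count grows with distance. Because T is the sample period, lowering the oversampling ratio also cuts the taps. The Python sketch below assumes standard single-mode fibre at 17 ps/(nm·km), a 28 GBaud symbol rate and the span lengths shown. These are illustrative values, not figures from Verizon or any vendor.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s

def cd_filter_taps(dispersion_ps_nm_km, length_km, baud_g, samples_per_symbol,
                   wavelength_nm=1550.0):
    """Rule-of-thumb FIR length for chromatic-dispersion compensation:
    N = 2 * floor(|D| * lambda**2 * z / (2 * c * T**2)) + 1, T = sample period."""
    d = dispersion_ps_nm_km * 1e-6                  # ps/(nm*km) -> s/m^2
    lam = wavelength_nm * 1e-9                       # wavelength, m
    z = length_km * 1e3                              # span length, m
    t = 1.0 / (baud_g * 1e9 * samples_per_symbol)    # sample period, s
    return 2 * math.floor(abs(d) * lam ** 2 * z / (2 * C * t ** 2)) + 1

if __name__ == "__main__":
    # Hypothetical 28 GBaud signal over standard single-mode fibre.
    for km, sps in ((1500, 2.0), (300, 2.0), (300, 1.5)):
        print(f"{km:>5} km at {sps} samples/symbol: {cd_filter_taps(17, km, 28, sps)} taps")
```

Under these assumptions a 300km metro span needs 129 taps at 2 samples per symbol, compared with 641 for 1,500km. Dropping to 1.5 samples per symbol cuts the 300km filter to 73 taps.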

There is also the move to pluggable CFP modules for line-side coherent optics and to the CFP2 for client-side 100Gbps interfaces. At present the only line-side 100Gbps pluggable is based on direct detection.

"The CFP is a big package," says Gringeri. "That is not the grey optics package we want in the future, we need to go to a much smaller package long term."

For the line-side there is also the issue of the digital signal processor's (DSP) power consumption. "I think you can fit the optics in but I'm very concerned about the power consumption of the DSP - these DSPs are 50 to 80W in many current designs," says Gringeri.

One obvious solution is to move the DSP out of the module and onto the line card. "Even if they can extend the power number of the CFP, it needs to be 15 to 20W," says Gringeri. "There is an awful lot of work to get where you are today to 15 to 20W."

* Monisha Merchant left Level 3 before the article was published.

Further Reading:

100 Gigabit: The coming metro opportunity - a position paper

Part 2: Next-gen 100 Gig Optics

Capella: Why the ROADM market is a good place to be

The reconfigurable optical add-drop multiplexer (ROADM) market has been the best performing segment of the optical networking market over the last year. According to Infonetics Research, ROADM-based wavelength division multiplexing (WDM) equipment grew 20% from Q2, 2010 to Q1, 2011 whereas the overall optical networking market grew 7%.

“It’s the Moore’s Law: Every two years we are doubling the capacity in terms of channel count and port count”

Larry Schwerin, Capella

The ROADM market has since slowed down but Larry Schwerin, CEO of wavelength-selective-switch (WSS) provider, Capella Intelligent Subsystems, says the market prospects for ROADMs remain solid.

Capella makes WSS products that steer and monitor light at network nodes. The company’s core intellectual property is closed-loop control, and its WSS products are compact, athermal designs based on MEMS technology.

Schwerin compares Capella to a plumbing company: “We clean out pipes and those pipes happen to be fibre-optics ones.” The reason such pipes need ‘cleaning’ – to be made more efficient - is because of the content they carry. “It is bandwidth demand and the nature of the bandwidth which has changed dramatically, that is the fundamental driver here,” says Schwerin.

Increasingly, the content is high-bandwidth video. It is streamed to end-user devices that are no longer confined to the home, and the video requested is increasingly user-specific. Such changes in the nature of content are affecting the operators’ distribution networks.

“Using Verizon as an example, they are now pushing 50 wavelengths per fibre in the metro,” says Schwerin. Such broad lanes of traffic arrive at network congestion points where certain fibre is partially used while other fibre is heavily used. “What they [operators] need is a vehicle that allows them to dynamically and remotely reassign those wavelengths on-the-fly,” says Schwerin. “That is what the ROADM does.”

Capella attributes strong ROADM sales to a maturing of the technology coupled with a price reduction. The technology also brings valuable flexibility at the optical layer. “It [ROADM] extends the life of the existing infrastructure, avoiding the need for capital to put new fibre in - which is the last thing the operators want to do,” says Schwerin.

$20M funding

Capella raised US $20M in April as part of its latest funding round. The funding is being used for capital expansion and R&D. “We are working on new engine technology, new patentable concepts,” says Schwerin. “We were at Verizon a few weeks ago doing a world-first demo which we will be putting out as a press release.” For now the company will say that the demonstration is research-oriented and will not be implemented within ROADM systems anytime soon.

“You have to be competitive in this market, that is the downfall of our sector. People getting 30 or 40% gross margins and calling that a win – that is not a win - that is why this sector is in trouble”

One investor in the latest funding round is SingTel Innov8, the investment arm of the operator SingTel. Schwerin says it has no specific venture with the operator but that SingTel will gain insight regarding switching technologies due to the investment. “We will sit down with them and talk about their plans for network evolution and what is technologically possible,” says Schwerin, who points out that many of the carriers have lost contact with technologies since they shed their own large, in-house R&D arms.

Capella offers two 1x9 WSS products and will add a 1x20 product by the end of this year. “It’s the Moore’s Law: Every two years we are doubling the capacity in terms of channel count and port count,” says Schwerin.

“We have a reasonable share of design wins shipping in volume - we have thousands of switches deployed throughout the world,” says Schwerin. “We are not of the size of a JDSU or a Finisar but our objective within the next 18 months is to capture enough market share that you would see us as a main supplier of that ilk.”

The CEO stresses that Capella’s presence a decade after the optical boom ended proves it is offering distinctive products. “Our whole business model is about innovation and differentiation,” says Schwerin.

But as a start-up how can Capella compete with a JDSU or a Finisar? “I have these conversations with the carriers: if all they are doing is looking for second or third sourcing of commodity product parts then there is no room for a company like a Capella.”

The key is taking a dumb switch and turning it into a complete wavelength managed solution that can be easily added within the network.

Schwerin also stresses the importance of ROADM specsmanship: wider lightpath channel passbands, lower insertion loss, smaller size, lower power consumption and competitive pricing: “You have to be competitive in this market, that is the downfall of our sector,” says Schwerin. “People getting 30 or 40% gross margins and calling that a win – that is not a win - that is why this sector is in trouble.”

Advanced ROADM features

There has been much discussion in the last year regarding the four advanced attributes being added to ROADM designs: colourless, directionless, contentionless and gridless or CDCG for short.

Six system vendors were interviewed late last year. All claimed they could support CDCG features, but views varied as to what would be needed and by when. All the vendors were also being cautious until it became clearer what operators needed.

Schwerin says that what the operators really want is a ‘touchless’ ROADM. Capella says its platform is capable of supporting each of the four attributes and that the company has plans for implementing each one. “Just because the carriers say they want it, that doesn’t mean that they are willing to pay for it,” says Schwerin. “And given the intense pricing pressure our system friends are in, they are rightly being cautious.”

Capella says that talking to the carriers doesn’t necessarily answer the issue since views vary as to what is needed. “The one [attribute] that seems clearest of all is colourless,” says Schwerin. And colourless is served using higher-port-count WSSs.

The directionless attribute is more a question of implementation and the good news is that it requires more WSSs, says Schwerin. Contentionless addresses the issue of wavelength blocking and is the most vague, a requirement that has even “faded away a bit”. As for gridless, that may be furthest out as it has ramifications in the network.

Schwerin says that Capella is seeing requests for reduced WSS switching times and for wavelength tracking. Tracking involves tagging a wavelength with a signature that can be identified optically. This is useful for network restoration and when wavelengths are passed between carriers’ networks.

Roadmap

In terms of product plans, Capella will launch a 1x20 WSS product later this year. The next logical step in the development of WSS technology is moving to a solid-state-based design.

“All of the technologies out there today - liquid crystal, MEMS, liquid-crystal-on-silicon - are all free space [designs],” says Schwerin. “We have a solid-state engine in the middle [of our WSS] and we are down to five photonic-integrated-circuit components so the obvious next stage is silicon photonics.”

Does that mean a waveguide-based design? “Something of that form – it may not be a waveguide solution but something akin to that - but the idea is to get it down to a chip,” says Schwerin. “We are not pure silicon photonics but we are heading that way.”

Such a compact chip-based WSS design is probably five years out, concludes Schwerin.

Further information:

A Fujitsu ROADM discussion with Verizon and Capella – a 30-minute YouTube video

Operators want to cut power by a fifth by 2020

Part 2: Operators’ power efficiency strategies

Service providers have set themselves ambitious targets to reduce their energy consumption by a fifth by 2020. The power reduction will coincide with an expected thirty-fold increase in traffic in that period. Given the cost of electricity and operators’ requirements, such targets are not surprising: KPN, with its 12,000 sites in The Netherlands, consumes 1% of the country’s electricity.
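Together, the two targets imply a far steeper fall in energy per bit than the headline fifth. The short Python calculation below divides the energy ratio (0.8, i.e. a fifth less) by the traffic ratio (30). The 10-year span used for the annual rate is an assumption, because the baseline year varies between operators.

```python
def energy_per_bit_ratio(energy_ratio, traffic_ratio):
    """Future energy per bit as a fraction of today's."""
    return energy_ratio / traffic_ratio

def annual_improvement(total_ratio, years):
    """Constant yearly reduction that compounds to total_ratio over the given years."""
    return 1 - total_ratio ** (1 / years)

ratio = energy_per_bit_ratio(0.8, 30)  # a fifth less energy, thirty times the traffic

if __name__ == "__main__":
    print(f"energy per bit: {ratio:.1%} of today's, a {1 - ratio:.1%} reduction")
    print(f"per year over a hypothetical 10 years: {annual_improvement(ratio, 10):.1%}")
```

Energy per bit must fall to under 3% of today's level, a reduction of about 97%. Spread evenly over a hypothetical ten years, that is roughly a 30% improvement every year.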

“We also have to invest in capital expenditure for a big swap of equipment – in mobile and DSLAMs”

Philippe Tuzzolino, France Telecom-Orange

Operators stress that power consumption concerns are not new. But Marga Blom, manager, energy management group at KPN, says steep rises in electricity prices have made the issue pressing. “It is becoming a significant part of our operational expense,” she says.

"We are getting dedicated and allocated funds specifically for energy efficiency,” adds John Schinter, AT&T’s director of energy. “In the past, energy didn’t play anywhere near the role it does today.”

Power reduction strategies

Service providers have adopted several approaches to reduce their power requirements.

Upgrading their equipment is one. Newer platforms are denser with higher-speed interfaces while also supporting existing technologies more efficiently. Verizon, for example, has deployed 100 Gigabit-per-second (Gbps) interfaces for optical transport and for its IT systems in Europe. The 100Gbps systems are no larger than existing 10Gbps and 40Gbps platforms and while the higher-speed interfaces consume more power, overall power-per-bit is reduced.

“There is a business case based on total cost of ownership for migrating to newer platforms.”

Marga Blom, KPN

Reducing the number of facilities is another approach. BT and Deutsche Telekom are significantly reducing the number of local exchanges they operate. France Telecom is consolidating a dozen data centres in France and Poland into two and filling both with new, more energy-efficient equipment. The consolidation improves power usage effectiveness (PUE), an important data centre efficiency measure, and halves the energy used by France Telecom’s data centre cooling systems.
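PUE is defined as total facility power divided by the power consumed by the IT equipment, so a value of 1.0 would mean zero overhead. The Python sketch below uses hypothetical loads, because the article gives none. It shows how halving the cooling energy while leaving the IT load unchanged lowers PUE.

```python
def pue(it_kw, cooling_kw, other_overhead_kw):
    """Power usage effectiveness: total facility power / IT equipment power."""
    total_kw = it_kw + cooling_kw + other_overhead_kw
    return total_kw / it_kw

# Hypothetical loads in kW; only the cooling figure changes.
before = pue(it_kw=1000, cooling_kw=600, other_overhead_kw=100)
after = pue(it_kw=1000, cooling_kw=300, other_overhead_kw=100)

if __name__ == "__main__":
    print(f"PUE before: {before:.2f}, after halving cooling: {after:.2f}")
```

With these made-up figures PUE falls from 1.70 to 1.40.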

“PUE started with data centres but it is relevant in the future central office world,” says Brian Trosper, vice president of global network facilities/ data centers at Verizon. “As you look at the evolution of cloud-based services and virtualisation of applications, you are going to see a blurring of data centres and central offices as they interoperate to provide the service.”

Belgacom plans to upgrade its mobile infrastructure with 20% more energy-efficient equipment over the next two years as it seeks a 25% network energy efficiency improvement by 2020. France Telecom is committed to a 15% reduction in its global energy consumption by 2020 compared to the level in 2006. Meanwhile KPN has almost halted growth in its energy demands with network upgrades despite strong growth in traffic, and by 2012 it expects to start reducing demand. KPN’s target by 2020 is to reduce energy consumption by 20 percent compared to its network demands of 2005.

Fewer buildings, better cooling

Philippe Tuzzolino, environment director for France Telecom-Orange, says energy consumption is rising in its core network and data centres due to the ever increasing traffic and data usage but that power is being reduced at sites using such techniques as virtualisation of servers, free-air cooling, and increasing the operating temperature of equipment. “We employ natural ventilation to reduce the energy costs of cooling,” says Tuzzolino.

“Everything we do is going to be energy efficient.”

Brian Trosper, Verizon

Verizon uses techniques such as alternating ‘hot’ and ‘cold’ aisles of equipment and real-time smart-building sensing to tackle cooling. “The building senses the environment, where cooling is needed and where it is not, ensuring that the cooling systems are running as efficiently as possible,” says Trosper.

Verizon also points to vendor improvements in back-up power supply equipment such as DC power rectifiers and uninterruptible power supplies. Such equipment is always on and has traditionally been only 50% efficient. “If they are losing 50% power before they feed an IP router that is clearly very inefficient,” says Chris Kimm, Verizon's vice president, network field operations, EMEA and Asia-Pacific. Manufacturers have now raised the efficiency of such power equipment to 90-95%.
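The cost of inefficient conversion is easy to quantify. To deliver a given load through a stage of efficiency η, the equipment must draw load/η from the supply, and stages in series multiply their efficiencies. The Python sketch below applies the 50% and 90-95% figures quoted above to a hypothetical 10kW router load. The load and the chaining of two stages are illustrative assumptions.

```python
def input_power(load_kw, *efficiencies):
    """Supply power needed to deliver load_kw through conversion stages in series."""
    overall = 1.0
    for eff in efficiencies:
        overall *= eff  # efficiencies of cascaded stages multiply
    return load_kw / overall

ROUTER_KW = 10.0  # hypothetical router load

if __name__ == "__main__":
    for eff in (0.50, 0.90, 0.95):
        drawn = input_power(ROUTER_KW, eff)
        print(f"{eff:.0%} efficient: draws {drawn:.1f} kW, wastes {drawn - ROUTER_KW:.1f} kW")
    # Hypothetical chain: a rectifier and a UPS, each 95% efficient.
    print(f"two 95% stages: draws {input_power(ROUTER_KW, 0.95, 0.95):.1f} kW")
```

At 50% efficiency the supply draws 20kW to deliver the router's 10kW, while at 95% it draws about 10.5kW.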

France Telecom forecasts that its data centre and site energy-saving measures will only work until 2013, after which power consumption will rise again. “We also have to invest in capital expenditure for a big swap of equipment – in mobile and DSLAMs [access equipment],” says Tuzzolino.

Newer platforms support advanced networking technologies and more traffic while supporting existing technologies more efficiently. This allows operators to move their customers onto the newer platforms and decommission the older power-hungry kit.

“Technology is changing so rapidly that there is always a balance between installing new, more energy efficient equipment and the effort to reduce the huge energy footprint of existing operations”

John Schinter, AT&T

Operators also use networking strategies to achieve efficiencies. Verizon is deploying a mix of equipment in its global private IP network used by enterprise customers. It is deploying optical platforms in new markets to connect to local Ethernet service providers. “We ride their Ethernet clouds to our customers in one market, whereas layer 3 IP routing may be used in an adjacent, next most-upstream major market,” says Kimm. The benefit of the mixed approach is greater efficiencies, he says: “Fewer devices to deploy, less complicated deployments, less capital and ultimately less power to run them.”

Verizon is also reducing the real estate it uses as it retires older equipment. “One trend we are seeing is more, relatively empty-looking facilities,” says Kimm. The problem is no longer facilities crammed with equipment, he says. Instead, sites are now limited by their power and cooling capacity.

“You have to look at the full picture end-to-end,” says Trosper. “Everything we do is going to be energy efficient.” That includes the system vendors and the energy-saving targets Verizon demands of them, how it designs its network, the facilities where the equipment resides and how they are operated and maintained, he says.

Meanwhile, France Telecom says it is working with 19 operators, including Vodafone, Telefonica, BT, DT, China Telecom and Verizon, as well as organisations such as the ITU and ETSI. Together they aim to define standards for DSLAMs and base stations that will help operators meet their energy targets.

Tuzzolino stresses that France Telecom’s capital expenditure will depend on how energy costs evolve. Energy prices will dictate when France Telecom will need to invest in equipment, and to what degree, to deliver the required return on investment.

The operator has defined capital expenditure spending scenarios - from a partial to a complete equipment swap from 2015 - depending on future energy costs. New services will clearly dictate operators’ equipment deployment plans but energy costs will influence the pace.

“If they [DC power rectifiers and UPSs] are losing 50% power before they feed an IP router that is clearly very inefficient”

Chris Kimm, Verizon.

Justifying capital expenditure on the basis of energy savings, and hence operational expense savings, is now ‘part of the discussion’, says KPN’s Blom: “There is a business case based on total cost of ownership for migrating to newer platforms.”

Challenges

But if operators are generally pleased with the progress they are making, challenges remain.

“Technology is changing so rapidly that there is always a balance between installing new, more energy efficient equipment and the effort to reduce the huge energy footprint of existing operations,” says AT&T’s Schinter.

“The big challenge for us is to plan the capital expenditure effort such that we achieve the return-on-investment based on anticipated energy costs,” says Tuzzolino.

Another aspect is regulation, says Tuzzolino. The European Commission (EC) is considering how ICT can contribute to reducing the energy demands of other industries, he says. “We have to plan to reduce energy consumption because ICT will increasingly be used in [other sectors like] transport and smart grids.”

Verizon highlights the challenge of successfully managing large-scale equipment substitution and other changes that bring benefits while serving existing customers. “You have to keep your focus in the right place,” says Kimm.

Part 1: Standards and best practices