Next-generation coherent adds sub-carriers to capabilities

Part 2: Infinera's coherent toolkit

Source: Infinera

Infinera has detailed coherent technology enhancements implemented using its latest-generation optical transmission technology. The system vendor is still to launch its newest photonic integrated circuit (PIC) and FlexCoherent DSP-ASIC but has detailed features the CMOS and indium phosphide ICs support.

The techniques highlight the increasing sophistication of coherent technology and an ever tighter coupling between electronics and photonics.

The company has demonstrated the technology, dubbed the Advanced Coherent Toolkit (ACT), on a Telstra 9,000km submarine link spanning the Pacific. In particular, the demonstration used matrix-enhanced polarisation-multiplexed, binary phase-shift keying (PM-BPSK) that enabled the 9,000km span without optical signal regeneration.

Using the ACT is expected to extend the capacity-reach product for links by the order of 60 percent. Indeed the latest coherent technology with transmitter-based digital signal processing delivers 25x the capacity-reach of 10-gigabit wavelengths using direct-detection, the company says.

Infinera’s latest PIC technology includes polarisation-multiplexed, 8-quadrature amplitude modulation (PM-8QAM) and PM-16QAM schemes. Its current 500-gigabit PIC supports PM-BPSK, PM-3QAM and PM-QPSK. The PIC is expected to support a 1.2-terabit super-channel and using PM-16QAM could deliver 2.4 terabit.

“This [the latest PIC] is beyond 500 gigabit,” confirms Pravin Mahajan, Infinera’s director of product and corporate marketing. “We are talking terabits now.”

Sterling Perrin, senior analyst at Heavy Reading, sees the Infinera announcement as less PIC related and more an indication of the expertise Infinera has been accumulating in areas such as digital signal processing.

Nyquist sub-carriers

Infinera is the first to announce the use of sub-carriers. Instead of modulating the data onto a single carrier, Infinera is using multiple Nyquist sub-carriers spread across a channel.

Using a flexible grid, the sub-carriers span a 37.5GHz-wide channel. In the example shown above, six are used although the number is variable depending on the link. The sub-carriers occupy 35GHz of the band while 2.5GHz is used as a guard band.
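As a rough sketch of the arithmetic in this example, the snippet below divides the 35GHz occupied band equally among the sub-carriers and lists their centre offsets. Equal-width sub-carriers and a guard band split evenly between the two channel edges are assumptions made for illustration; Infinera has not published the actual layout, and the function name is hypothetical.

```python
def subcarrier_layout(channel_ghz=37.5, guard_ghz=2.5, n_subcarriers=6):
    """Return (width, centre offsets) in GHz for equal-width sub-carriers.

    Offsets are measured from the channel centre. Equal widths and a guard
    band split evenly between both channel edges are assumptions.
    """
    occupied = channel_ghz - guard_ghz          # 35 GHz in the article's example
    width = occupied / n_subcarriers            # bandwidth per sub-carrier
    lower_edge = -occupied / 2                  # lower edge of the occupied band
    centres = [lower_edge + (i + 0.5) * width for i in range(n_subcarriers)]
    return width, centres


width, centres = subcarrier_layout()
print(f"sub-carrier width: {width:.2f} GHz")
print("centre offsets (GHz):", [round(c, 2) for c in centres])
```

With six sub-carriers each is roughly 5.8GHz wide, which under ideal Nyquist shaping (zero roll-off) would correspond to a symbol rate of about 5.8 Gbaud per sub-carrier.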

“Information you were carrying across one carrier can now be carried over multiple sub-carriers,” says Mahajan. “The benefit is that you can drive this as a lower-baud rate.”

Lowering the baud rate increases the tolerance to non-linear channel impairments experienced during optical transmission. “The electronic compensation is also much less than what you would be doing at a much higher baud rate,” says Abhijit Chitambar, Infinera’s principal product and technology marketing manager.

While the industry is looking to increase overall baud rate to increase capacity carried and reduce cost, the introduction of sub-carriers benefits overall link performance. “You end up with a better Q value,” says Mahajan. The ‘Q’ refers to the Quality Factor, a measure of the transmission’s performance. The Q Factor combines the optical signal-to-noise ratio (OSNR) and the optical bandwidth of the photo-detector, providing a more practical performance measure, says Infinera.
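For readers who want to relate a Q value to a bit error rate, the textbook Gaussian-noise approximation is BER = 0.5 x erfc(Q / sqrt(2)), with Q in decibels defined as 20 x log10(Q). The sketch below applies that standard relation; it is not Infinera's measurement method, and the sample Q values are arbitrary.

```python
import math


def q_db_to_ber(q_db):
    """Convert a Q factor in dB to an estimated pre-FEC bit error rate.

    Assumes Gaussian noise statistics (the usual textbook approximation).
    """
    q_linear = 10 ** (q_db / 20)                      # Q[dB] = 20 * log10(Q)
    return 0.5 * math.erfc(q_linear / math.sqrt(2))


for q_db in (6.0, 8.5, 10.0, 12.0):                   # arbitrary sample values
    print(f"Q = {q_db:4.1f} dB -> BER ~ {q_db_to_ber(q_db):.2e}")
```

A higher Q means a lower error rate before forward-error correction, which is why a better Q translates into extra link margin.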

Infinera has not detailed how it implements the sub-carriers. But it appears to be a combination of the transmitter PIC and the digital-to-analogue converter of the coherent DSP-ASIC.

It is not clear what the hardware implications of adopting sub-carriers are and whether the overall DSP processing is reduced, lowering the ASIC’s power consumption. But using sub-carriers promotes parallel processing and that promises chip architectural benefits.

“Without this [sub-carrier] approach you are talking about upping baud rate,” says Mahajan. “We are not going to stop increasing the baud rate, it is more a question of how much you can squeeze with what is available today.”

SD-FEC enhancements

The FlexCoherent DSP also supports enhanced soft-decision forward-error correction (SD-FEC) including the processing of two channels that need not be contiguous.

SD-FEC delivers enhanced performance compared to conventional hard-decision FEC. Hard-decision FEC decides whether a received bit is a 1 or a 0; SD-FEC also uses a confidence measure as to the likelihood of the bit being a 1 or 0. This additional information results in a net coding gain of 2dB compared to hard-decision FEC, benefiting reach and extending the life of submarine links.
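The difference between the two approaches can be illustrated with a toy example. The sketch below sends one bit three times (a simple repetition code, chosen only for illustration and far weaker than any real SD-FEC) and compares a majority vote on sliced bits with a decision on the summed analogue samples. The BPSK mapping and the sample values are assumptions.

```python
def hard_decision_vote(samples):
    """Slice each sample to a bit first, then take a majority vote."""
    # Assumed BPSK mapping: positive sample -> bit 0, negative sample -> bit 1
    bits = [1 if s < 0 else 0 for s in samples]
    return 1 if sum(bits) > len(bits) / 2 else 0


def soft_decision_combine(samples):
    """Sum the raw samples, then decide once.

    With equal noise on every sample, each sample is proportional to its
    log-likelihood ratio, so the sum keeps the confidence information.
    """
    return 1 if sum(samples) < 0 else 0


# Two weak positive samples and one strong negative sample (made-up values)
received = [0.1, 0.2, -1.5]
print("hard decision:", hard_decision_vote(received))     # prints 0
print("soft decision:", soft_decision_combine(received))  # prints 1
```

The hard-decision vote discards the fact that the two positive samples were barely above the threshold, whereas the soft decision lets the one confident sample outweigh them.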

By pairing two channels, Infinera shares the FEC codes. By pairing a strong channel with a weak one and sharing the codes, some of the strength of the strong signal can be traded to bolster the weaker one, extending its reach or even allowing for a more advanced modulation scheme to be used.

The SD-FEC can also trade performance with latency. SD-FEC uses as much as a 35 percent overhead and this adds to latency. Trading the two supports those routes where low latency is a priority.

Matrix-enhanced PSK

Infinera has implemented a technique that enhances the performance of PM-BPSK used for the longest transmission distances such as sub-sea links. The matrix-enhancement uses a form of averaging that adds about a decibel of gain. “Any innovation that adds gain to a link, the margin that you give to operators is always welcome,” says Mahajan.

The toolkit also supports the fine-tuning of channel widths. This fine-tuning allows the channel spacing to be tailored for a given link as well as better accommodating the Nyquist sub-carriers.

Product launch

The company has not said when it will launch its terabit PIC and FlexCoherent DSP.

“Infinera is saying it is the first announcing Nyquist sub-carriers, which is true, but they don’t give a roadmap when the product is coming out,” says Heavy Reading’s Perrin. “I suspect that Nokia [Alcatel-Lucent], Ciena and Huawei are all innovating on the same lines.”

There could be a slew of announcements around the time of the OFC show in March, says Perrin: “So Infinera could be first to announce but not necessarily first to market.”

Finisar adds silicon photonics to its technology toolkit

- Finisar revealed its in-house silicon photonics design capability at ECOC

- The company also showed its latest ROADM technologies: a dual wavelength-selective switch and a high-resolution optical channel monitor.

- Also shown was an optical amplifier that spans 400km fibre links

Finisar demonstrated at ECOC its first optical design implemented using silicon photonics. The photonic integrated circuit (PIC) uses a silicon photonics modulator and receiver and was shown operating at 50 Gigabit-per-second.

The light source used with the PIC was a continuous wave distributed feedback (DFB) laser. One Finisar ECOC demonstration showed the eye diagram of the 50 Gig transmitter using non-return-to-zero (NRZ) signalling. Separately, a 40 Gig link using this technology was shown operating error-free over 12km of single mode fibre.

"Finisar, and its fab partner STMicroelectronics, surprised the market with the 50 Gig silicon photonics demonstration,” says Daryl Inniss, practice leader of components at Ovum.

"This, to our knowledge, was the first public demonstration of silicon photonics running at such a high speed," says Rafik Ward, vice president of marketing at Finisar. However, the demonstrations were solely to show the technology's potential. "We are not announcing any new products," he says.

Potential applications for the PIC include the future 50 Gig IEEE Ethernet standard, as well as a possible 40 Gig serial Ethernet standard. "Also next-generation 400 Gig Ethernet and 100 Gig Ethernet using 50 Gig lanes," says Ward. "All these things are being discussed within the IEEE."

Jerry Rawls, co-founder and chairman of Finisar, said in an interview a year ago that the company had not developed any silicon photonics-based products as the technology had not shown any compelling advantage compared to its existing optical technologies.

Now Finisar has decided to reveal its in-house design capability as the technology is at a suitable stage of development to show to the industry. It is also timely, says Ward, given the many topics and applications being discussed in the standards work.

The company sees silicon photonics as part of its technology toolkit available to its engineers as they tackle next-generation module designs.

Finisar unveiled a vertical-cavity surface-emitting laser (VCSEL) operating at 40 Gig at the OFC show held in March. The 40 Gig VCSEL demonstration also used NRZ signalling. IBM has also published a technical paper that used Finisar's VCSEL technology operating at 50 Gbps.

"What we are trying to do is come up with solutions where we can enable a common architecture between the short wave and the long wave optical modules," says Ward. "These two complementary technologies [VCSELs and silicon photonics] work well together as we think about the next-generation Ethernet applications."

Cisco Systems, also a silicon photonics proponent, was quoted in the accompanying Finisar ECOC press release as being 'excited' to see Finisar advancing the development of silicon photonics technology. "Cisco is our biggest customer," says Ward. "We see this as a significant endorsement from a very large user of optical modules." Cisco acquired silicon photonics start-up Lightwire for $271 million in March 2012.

ROADM technologies

Finisar also demonstrated two products for reconfigurable optical add/ drop multiplexers (ROADM): a dual configuration wavelength-selective switch (WSS) and an optical channel monitor (OCM).

The dual-configuration WSS is suited to route-and-select ROADM architectures.

Two architectures are used for ROADMs: broadcast-and-select and route-and-select. With broadcast-and-select, incoming channels are routed in the various directions using a passive splitter that in effect makes copies of the incoming signal. To route signals in the outgoing direction, a 1xN WSS is used. However, due to the optical losses of the splitters, such an architecture is used for low node-degree applications. For higher-degree nodes, the optical loss becomes a barrier, such that a WSS is also used for the incoming signals, resulting in the route-and-select architecture. A dual-configuration WSS thus benefits a route-and-select ROADM design.
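The loss argument can be made concrete with the ideal power-split formula: a passive 1xN splitter divides the light N ways, so each output sees at least 10 x log10(N) dB of loss. The sketch below tabulates that lower bound; real splitters add excess loss on top, and the port counts chosen are illustrative rather than taken from any specific ROADM design.

```python
import math


def ideal_splitter_loss_db(n_ports):
    """Minimum insertion loss of a passive 1xN power splitter, in dB."""
    return 10 * math.log10(n_ports)


for n_ports in (2, 4, 9, 20):                 # illustrative port counts
    loss = ideal_splitter_loss_db(n_ports)
    print(f"1x{n_ports:<2} splitter: at least {loss:.1f} dB")
```

The loss grows with every added direction, which is why higher-degree nodes replace the splitter with a second WSS.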

Finisar's WSS module is sufficiently slim that it occupies a single-chassis slot, unlike existing designs that require two. "It enables system designers to free up slots for other applications such as transponder line cards inside their chassis," says Ward.

The dual WSS modules support flexible grid and come in 2x1x20, 2x1x9 and 2x8x12 configurations. "There are some architectures being discussed for add/ drop that would utilise the WSS in that [2x8x12] configuration," says Ward.

The ECOC demonstrations included different traffic patterns passing through the WSS, as well as attenuation control and the management of super-channels.

Finisar also showed an accompanying high-resolution OCM that also occupies a single-chassis slot. The OCM can resolve the spectral power of channels as narrow as 6.25GHz. The OCM, a single-channel device, can scan a fibre's C-band in 200ms.

A rule of thumb is that an OCM is used for each WSS. A customer often monitors channels on a single fibre, says Ward, and must pick which fibres to monitor. The OCM is typically connected to each fibre or to an optical switch to scan multiple fibres.

"People are looking to use the spectrum in a fibre in a much more optimised way," says Ward. The advent of flexible grid and super-channels requires a much tighter packing of channels. "So, being able to see and identify all of the key elements of these channels and manage them is going to become more and more difficult," he says, with the issue growing in importance as operators move to line speeds greater than 100 Gig.

Finisar also used the ECOC show to demonstrate repeater-less transmission using an amplifier that can span 400km of fibre. Such an amplifier is used in harsh environments where it is difficult to build amplifier huts. The amplifier can also be used for certain submarine applications known as 'festooning' where the cable follows a coastline and returns to land each time amplification is required. Using such a long-span amplifier reduces the overall hops back to the coast.

Xtera demonstrates 40 Terabit using Raman amplification

- Xtera's Raman amplification boosts capacity and reach

- 40 Terabit optical transmission over 1,500km in Verizon trial

- 64 Terabit over 1,500km in 2015 using a Raman module operating over 100nm of spectrum

Herve Fevrier

System vendor Xtera is using all these techniques as part of its Nu-Wave Optima system but also uses Raman amplification to extend capacity and reach.

"We offer capacity and reach using a technology - Raman amplification - that we have been pioneering and working on for 15 years," says Herve Fevrier, executive vice president and chief strategy officer at Xtera.

The distributed amplification profile of Raman (blue) compared to an EDFA's point amplification. Source: Xtera

One way vendors are improving the amplification for 100 Gigabit and greater deployments is to use a hybrid EDFA/Raman design. This benefits the amplifier's power efficiency and the overall transmission reach but the spectrum width is still dictated by Erbium to around 35nm. "And Raman only helps you have spans which are a bit longer," says Fevrier.

Meanwhile, Xtera is working on programmable cards that will support the various transmission options. Xtera will offer a 100nm amplifier module this year that extends its system capacity to 24 Terabit (240, 100 Gig channels). Also planned this year is a super-channel PM-QPSK implementation that will extend transmissions to 32 Terabit using the 100nm amplifier module. In 2015 Xtera will offer PM-16-QAM that will deliver 48 Terabit over 2,000km and 64 Terabit over 1,500km.

Verizon on 100G+ optical transmission developments

Source: Gazettabyte

Feature: 100 Gig and Beyond. Part 1:

Verizon's Glenn Wellbrock discusses 100 Gig deployments and higher speed optical channel developments for long haul and metro.

The number of 100 Gigabit wavelengths deployed in the network has continued to grow in 2013.

According to Ovum, 100 Gigabit has become the wavelength of choice for large wavelength-division multiplexing (WDM) systems, with spending on 100 Gigabit now exceeding 40 Gigabit spending. LightCounting forecasts that 40,000, 100 Gigabit line cards will be shipped this year, 25,000 in the second half of the year alone. Infonetics Research, meanwhile, points out that while 10 Gigabit will remain the highest-volume speed, the most dramatic growth is at 100 Gigabit. By 2016, the majority of spending in long-haul networks will be on 100 Gigabit, it says.

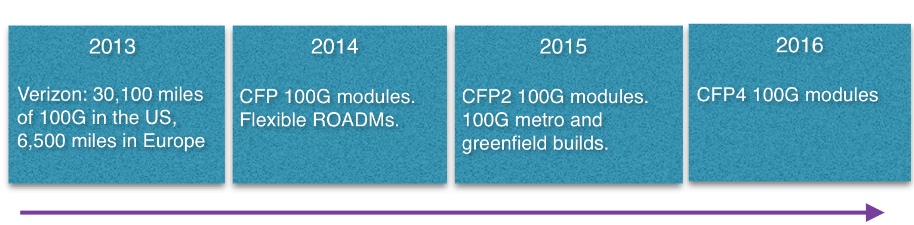

The market research firms' findings align with Verizon's own experience deploying 100 Gigabit. The US operator said in September that it had added 4,800 miles of 100 Gigabit to its global IP network during the first half of 2013, to total 21,400 miles in the US network and 5,100 miles in Europe. Verizon expects to deploy another 8,700 miles of 100 Gigabit in the US and 1,400 miles more in Europe by year end.

"We expect to hit the targets; we are getting close," says Glenn Wellbrock, director of optical transport network architecture and design at Verizon.

Verizon says several factors are driving the need for greater network capacity, including its FiOS bundled home communication services, Long Term Evolution (LTE) wireless and video traffic. But what triggered Verizon to upgrade its core network to 100 Gig was converging its IP networks and the resulting growth in traffic. "We didn't do a lot of 40 Gig [deployments] in our core MPLS [Multiprotocol Label Switching] network," says Wellbrock.

The cost of 100 Gigabit was another factor: A 100 Gigabit long-haul channel is now cheaper than ten, 10 Gig channels. There are also operational benefits using 100 Gig such as having fewer wavelengths to manage. "So it is the lower cost-per-bit plus you get all the advantages of having the higher trunk rates," says Wellbrock.

Verizon expects to continue deploying 100 Gigabit. First, it has a large network and much of the deployment will occur in 2014. "Eventually, we hope to get a bit ahead of the curve and have some [capacity] headroom," says Wellbrock.

Super-channel trials

Operators, working with optical vendors, are trialling super-channels and advanced modulation schemes such as 16-QAM (quadrature amplitude modulation). Such trials involve links carrying data in multiples of 100 Gig: 200 Gig, 400 Gig, even a Terabit.

Super-channels are already carrying live traffic. Infinera's DTN-X system delivers 500 Gig super-channels using quadrature phase-shift keying (QPSK) modulation. Orange has a 400 Gigabit super-channel link between Lyon and Paris. The 400 Gig super-channel comprises two carriers, each carrying 200 Gig using 16-QAM, implemented using Alcatel-Lucent's 1830 photonic service switch platform and its photonic service engine (PSE) DSP-ASIC.

"We could take advantage of 200 Gig or 400 Gig or 500 Gig today," says Wellbrock. "As soon as it is cost effective, you can use it because you can put multiple 100 Gig channels on there and multiplex them."

The issue with 16-QAM, however, is its limited reach using existing fibre and line systems - 500-700km - compared to QPSK's 2,500+ km before regeneration. "It [16-QAM] will only work in a handful of applications - 25 percent, something of this nature," says Wellbrock. This is good for a New York to Boston link, he says, but not New York to Chicago. "From our end it is pretty simple, it is lowest cost," says Wellbrock. "If we can reduce the cost, we will use it [16-QAM]." However, if the reach requirement cannot be met, the operator will not go to the expense of putting in signal regenerators to use 16-QAM, he says.

Earlier this year Verizon conducted a trial with Ciena using 16-QAM. The goals were to test 16-QAM alongside live traffic and determine whether the same line card would work at 100 Gig using QPSK and 200 Gig using 16-QAM. "The good thing is you can use the same hardware; it is a firmware setting," says Wellbrock.
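The trade-off Wellbrock describes, one line card that runs at 200 Gig where 16-QAM reaches and falls back to 100 Gig QPSK where it does not, can be sketched as a simple lookup. The reach limits use the figures quoted above (700km as the upper end of the 16-QAM range, 2,500km for QPSK); the route lengths and the function name are assumptions for illustration, not Verizon data.

```python
# (format, Gbps per carrier, assumed maximum reach in km before regeneration)
FORMATS = [
    ("16-QAM", 200, 700),    # upper end of the quoted 500-700km range
    ("QPSK", 100, 2500),     # quoted as 2,500+ km
]


def pick_format(link_km):
    """Return (format, Gbps) for the highest-rate format that reaches link_km."""
    for name, gbps, reach_km in FORMATS:      # ordered highest capacity first
        if link_km <= reach_km:
            return name, gbps
    return None                               # would need regeneration


# Route lengths are rough assumptions, not Verizon figures
for route, km in [("New York - Boston", 350), ("New York - Chicago", 1300)]:
    print(f"{route} ({km} km): {pick_format(km)}")
```

Because the choice is a firmware setting on the same hardware, such a rule could in principle be applied per link without changing line cards.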

100 Gig in the metro

Verizon says there is already sufficient traffic pressure in its metro networks to justify 100 Gig deployments. Some of Verizon's bigger metro locations comprise up to 200 reconfigurable optical add/ drop multiplexer (ROADM) nodes. Each node is typically a central office connected to the network via a ROADM, varying from a two-degree to an eight-degree design.

"Not all the 200 nodes would need multiple 100 Gig channels but in the core of the network, there is a significant amount of capacity that needs to be moved around," says Wellbrock. "100 Gig will be used as soon as it is cost-effective."

Unlike long-haul, 100 Gigabit in the metro remains costlier than ten 10 Gig channels. That said, Verizon has deployed metro 100 Gig when absolutely necessary, for example connecting two router locations that need to be connected using 100 Gig. Here Verizon is willing to pay extra for such links.

"By 2015 we are really hoping that the [metro] crossover point will be reached, that 100 Gig will be more cost effective in the metro than ten times 10 [Gig]." Verizon will build a new generation of metro networks based on 100 Gig or 200 Gig or 400 Gig using coherent receivers rather than use existing networks based on conventional 10 Gig links to which 100 Gig is added.

"We feel that 2015 is when we can justify a new, greenfield network and that 100 Gig or versions of that - 200 Gig or 400 Gig - will be cheap enough to make sense."

Data Centres

The build-out of data centres is not a significant factor driving 100 Gig demand. The largest content service providers do use tens of 100 Gigabit wavelengths to link their mega data centres but they typically have their own networks that connect relatively few sites.

"If you have lots of data centres, the traffic itself is more distributed, as are the bandwidth requirements," says Wellbrock.

Verizon has over 220 data centres, most being hosting centres. The data demand between many of the sites is relatively small and is served with 10 Gigabit links. "We are seeing the same thing with most of our customers," says Wellbrock.

Technologies

System vendors continue to develop cheaper line cards to meet the cost-conscious metro requirements. Module developments include smaller 100 Gig 4x5-inch MSA transponders, 100 Gig CFP modules and component developments for line side interfaces that fit within CFP2 and CFP4 modules.

"They are all good," says Wellbrock when asked which of these 100 Gigabit metro technologies are important for the operator. "We would like to get there as soon as possible."

The CFP4 may be available by late 2015 but more likely in 2016, and will reduce significantly the cost of 100 Gig. "We are assuming they are going to be there and basing our timelines on that," he says.

Greater line card port density is another benefit once 100 Gig CFP2 and CFP4 line side modules become available. "Lower power and greater density which is allowing us to get more bandwidth on and off the card," says Wellbrock.

Existing switches and routers are bandwidth-constrained: they have more traffic capability than the faceplate can provide. "The CFPs, the way they are today, you can only get four on a card, and a lot of the cards will support twice that much capacity," says Wellbrock.

With the smaller form factor CFP2 and CFP4, 1.2 and 1.6 Terabit cards will become possible from 2015. Another possible development is a 400 Gigabit CFP which would achieve similar overall capacity gains.

Coherent, not just greater capacity

Verizon is looking for greater system integration and continues to encourage industry commonality in optical component building blocks to drive down cost and promote scale.

Indeed Verizon believes that industry developments such as MSAs and standards are working well. Wellbrock prefers standardisation to custom designs like 100 Gigabit direct detection modules or company-specific optical module designs.

Wellbrock stresses the importance of coherent receiver technology not only in enabling higher capacity links but also a dynamic optical layer. The coherent receiver adds value when it comes to colourless, directionless, contentionless (CDC) and flexible grid ROADMs.

"If you are going to have a very cost-effective 100 Gigabit because the ecosystem is working towards similar solutions, then you can say: 'Why don't I add in this agile photonic layer?' and then I can really start to do some next-generation networking things." This is only possible, says Wellbrock, because of the tunable filter offered by a coherent receiver, unlike direct detection technology with its fixed-filter design.

"Today, if you want to move from one channel to the next - wavelength 1 to wavelength 2 - you have to physically move the patch cord to another filter," says Wellbrock. "Now, the [coherent] receiver can simply tune the local oscillator to channel 2; the transmitter is full-band tunable, and now the receiver is full-band tunable as well." This tunability can be enabled remotely rather than requiring an on-site engineer.

Such wavelength agility promises greater network optimisation.
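To give a feel for what retuning the local oscillator involves, the sketch below computes channel centre frequencies on the ITU-T G.694.1 fixed grid, where channel n sits at 193.1 THz + n x spacing. Wellbrock does not say which grid or channel numbers Verizon uses, so the 50GHz spacing, the channel indices and the function names are all assumptions.

```python
C_M_PER_S = 299_792_458          # speed of light in vacuum
ANCHOR_THZ = 193.1               # ITU-T G.694.1 anchor frequency


def channel_frequency_thz(n, spacing_ghz=50.0):
    """Centre frequency of grid channel n (n may be negative)."""
    return ANCHOR_THZ + n * spacing_ghz / 1000.0


def wavelength_nm(frequency_thz):
    """Vacuum wavelength corresponding to an optical frequency."""
    return C_M_PER_S / (frequency_thz * 1e12) * 1e9


# Retune the receiver's local oscillator from channel 1 to channel 2
for n in (1, 2):
    f = channel_frequency_thz(n)
    print(f"channel {n}: {f:.3f} THz ({wavelength_nm(f):.2f} nm)")
```

Moving between adjacent channels is a shift of only 50GHz in the local oscillator, which is why it can be done remotely in software rather than by moving a patch cord.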

"How do we perhaps change some of our sparing policy? How do we change some of our restoration policies so that we can take advantage of that agile photonics layer?" says Wellbrock. "That is something that is only becoming available because of the coherent 100 Gigabit receivers."

Merits and challenges of optical transmission at 64 Gbaud

u2t Photonics announced recently a balanced detector that supports 64Gbaud. This promises coherent transmission systems with double the data rate. But even if the remaining components - the modulator and DSP-ASIC capable of operating at 64Gbaud - were available, would such an approach make sense?

Gazettabyte asked system vendors Transmode and Ciena for their views.

Transmode:

Transmode points out that 100 Gigabit dual-polarisation, quadrature phase-shift keying (DP-QPSK) using coherent detection has several attractive characteristics as a modulation format.

It can be used in the same grid as 10 Gigabit-per-second (Gbps) and 40Gbps signals in the C-band. It also has a similar reach as 10Gbps by achieving a comparable optical signal-to-noise ratio (OSNR). Moreover, it has superior tolerance to chromatic dispersion and polarisation mode dispersion (PMD), enabling easier network design, especially with meshed networking.

The IEEE has started work standardising the follow-on speed of 400 Gigabit. "This is a reasonable step since it will be possible to design optical transmission systems at 400 Gig with reasonable performance and cost," says Ulf Persson, director of network architecture in Transmode's CTO office.

Moving to 100Gbps was a large technology jump that involved advanced technologies such as high-speed analogue-to-digital (A/D) converters and advanced digital signal processing, says Transmode. But it kept the complexity within the optical transceivers which could be used with current optical networks. It also enabled new network designs due to the advanced chromatic dispersion and PMD compensations made possible by the coherent technology and the DSP-ASIC.

For 400Gbps, the transition will be simpler. "Going from 100 Gig to 400 Gig will re-use a lot of the technologies used for 100 Gig coherent," says Magnus Olson, director of hardware engineering.

So even if there will be some challenges with higher-speed components, the main challenge will move from the optical transceivers to the network, he says. That is because whatever modulation format is selected for 400Gbps, it will not be possible to fit that signal into current networks keeping both the current channel plan and the reach.

"If you choose a 400 Gigabit single carrier modulation format that fits into a 50 Gig channel spacing, the optical performance will be rather poor, resulting in shorter transmission distances," says Persson. Choosing a modulation format that has a reasonable optical performance will require a wider passband. Inevitably there will be a tradeoff between these two parameters, he says.

This will likely lead to different modulation formats being used at 400 Gig, depending on the network application targeted. Several modulation formats are being investigated, says Transmode, but the two most discussed are:

- 4x100Gbps super-channels modulated with DP-QPSK. This is the same as today's modulation format with the same optical performance as 100Gbps, and requires a channel width of 150GHz.

- 2x200Gbps super-channels, modulated with DP-16-QAM. This will have a passband of about 75GHz. It is also possible to put each of the two channels in separate 50GHz-spaced channels and use existing networks. The effective bandwidth will then be 100GHz for a 400Gbps signal. However, the OSNR performance for this format is about 5-6 dB worse than the 100Gbps super-channels. That equates to about a quarter of the reach at 100Gbps.
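The figures in the two options above can be checked with a few lines of arithmetic. The sketch below computes spectral efficiency as bit rate divided by occupied bandwidth, and converts an OSNR penalty in dB into a relative reach under the simplifying assumption that reach scales inversely with the required OSNR in linear units. That scaling rule and the function names are assumptions, not Transmode's model.

```python
def spectral_efficiency(bit_rate_gbps, bandwidth_ghz):
    """Spectral efficiency in bit/s/Hz."""
    return bit_rate_gbps / bandwidth_ghz


def relative_reach(osnr_penalty_db):
    """Reach relative to the reference format.

    Assumes reach is inversely proportional to required OSNR (linear units).
    """
    return 10 ** (-osnr_penalty_db / 10)


options = [
    ("4x100G DP-QPSK, 150GHz", 400, 150),
    ("2x200G DP-16-QAM, 75GHz", 400, 75),
    ("2x200G DP-16-QAM, two 50GHz slots", 400, 100),
]
for label, gbps, ghz in options:
    print(f"{label}: {spectral_efficiency(gbps, ghz):.2f} bit/s/Hz")

for penalty_db in (5, 6):
    print(f"{penalty_db} dB penalty -> {relative_reach(penalty_db):.2f} of the reach")
```

Under that assumption a 6 dB penalty leaves roughly 25 percent of the reach, consistent with the "about a quarter" quoted above.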

As a result, 100Gbps super-channels are more suited to long distance systems while 200Gbps super-channels are applicable to metro/ regional systems.

Since 200Gbps super-channels can use standard 50GHz spacing, they can be used in existing metro networks carrying a mix of traffic including 10Gbps and 40Gbps light paths.

"Both 400 Gig alternatives mentioned have a baud rate of about 32 Gig and therefore do not require a 64 Gbaud photo detector," says Olson. "If you want to go to a single channel 400G with 16-QAM or 32-QAM modulation, you will get 64Gbaud or 51Gbaud rate and then you will need the 64 Gig detector."

The single channel formats, however, have worse OSNR performance than 200Gbps super-channels, about 10-12 dB worse than 100Gbps, says Transmode, and have a similar spectral efficiency as 200Gbps super-channels. "So it is not the most likely candidates for 400 Gig," says Olson. "It is therefore unclear for us if this detector will have a use in 400 Gigabit transmission in the near future."
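The symbol rates Olson quotes follow from dividing the line rate by the number of bits carried per symbol. The sketch below assumes a gross line rate of 128Gbps for every 100Gbps of payload, a value chosen so that 100 Gig DP-QPSK comes out at 32 Gbaud as stated; the exact overhead is an assumption, as is the function name.

```python
import math

GROSS_GBPS_PER_100G = 128.0      # assumed line rate including FEC and framing


def symbol_rate_gbaud(payload_gbps, constellation_points, polarisations=2):
    """Symbol rate needed to carry payload_gbps on one optical carrier."""
    bits_per_symbol = polarisations * math.log2(constellation_points)
    gross_gbps = payload_gbps / 100.0 * GROSS_GBPS_PER_100G
    return gross_gbps / bits_per_symbol


cases = [
    ("100G carrier, DP-QPSK", 100, 4),
    ("200G carrier, DP-16-QAM", 200, 16),
    ("400G single carrier, DP-16-QAM", 400, 16),
    ("400G single carrier, DP-32-QAM", 400, 32),
]
for label, gbps, points in cases:
    print(f"{label}: {symbol_rate_gbaud(gbps, points):.1f} Gbaud")
```

Both super-channel options stay at 32 Gbaud per carrier, while the single-carrier options land at 64 and about 51 Gbaud, which is where a 64 Gbaud detector would be needed.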

Transmode points out that the state-of-the-art bit rate has traditionally been limited by the available optics. This has kept the baud rate low by using higher order modulation formats that support more bits per symbol to enable existing, affordable technology to be used.

"But the price you have to pay, as you can not fool physics, is shorter reach due to the OSNR penalty," says Persson.

Now the challenges associated with the DSP-ASIC development will be equally important as the optics to further boost capacity.

The bundling of optical carriers into super-channels is an approach that scales well beyond what can be accomplished with improved optics. "Again, we have to pay the price, in this case eating greater portions of the spectrum," says Persson.

Improving the bandwidth of the balanced detector to the extent done by u2t is a very impressive achievement. But the detector alone will not make it into new products; modulators and a faster DSP-ASIC will also be required.

"From an industry point of view, a metro-centric cost reduction of 100Gbps coherent is currently more important than increasing the bit rate to 400Gbps," says Olson. "When 100 Gig coherent costs less than 10x10 Gig, both in dollars and watts, the technology will have matured to again allow for scaling the bit rate, using technology that suits the application best."

Ciena:

How the optical performance changes going from 32Gbaud to 64Gbaud depends largely on how well the DSP-ASIC can mitigate the dispersion penalties that get worse with speed as the duration of a symbol narrows.

"I would also expect a higher sensitivity would be needed also, so another fundamental impact," says Joe Berthold, vice president of network architecture at Ciena. "We have quite a bit of margin with the WaveLogic 3 [DSP-ASIC] for many popular network link distances, so it may not be a big deal."

With a good implementation of a coherent transmission system, the reach is primarily a function of the modulation format. BPSK goes twice as far as QPSK which goes about 4.5 times as far as 16-QAM, says Berthold.
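Berthold's ratios can be turned into a quick relative-reach table. The sketch below scales everything from a QPSK reach supplied by the caller; the 2,500km used in the example is an illustrative input rather than a Ciena figure, and the function name is hypothetical.

```python
# Reach of each format relative to QPSK, from the ratios quoted above
REACH_RELATIVE_TO_QPSK = {
    "BPSK": 2.0,            # twice as far as QPSK
    "QPSK": 1.0,
    "16-QAM": 1.0 / 4.5,    # QPSK goes about 4.5 times as far
}


def estimate_reach_km(modulation, qpsk_reach_km):
    """Scale a known QPSK reach to another modulation format."""
    return qpsk_reach_km * REACH_RELATIVE_TO_QPSK[modulation]


QPSK_REACH_KM = 2500        # illustrative input, not a Ciena figure
for fmt in REACH_RELATIVE_TO_QPSK:
    print(f"{fmt}: about {estimate_reach_km(fmt, QPSK_REACH_KM):.0f} km")
```

The ratios imply that BPSK reaches about nine times as far as 16-QAM, which is why BPSK is reserved for the longest links.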

"On fibres without enough dispersion, a higher baud rate will go 25 percent further than the same modulation format at half of that baud rate, due to the nonlinear propagation effects," says Berthold. This is the opposite of what occurred at 10 Gigabit incoherent. On fibres with plenty of local dispersion, the difference becomes marginal, approximately 0.05 dB, according to Ciena.

Regarding how spectral efficiency changes with symbol rate, doubling the baud rate doubles the spectral occupancy, says Berthold, so the benefit of upping the baud rate is that fewer components are needed for a super-channel.
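Berthold's point can be sketched numerically. The snippet below scales spectral occupancy linearly with baud rate from the reference he gives for WaveLogic 3 (75GHz for a 64 Gbaud DP-QPSK signal) and counts the carriers needed for a 200Gbps super-channel. It assumes a 32 Gbaud DP-QPSK carrier delivers 100Gbps and that each carrier needs its own laser; the function names are hypothetical.

```python
import math

REF_BAUD_GBAUD = 64.0        # reference point quoted by Ciena
REF_SPECTRUM_GHZ = 75.0


def occupied_spectrum_ghz(baud_gbaud):
    """Spectrum per carrier, assuming it scales linearly with baud rate."""
    return baud_gbaud * REF_SPECTRUM_GHZ / REF_BAUD_GBAUD


def dp_qpsk_carrier_gbps(baud_gbaud):
    """Payload per DP-QPSK carrier, assuming 32 Gbaud carries 100Gbps."""
    return 100.0 * baud_gbaud / 32.0


def superchannel_plan(target_gbps, baud_gbaud):
    """Return (carriers, total spectrum in GHz) for a DP-QPSK super-channel."""
    carriers = math.ceil(target_gbps / dp_qpsk_carrier_gbps(baud_gbaud))
    return carriers, carriers * occupied_spectrum_ghz(baud_gbaud)


for baud in (32.0, 64.0):
    carriers, spectrum = superchannel_plan(200, baud)
    print(f"{baud:.0f} Gbaud: {carriers} carrier(s), {spectrum:.1f} GHz in total")
```

The total spectrum is the same in both cases; what changes is the number of lasers, modulators and receivers needed.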

"Of course if the cost of the higher speed components are higher this benefit could be eroded," he says. "So the 200 Gbps signal using DP-QPSK at 64 Gbaud would nominally require 75GHz of spectrum given spectral shaping that we have available in WaveLogic 3, but only require one laser."

Does Ciena have a view as to when 64Gbaud systems will be deployed in the network?

Berthold says this is hard to answer. "It depends on expectations that all elements of the signal path, from modulators and detectors to A/D converters, to DSP circuitry, all work at twice the speed, and you get this speedup for free, or almost."

The question has two parts, he says: When could it be done? And when will it provide a significant cost advantage? "As CMOS geometries narrow, components get faster, but mask sets get much more expensive," says Berthold.

u2t Photonics pushes balanced detectors to 70GHz

- u2t's 70GHz balanced detector supports 64Gbaud for test and measurement and R&D

- The company's gallium arsenide modulator and next-generation receiver will enable 100 Gigabit long-haul in a CFP2

"The performance [of gallium arsenide] is very similar to the lithium niobate modulator"

Jens Fiedler, u2t Photonics

u2t Photonics has announced a balanced detector that operates at 70GHz. Such a bandwidth supports 64 Gigabaud (Gbaud), twice the symbol rate of existing 100 Gigabit coherent optical transmission systems.

The German company announced a coherent photo-detector capable of 64Gbaud in 2012 but that had an operating bandwidth of 40GHz. The latest product uses two 70GHz photo-detectors and different packaging to meet the higher bandwidth requirements.

"The achieved performance is a result of R&D work using our experience with 100GHz single photo-detectors and balanced detector technology at a lower speed,” says Jens Fiedler, executive vice president sales and marketing at u2t Photonics.

The monolithically-integrated balanced detector has been sampling since March. The markets for the device are test and measurement systems and research and development (R&D). "It will enable engineers to work on higher-speed interface rates for system development," says Fiedler.

The balanced detector could be used in next-generation transmission systems operating at 64 Gbaud, doubling the current 100 Gigabit-per-second (Gbps) data rate while using the same dual-polarisation, quadrature phase-shift keying (DP-QPSK) architecture.

A 64Gbaud DP-QPSK coherent system would halve the number of channels needed in a 400Gbps or 1 Terabit super-channel. In turn, using 16-QAM instead of QPSK would halve the channel count again: a single dual-polarisation 16-QAM channel at 64Gbaud would deliver 400Gbps, while three channels would deliver 1.2Tbps.
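The channel counts follow from baud rate, bits per symbol and the two polarisations. The sketch below is a rough model, not a vendor calculation: it assumes the payload fraction implied by today's 32Gbaud DP-QPSK systems (128Gbps on the line carrying 100Gbps of payload) holds at every rate, and all names are illustrative.

```python
import math

POLARISATIONS = 2
BITS_PER_SYMBOL = {"QPSK": 2, "16-QAM": 4}

# Assumption: 32 Gbaud DP-QPSK puts 128 Gbps on the line for 100 Gbps of
# payload, and the same payload fraction applies at 64 Gbaud and with 16-QAM.
PAYLOAD_FRACTION = 100 / (32 * BITS_PER_SYMBOL["QPSK"] * POLARISATIONS)


def net_rate_gbps(baud_gbaud, fmt):
    """Payload rate of one dual-polarisation carrier."""
    line_rate = baud_gbaud * BITS_PER_SYMBOL[fmt] * POLARISATIONS
    return line_rate * PAYLOAD_FRACTION


def carriers_needed(target_gbps, baud_gbaud, fmt):
    """Number of carriers (channels) needed to reach a target payload rate."""
    return math.ceil(target_gbps / net_rate_gbps(baud_gbaud, fmt))


for baud, fmt in [(32, "QPSK"), (64, "QPSK"), (64, "16-QAM")]:
    print(baud, fmt, carriers_needed(400, baud, fmt), "carrier(s) for 400 Gbps")
```

Under these assumptions, 400Gbps needs four carriers at 32Gbaud DP-QPSK, two at 64Gbaud DP-QPSK and one at 64Gbaud DP-16-QAM.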

However, for such a system to be deployed commercially the remaining components - the modulator, device drivers and the DSP-ASIC - would need to be able to operate at twice the 32Gbaud rate; something that is still several years out. That said, Fiedler points out that the industry is also investigating baud rates in between 32 Gig and 64 Gig.

Gallium arsenide modulator

u2t acquired gallium arsenide modulator technology in June 2009, enabling the company to offer coherent transmitter as well as receiver components.

At OFC/NFOEC 2013, u2t Photonics published a paper on its high-speed gallium arsenide coherent modulator. The company's design is based on the Mach-Zehnder modulator specification of the Optical Internetworking Forum (OIF) for 100 Gigabit DP-QPSK applications.

The DP-QPSK optical modulation includes a rotator on one arm and a polarisation beam combiner at the output. u2t has decided to support an OIF compatible design with a passive polarisation rotator and combiner which could also be integrated on chip. The resulting coherent modulator is now being tested before being integrated with the free space optics to create a working design.

"The performance [of gallium arsenide] is very similar to the lithium niobate modulator," says Fiedler. "Major system vendors have considered the technology for their use and that is still ongoing."

The gallium arsenide modulator is considerably smaller than the equivalent lithium niobate design. Indeed u2t expects the technology's power and size requirements, along with the company's coherent receiver, to fit within the CFP2 optical module. Such a pluggable 100 Gigabit coherent module would meet long-haul requirements, says Fiedler.

The gallium arsenide modulator can also be used within the existing line-side 100 Gigabit 5x7-inch MSA coherent transponder. Fiedler points out that by meeting the OIF specification, there is no space saving benefit using gallium arsenide since both modulator technologies fit within the same dimensioned package. However, the more integrated gallium arsenide modulator may deliver a cost advantage, he says.

Another benefit of using a gallium arsenide modulator is its optical performance stability with temperature. "It requires some [temperature] control but it is stable," says Fiedler.

Coherent receiver

u2t's current 100Gbps coherent receiver product uses two chips, each comprising the 90-degree hybrid and a balanced detector. "That is our current design and it is selling in volume," says Fiedler. "We are now working on the next version, according to the OIF specification, which is size-reduced."

The resulting single-chip design will cost less and fit within a CFP2 pluggable module.

The receiver might be small enough to fit within the even smaller CFP4 module, concludes Fiedler.

Infinera speeds up network restoration

- Claimed to be the only hardware implementation of the Shared Mesh Protection protocol

- Provides network-wide protection against multiple network failures

- The chip is already within the DTN-X system; protocol will be activated this year

Pravin Mahajan, Infinera

Infinera has developed a chip to speed up network restoration following faults.

The chip implements the Shared Mesh Protection (SMP) protocol being developed by the International Telecommunication Union (ITU) and the Internet Engineering Task Force (IETF). Infinera believes it is the only vendor with hardware acceleration of the protocol.

The SMP standard is still being worked on and will be completed this year. Infinera demonstrated its hardware SMP implementation at OFC/NFOEC 2013 and will activate the scheme in operators' networks using a platform software upgrade this year.

The chip, dubbed Fast Shared Mesh Protection (FastSMP), is sprinkled across cards within Infinera's DTN-X platform and will be linked to other FastSMP ICs across the network. The FastSMP chips exchange signalling information and use internal look-up tables with pre-calculated routing data to determine the required protection action when one or more network failures occur.

Network faults

The causes of network faults range from fibre cuts from construction work to natural disasters such as Hurricane Sandy and the Asia Pacific tsunami. Level 3 Communications cited in 2011 that squirrels were the second most common cause of fibre cuts after construction work. The squirrels, chewing through fibre, accounted for 17 percent of all cuts. Meanwhile, one Indian service provider says it experiences 100 fibre cuts nationwide each day, according to Infinera.

Operators are also having to share their network maps with enterprises that want to assess the risk based on geography before choosing a service provider. "End customers no longer necessarily trust the service level agreements they have with operators," says Pravin Mahajan, director, corporate marketing and messaging at Infinera. In riskier regions, for example those prone to earthquakes, enterprises may choose two operators. "A form of 1+1 protection," says Mahajan.

Operators want resilient networks that adapt to faults quickly, ideally within 50ms, without adding extra cost.

Traditional resiliency schemes include SONET/SDH’s 1+1 protection. This meets the sub-50ms requirement but addresses single faults only and requires dedicated back-up for each circuit. At the IP/MPLS (Internet Protocol/ Multiprotocol Label Switching) layer, the MPLS Fast Re-Route scheme caters for multiple failures and is sub-50ms. But it only addresses local faults, not the full network. And being packet-based - at a higher layer of the network - the scheme is costlier to implement.

"End customers no longer necessarily trust the service level agreements they have with operators"

Infinera's protection scheme uses its digital optical networking approach based on its photonic integrated circuits (PICs) coupled with Optical Transport Networking (OTN). OTN resides between the packet and optical layers, and using a mesh network topology, it can handle multiple failures. By sharing bandwidth at the transport layer, the approach is cheaper than at the packet layer. But being software-based, restoration takes seconds.

Infinera has sped up the scheme by implementing SMP with its chip such that it meets the 50ms goal.

FastSMP chip

Infinera plans for multiple failures using the Generalized Multiprotocol Label Switching (GMPLS) control plane. “The same intelligence is now implemented in hardware [using the FastSMP processor],” says Mahajan.

The chip is on each 500 Gigabit-per-second (Gbps) line card, within the platform's OTN switch fabric, the client side and as part of the controller. The FastSMP, described as a co-processor to the CPU, hosts look-up tables with rules as to what should happen with each failure. The chips, located in the platform and across the network, then adjust to the back-up plan for each service failure.

Infinera says that the protection is at the service level not at the link level. "It does this at ODU [OTN's optical data unit] granularity," says Mahajan; each circuit can hold different sized services, 2.5 Gigabit-per-second (Gbps) or 10Gbps for example, all carried within a 100Gbps light path. "By defining failure scenarios on a per-service basis, you now need to put all these entries in hardware," says Mahajan.

To program the chip, network failures are simulated using Infinera's network planning tool to determine the required back-up schemes. These can be chosen based on shortest path or lowest latency, for example.

The GMPLS control plane protocol determines the rules as to how the network should be adapted and these are written on-chip. When a failure occurs, the chip detects the failure and performs the required actions.
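The idea of pre-computed rules held in a look-up table can be sketched as follows. This is a toy model under stated assumptions, not Infinera's data format: the service identifier, link names, paths and function names are all invented for illustration.

```python
from typing import Dict, FrozenSet, Iterable, List, Optional, Tuple

BackupPath = List[str]
# Key: (service id, set of failed links). Value: pre-computed backup path.
ProtectionTable = Dict[Tuple[str, FrozenSet[str]], BackupPath]


def build_table() -> ProtectionTable:
    """Stand-in for the offline planning step that simulates failures."""
    return {
        ("odu2-42", frozenset({"A-B"})): ["A", "C", "B"],
        ("odu2-42", frozenset({"A-B", "A-C"})): ["A", "D", "B"],
    }


def on_failure(table: ProtectionTable, service: str,
               failed_links: Iterable[str]) -> Optional[BackupPath]:
    """Return the pre-planned backup path, or None if no entry exists.

    None models the case where the control plane has to compute a new plan.
    """
    return table.get((service, frozenset(failed_links)))


table = build_table()
print(on_failure(table, "odu2-42", ["A-B"]))
print(on_failure(table, "odu2-42", ["A-B", "A-C"]))
print(on_failure(table, "odu2-42", ["A-B", "A-C", "A-D"]))
```

Keying on the set of failed links means the response to a failure is a single table read rather than a path computation, which is the property that makes a hardware implementation attractive. The table grows with the number of services and failure scenarios covered.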

The FastSMP chip is already on all the DTN-X line cards Infinera has shipped and will be enabled using a software upgrade.

The GMPLS control plane recomputes backup paths after a failure has occurred. Typically no action is required but if several failures occur, the new GMPLS backup paths will be distributed to update the FastSMPs' tables. "Only on the third or fourth failure typically will a new backup plan be needed," says Mahajan.

In effect, the more meshed the network topology, the greater the number of failures that can be tolerated. "When you have three or four failures, you need to have new computation at the GMPLS control plane and then it can repopulate the backups for failures 3, 4, and 5," he says.

Instant bandwidth and FastSMP

Infinera is able to turn up bandwidth in real-time using its 500Gbps super-channel PIC. "We slice up the 500 Gig capacity available per line card into 100 Gig chunks," says Mahajan.

This feature, combined with FastSMP, aids operators dealing with failures once traffic is rerouted. The next backup route, if it is close to its full capacity, can have an extra 100 Gigabit of capacity added in case the link is called into use.
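The interaction between sliced capacity and protection can be modelled in a few lines. The sketch assumes five 100Gbps slices per line card, as described above; the class, the function and the 80 percent threshold are hypothetical and do not describe Infinera's software.

```python
from dataclasses import dataclass

SLICE_GBPS = 100
SLICES_PER_CARD = 5  # 500 Gbps of capacity per line card


@dataclass
class LineCard:
    active_slices: int = 1
    used_gbps: float = 0.0

    @property
    def active_gbps(self) -> int:
        """Capacity currently switched on."""
        return self.active_slices * SLICE_GBPS

    def activate_slice(self) -> bool:
        """Turn on one more 100 Gbps slice if any latent capacity remains."""
        if self.active_slices >= SLICES_PER_CARD:
            return False
        self.active_slices += 1
        return True


def top_up_if_nearly_full(card: LineCard, threshold: float = 0.8) -> bool:
    """Hypothetical policy: add a slice once usage passes the threshold."""
    if card.used_gbps >= threshold * card.active_gbps:
        return card.activate_slice()
    return False


backup_route = LineCard(active_slices=2, used_gbps=170)
print(top_up_if_nearly_full(backup_route), backup_route.active_gbps)
```

Here a backup route carrying 170Gbps on 200Gbps of active capacity is topped up to 300Gbps, so it has headroom should rerouted traffic arrive.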

A study based on an example 80-node network by ACG Research estimates that the Shared Mesh Protection scheme uses 30 percent fewer line-side ports compared to an equivalent network implementing the 1+1 protection scheme.

Cisco Systems demonstrates 100 Gigabit technologies

* Announces 100 Gigabit transmission over 4,800km

"CPAK helps accelerate the feasibility and cost points of deploying 100Gbps"

Stephen Liu, Cisco

Cisco Systems has announced that its 100 Gigabit coherent module has achieved a reach of 4,800km without signal regeneration. The span was achieved in the lab and the system vendor intends to verify the span in a customer's network.

The optical transmission system achieved a reach of 3,000km over low-loss fibre when first announced in 2012. The extended reach is not a result of a design upgrade, rather the 100 Gigabit-per-second (Gbps) module is being used on a link with Raman amplification.

Cisco says it started shipping its 100Gbps coherent module in June 2012. "We have shipped over 2,000 100Gbps coherent dense WDM ports," says Sultan Dawood, marketing manager at Cisco. The 100Gbps ports include line-side 100Gbps interfaces integrated within Cisco's ONS 15454 multi-service transport platform and its CRS core router supporting its IP-over-DWDM elastic core architecture.

Cisco has also coupled the ASR 9922 series router to the ONS 15454. "We are extending what we have done for IP and optical convergence in the core," says Stephen Liu, director of market management at Cisco. "There is now a common solution to the [network] edge."

None of Cisco's customers has yet used 100Gbps over a 3,000km span, never mind 4,800km. But the reach achieved is an indicator of the optical transmission performance. "The [distance] performance is really a proxy for usefulness," says Liu. "If you take that 3,000km over low-loss fibre, what that buys you is essentially a greater degree of tolerance for existing fibre in the ground."

Much industry attention is being given to the next-generation transmission speeds of 400Gbps and one Terabit. This requires support for super-channels - multi-carrier signals to transmit 400Gbps and one Terabit as well as flexible spectrum to pack the multi-carrier signals efficiently across the fibre's spectrum. But Cisco argues that faster transmission is only one part of the engineering milestones to be achieved, especially when 100Gbps deployment is still in its infancy.

To benefit 100Gbps deployments, Cisco has officially announced its own CPAK 100Gbps client-side optical transceiver after discussing the technology over the last year. "CPAK helps accelerate the feasibility and cost points of deploying 100Gbps," says Liu.

CPAK

The CPAK is Cisco's first optical transceiver using silicon photonics technology following its acquisition of LightWire. The CPAK is a compact optical transceiver to replace the larger and more power hungry 100Gbps CFP interfaces.

The CPAK is being launched at the same time as many companies are announcing CFP2 multi-source agreement (MSA) optical transceiver products. Cisco stresses that the CPAK conforms to the IEEE 100GBASE-LR4 and -SR10 100Gbps standards. Indeed at OFC/NFOEC it is demonstrating the CPAK interfacing with a CFP2.

The CPAK will be used across several Cisco platforms but the first implementation is for the ONS 15454.

The CPAK transceiver will be generally available in the summer of 2013.

Space-division multiplexing: the final frontier

System vendors continue to trumpet their achievements in long-haul optical transmission speeds and overall data carried over fibre.

Alcatel-Lucent announced earlier this month that France Telecom-Orange is using the industry's first 400 Gigabit link, connecting Paris and Lyon, while Infinera has detailed a trial demonstrating 8 Terabit-per-second (Tbps) of capacity over 1,175km and using 500 Gigabit-per-second (Gbps) super-channels.

"Integration always comes at the cost of crosstalk"

Peter Winzer, Bell Labs

Yet vendors already recognise that capacity in the frequency domain will only scale so far and that other approaches are required. One is space-division multiplexing: using multiple channels separated in space, implemented for example with multi-core fibre in which each core supports several modes.

"We want a technology that scales by a factor of 10 to 100," says Peter Winzer, director of optical transmission systems and networks research at Bell Labs. "As an example, a fibre with 10 cores with each core supporting 10 modes, then you have the factor of 100."

Space-division multiplexing

Alcatel-Lucent's research arm, Bell Labs, has demonstrated the transmission of 3.8Tbps using several data channels and an advanced signal processing technique known as multiple-input, multiple-output (MIMO).

In particular, 40 Gigabit quadrature phase-shift keying (QPSK) signals were sent over a six-spatial mode fibre using two polarisation modes and eight wavelengths to achieve 3.8Tbps. The overall transmission uses 400GHz of spectrum only.
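The headline figure can be reproduced from the numbers given, assuming each combination of spatial mode, polarisation and wavelength carries one 40 Gigabit signal. The variable names are illustrative, and the spectral efficiency shown is the aggregate over all spatial modes.

```python
rate_per_signal_gbps = 40
spatial_modes = 6
polarisations = 2
wavelengths = 8
spectrum_ghz = 400

# One signal per (mode, polarisation, wavelength) combination.
signal_count = spatial_modes * polarisations * wavelengths
total_gbps = rate_per_signal_gbps * signal_count

# Aggregate spectral efficiency across all spatial modes of the fibre.
aggregate_se = total_gbps / spectrum_ghz

print(f"{signal_count} signals, {total_gbps / 1000:.2f} Tbps, "
      f"{aggregate_se:.1f} b/s/Hz")
```

This gives 96 signals and 3.84Tbps, consistent with the 3.8Tbps quoted, at 9.6 b/s/Hz across the 400GHz used.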

Alcatel-Lucent stresses that the commercial deployment of space-division multiplexing remains years off. Moreover operators will likely first use already-deployed parallel strands of single-mode fibre, needing the advanced signal processing techniques only later.

"You might say that is trivial [using parallel strands of fibre], but bringing down the cost of that solution is not," says Winzer.

First, cost-effective integrated amplifiers will be needed. "We need to work on a single amplifier that can amplify, say, ten existing strands of single-mode fibre at the cost of two single-mode amplifiers," says Winzer. An integrated transponder will also be needed: one transponder that couples to 10 individual fibres at a much lower cost than 10 individual transponders.

With a super-channel transponder, several wavelengths are used, each with its own laser, modulator and detector. "In a spatial super-channel you have the same thing, but not, say, three different frequencies but three different spatial paths," says Winzer. Here photonic integration is the challenge to achieve a cost-effective transponder.

Once such integrated transponders and amplifiers become available, it will make sense to couple them to multi-core fibre. But operators will likely only start deploying new fibre once they exhaust their parallel strands of single-mode fibre.

Such integrated amplifiers and integrated transponders will present challenges. "The more and more you integrate, the more and more crosstalk you will have," says Winzer. "That is fundamental: integration always comes at the cost of crosstalk."

Winzer says there are several areas where crosstalk may arise. An integrated amplifier serving ten single-mode fibres will share a multi-core erbium-doped fibre instead of ten individual strands. Crosstalk between those closely-spaced cores is likely.

The transponder will be based on a large integrated circuit giving rise to electrical crosstalk. One way to tackle crosstalk is to develop components to a higher specification but that is more costly. Alternatively, signal processing on the received signal can be used to undo the crosstalk. Using electronics to counter crosstalk is attractive especially when it is the optics that dominate the design cost. This is where MIMO signal processing plays a role. "MIMO is the most advanced version of spatial multiplexing," says Winzer.

To address crosstalk caused by spatial multiplexing in the Bell Labs' demo, 12x12 MIMO was used. Bell Labs says that using MIMO does not add significantly to the overall computation. Existing 100 Gigabit coherent ASICs effectively use a 2x2 MIMO scheme, says Winzer: “We are extending the 2x2 MIMO to 2Nx2N MIMO.”

Only one portion of the current signal processing chain is impacted, he adds; a portion that consumes 10 percent of the power will need to increase by a certain factor. The resulting design will be more complex and expensive but not dramatically so, he says.
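The step from 2x2 to 2Nx2N can be made concrete by counting matrix entries. Using the entry count as a proxy for equaliser work is an assumption made here for illustration; the article says only that the affected portion grows "by a certain factor". Function names are illustrative.

```python
def mimo_dimension(spatial_modes, polarisations=2):
    """Size of the square MIMO matrix: one row per mode-polarisation pair."""
    return spatial_modes * polarisations


def matrix_elements(dimension):
    """Number of entries in a dimension x dimension matrix."""
    return dimension * dimension


single_mode = mimo_dimension(1)  # today's coherent ASICs: 2x2
six_mode = mimo_dimension(6)     # the Bell Labs demo: 12x12

# Assumption: equaliser effort scales with the number of matrix entries.
growth = matrix_elements(six_mode) / matrix_elements(single_mode)

print(f"{single_mode}x{single_mode} -> {six_mode}x{six_mode}: "
      f"{growth:.0f}x more matrix entries")
```

On that proxy, six spatial modes mean 36 times as many matrix entries as a single-mode 2x2 design, though this applies only to the MIMO portion of the signal processing chain.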

Winzer says such mitigation techniques need to be investigated now since crosstalk in future systems is inevitable. Even if the technology's deployment is at least a decade away, developing techniques to tackle crosstalk now means vendors have a clear path forward.

Parallelism

Winzer points out that optical transmission continues to embrace parallelism. "With super-channels we go parallel with multiple carriers because a single carrier can’t handle the traffic anymore," he says. This is similar to parallelism in microprocessors where multi-core designs are now used due to the diminishing return in continually increasing a single core's clock speed.

For 400Gbps or 1 Terabit over a single-mode fibre, the super-channel approach is the near term evolution.

Over the next decade, the benefit of frequency parallelism will diminish since it will no longer increase spectral efficiency. "Then you need to resort to another physical dimension for parallelism and that would be space," says Winzer.

MIMO will be needed when crosstalk arises and that will occur with multiple mode fibre.

"For multiple strands of single mode fibre it will depend on how much crosstalk the integrated optical amplifiers and transponders introduce," says Winzer.

Part 1: Terabit optical transmission

Infinera adds software to its PIC for instant bandwidth

Infinera has enabled its DTN-X platform to deliver 100 Gigabit services rapidly. The ability to fulfill capacity demand quickly is seen as a competitive advantage by operators. Gazettabyte spoke with Infinera and TeliaSonera International Carrier, a DTN-X customer, about the merits of its 'instant bandwidth' and asked several industry analysts for their views.

Infinera has added a WDM line card hosting its 500 Gigabit super-channel photonic integrated circuit to its DTN-X platform

Pravin Mahajan, Infinera.

Infinera is claiming an industry first with the software-enablement of 100 Gigabit capacity increments. The company's DTN-X platform's 'instant bandwidth' feature shortens the time to add new capacity in the network, from weeks as is common today to less than a day.

The ability to add bandwidth as required is increasingly valued by operators. TeliaSonera International Carrier points out that its traffic demands are increasingly variable, making capacity requirements harder to forecast and manage.

"It [the DTN-X's instant bandwidth] enables us to activate 100 Gig services between network spans to manage our own IP traffic which is growing rapidly," says Ivo Pascucci, head of sales, Americas at TeliaSonera International Carrier. "We will also be able to sell in the market 100 Gig services and activate the capacity much more rapidly."

What has been done

Infinera has added three elements to its DTN-X platform to enable 100 Gigabit services.

One is a new wavelength division multiplexing (WDM) line card that features its 500 Gigabit-per-second (Gbps) super-channel photonic integrated circuit (PIC). Infinera says the line card has 500Gbps of capacity enabled, of which only 100Gbps is activated. "The remaining 400Gbps is latent, waiting to be activated," says Pravin Mahajan, director of corporate marketing and messaging at Infinera.

Infinera uses the DTN-X's Optical Transport Network (OTN) switch fabric to pack the client side signals onto any of the 100Gbps channels activated on the line side. This capacity pool of up to 500 Gbps, says Infinera, results in better usage of backbone capacity compared to traditional optical networking equipment based on individual 100Gbps 'siloed' channels.

A software application has also been added to Infinera's network management system, the digital network administrator (DNA), to activate the 100Gbps capacity increments.

Lastly, Infinera has in place a just-in-time system that enables client-side 10 Gigabit Ethernet optical transceivers to be delivered to customers within 10 days, if they are out of stock. Infinera says it is achieving a 6-day delivery time in 95% of the cases.

Advantages

TeliaSonera International Carrier confirms the advantages to having 100 Gigabit capacities pre-provisioned and ready for use.

"Having the ability to turn up large bandwidth is critical to our business, especially as the [traffic] numbers continue to grow"

"Having the ability to turn up large bandwidth is critical to our business, especially as the [traffic] numbers continue to grow"

Ivo Pascucci, TeliaSonera International Carrier

"If it is individual line cards across the network when you have as many PoPs as we do, it does get tricky," says Pascucci. "If we have 500 Gig channels pre-provisioned with the ability to activate 100 Gig segments as needed, that gives us an advantage versus having to figure out how many line cards to have deployed in which nodes, and forecasting which nodes should have the line cards in the first place."

The operator is already seeing demand for 100 Gigabit services, from the carrier market and large content providers. The operator already provides 10x10Gbps and 20x10Gbps services to customers. "With that there are all the challenges of provisioning ten or 20 10 Gig circuits and 10 or 20 cross-connects for each site," says Pascucci. The operator also manages one and two Terabits of network capacity for certain customers.

"Having the ability to turn up large bandwidth is critical to our business, especially as the [traffic] numbers continue to grow," says Pascucci.

Analysts' comments

Gazettabyte asked several industry analysts about the significance of Infinera's announcement: in particular, the uniqueness of the offering, the claim that it cuts bandwidth enablement times, and its importance for operators.

Infonetics Research

Andrew Schmitt, directing analyst for optical

Schmitt believes Infinera's announcement is significant as it is the first announced North American win. It also shows the company has a solution for carriers that only want to roll out a single 100 Gbps but don't want to buy 500Gbps.

More importantly, it should allow some carriers to deploy extra capacity for future use at no cost to them and that opens up interesting possibilities for automatically switched optical network (ASON) management or even software-defined networking (SDN).

"As to the claim that it reduces capacity enablement from weeks to potential minutes, to some degree, yes," says Schmitt.

Certainly Ciena, Alcatel-Lucent or Cisco could ship extra line cards to customers and not charge the customer until they are used and that would effectively achieve the same result. "But if the PIC truly has better economics than the discrete solutions from these vendors then Infinera can ship hardware up front and then recognise the profits on the back end," he says.

"You simply can't predict where the best places to put bandwidth will be"

In turn, if customers get free inventory management out of the deal and Infinera equipment can support that arrangement more economically, that is a significant advantage for Infinera.

"This instant bandwidth is unique to Infinera. As I said, anyone could do this deal. But you need a hardware cost structure that can support it or it gets expensive quickly," says Schmitt. "Everyone is working on super-channels but it is clear from the legacy of the way the 10 Gig DTN hardware and software worked that Infinera gets it."

Schmitt believes the term super-channel is abused. He prefers the term virtualised bandwidth - optical capacity that can be allocated the same way server or storage resources are assigned through virtualization.

"The SDN hype is hitting strong in this business but Infinera is really one of the only companies that have a history of a hardware and software architecture that lends itself well to this concept," he says. This is validated with its customer list which is loaded heavily with service providers that are not just talking about SDN but actively doing something, he says.

"It [turning capacity up quickly] is important for SDN as well as more advanced protection arrangements. You simply can't predict where the best places to put bandwidth will be," says Schmitt. "If you can have spare capacity in the network that is lit on demand but not paid for if you don't need it, it is the cheapest approach for avoiding overbuilding a network for corner-case requirements.

"I think the accounting for this product will be interesting, it is likely that we will know in a year how successful this concept was just by a careful examination of the company's financials," he concludes.

ACG Research

Eve Griliches, vice president of optical networking

Infinera delivered this year the DTN-X with 500 Gig super-channels based on PIC technology. Now, a new 500 Gig line card has been added that can operate at 100 Gig and the remaining 400 Gig can be lit in 100 Gig increments using software. This allows customers to purchase 100 Gig at a time, and turn up subsequent bandwidth via software when they require it.

“No other vendor has a software-based solution, and no one else is delivering 500 Gig yet either,” says Griliches.

With this solution, ACG Research says in its research note, operators can start to develop a flexible infrastructure where bandwidth can grow and move around the network instantly. This is useful to address varying demands in bandwidth, triggered by incidents such as natural disasters or sporting events.

Rapid bandwidth enablement has always been important and takes way too long, so this development is key, says Griliches: “Also, it enables Infinera to enter markets which only need one 100 Gig wavelength for now, which they could not do before.”

“No other vendor has a software-based solution, and no one else is delivering 500 Gig yet either”

Looking forward, ACG Research expects this software and hardware-based instant bandwidth utility model will enable Infinera to widen its potential market base and increase its global market share in 2013 and 2014.

Ovum

Ron Kline, principal analyst, and Dana Cooperson, vice president, of the network infrastructure practice

Ovum also thinks Infinera's announcement is significant. It brings essentially the same value proposition Infinera had with 10 Gigabit to the 100 Gigabit market - low operational expenditure (opex) and quick time-to-market. "Remember 10 Gig in 10 days?" says Kline.

It further fixes an issue for customers: with the 10x10Gbps, they essentially had to pay for the full 100Gbps up front, after which they could be very efficient with turn-up and opex. Customers traded more up-front capital expenditure (capex) for efficient opex. "With instant bandwidth, they don't have to make the upfront capex-versus-opex tradeoff; they can be most efficient with both," says Cooperson.

Any vendor can shorten capacity enablement times if they can convince the operator to pre-position bandwidth in the network that is ready to be turned on at a moment's notice.

Ron Kline

Kline says operators have different processes for turning up services and in many cases it is these processes and not the equipment directly that is the cause of the additional time for provisioning. “For example the operator may not use the DNA system or may have a very complex OSS/BSS used in the process,” says Kline.

Nevertheless, the capability to have really short provisioning is there, if an operator wants to take advantage. In the TeliaSonera case, Infinera is managing the network so the quick time to market will be there, says Kline.

Cooperson adds that there can be many factors that impede the capacity enablement process, based on Ovum's own research. “But it is clear from talking to Infinera's customers that its system design and approach is a big benefit to those carriers, often the competitive carriers, in competing in the market,” she says. “Multiple carriers told us that with the Infinera system, they were able to win business from competitors.”

Any vendor can shorten capacity enablement times if they can convince the operator to pre-position bandwidth in the network that is ready to be turned on at a moment's notice. However, what is unique to Infinera is that its system is deployed 500Gbps at a time and all the switching is done electrically by the OTN switch at each node. Others are working on super-channels but none are close to deploying, says Ovum.

“Multiple carriers told us that with the Infinera system, they were able to win business from competitors.”

Dana Cooperson

The ability to turn on bandwidth rapidly is becoming increasingly important. From a wholesale operator perspective it is very important and a key differentiator.

"It's particularly relevant to wholesale applications where large bandwidth chunks are required and the customer is another carrier," says Cooperson. "Whether you view a Google or a Facebook as a carrier or a very large enterprise, it would apply to them as well as a more traditional carrier."