Software-defined networking: A network game-changer?

OFC/NFOEC reflections: Part 1

"We [operators] need to move faster"

Andrew Lord, BT

Q: What was your impression of the show?

A: Nothing out of the ordinary. I haven't come away clutching a whole bunch of results that I'm determined to go and check out, which I do sometimes.

I'm quite impressed by how the main equipment vendors have moved on to look seriously at post-100 Gigabit transmission. In fact we have some [equipment] in the labs [at BT]. That is moving on pretty quickly. I don't know if there is a need for it just yet but they are certainly getting out there, not with live chips but making serious noises on 400 Gig and beyond.

There was a talk on the CFP [module] and whether we are going to be moving to a coherent CFP at 100 Gig. So what is going to happen to those prices? Is there really going to be a role for non-coherent 100 Gig? That is still a question in my mind.

"Our dream future is that we would buy equipment from whomever we want and it works. Why can't we do that for the network?"

I was quite keen on that but I'm wondering if there is going to be a limited opportunity for the non-coherent 100 Gig variants. The coherent prices will drop and my feeling from this OFC is they are going to drop pretty quickly when people start putting these things [100 Gig coherent] in; we are putting them in. So I don't know quite what the scope is for people that are trying to push that [100 Gigabit direct detection].

What was noteworthy at the show?

There is much talk about software-defined networking (SDN), so much talk that a lot of people in my position have been describing it as hype. There is a robust debate internally [within BT] on the merits of SDN which is essentially a data centre activity. In a live network, can we make use of it? There is some skepticism.

I'm still fairly optimistic about SDN and the role it might have and the [OFC/NFOEC] conference helped that.

I'm expecting next year to be the SDN conference and I'd be surprised if SDN doesn't have a much greater impact then [OFC/NFOEC 2014] with more people demoing SDN use cases.

Why is there so much excitement about SDN?

Why now when it could have happened years ago? We could have all had GMPLS (Generalised Multi-Protocol Label Switching) control planes. We haven't got them. Control plane research has been around for a long time; we don't use it: we could but we don't. We are still sitting with heavy OpEx-centric networks, especially optical.

"The 'something different' this conference was spatial-division multiplexing"

So why are we getting excited? Getting the cost out of the operational side - the software-development side, and the ability to buy from whomever we want to.

For example, if we want to buy a new network, we put out a tender and have some 10 responses. It is hard to adjudicate them all equally when, with some of them, we'd have to start from scratch with software development, whereas with others we have a head start as our own management interface has already been developed. That shouldn't and doesn't need to be the case.

By opening the equipment's north-bound interface into our own OSS (operations support systems), in theory, and this is probably naive, any specific OSS we develop ought to work.

Our dream future is that we would buy equipment from whomever we want and it works. Why can't we do that for the network?

We want to as it means we can leverage competition but also we can get new network concepts and builds in quicker without having to suffer 18 months of writing new code to manage the thing. We used to do that but it is no longer acceptable. It is too expensive and time consuming; we need to move faster.

It [the interest in SDN] is just competition hotting up and costs getting harder to manage. This is an area that is now the focus and SDN possibly provides a way through that.

Another issue is the ability to quickly put new applications and services onto our networks. For example, a bank wants to do data backup but doesn't want to spend a year and resources developing something that it uses only occasionally. Is there a bandwidth-on-demand application we can put onto our basic network infrastructure? Why not?

SDN gives us a chance to do something like that; we could roll it out quickly for specific customers.

Anything else at OFC/NFOEC that struck you as noteworthy?

The core networks aspect of OFC is really my main interest.

You are taking the components, a big part of OFC, and then the transmission experiments and all the great results that they get - multiple Terabits and new modulation formats - and then in networks you are saying: What can I build?

Networks have always been the poor relation. They have not had the great exposure or the same excitement. Well, now, the network is taking centre stage.

As you see components and transmission mature - and they are maturing, as the capacity we are seeing on a fibre is almost hitting the natural limit - the spectral efficiency, the number of bits per second you can squeeze into a single Hertz, is hitting the limit of 3, 4, 5, 6 [bit/s/Hz]. You can't get much more than that if you want to go a reasonable distance.

So the big buzz word - 70 to 80 percent of the OFC papers we reviewed - was flex-grid, turning the optical spectrum in fibre into a much more flexible commodity where you can have whatever spectrum you want between nodes dynamically. Very, very interesting; loads of papers on that. How do you manage that? What benefits does it give?

What did you learn from the show?

One area I don't get yet is spatial-division multiplexing. Fibre is filling up so where do we go? Well, we need to go somewhere because we are predicting our networks continuing to grow at 35 to 40 percent.
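To put the growth figure in perspective, here is a minimal sketch of the compound-growth arithmetic. It assumes the 35 to 40 percent is an annual rate held constant, which the interview does not state explicitly, and the function names are illustrative only.

```python
import math


def doubling_time_years(annual_growth: float) -> float:
    """Years for traffic to double at a constant compound annual growth rate."""
    if annual_growth <= 0:
        raise ValueError("annual_growth must be positive")
    return math.log(2) / math.log(1 + annual_growth)


def growth_factor(annual_growth: float, years: int) -> float:
    """Total multiplication of traffic after `years` of compound growth."""
    return (1 + annual_growth) ** years


if __name__ == "__main__":
    # Assumption: the quoted 35-40 percent is a per-year growth rate.
    for rate in (0.35, 0.40):
        print(
            f"{rate:.0%} per year: doubles in "
            f"{doubling_time_years(rate):.1f} years, "
            f"x{growth_factor(rate, 10):.0f} after 10 years"
        )
```

Under that assumption, traffic doubles in a little over two years, which is why a fibre that is nearly full today leaves little headroom.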

Now we are hitting a new era. Putting fibre in doesn't really solve the problem in terms of cost, energy and space. You are just layering solutions on top of each other and you don't get any more revenue from it. We are stuffed unless we do something different.

The 'something different' this conference was spatial-division multiplexing. You still have a single fibre but you put in multiple cores and that is the next way of increasing capacity. There is an awful lot of work being done in this area.

I gave a paper [pointing out the challenges]. I couldn't see how you would build the splicing equipment, or how you would get this fibre qualified. Given the 30-40 years of expertise of companies like Corning making single-mode fibre, are we really going to go through all that again for this new fibre? How long is that going to take? How do you align these things?

"SDN for many people is data centres and I think we [operators] mean something a bit different."

I just presented the basic pitfalls from an operator's perspective of using this stuff. That is my skeptic side. But I could be proved wrong; it has happened before!

Anything you learned that got you excited?

One thing I saw is optics pushing out.

In the past we saw 100 Megabit and one Gigabit Ethernet (GbE) being king of a certain part of the network. People were talking about that becoming optics.

We are starting to see optics entering a new phase. Ten Gigabit Ethernet is a wavelength, a colour on a fibre. If the cost of those very simple 10GbE transceivers continues to drop, we will start to see optics enter a new phase where we could be seeing it all over the place: you have a GigE port, well, have a wavelength.

[When that happens] optics comes centre stage and then you have to address optical questions. This is exciting and Ericsson was talking a bit about that.

What will you be monitoring between now and the next OFC?

We are accelerating our SDN work. We see that as being game-changing in terms of networks. I've seen enough open standards emerging, enough will around the industry with the people I've spoken to, some of the vendors that want to do some work with us, that it is exciting. Things like 4k and 8k (ultra high definition) TV, providing the bandwidth to make this thing sensible.

"I don't think BT needs to be delving into the insides of an IP router trying to improve how it moves packets. That is not our job."

Think of a health application where you have a 4k or 8k TV camera giving an ultra high-res picture of a scan, piping that around the network at many, many Gigabits. These types of applications are exciting and that is where we are going to be putting a bit more effort. Rather than the traditional just thinking about transmission, we are moving on to some solid networking; that is how we are migrating it in the group.

When you say open standards [for SDN], OpenFlow comes to mind.

OpenFlow is a lovely academic thing. It allows you to open a box for a university to try their own algorithms. But it doesn't really help us because we don't want to get down to that level.

I don't think BT needs to be delving into the insides of an IP router trying to improve how it moves packets. That is not our job.

What we need is the next level up: taking entire network functions and having them presented in an open way.

For example, something like OpenStack [the open source cloud computing software] that allows you to start to bring networking, and compute and memory resources in data centres together.

You can start to say: I have a data centre here, another here and some networking in between; how can I orchestrate all of that? I need to provide some backup or some protection. With all those diverse elements, in very different parts of the industry, what is it that will orchestrate that automatically?

That is the kind of open theme that operators are interested in.

That sounds different to what is being developed for SDN in the data centre. Are there two areas here: one networking and one the data centre?

You are quite right. SDN for many people is data centres and I think we mean something a bit different. We are trying to have multi-vendor leverage and as I've said, look at the software issues.

We also need to be a bit clearer as to what we mean by it [SDN].

Andrew Lord has been appointed technical chair at OFC/NFOEC

Further reading

Part 2: OFC/NFOEC 2013 industry reflections

Part 3: OFC/NFOEC 2013 industry reflections

Part 4: OFC/NFOEC industry reflections

Part 5: OFC/NFOEC 2013 industry reflections

Telcos eye servers & software to meet networking needs

- The Network Functions Virtualisation (NFV) initiative aims to use common servers for networking functions

- The initiative promises to be industry disruptive

"The sheer massive [server] volumes is generating an innovation dynamic that is far beyond what we would expect to see in networking"

"The sheer massive [server] volumes is generating an innovation dynamic that is far beyond what we would expect to see in networking"

Don Clarke, NFV

Telcos want to embrace the rapid developments in IT to benefit their networks and operations.

The Network Functions Virtualisation (NFV) initiative, set up by the European Telecommunications Standards Institute (ETSI), has started work to use servers and virtualisation technology to replace the many specialist hardware boxes in their networks. Such boxes can be expensive to maintain, consume valuable floor space and power, and add to the operators' already complex operations support systems (OSS).

"Data centre technology has evolved to the point where the raw throughput of the compute resources is sufficient to do things in networking that previously could only be done with bespoke hardware and software," says Don Clarke, technical manager of the NFV industry specification group, and who is BT's head of network evolution innovation. "The data centre is commoditising server hardware, and enormous amounts of software innovation - in applications and operations - is being applied.”

"Everything above Layer 2 is in the compute domain and can be put on industry-standard servers"

The operators have been exploring independently how IT technology can be applied to networking. Now they have joined forces via the NFV initiative.

"The most exciting thing about the technology is piggybacking on the innovation that is going on in the data centre," says Clarke. "The sheer massive volumes is generating an innovation dynamic that is far beyond what we would expect to see in networking."

Another key advantage is that once networks become software-based, enormous flexibility results when creating new services, bringing them to market quickly while also reducing costs.

NFV and SDN

The NFV initiative is being promoted as a complement to software-defined networking (SDN).

The complementary relationship between NFV and SDN. Source: NFV.

SDN is focussed on control mechanisms for reconfiguring networks, separating the control plane from the data plane. The transport network can be seen as dumb pipes with the control mechanisms adding the intelligence.

“There are other areas of the network where there is intrinsic complexity of processing rather than raw throughput,” says Clarke.

These include firewalls, session border controllers, deep packet inspection boxes and gateways - all functions that can be ported onto servers. Indeed, once running as software on servers such networking functions can be virtualised.

"Everything above Layer 2 is in the compute domain and can be put on industry-standard servers,” says Clarke. This could even include core IP routers but clearly that is not the best use of general-purpose computing, and the initial focus will be equipment at the edge of the network.

Clarke describes how operators will virtualise network elements and interface them to their existing OSS. “We see SDN as a longer journey for us,” he says. “In the meantime we want to get the benefits of network virtualisation alongside existing networks and reusing our OSS where we can.”

NFV will first be applied to appliances that lend themselves to virtualisation and where the impact on the OSS will be minimal. Here the appliance will be loaded as software on a common server instead of current bespoke systems situated at the network's end points. “You [as an operator] can start to draw a list of target things as to what will be of most interest,” says Clarke.

Virtualised network appliances are not a new concept and examples are already available on the market. Vanu's software-based radio access network technology is one such example. “What has changed is the compute resources available in servers is now sufficient, and the volume of servers [made] is so massive compared to five years ago,” says Clarke.

The NFV forum aims to create an industry-wide understanding as to what the challenges are while ensuring that there are common tools for operators that will also increase the total available market.

Clarke stresses that the physical shape of operators' networks - such as local exchange numbers - will not change greatly with the uptake of NFV. “But the kind of equipment in those locations will change, and that equipment will be server-based," says Clarke.

"One of the things the software world has shown us is that if you sit on your hands, a player comes out of nowhere and takes your business"

One issue for operators is their telecom-specific requirements. Equipment is typically hardened and has strict reliability requirements. In turn, operators' central offices are not as well air conditioned as data centres. This may require innovation around reliability and resilience in software such that should a server fail, the system adapts and the server workload is moved elsewhere. The faulty server can then be replaced by an engineer on a scheduled service visit rather than an emergency one.

"Once you get into the software world, all kinds of interesting things that enhance resilience and reliability become possible," says Clarke.

Industry disruption

The NFV initiative could prove disruptive for many telecom vendors.

"This is potentially massively disruptive," says Clarke. "But what is so positive about this is that it is new." Moreover, this is a development that operators are flagging to vendors as something that they want.

Clarke admits that many vendors have existing product lines that they will want to protect. But these vendors have unique telecom networking expertise which no IT start-up entering the field can match.

"It is all about timing," says Clarke. "When do they [telecom vendors] decisively move their product portfolio to a software version is an internal battle that is happening right now. Yes, it is disruptive, but only if they sit on their hands and do nothing and their competitors move first."

Clarke is optimistic about the vendors' response to the initiative. "One of the things the software world has shown us is that if you sit on your hands, a player comes out of nowhere and takes your business," he says.

Once operators deploy software-based network elements, they will be able to do new things with regard to services. "Different kinds of service profiles, different kinds of capabilities and different billing arrangements become possible because it is software- not hardware-based."

Work status

The NFV initiative was unveiled late last year with the first meeting being held in January. The initiative includes operators such as AT&T, BT, Deutsche Telekom, Orange, Telecom Italia, Telefonica and Verizon as well as telecoms equipment vendors, IT vendors and technology providers.

One of the meeting's first tasks was to identify the issues to be addressed to enable the use of servers for telecom functions. Around 60 companies attended the meeting - including 20-odd operators - to create the organisational structure to address these issues.

Two expert groups - on security, and on performance and portability - were set up. “We see these issues as key for the four working groups,” says Clarke. These four working groups cover software architecture, infrastructure, reliability and resilience, and orchestration and management.

Work has started on the requirement specifications, with calls between the members taking place each day, says Clarke. The NFV work is expected to be completed by the end of 2014.

Further information:

White Paper: Network Functions Virtualisation: An Introduction, Benefits, Enablers, Challenges & Call for Action

The role of software-defined networking for telcos

The OIF's Carrier Working Group is assessing how software-defined networking (SDN) will impact transport. Hans-Martin Foisel, chair of the OIF working group, explains SDN's importance for operators.

Briefing: Software-defined networking

Part 1: Operator interest in SDN

"Using SDN use cases, we are trying to derive whether the transport network is ready or if there is some missing functionality"

"Using SDN use cases, we are trying to derive whether the transport network is ready or if there is some missing functionality"

Hans-Martin Foisel, OIF

Hans-Martin Foisel, of Deutsche Telekom and chair of the OIF Carrier Working Group, says SDN is of great interest to operators that view the emerging technology as a way of optimising all-IP networks that increasingly make use of data centres.

"Software-defined networking is an approach for optimising the network in a much larger sense than in the past," says Foisel whose OIF working group is tasked with determining how SDN's requirements will impact the transport network.

Network optimisation remains an ongoing process for operators. Work continues to improve the interworking between the network's layers to gain efficiencies and reduce operating costs (see Cisco Systems' intelligent light).

With SDN, the scope is far broader. "It [SDN] is optimising the network in terms of processing, storage and transport," says Foisel. SDN takes the data centre environment and includes it as part of the overall optimisation. For example, content allocation becomes a new parameter for network optimisation.

Other reasons for operator interest in SDN, says Foisel, include optimising operation support systems (OSS) software, and the characteristic most commonly associated with SDN, making more efficient use of the network's switches and routers.

"A lot of carriers are struggling with their OSSes - these are quite complex beasts," he says. "With data centres involved, you now have a chance to simplify your IT as all carriers are struggling with their IT."

The Network Functions Virtualisation (NFV) industry specification group is a carrier-led initiative set up in January by the European Telecommunications Standards Institute (ETSI). The group is tasked with optimising the software components, the OSSes, involved in processing, storage and transport.

The initiative aims to make use of standard servers, storage and Ethernet switches to reduce the varied equipment making up current carrier networks to improve service innovation and reduce the operators' capital and operational expenditure.

NFV and SDN are separate developments that will benefit each other. The ETSI group will develop requirements and architecture specifications for the hardware and software infrastructure needed for the virtualized functions, as well as guidelines for developing network functions.

The third reason for operator interest in SDN - separating management, control and data planes - promises greater efficiencies, enabling network segmentation irrespective of the switch and router deployments. This allows flexible use of the network, with resources shifted based on particular user requirements.

"Optimising the network as a whole - including the data centre services and applications - is a concept, a big architecture," says Foisel. "OpenFlow and the separation of data, management and control planes are tools to achieve them."

OpenFlow is an open standard implementation of the SDN concept. The OpenFlow protocol is being developed by the Open Networking Foundation, an industry body that includes Google, Facebook and Microsoft, telecom operators Verizon, NTT, Deutsche Telekom, and various equipment makers.

Transport SDN

The OIF Working Group will identify how SDN impacts the transport network including layers one and two, networking platforms and even components. By undertaking this work, the operators' goal is to make SDN "carrier-grade".

Foisel admits that the working group does not yet know whether the transport layer will be impacted by SDN. To answer the question, SDN applications will be used to identify required transport SDN functionalities. Once identified, a set of requirements will be drafted.

"Using SDN use cases, we are trying to derive whether the transport network is ready or if there is some missing functionality," says Foisel.

The work will also highlight any areas that require standardisation, for the OIF and for other standards bodies, to ensure future SDN interworking between vendors' solutions. The OIF expects to have a first draft of the requirements by July 2013.

"In the transport network we are pushed by the mobile operators but also by the over-the-top applications to be faster and be more application-aware," says Foisel. "With SDN we have a chance to do so."

Part 2: Hardware for SDN

100 Gigabit: An operator view

Gazettabyte spoke with BT, Level 3 Communications and Verizon about their 100 Gigabit optical transmission plans and the challenges they see regarding the technology.

Briefing: 100 Gigabit

Part 1: Operators

Operators will use 100 Gigabit-per-second (Gbps) coherent technology for their next-generation core networks. For metro, operators favour coherent and have differing views regarding the alternative, 100Gbps direct-detection schemes. All the operators agree that the 100Gbps interfaces - line-side and client-side - must become cheaper before 100Gbps technology is more widely deployed.

"It is clear that you absolutely need 100 Gig in large parts of the network"

Steve Gringeri, Verizon

100 Gigabit status

Verizon is already deploying 100Gbps wavelengths in its European and US networks, and will complete its US nationwide 100Gbps backbone in the next two years.

"We are at the stage of building a new-generation network because our current network is quite full," says Steve Gringeri, a principal member of the technical staff at Verizon Business.

The operator first deployed 100Gbps coherent technology in late 2009, linking Paris and Frankfurt. Verizon's focus is on 100Gbps, having deployed a limited amount of 40Gbps technology. "We can also support 40 Gig coherent where it makes sense, based on traffic demands," says Gringeri.

Level 3 Communications and BT, meanwhile, have yet to deploy 100Gbps technology.

"We have not [made any public statements regarding 100 Gig]," says Monisha Merchant, Level 3’s senior director of product management. "We have had trials but nothing formal for our own development." Level 3 started deploying 40Gbps technology in March 2009.

BT expects to deploy new high-speed line rates before the year end. "The first place we are actively pursuing the deployment of initially 40G, but rapidly moving on to 100G, is in the core,” says Steve Hornung, director, transport, timing and synch at BT.

Operators are looking to deploy 100Gbps to meet growing traffic demands.

"If I look at cloud applications, video distribution applications and what we are doing for wireless (Long Term Evolution) - the sum of all the traffic - that is what is putting the strain on the network," says Gringeri.

Verizon is also transitioning its legacy networks onto its core IP-MPLS backbone, requiring the operator to grow its base infrastructure significantly. "When we look at demands there, it is clear that you absolutely need 100 Gig in large parts of the network," says Gringeri.

Level 3 points out that its network between any two cities has been running at much greater capacity than 100Gbps, so the demand has been there for years; the issue is the economics of the technology. "Right now, going to 100Gbps is significantly a higher cost than just deploying 10x 10Gbps," says Level 3's Merchant.

BT's core network comprises 106 nodes: 20 in a fully-meshed inner core, surrounded by an outer 86-node core. The core carries the bulk of BT's IP, business and voice traffic.
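As a rough illustration of what "fully meshed" implies for the inner core, the sketch below counts the node-to-node adjacencies in a full mesh, n(n-1)/2. It counts logical links only and is not a description of BT's physical fibre routes; the function and constant names are illustrative.

```python
def full_mesh_links(nodes: int) -> int:
    """Number of distinct node pairs, i.e. links, in a full mesh of `nodes`."""
    if nodes < 0:
        raise ValueError("nodes must be non-negative")
    return nodes * (nodes - 1) // 2


# Node counts quoted in the article.
INNER_CORE_NODES = 20
OUTER_CORE_NODES = 86

if __name__ == "__main__":
    print("total core nodes:", INNER_CORE_NODES + OUTER_CORE_NODES)
    print("inner-core mesh links:", full_mesh_links(INNER_CORE_NODES))
```

For the 20 inner-core nodes this gives 190 adjacencies, which indicates how many light paths an upgrade of the inner core to a new line rate could touch.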

"We are taking specific steps and have business cases developed to deploy 40G and 100G technology: alternative line cards into the same rack," says Hornung.

Coherent and direct detection

Coherent has become the default optical transmission technology for operators' next-generation core networks.

BT says it is a 'no-brainer' that 400Gbps and 1 Terabit-per-second light paths will eventually be deployed in the network to accommodate growing traffic. "Rather than keep all your options open, we need to make the assumption that technology will essentially be coherent going forward because it will be the bandwidth that drives it," says Hornung.

Beyond BT's 106-node core is a backhaul network that links 1,000 points-of-presence (PoPs). It is in this part of the network that BT will consider 40Gbps and perhaps 100Gbps direct-detection technology. "If it [such technology] became commercially available, we would look at the price, the demand and use it, or not, as makes sense," says Hornung. "I would not exclude at this stage looking at any technology that becomes available." Such direct-detection 100Gbps solutions are already being promoted by ADVA Optical Networking and MultiPhy.

However, Verizon believes coherent will also be needed for the metro. "If I look at my metro systems, you have even lower quality amplifiers, and generally worse signal-to-noise," says Gringeri. “Based on the performance required, I have no idea how you are going to implement a solution that isn't coherent."

Even for shorter reach metro systems - 200 or 300km - Verizon believes coherent will be the implementation, including expanding existing deployments that carry 10Gbps light paths and that use dispersion-compensated fibre.

Level 3 says it is not wedded to a technology but rather a cost point. As a result it will assess a technology if it believes it will address the operator's needs and has a cost performance advantage.

100 Gig deployment stages

The cost of 100Gbps technology remains a key challenge impeding wider deployment. This is not surprising since 100Gbps technology is still immature and systems shipping are first-generation designs.

Operators are willing to pay a premium to deploy 100Gbps light paths at network pinch-points as it is cheaper than lighting a new fibre.

Metro deployments of new technology such as 100Gbps generally occur once the long-haul network has been upgraded. The technology is by then more mature and better suited to the cost-conscious metro.

Applications that will drive metro 100Gbps include linking data centres and enterprises. But Level 3 expects it will be another five years before enterprises move from requesting 10 Gigabit services to 100 Gigabit ones to meet their telecom needs.

Verizon highlights two 100Gbps priorities: the high-end performance dense WDM systems and client-side 'grey' (non-WDM) optics used to connect equipment across distances as short as 100m with ribbon cable to over 2km or 10km over single-mode fibre.

"I would not exclude at this stage looking at any technology that becomes available"

Steve Hornung, BT

"Grey optics are very costly, especially if I’m going to stitch the network and have routers and other client devices and potential long-haul and metro networks, all of these interconnect optics come into play," says Gringeri.

Verizon is a strong proponent of a new 100Gbps serial interface over 2km or 10km. At present there are the 100 Gigabit interface and the 10x10 MSA. However Gringeri says it will be 2-3 years before such a serial interface becomes available. "Getting the price-performance on the grey optics is my number one priority after the DWDM long haul optics," says Gringeri.

Once 100Gbps client-side interfaces do come down in price, operators' PoPs will be used to link other locations in the metro to carry the higher-capacity services, he says.

The final stage of the rollout of 100Gbps will be single point-to-point connections. This is where grey 100Gbps comes in, says Gringeri, based on 40 or 80km optical interfaces.

Source: Gazettabyte

Tackling costs

Operators are confident regarding the vendors’ cost-reduction roadmaps. "We are talking to our clients about second, third, even fourth generation of coherent," says Gringeri. "There are ways of making extremely significant price reductions."

Gringeri points to further photonic integration and reducing the sampling rate of the coherent receiver ASIC's analogue-to-digital converters. "With the DSP [ASIC], you can look to lower the sampling rate," says Gringeri. "A lot of the systems do 2x sampling and you don't need 2x sampling."

The filtering used for dispersion compensation can also be simpler for shorter-reach spans. "The filter can be shorter - you don't need as many [digital filter] taps," says Gringeri. "There are a lot of optimisations and no one has made them yet."

There is also the move to pluggable CFP modules for the line-side coherent optics and the CFP2 for client-side 100Gbps interfaces. At present the only line-side 100Gbps pluggable is based on direct detection.

"The CFP is a big package," says Gringeri. "That is not the grey optics package we want in the future, we need to go to a much smaller package long term."

For the line-side there is also the issue of the digital signal processor's (DSP) power consumption. "I think you can fit the optics in but I'm very concerned about the power consumption of the DSP - these DSPs are 50 to 80W in many current designs," says Gringeri.

One obvious solution is to move the DSP out of the module and onto the line card. "Even if they can extend the power number of the CFP, it needs to be 15 to 20W," says Gringeri. "There is an awful lot of work to get from where you are today to 15 to 20W."

* Monisha Merchant left Level 3 before the article was published.

Further Reading:

100 Gigabit: The coming metro opportunity - a position paper

Part 2: Next-gen 100 Gig Optics

Operators want to cut power by a fifth by 2020

Part 2: Operators’ power efficiency strategies

Service providers have set themselves ambitious targets to reduce their energy consumption by a fifth by 2020. The power reduction will coincide with an expected thirty-fold increase in traffic in that period. Given the cost of electricity and operators’ requirements, such targets are not surprising: KPN, with its 12,000 sites in The Netherlands, consumes 1% of the country’s electricity.
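Taken together, the two headline figures imply a steep fall in energy per bit. The sketch below is a back-of-the-envelope calculation only: it assumes, for illustration, that the one-fifth energy cut and the thirty-fold traffic growth apply to the same network over the same period. The function and variable names are hypothetical.

```python
# Back-of-the-envelope: what a 20% energy cut combined with 30x traffic
# growth implies for energy per bit. Names are illustrative only.

def energy_per_bit_ratio(energy_cut: float, traffic_growth: float) -> float:
    """Return future energy-per-bit as a fraction of today's value."""
    if not 0 <= energy_cut < 1:
        raise ValueError("energy_cut must be in [0, 1)")
    if traffic_growth <= 0:
        raise ValueError("traffic_growth must be positive")
    return (1.0 - energy_cut) / traffic_growth


# Figures quoted in the article: a one-fifth cut and a thirty-fold increase.
ratio = energy_per_bit_ratio(energy_cut=0.20, traffic_growth=30.0)
improvement = 1.0 / ratio

print(f"Energy per bit falls to {ratio:.1%} of today's level")
print(f"Required efficiency improvement: {improvement:.1f}x")
```

On these assumptions the operators would need roughly a 37-fold improvement in energy per bit, which helps explain why the targets are described as ambitious.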

“We also have to invest in capital expenditure for a big swap of equipment – in mobile and DSLAMs”

Philippe Tuzzolino, France Telecom-Orange

Operators stress that power consumption concerns are not new but Marga Blom, manager, energy management group at KPN, highlights that the issue has become pressing due to steep rises in electricity prices. “It is becoming a significant part of our operational expense,” she says.

“We are getting dedicated and allocated funds specifically for energy efficiency,” adds John Schinter, AT&T’s director of energy. “In the past, energy didn’t play anywhere near the role it does today.”

Power reduction strategies

Service providers have adopted several approaches to reduce their power requirements.

Upgrading their equipment is one. Newer platforms are denser with higher-speed interfaces while also supporting existing technologies more efficiently. Verizon, for example, has deployed 100 Gigabit-per-second (Gbps) interfaces for optical transport and for its IT systems in Europe. The 100Gbps systems are no larger than existing 10Gbps and 40Gbps platforms and while the higher-speed interfaces consume more power, overall power-per-bit is reduced.

“There is a business case based on total cost of ownership for migrating to newer platforms.”

Marga Blom, KPN

Reducing the number of facilities is another approach. BT and Deutsche Telekom are significantly reducing the number of local exchanges they operate. France Telecom is consolidating a dozen data centres in France and Poland to two, filling both with new, more energy-efficient equipment. Such an initiative improves the power usage effectiveness (PUE), an important data centre efficiency measure, halving the energy consumption associated with France Telecom’s data centres’ cooling systems.

“PUE started with data centres but it is relevant in the future central office world,” says Brian Trosper, vice president of global network facilities/data centers at Verizon. “As you look at the evolution of cloud-based services and virtualisation of applications, you are going to see a blurring of data centres and central offices as they interoperate to provide the service.”

Belgacom plans to upgrade its mobile infrastructure with 20% more energy-efficient equipment over the next two years as it seeks a 25% network energy efficiency improvement by 2020. France Telecom is committed to a 15% reduction in its global energy consumption by 2020 compared to the level in 2006. Meanwhile KPN has almost halted growth in its energy demands with network upgrades despite strong growth in traffic, and by 2012 it expects to start reducing demand. KPN’s target by 2020 is to reduce energy consumption by 20 percent compared to its network demands of 2005.

Fewer buildings, better cooling

Philippe Tuzzolino, environment director for France Telecom-Orange, says energy consumption is rising in its core network and data centres due to the ever increasing traffic and data usage but that power is being reduced at sites using such techniques as virtualisation of servers, free-air cooling, and increasing the operating temperature of equipment. “We employ natural ventilation to reduce the energy costs of cooling,” says Tuzzolino.

“Everything we do is going to be energy efficient.”

Brian Trosper, Verizon

Verizon uses techniques such as alternating ‘hot’ and ‘cold’ aisles of equipment and real-time smart-building sensing to tackle cooling. “The building senses the environment, where cooling is needed and where it is not, ensuring that the cooling systems are running as efficiently as possible,” says Trosper.

Verizon also points to vendor improvements in back-up power supply equipment such as DC power rectifiers and uninterruptible power supplies. Such equipment, which is always on, has traditionally been 50% efficient. “If they are losing 50% power before they feed an IP router that is clearly very inefficient,” says Chris Kimm, Verizon's vice president, network field operations, EMEA and Asia-Pacific. Now manufacturers have raised efficiencies of such power equipment to 90-95%.
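The effect of the efficiency figures Kimm cites can be worked through with simple arithmetic. The sketch below assumes a hypothetical 1kW equipment load (the article gives no load figure) and treats efficiency as output power divided by input power.

```python
# Illustrative arithmetic only: the 1 kW load is a hypothetical figure,
# not one quoted by Verizon. Efficiency = power delivered / power drawn.

def input_power_w(load_w: float, efficiency: float) -> float:
    """Power drawn from the supply to deliver load_w at a given efficiency."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    if load_w < 0:
        raise ValueError("load_w must be non-negative")
    return load_w / efficiency


LOAD_W = 1000.0  # hypothetical load, e.g. one IP router shelf

for eff in (0.50, 0.90, 0.95):
    drawn = input_power_w(LOAD_W, eff)
    lost = drawn - LOAD_W
    print(f"{eff:.0%} efficient: draws {drawn:.0f} W, loses {lost:.0f} W as heat")
```

Under these assumptions, moving from 50% to 90% efficiency cuts the power drawn for the same load by close to half, before counting the reduced cooling needed to remove the wasted heat.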

France Telecom forecasts that its data centre and site energy saving measures will only work until 2013, with power consumption then rising again. “We also have to invest in capital expenditure for a big swap of equipment – in mobile and DSLAMs [access equipment],” says Tuzzolino.

Newer platforms support advanced networking technologies and more traffic while supporting existing technologies more efficiently. This allows operators to move their customers onto the newer platforms and decommission the older power-hungry kit.

“Technology is changing so rapidly that there is always a balance between installing new, more energy efficient equipment and the effort to reduce the huge energy footprint of existing operations”

John Schinter, AT&T

Operators also use networking strategies to achieve efficiencies. Verizon is deploying a mix of equipment in its global private IP network used by enterprise customers. It is deploying optical platforms in new markets to connect to local Ethernet service providers. “We ride their Ethernet clouds to our customers in one market, whereas layer 3 IP routing may be used in an adjacent, next most-upstream major market,” says Kimm. The benefit of the mixed approach is greater efficiencies, he says: “Fewer devices to deploy, less complicated deployments, less capital and ultimately less power to run them.”

Verizon is also reducing the real estate it uses as it retires older equipment. “One trend we are seeing is more, relatively empty-looking facilities,” says Kimm. It is no longer facilities crammed with equipment that is the problem, he says; rather, what constrains sites is their power and cooling capacity.

“You have to look at the full picture end-to-end,” says Trosper. “Everything we do is going to be energy efficient.” That includes the system vendors and the energy-saving targets Verizon demands of them, how it designs its network, the facilities where the equipment resides and how they are operated and maintained, he says.

Meanwhile, France Telecom says it is working with 19 operators, including Vodafone, Telefonica, BT, DT, China Telecom and Verizon, as well as organisations such as the ITU and ETSI, to define standards for DSLAMs and base stations that will help operators meet their energy targets.

Tuzzolino stresses that France Telecom’s capital expenditure will depend on how energy costs evolve. Energy prices will dictate when France Telecom will need to invest in equipment, and the degree, to deliver the required return on investment.

The operator has defined capital expenditure spending scenarios - from a partial to a complete equipment swap from 2015 - depending on future energy costs. New services will clearly dictate operators’ equipment deployment plans but energy costs will influence the pace.

“If they [DC power rectifiers and UPSs] are losing 50% power before they feed an IP router that is clearly very inefficient”

Chris Kimm, Verizon

Justifying capital expenditure spending based on energy and hence operational expense savings is now ‘part of the discussion’, says KPN’s Blom: “There is a business case based on total cost of ownership for migrating to newer platforms.”

Challenges

But if operators are generally pleased with the progress they are making, challenges remain.

“Technology is changing so rapidly that there is always a balance between installing new, more energy efficient equipment and the effort to reduce the huge energy footprint of existing operations,” says AT&T’s Schinter.

“The big challenge for us is to plan the capital expenditure effort such that we achieve the return-on-investment based on anticipated energy costs,” says Tuzzolino.

Another aspect is regulation, says Tuzzolino. The EC is considering how ICT can contribute to reducing the energy demands of other industries, he says. “We have to plan to reduce energy consumption because ICT will increasingly be used in [other sectors like] transport and smart grids.”

Verizon highlights the challenge of successfully managing large-scale equipment substitution and other changes that bring benefits while serving existing customers. “You have to keep your focus in the right place,” says Kimm.

Part 1: Standards and best practices

AT&T domain suppliers

| Date | Domain | Partners |
| --- | --- | --- |
| Sept 2009 | Wireline Access | Ericsson |
| Feb 2010 | Radio Access Network | Alcatel-Lucent, Ericsson |
| April 2010 | Optical and transport equipment | Ciena |
| July 2010 | IP/MPLS/Ethernet/Evolved Packet Core | Alcatel-Lucent, Juniper, Cisco |

The table shows the selected players in AT&T's domain supplier programme announced to date.

AT&T has stated that there will likely be eight domain supplier categories overall so four more have still to be detailed.

Looking at the list, several thoughts arise:

- AT&T has already announced wireless and wireline infrastructure providers whose equipment spans the access network all the way to ultra long-haul. The networking technologies also address the photonic layer to IP or layer 3.

- Alcatel-Lucent and Ericsson already play in two domains while no Asian vendor has yet been selected.

- One or two more players may be added to the wireline access and optical and transport infrastructure domains but this part of the network is pretty much done.

So what domains are left? Peter Jarich, service director at market research firm Current Analysis, suggests the following:

- Datacentre

- OSS/BSS

- IP Service Layer (IP Multimedia Subsystem, subscriber data management, service delivery platform)

- Voice Core (circuit, softswitch)

- Content Delivery (IP TV, etc.)

AT&T was asked to comment but the operator said that it has not detailed any domains beyond those that have been announced.

ROADMs: reconfigurable but still not agile

Part 2: Wavelength provisioning and network restoration

How are operators using reconfigurable optical add-drop multiplexers (ROADMs) in their networks? And just how often are their networks reconfigured? Gazettabyte spoke to AT&T and Verizon Business.

Operators rarely make grand statements about new developments or talk in terms that could be mistaken for hyperbole.

“You create new paths; the network is never finished”

Glenn Wellbrock, Verizon Business

AT&T’s Jim King certainly does not. When questioned about the impact of new technologies, his answers are thoughtful and measured. Yet when it comes to developments at the photonic layer, and in particular next-generation reconfigurable optical add-drop multiplexers (ROADMs), his tone is unmistakable.

“We really are at the cusp of dramatic changes in the way transport is built and architected,” says King, executive director of new technology product development and engineering at AT&T Labs.

ROADMs are now deployed widely in operators’ networks.

AT&T’s ultra-long-haul network is all ROADM-based as are the operator’s various regional networks that bring traffic to its backbone network.

Verizon Business has over 2,000 ROADMs in its medium-haul metropolitan networks. “Everywhere we deploy FiOS [Verizon’s optical access broadband service] we put a ROADM node,” says Glenn Wellbrock, director of backbone network design at Verizon Business.

“FiOS requires a lot of bandwidth to a lot of central offices,” says Wellbrock. Whereas before, one or two OC-48 links may have been sufficient, now several 10 Gigabit-per-second (Gbps) links are needed, for redundancy and to meet broadcast video and video-on-demand requirements.

According to Infonetics Research, the optical networking equipment market has been growing at an annual compound rate of 8% since 2002 while ROADMs have grown at 46% annually between 2005 and 2009. Ovum, meanwhile, forecasts that the global ROADM market will reach US$7 billion in 2014.

While lacking a rigid definition, a ROADM refers to a telecom rack comprising optical switching blocks (wavelength-selective switches, or WSSs, that connect lightpaths to fibres), optical amplifiers, optical channel monitors, and control plane and management software. Some vendors also include optical transponders.

ROADMs benefit the operators’ networks by allowing wavelengths to remain in the optical domain, passing through intermediate locations without requiring the use of transponders and hence costly optical-electrical conversions. ROADMs also replace the previous arrangement of fixed optical add-drop multiplexers (OADMs), external optical patch panels and cabling.

Wellbrock estimates that with FiOS, ROADMs have halved costs. “Beforehand we used OADMs and SONET boxes,” he says. “Using ROADMs you can bypass any intermediate node; there is no SONET box and you save on back-to-back transponders.”

Verizon has deployed ROADMs from Tellabs, with its 7100 optical transport series, and Fujitsu with its 9500 packet optical networking platform. The first generation Verizon platform ROADMs are degree-4 with 100GHz dense wavelength division multiplexing (DWDM) channel spacings while the second generation platforms have degree-8 and 50GHz spacings. The degree of a WSS-enabled ROADM refers to the number of directions an optical lightpath can be routed.

Network adaptation

Wavelength provisioning and network restoration are the two main events requiring changes at the photonic layer.

Provisioning is used to deliver new bandwidth to a site or to accommodate changes in the network due to changing traffic patterns. Operators try to forecast demand in advance but inevitably lightpaths need to be moved to achieve more efficient network routing. “You create new paths; the network is never finished,” says Wellbrock.

“We want to move around those wavelengths just like we move around channels or customer VPN circuits in today’s world”

Jim King, AT&T Labs

In contrast, network restoration is all about resuming services after a transport fault occurs, such as a site going offline after a fibre cut. Restoration differs from network protection, which involves much faster service restoration – under 50 milliseconds – and is handled at the electrical layer.

If the fault can be fixed within a few hours and the operator’s service level agreement with a customer will not be breached, engineers are sent to fix the problem. If the fault is at a remote site and fixing it will take days, a restoration event is initiated to reroute the wavelength at the physical layer. But this is a highly manual process. A new wavelength and new direction need to be programmed and engineers are required at both route ends. As a result, established lightpaths are changed infrequently, says Wellbrock.

At first sight this appears perplexing given the ‘R’ in ROADMs. Operators have also switched to using tunable transponders, another core component needed for dynamic optical networking.

But the issue is that when plugged into a ROADM, tunability is lost because the ROADM restricts the operating wavelength. The lightpath's direction is also fixed. “If you take a tunable transponder that can go anywhere and plug it into Port 2 facing west, say, that is the only place it can go at that [network] ingress point,” says Wellbrock.

When the wavelength passes through intermediate ROADM stages – and in the metro, for example, 10 to 20 ROADM stages can be encountered - the lightpath’s direction can at least be switched but the wavelength remains fixed. “At the intermediate points there is more flexibility, you can come in on the east and send it out west but you can’t change the wavelength; at the access point you can’t change either,” says Wellbrock.

“Should you not be able to move a wavelength deployed on one route onto another more efficiently? Heck, yes,” says King. “We want to move around those wavelengths just like we move around channels or customer VPN circuits in today’s world.”

Moving to a dynamic photonic layer is also a prerequisite for more advanced customer services. “If you want to do cloud computing but the infrastructure is [made up of] fixed ‘hard-wired’ connections, that is basically incompatible,” says King. “The Layer 1 cloud should be flexible and dynamic in order to enable a much richer set of customer applications.”

To this end operators are looking to next-generation WSS technology that will enable ROADMs to change a signal’s direction and wavelength. Known as colourless and directionless, these ROADMs will help enable automatic wavelength provisioning and automatic network restoration, circumventing manual servicing. To exploit such ROADMs, advances in control plane technology will be needed (to be discussed in Part 3) but the resulting capabilities will be significant.
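The constraints Wellbrock describes can be captured in a toy model. The sketch below is illustrative only: the class, function and channel names are hypothetical, and it assumes, as a simplification, that a colourless and directionless ROADM lifts both restrictions at the access (add/drop) point while intermediate nodes can still only switch direction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Lightpath:
    wavelength: str  # hypothetical channel label, e.g. "ch-21"
    direction: str   # e.g. "east" or "west"


def change_allowed(current: Lightpath, requested: Lightpath, node_role: str,
                   colourless_directionless: bool = False) -> bool:
    """Can the change be made remotely, without an engineer on site?

    node_role is "access" (the ingress/add-drop point) or "intermediate".
    """
    if node_role not in ("access", "intermediate"):
        raise ValueError("node_role must be 'access' or 'intermediate'")

    wavelength_changes = current.wavelength != requested.wavelength
    direction_changes = current.direction != requested.direction

    if node_role == "access":
        if colourless_directionless:
            return True  # assumed: next-gen port can retune and re-point
        # Current ROADMs: the port fixes both wavelength and direction.
        return not (wavelength_changes or direction_changes)

    # Intermediate node: direction can be switched, wavelength cannot.
    return not wavelength_changes


now = Lightpath("ch-21", "west")
print(change_allowed(now, Lightpath("ch-21", "east"), "access"))
print(change_allowed(now, Lightpath("ch-21", "east"), "intermediate"))
print(change_allowed(now, Lightpath("ch-34", "east"), "access",
                     colourless_directionless=True))
```

The model ignores everything a real control plane must handle, such as wavelength contention and optical reach, but it shows why a tunable transponder gains little until the add/drop port itself becomes flexible.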

“The ability to deploy an all-ROADM mesh network and remotely control it, to build what we need as we need it, and reconfigure it when needed, is a tremendously powerful vision,” says King.

When?

Verizon’s Wellbrock expects such next-generation ROADMs to be available by 2012. “That is when we will see next-generation long-haul systems,” he says, adding that the technology is available now but it is still to be integrated.

King is less willing to commit to a date and is cautious about some of the vendors’ claims. “People tell me 100Gbps is ready today,” he quipped.

Other sections of this briefing

Part 1: Still some way to go

Part 3: To efficiency and beyond

Verizon plans coherent-optimised routes

"Next-gen lines will be coherent only"

Glenn Wellbrock, Verizon Business

Muxponders at 40Gbps

Given the expense of OC-768 very short reach transponders, Verizon is a keen proponent of 4x10Gbps muxponders. Instead of using the OC-768 client side interface, Verizon uses 4x10Gbps pluggables which are multiplexed into the 40Gbps line-side interface. The muxponder approach is even more attractive when compared with 40Gbps IP core router interfaces, which are considerably more expensive than 4x10Gbps pluggables.

DQPSK will be deployed this year

Verizon has been selective in its use of differential phase-shift keying (DPSK) based 40Gbps transmission within its network. It must measure the polarisation mode dispersion (PMD) on a proposed 40Gbps route, and PMD's variable nature means that impairment issues can arise over time. For this reason Verizon favours differential quadrature phase-shift keying (DQPSK) modulation.

According to Wellbrock, DPSK has a typical PMD tolerance of 4 ps while DQPSK is closer to 8 ps. In contrast, 10Gbps DWDM systems have around 12 ps. “That [8 ps of DQPSK] is the right ballpark figure,” he says, pointing out that a route's PMD must still be measured.
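A simple way to see what these tolerances mean for route planning is to check a measured PMD value against each figure. The sketch below uses Wellbrock's typical numbers as hard thresholds, which is a simplification, and the route PMD value is hypothetical; the function and dictionary names are illustrative.

```python
from typing import List

# Typical PMD tolerances quoted by Wellbrock, in picoseconds. Treating
# them as hard pass/fail limits is an assumption made for illustration.
PMD_TOLERANCE_PS = {
    "40G DPSK": 4.0,
    "40G DQPSK": 8.0,
    "10G DWDM": 12.0,
}


def formats_supported(route_pmd_ps: float) -> List[str]:
    """Return the formats whose typical tolerance covers the measured PMD."""
    if route_pmd_ps < 0:
        raise ValueError("route_pmd_ps must be non-negative")
    return [name for name, tolerance in PMD_TOLERANCE_PS.items()
            if route_pmd_ps <= tolerance]


measured_pmd_ps = 6.0  # hypothetical measurement for a proposed route
print(formats_supported(measured_pmd_ps))
```

In practice PMD varies over time, so a planner would presumably want margin below these figures rather than a value sitting right at the limit.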

Verizon is testing the technology in its labs and Wellbrock says Verizon will deploy 40Gbps DQPSK technology this year.

Cost of 100Gbps

Verizon Business has already deployed Nortel’s 100Gbps dual-polarization quadrature phase-shift keying (DP-QPSK) coherent system in Europe, connecting Frankfurt and Paris. However, given 100Gbps is at the very early stages of development it will take time to meet the goal of costing 2x 40Gbps.

That said, Verizon expects at least one other system vendor to have a 100Gbps system available for deployment this year. And around mid-2011, at least three 300-pin module makers will likely have products. It will be the advent of 100Gbps modules and the additional 100Gbps systems they will enable that will reduce the price of 100Gbps. This has already happened with 40Gbps line side transponders; with 100Gbps the advent of 300-pin MSAs will happen far more quickly, says Wellbrock.

Next-gen routes coherent only

When Verizon starts deploying its next-generation fibre routes they will be optimised for 100Gbps coherent systems. This means that no dispersion compensation fibre will be used on the links; instead, the 100Gbps receiver’s electronics will perform the dispersion compensation.

The routes will accommodate 40Gbps transmission but only if the systems use coherent detection. Moreover, much care will be needed in how these links are architected since they will need to comply with future higher-speed optical transmission schemes.

Verizon expects to start such routes in 2011 and “certainly” in 2012.

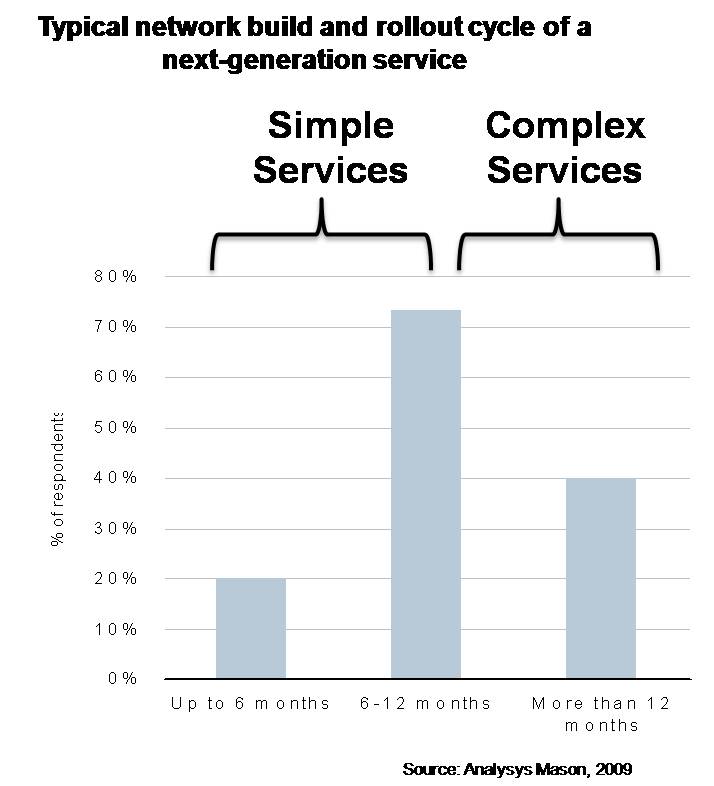

Service providers' network planning in need of an overhaul

These are the findings of an operator study conducted by Analysys Mason on behalf of Amdocs, the business and operational support systems (BSS/OSS) vendor.

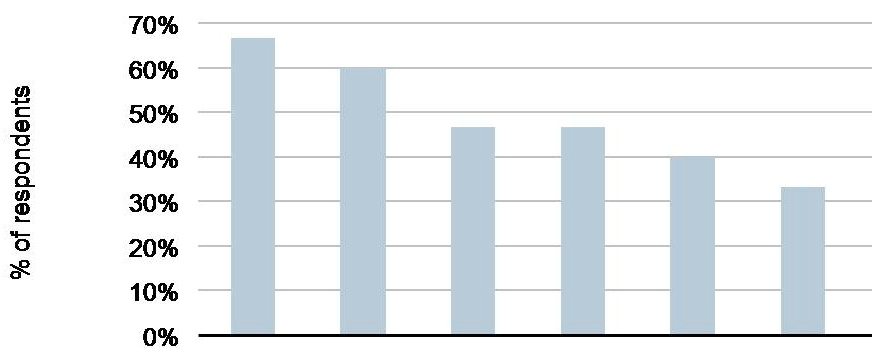

Columns (left to right): 1) Stove-pipe solutions and legacy systems with no time-lined consolidated view 2) Too much time spent on manual processes 3) Too much time (or too little time) and investment on integration efforts with different OSS 4) Lack of consistent processes or tools to roll-out same resources/technologies 5) Competition difficulties 6) Delays in launching new services. Source: Analysys Mason

What is network planning?

Every service provider has a network planning organisation, connected to engineering but a separate unit. According to Mark Mortensen, senior analyst at Analysys Mason and co-author of the study, the unit typically numbers fewer than 100 staff although BT’s, for example, has 600.

"They are highly technical; you will have a ROADM specialist, radio frequency experts, someone knowledgeable on Juniper and Cisco routers," says Mortensen. "Their job is to figure out how to augment the network using the available budget."

In particular, the unit's tasks include strategic planning, doing ‘what-if’ analyses two years ahead to assess likely demand on the network. Technical planning, meanwhile, includes assessing what needs to be bought in the coming year assuming the budget comes in.

The network planners must also address immediate issues such as when an operator wins a contract and must connect an enterprise’s facilities in locations where the operator has no network presence.

“What operators did in two years of planning five years ago they are now doing in a quarter.”

Mark Mortensen, Analysys Mason

Network planning issues

- Operators have less time to plan. “What operators did in two years of planning five years ago they are now doing in a quarter,” says Mortensen. “BT wants to be able to run a new plan overnight.”

- Automated and sophisticated planning tools do not exist. The small size of the network planning group has meant OSS vendors’ attention has been focused elsewhere.

- If operators could plan forward orders and traffic with greater confidence, they could reduce the amount of extra capacity they currently have in place. This, according to Mortensen, could save operators 5% of their capital budget.

Key study findings

- Changes in budgets and networks are happening faster than ever before.

- Network planning is becoming more complex requiring the processing of many data inputs. These include how fast network resources are being consumed, by what services and how quickly the services are growing.

- As a result network planning takes longer than the very changes it needs to accommodate. “It [network planning] is a very manual process,” says Mortensen.

- Marketing people now control the budgets. This makes the network planners’ task more complex and requires interaction between the two groups. “This is not a known art and requires compromise,” he says. Mortensen admits that he was surprised by the degree to which the marketing people now control budgets.

In summary

Even if OSS vendors develop sophisticated network planning tools, it is unlikely that end users will notice a difference, says Mortensen. However, such tools will significantly affect operators’ efficiency and competitiveness.

Users will also be less frustrated when new services are launched, avoiding a repeat of the poor network performance that resulted from the huge increases in data traffic generated by the introduction of the latest smartphones. This change may not be evident to users but will be welcome nonetheless.

Study details

Analysys Mason interviewed 24 operators: 40% mobile, 50% fixed and 10% cable. A dozen were Tier One operators while two were Tier Three. The rest, Tier Two operators, are classed as having yearly revenues of between US$1bn and US$10bn. Lastly, half the operators surveyed were European while the rest were split between Asia Pacific and North America. One Latin American operator was also included.

AT&T rethinks its relationship with networking vendors

“We’ll go with only two players [per domain] and there will be a lot more collaboration.”

Tim Harden, AT&T

AT&T has changed the way it selects equipment suppliers for its core network. The development will result in the U.S. operator working more closely with vendors, and could spark industry consolidation. Indeed, AT&T claims the programme has already led to acquisitions as vendors broaden their portfolios.

The Domain Supplier programme was conjured up to ensure the financial health of AT&T’s suppliers as the operator upgrades its network to all-IP.

By working closely with a select group of system vendors, AT&T will gain equipment tailored to its requirements while shortening the time it takes to launch new services. In return, vendors can focus their R&D spending by seeing early the operator’s roadmap.

“This is a significant change to what we do today,” says Tim Harden, president, supply chain and fleet operations at AT&T. Currently AT&T, like the majority of operators, issues a request-for-proposal (RFP) before getting responses from six to ten vendors typically. A select few are taken into the operator’s labs where the winning vendor is chosen.

With the new programme, AT&T will work with players it has already chosen. “We’ll go with only two players [per domain] and there will be a lot more collaboration,” says Harden. “We’ll bring them into the labs and go through certification and IT issues.” Most importantly, operator and vendor will “interlock roadmaps”, he says.

The ramifications of AT&T’s programme could be far-reaching. The promotion of several broad-portfolio equipment suppliers into an operator’s inner circle promises them a technological edge, especially if the working model is embraced by other leading operators.

The development is also likely to lead to consolidation. Equipment start-ups will have to partner with domain suppliers if they wish to be used in AT&T’s network, or a domain supplier may decide to bring the technology in-house.

Meanwhile, selling to domain supplier vendors becomes even more important for optical component and chip suppliers.

Domain suppliers begin to emerge

AT&T first started work on the programme 18 months ago. “AT&T is on a five-year journey to an all-IP network and there was a concern about the health of the [vendor] community to help us make that transition, what with the bankruptcy of Nortel,” says Harden. The Domain Supplier programme represents 30 percent of the operator’s capital expenditure.

The operator began by grouping technologies. Initially 14 domains were identified before the list was refined to eight. The domains were not detailed by Harden but he did cite two: wireline access, and radio access including the packet core.

For each domain, two players will be chosen. “If you look at the players, all have strengths in all eight [domains],” says Harden.

AT&T has been discussing its R&D plans with the vendors, and where they have gaps in their portfolios. “You have seen the results [of such discussions] being acted out in recent weeks and months,” says Harden, who did not name particular deals.

In October Cisco Systems announced it planned to acquire IP-based mobile infrastructure provider Starent Networks, while Tellabs is to acquire WiChorus, a maker of wireless packet core infrastructure products. “We are not at liberty to discuss specifics about our customer AT&T,” says a Tellabs spokesperson. Cisco has still to respond.

Harden dismisses the suggestion that its programme will lead to vendors pursuing too narrow a focus. Vendors will be involved in a longer-term relationship, five years rather than the two or three common with RFPs, and will have an opportunity to earn back their R&D spending. “They will get to market faster while we get to revenue faster,” he says.

The operator is also keen to stress that there is no guarantee of business for a vendor selected as a domain supplier. Two are chosen for each domain to ensure competition. If a domain supplier continues to meet AT&T’s roadmap and has the best solution, it can expect to win business. Harden stresses that AT&T does not require a second-supplier arrangement here.

In September AT&T selected Ericsson as one of the domain suppliers for wireline access, while suppliers for radio access Long Term Evolution (LTE) cellular will be announced in 2010.