Broadcom’s silicon for the PCI Express 6.0 era

Broadcom has detailed its first silicon for the sixth generation of the PCI Express (PCIe 6.0) bus, developed with AI servers in mind.

The two types of PCIe 6.0 devices are a switch chip and a retimer.

Broadcom, working with Teledyne LeCroy, is also making available an interoperability development platform to aid engineers adopting the PCIe 6.0 standard as part of their systems.

Compute servers for AI are placing new demands on the PCIe bus. The standard is no longer only about connecting CPUs to peripherals; it also serves the communication needs of AI accelerator chips.

“AI servers have become a lot more complicated, and connectivity is now very important,” says Sreenivas Bagalkote, Broadcom’s product line manager for the data center solutions group.

Bagalkote describes Broadcom’s PCIe 6.0 switches as a ‘fabric’ rather than silicon to switch between PCIe lanes.

PCI Express

PCIe is a long-standing, widely adopted standard, used not only for computing and servers but across industries such as medical imaging, automotive, and storage.

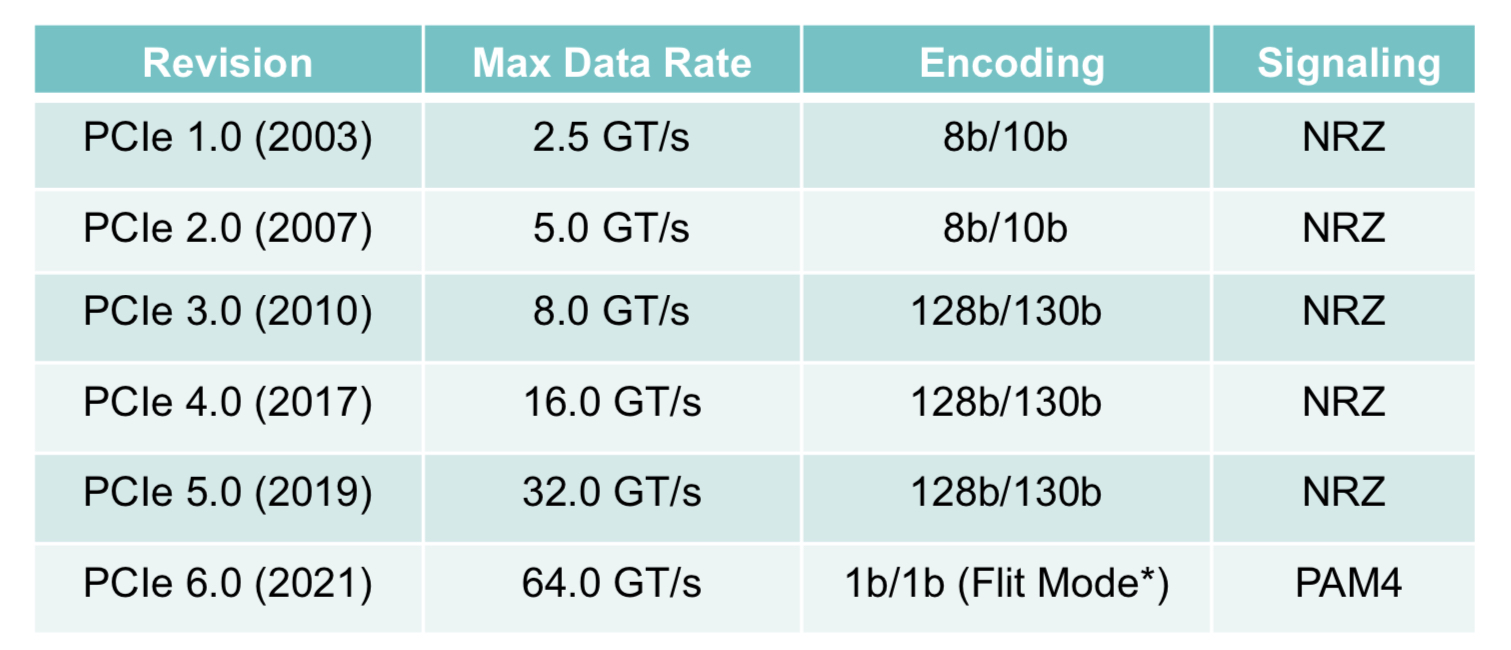

The first three generations of PCIe evolved around the CPU. There followed a long wait for PCIe 4.0, but since then, a new PCIe generation has appeared every two years, each time doubling the data transfer rate.

Now, PCIe 6.0 silicon is coming to the market while work continues to progress on the latest PCIe 7.0, with the final draft ready for member review.

The PCIe standard supports various lane configurations from one to 32 lanes. For servers, 8-lane and 16-lane configurations are common.

“Of all the transitions in PCIe technology, generation 6.0 is the most important and most complicated,” says Bagalkote.

PCIe 6.0 introduces several new features. Like previous generations, it doubles the lane rate: PCIe 5.0 supports 32 giga-transfers a second (GT/s) while PCIe 6.0 supports 64GT/s.

The 64GT/s line rate requires the use of 4-level pulse amplitude modulation (PAM-4) for the first time; all previous PCIe generations use non-return-to-zero (NRZ) signalling.
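The reason PAM-4 helps can be shown with a little arithmetic. The sketch below assumes that one transfer corresponds to one bit on a lane and that each symbol carries log2(levels) bits; the function and constant names are illustrative, not taken from the specification.

```python
import math

NRZ_LEVELS = 2   # two amplitude levels, one bit per symbol
PAM4_LEVELS = 4  # four amplitude levels, two bits per symbol


def symbol_rate_gbaud(transfer_rate_gt_s: float, levels: int) -> float:
    """Symbol rate needed to carry the given per-lane rate."""
    bits_per_symbol = math.log2(levels)
    return transfer_rate_gt_s / bits_per_symbol


pcie5_nrz = symbol_rate_gbaud(32, NRZ_LEVELS)    # PCIe 5.0 as specified
pcie6_pam4 = symbol_rate_gbaud(64, PAM4_LEVELS)  # PCIe 6.0 as specified
pcie6_nrz = symbol_rate_gbaud(64, NRZ_LEVELS)    # hypothetical: 64GT/s kept on NRZ

print(pcie5_nrz, pcie6_pam4, pcie6_nrz)
```

On these assumptions, PCIe 6.0 with PAM-4 runs at the same symbol rate as PCIe 5.0 with NRZ, whereas staying with NRZ would have doubled it. The price is smaller spacing between signal levels, which is why error correction enters the picture.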

Since PCIe must be backwards compatible, the PCIe 6.0 switch supports both PAM-4 and NRZ signalling. More sophisticated circuitry is thus required at each end of the link, as well as a forward error correction scheme, another first for PCIe.

Another new feature is flow control unit (FLIT) encoding, a network packet scheme designed to simplify data transfers.

PCIe 6.0 also adds integrity and data encryption (IDE) to secure the data on the PCIe links.

AI servers

A typical AI server includes CPUs, 8 or 16 interconnected GPUs (AI accelerators), and network interface cards (NICs) that connect to the other GPUs making up the cluster and to storage elements.

A typical server connectivity tray will likely have four switch chips, one for each pair of GPUs, says Bagalkote. Each GPU has a dedicated NIC, typically with a 400 gigabit per second (Gbps) interface. The PCIe switch chips also connect the CPUs and NVMe storage.
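As a rough illustration of why the lane rate matters for the NIC attachment described above, the sketch below finds the narrowest port whose raw rate covers a 400Gbps NIC. It is a back-of-envelope calculation under stated assumptions: one transfer is treated as one bit per lane, protocol overhead is ignored, and the function name and the list of candidate widths are illustrative rather than taken from Broadcom.

```python
CANDIDATE_WIDTHS = (1, 2, 4, 8, 16)  # assumed port widths, in lanes


def min_port_width(nic_gbps: float, lane_rate_gt_s: float) -> int:
    """Narrowest port whose raw one-way rate is at least the NIC rate."""
    for width in CANDIDATE_WIDTHS:
        if width * lane_rate_gt_s >= nic_gbps:
            return width
    raise ValueError("no candidate width is fast enough")


width_pcie5 = min_port_width(400, 32)  # PCIe 5.0 lanes at 32GT/s
width_pcie6 = min_port_width(400, 64)  # PCIe 6.0 lanes at 64GT/s

print(width_pcie5, width_pcie6)
```

On these assumptions a 400Gbps NIC needs a 16-lane port at PCIe 5.0 rates but only an 8-lane port at PCIe 6.0 rates, freeing switch lanes for other devices.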

Broadcom’s existing generation PCIe 5.0 switch ICs have been used in over 400 AI server designs, estimated by the company at 80 to 90 per cent of all deployed AI servers.

Switch and retimer chips

PCIe 6.0’s doubling of the lane data rate makes it harder to send signals across 15-inch rack servers.

Broadcom says its switch chip uses serialiser-deserialisers (serdes) that outperform the PCIe specification by 4 decibels (dB). If extra link distance is needed, Broadcom also offers PCIe 6.0 retimer chips that likewise provide an extra 4dB.

Using Broadcom’s ICs at both ends results in a 40dB link budget, whereas the specification only calls for 32dB. “This [extra link budget] allows designers to either achieve a longer reach or use cheaper PCB materials,” says Bagalkote.
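The quoted figures add up as follows. This minimal sketch assumes the 4dB margins at each end of the link simply add to the specification's budget, which matches the numbers given but is a simplification of real channel analysis; the names are illustrative.

```python
SPEC_LINK_BUDGET_DB = 32  # PCIe 6.0 requirement quoted in the article
MARGIN_PER_END_DB = 4     # Broadcom's claimed margin for each of its devices


def link_budget_db(broadcom_ends: int) -> int:
    """Total budget when 0, 1 or 2 link ends use a Broadcom device."""
    if broadcom_ends not in (0, 1, 2):
        raise ValueError("a link has two ends")
    return SPEC_LINK_BUDGET_DB + broadcom_ends * MARGIN_PER_END_DB


for ends in (0, 1, 2):
    print(ends, link_budget_db(ends))
```

With Broadcom silicon at both ends, the sketch reproduces the 40dB figure.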

The PCIe switch chip also adds telemetry and diagnostic features. Given the cost of GPUs, such features help data centre operators identify and remedy issues without taking the server offline.

“PCIe has become an important tool for diagnosing in real-time, remotely, and with less human intervention, all the issues that happen in AI servers,” says Bagalkote.

Early PCIe switches were used in a tree-like arrangement with one input – the root complex – connected via the switch to multiple end-points. Now, with AI servers, many devices connect to each other. Broadcom’s largest device – the PEX90144 – can switch between its 144 PCIe 6.0 lanes while supporting 2-, 4-, 8- or 16-lane-wide ports.

Broadcom has also announced other switch IC configurations with 104 and 88 lanes. These will be followed by 64- and 32-lane versions. All the switch chips are implemented using a 5nm CMOS process.

Broadcom is shipping “significant numbers” of samples of the chips to certain system developers.

PCIe versus proprietary interconnects

Nvidia and AMD, which both develop CPUs and AI accelerators, have their own proprietary scale-up architectures. Nvidia has NVLink, while AMD has developed the Infinity Fabric interconnect technology.

Such proprietary interconnect schemes are used in preference to PCIe to connect GPUs to each other and CPUs to GPUs. However, the two vendors use PCIe in their systems to connect to storage, for example.

Broadcom says that for the market in general, open systems have a history of supplanting closed, proprietary systems. It points to the success of its PCIe 4.0 and PCIe 5.0 switch chips and believes PCIe 6.0 will be no different.

Drut Technologies, a developer of disaggregated systems, is now shipping a PCIe 5.0-based scalable AI cluster that can support different vendors’ AI accelerators. Its system uses Broadcom’s 144-lane PCIe 5.0 switch silicon for its interconnect fabric.

Drut is working on its next-generation, PCIe 6.0-based design.

PCI-SIG releases the next PCI Express bus specification

The Peripheral Component Interconnect Express (PCIe) 6.0 specification doubles the data rate to deliver 64 giga-transfers-per-second (GT/s) per lane.

For a 16-lane configuration, the resulting bidirectional data transfer capacity is 256 gigabytes-per-second (GBps).
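The 256GBps figure follows from the lane rate. The sketch below is a raw calculation that assumes one bit per transfer per lane and ignores FLIT and protocol overhead; the function name is illustrative.

```python
def raw_bandwidth_gbytes_s(rate_gt_s: float, lanes: int,
                           bidirectional: bool = True) -> float:
    """Raw link bandwidth in gigabytes per second."""
    per_direction = rate_gt_s * lanes / 8  # 8 bits per byte
    return per_direction * 2 if bidirectional else per_direction


x16_one_way = raw_bandwidth_gbytes_s(64, 16, bidirectional=False)
x16_both_ways = raw_bandwidth_gbytes_s(64, 16)

print(x16_one_way, x16_both_ways)
```

A 16-lane PCIe 6.0 link thus carries 128GBps in each direction, or 256GBps counting both directions together.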

“We’ve doubled the I/O bandwidth in two and a half years, and the average pace is now under three years,” says Al Yanes, President of the Peripheral Component Interconnect – Special Interest Group (PCI-SIG).

The significance of the specification’s release is that PCI-SIG members can now plan their products.

Users of FPGA-based accelerators, for example, will know that in 12-18 months there will be motherboards running at such rates, says Yanes.

Applications

The PCIe bus is used widely for such applications as storage, processors, artificial intelligence (AI), the Internet of Things (IoT), mobile, and automotive.

In servers, PCIe has been adopted for storage and by general-purpose processors and specialist devices such as FPGAs, graphics processor units (GPUs) and AI hardware.

The CXL standard enables server disaggregation by interconnecting processors, accelerator devices, memory, and switching, with the protocol sitting on top of the PCIe physical layer. The NVM Express (NVMe) storage standard similarly uses PCIe.

“If you are on those platforms, you know you have a healthy roadmap; this technology has legs,” says Yanes.

A focus area for PCI-SIG is automotive, which accounts for the recent membership growth; the organisation now has 900 members. PCI-SIG has also created a new workgroup addressing automotive.

Yanes attributes the automotive industry’s interest in PCIe to the need for bandwidth and real-time analysis within cars. Advanced driver assistance systems, for example, use a variety of sensors and technologies such as AI.

PCIe 6.0

The PCIe bus uses a dual simplex scheme – serial transmissions in both directions – referred to as a lane. The bus can be configured in several lane configurations: x1, x2, x4, x8, x12, x16 and x32, although x2, x12 and x32 are rarely used.

PCIe 6.0’s 64GT/s per lane is double that of PCIe 5.0, which is already emerging in ICs and products.

IBM’s latest 7nm POWER10 16-core processor, for example, uses the PCIe 5.0 bus as part of its I/O, while the latest data processing units (DPUs) from Marvell (Octeon 10) and Nvidia (BlueField 3) also support PCIe 5.0.

To achieve the 64GT/s transfer rates, the PCIe bus has adopted 4-level pulse amplitude modulation (PAM-4) signalling. This requires forward error correction (FEC) to offset the bit error rates of PAM-4 while minimising the impact on latency. And low latency is key given the PCIe PHY layer is used by such protocols as CXL that carry coherency and memory traffic. (see IEEE Micro article.)

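The latest specification also adopts flow control unit (FLIT) encoding. Here, fixed 256-byte packets are sent: 236 bytes of transaction-layer data and 20 bytes of overhead, made up of 6 bytes of data-link-layer payload, 8 bytes of cyclic redundancy check (CRC) and 6 bytes of FEC.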

Using fixed-length packets simplifies the encoding, says Yanes. Since the PCIe 3.0 specification, 128b/130b encoding has been used for clock recovery and the aligning of data. Now with the fixed-sized packet of FLIT, no encoding bits are needed. “They know where the data starts and where it ends,” says Yanes.
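To put numbers on the two schemes, the sketch below computes the fraction of a 256-byte FLIT that carries data and, for reference, the efficiency of 128b/130b line coding. The two are not a like-for-like comparison: the 20 non-data bytes of a FLIT also cover error protection that earlier generations carried inside their packets. The names are illustrative.

```python
from fractions import Fraction

FLIT_BYTES = 256
FLIT_DATA_BYTES = 236

# Share of each FLIT that carries transaction data.
flit_data_fraction = Fraction(FLIT_DATA_BYTES, FLIT_BYTES)

# Share of line bits that carry payload under 128b/130b coding.
line_code_fraction = Fraction(128, 130)

# Data throughput of a x16 link at 64GT/s, one direction, in GB/s.
raw_x16_gbytes_s = 64 * 16 // 8
flit_x16_gbytes_s = raw_x16_gbytes_s * flit_data_fraction

print(float(flit_data_fraction), float(line_code_fraction))
print(raw_x16_gbytes_s, float(flit_x16_gbytes_s))
```

On these figures roughly 92 per cent of each FLIT is data, so a 16-lane link at 64GT/s delivers about 118GBps of FLIT data in each direction out of 128GBps raw.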

Silicon designed for PCIe 6.0 will also be able to use FLITs with earlier standard PCIe transfer speeds.

Yanes says power-saving modes have been added with the release. Both ends of a link can agree to make lanes inactive when they are not being used.

Status and developments

IP blocks for PCIe 6.0 already exist while demonstrations and technology validations will occur this year. First products using PCIe 6.0 will appear in 2023.

Yanes expects PCIe 6.0 to be used first in servers with accelerators used for AI and machine learning, and also where 800 Gigabit Ethernet will be needed.

PCI-SIG is also working to develop new cabling for PCIe 5.0 and PCIe 6.0 for sectors such as automotive. This will aid the technology’s adoption, he says.

Meanwhile, work has begun on PCIe 7.0.

“I would be ecstatic if we can double the data rate to 128GT/s in two and a half years,” says Yanes. “We will be investigating that in the next couple of months.”

One challenge with the PCIe standard is that it borrows the underlying technology from telecom and datacom. But the transfer rates it uses are higher than the equivalent rates used in telecom and datacom.

So, while PCIe 6.0 has adopted 64GT/s, the equivalent rate used in telecom is only 56Gbps. The same will apply if PCI-SIG chooses 128GT/s as the next data rate, given that telecom uses 112Gbps.

Yanes notes, however, that telecom requires much greater reaches whereas PCIe runs on motherboards, albeit ones using advanced printed circuit board (PCB) materials.

Waiting for buses: PCI Express 6.0 to arrive on time

- PCI Express 6.0 (PCIe 6.0) continues the trend of doubling the speed of the point-to-point bus every 3 years.

- PCIe 6.0 uses PAM-4 signalling for the first time to achieve 64 giga-transfers per second (GT/s).

- Given the importance of the bus for interconnect standards such as the Compute Express Link (CXL) that supports disaggregation, the new bus can’t come fast enough for server vendors.

The PCI Express 6.0 specification is expected to be completed early next year.

So says Richard Solomon, vice-chair of the PCI Special Interest Group (PCI-SIG), which oversees the long-established PCI Express (PCIe) standard and has nearly 900 member companies.

The first announced products will then follow later next year while IP blocks supporting the 6.0 standard exist now.

When the work to develop the point-to-point communications standard was announced in 2019, developing lanes capable of 64 giga-transfers per second (GT/s) in just two years was deemed ambitious, especially given that 4-level pulse amplitude modulation (PAM-4) would be adopted for the first time.

But Solomon says the global pandemic may have benefitted development, with engineers working from home and spending more time on the standard. Demand from applications such as storage and artificial intelligence (AI)/machine learning has also been a driving factor.

Applications

The PCIe standard uses a dual simplex scheme – serial transmissions in both directions – referred to as a lane. The bus can be configured in several lane configurations: x1, x2, x4, x8, x12, x16 and x32, although x2, x12 and x32 are rarely used in practice.

PCIe 6.0’s transfer rate of 64GT/s is double that of the PCIe 5.0 standard, which is already being adopted in products.

The PCIe bus is used for storage, processors, AI, the Internet of Things (IoT), mobile, and automotive, especially with the advent of advanced driver assistance systems (ADAS). “Advanced driver assistance systems use a lot of AI; there is a huge amount of vision processing going on,” says Solomon.

For cloud applications, the bus is used for servers and storage. For servers, PCIe has been adopted by general-purpose processors and more specialist devices such as FPGAs, graphics processor units (GPUs) and AI hardware.

IBM’s latest 7nm POWER10 16-core processor, for example, is an 18-billion transistor device. The chip uses the PCIe 5.0 bus as part of its input-output.

In contrast, IoT applications typically adopt older generation PCIe interfaces. “It will be PCIe at 8 gigabit when the industry is on 16 and 32 gigabit,” says Solomon.

PCIe is used for IoT because it is a widely adopted interface and because PCIe devices are accessed like memory, using a load-store approach.

The CXL standard – an important technology for the data centre that interconnects processors, accelerator devices, memory, and switching – also makes use of PCIe, sitting on top of the PCIe physical layer.

PCIe roadmap

PCIe 4.0 came out relatively late, but PCI-SIG then followed quickly with PCIe 5.0 and now the 6.0 specification.

The PCIe 6.0 schedule included an allowance for some slippage while still being ready for when the industry would need the technology. But even with the adoption of PAM-4, the standard has kept to the original ambitious schedule.

PCIe 4.0 incorporated an important change by extending the number of outstanding commands and data. Before the 4.0 specification, PCIe allowed for up to 256 commands to be outstanding. With PCIe 4.0 that was tripled to 768.

To understand why this is needed, consider a host CPU system that supports several add-in cards. When a card makes a read request, it may take the host a while to service the request, especially if the memory system is remote.

A way around that is for the add-in card to issue more commands to hide the latency.

“As the bus goes faster and faster, the transfer time goes down and the systems are frankly busier,” says Solomon. “If you are busy, I need to give you more commands so I can cover that latency.”

The PCIe technical terms are tags, with a tag identifying each command, and credits, which refer to how the bus takes care of flow control.

“You can think of tags as the sheer number of outstanding commands and credits more as the amount of overall outstanding data,” says Solomon.

Both tags and credits had to be changed to support up to 768 outstanding commands. And this protocol change has been carried over into PCIe 5.0.

In addition to the doubling in transfer rate to 32GT/s, PCIe 5.0 requires an enhanced link budget of 36dB, up from 28dB with PCIe 4.0. “As the frequency [of the signals] goes up, so does the loss,” says Solomon.

PCIe 6.0

Moving from 32GT/s to 64GT/s while still supporting the same typical distances requires PAM-4.

More sophisticated circuitry at each end of the link is needed, as well as a forward-error correction scheme, which is a first for a PCI Express standard.

One advantage is that PAM-4 is already widely used for 56 and 112 gigabit-per-second high-speed interfaces. “That is why it was reasonable to set an aggressive timescale because we are leveraging a technology that is out there,” says Solomon. Here, PAM-4 will be operated at 64Gbps.

The tags and credits have again been expanded for PCIe 6.0 to support 16,384 outstanding commands. “Hopefully, it will not be needed to be extended again,” says Solomon.
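The latency-hiding argument behind the growing tag counts can be sketched with Little's law: the number of commands in flight must cover bandwidth multiplied by latency. The read size and host latency below are assumptions chosen purely for illustration, as are the function and variable names; only the tag limits come from the figures quoted above.

```python
import math

# Limits on outstanding commands quoted in the article.
MAX_TAGS = {"before PCIe 4.0": 256, "PCIe 4.0/5.0": 768, "PCIe 6.0": 16_384}

# Assumed workload, not from the article: small reads and a slow, remote host.
READ_BYTES = 64
LATENCY_NS = 1_000


def outstanding_reads_needed(bandwidth_gbytes_s: float,
                             latency_ns: float,
                             read_bytes: int) -> int:
    """Reads that must be in flight to keep one direction of the link busy."""
    # GB/s multiplied by ns gives bytes, since 1e9 * 1e-9 = 1.
    bytes_in_flight = bandwidth_gbytes_s * latency_ns
    return math.ceil(bytes_in_flight / read_bytes)


# Raw x16 bandwidth per direction: 16GT/s gives 32GB/s, 64GT/s gives 128GB/s.
x16_pcie4 = outstanding_reads_needed(32, LATENCY_NS, READ_BYTES)
x16_pcie6 = outstanding_reads_needed(128, LATENCY_NS, READ_BYTES)

print(x16_pcie4, x16_pcie6)
```

Under these assumed figures, a 16-lane PCIe 4.0 link needs 500 reads in flight, more than the old limit of 256 but within 768, while a 16-lane PCIe 6.0 link needs 2,000, which only the 16,384 limit accommodates. Larger reads or lower latency would reduce both numbers.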

PCIe 6.0 also supports FLITs – a network packet scheme – that simplify data transfers. FLITs are introduced with PCIe 6.0, but silicon designed for PCIe 6.0 could use FLITs at lower transfer speeds.

Meanwhile, there are no signs of PCI Express needing to embrace optics as the interface speeds continue to advance.

“There is a ton of complexity and additional stuff we have to do to move to 6.0; optical would add to that,” says Solomon. “As long as people can do it on copper, they will keep doing it on copper.”

PCI-SIG is not yet talking about PCIe 7.0 but Solomon points out that every generation has doubled the transfer rate.