QSFP28 MicroMux expands 10 & 40 Gig faceplate capacity

- ADVA Optical Networking's MicroMux aggregates lower rate 10 and 40 gigabit client signals in a pluggable QSFP28 module

- ADVA is also claiming an industry first in implementing the Open Optical Line System concept that is backed by Microsoft

The need for terabits of capacity to link Internet content providers’ mega-scale data centres has given rise to a new class of optical transport platform, known as data centre interconnect.

Source: ADVA Optical Networking

Such platforms are designed to be power efficient, compact and support a variety of client-side signal rates spanning 10, 40 and 100 gigabit. But this poses a challenge for design engineers as the front panel of such platforms can only fit so many lower-rate client-side signals. This can lead to the aggregate data fed to the platform falling short of its full line-side transport capability.

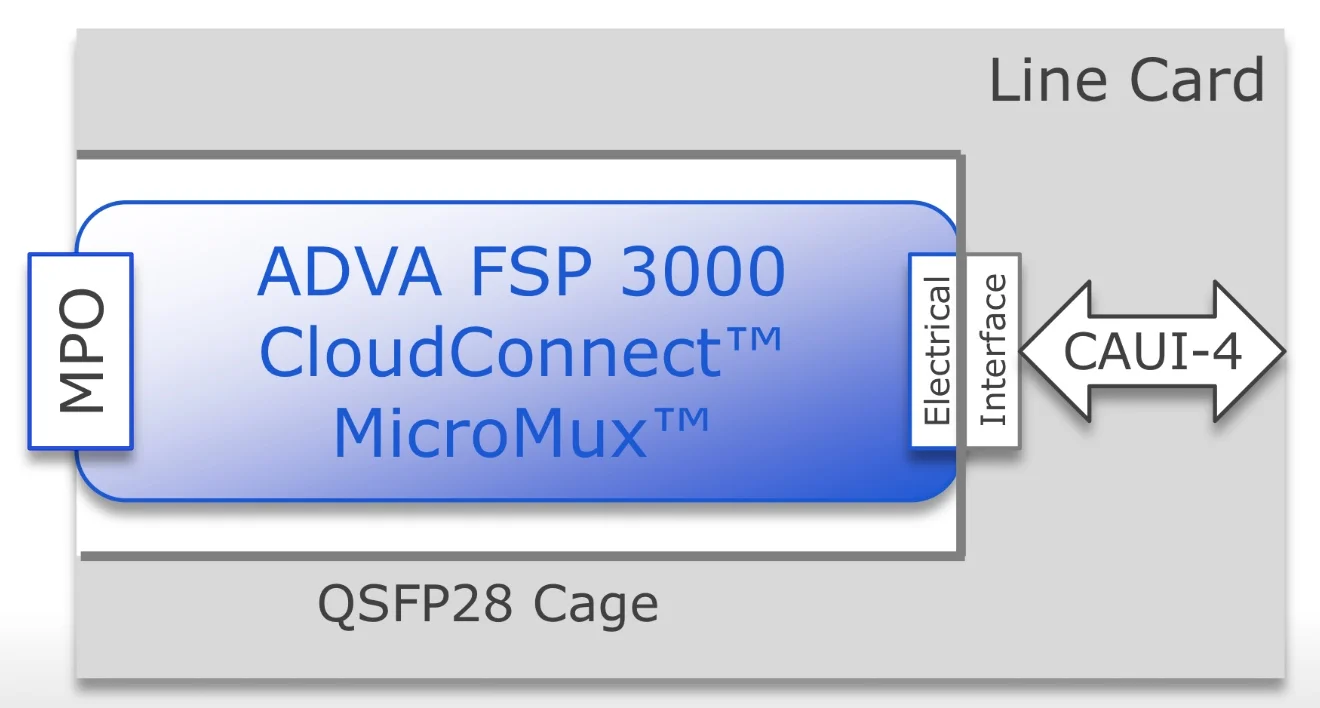

ADVA Optical Networking has tackled the problem by developing the MicroMux, a multiplexer placed within a QSFP28 module. The MicroMux module plugs into the front panel of the CloudConnect, ADVA’s data centre interconnect platform, and funnels either ten 10-gigabit ports or two 40-gigabit ports into one of the front panel’s 100-gigabit ports.

"The MicroMux allows you to support legacy client rates without impacting the panel density of the product," says Jim Theodoras, vice president of global business development at ADVA Optical Networking.

Using the MicroMux, lower-speed client interfaces can be added to a higher-speed product without stranding line-side bandwidth. An alternative approach to avoid wasting capacity is to install a lower-speed platform, says Theodoras, but then you can't scale.

ADVA Optical Networking offers four MicroMux pluggables for its CloudConnect data centre interconnect platform: short-reach and long-reach 10-by-10 gigabit QSFP28s, and short-reach and intermediate-reach 2-by-40 gigabit QSFP28 modules.

The MicroMux features an MPO connector. For the 10-gigabit products, the MPO connector supports 20 fibres, while for the 40-gigabit products, it supports four. At the other end of the QSFP28, the end that plugs into the platform, sits a CAUI-4 4x25-gigabit electrical interface (see diagram above).

“The key thing is the CAUI-4 interface; this is what makes it all work," says Theodoras.

Inside the MicroMux, signals are converted between the optical and electrical domains while a gearbox IC translates between 10- or 40-gigabit signals and the CAUI-4 format.
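The arithmetic behind the two MicroMux variants can be sketched as follows. This is an illustrative model only: the class and field names are hypothetical, and the fibre count assumes one transmit and one receive fibre per client port, which is consistent with the 20-fibre and four-fibre figures quoted above but is not stated by ADVA.

```python
from dataclasses import dataclass

HOST_LANES = 4        # CAUI-4 uses four electrical lanes
HOST_LANE_GBPS = 25   # each lane runs at 25 gigabit


@dataclass(frozen=True)
class MicroMuxVariant:
    """Hypothetical description of one MicroMux pluggable."""
    name: str
    client_ports: int
    client_rate_gbps: int

    @property
    def client_total_gbps(self) -> int:
        # Aggregate rate of all client ports on the module
        return self.client_ports * self.client_rate_gbps

    @property
    def fibres(self) -> int:
        # Assumption: one fibre per direction per client port
        return self.client_ports * 2

    def fits_host(self) -> bool:
        # The clients must not exceed the 4x25-gigabit host interface
        return self.client_total_gbps <= HOST_LANES * HOST_LANE_GBPS


VARIANTS = [
    MicroMuxVariant("10x10G", client_ports=10, client_rate_gbps=10),
    MicroMuxVariant("2x40G", client_ports=2, client_rate_gbps=40),
]

for v in VARIANTS:
    print(v.name, v.client_total_gbps, "Gbps,", v.fibres, "fibres, fits:", v.fits_host())
```

Under these assumptions the 10-by-10 variant fills the 100-gigabit port exactly, while the 2-by-40 variant uses 80 of the 100 gigabits.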

Theodoras stresses that the 10-gigabit inputs are not the old 100 Gigabit Ethernet 10x10 MSA but independent 10 Gigabit Ethernet streams. "They can come from different routers, different ports and different timing domains," he says. "It is no different than if you had 10, 10 Gigabit Ethernet ports on the front face plate."

Using the pluggables, a 5-terabit CloudConnect configuration can support up to 520, 10 Gigabit Ethernet ports, according to ADVA Optical Networking.
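As a rough check on that figure, the sketch below works out how many 100-gigabit QSFP28 ports are needed to present 520 10 Gigabit Ethernet ports. The function name is hypothetical and the calculation assumes every port is populated with a 10-by-10 MicroMux.

```python
import math


def qsfp28_ports_needed(ten_gig_ports: int, ports_per_micromux: int = 10) -> int:
    """Number of 100-gigabit QSFP28 ports needed, assuming 10x10 MicroMux modules."""
    return math.ceil(ten_gig_ports / ports_per_micromux)


cages = qsfp28_ports_needed(520)
aggregate_tbps = cages * 100 / 1000  # each QSFP28 port carries 100 gigabit

print(cages, "QSFP28 ports,", aggregate_tbps, "Tbps of client traffic")
```

The result, 52 ports carrying 5.2 Tbps, is in line with the "5-terabit" configuration quoted.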

The first products will be shipped in the third quarter to preferred customers that helped in their development, while general availability is planned for the year-end.

ADVA Optical Networking unveiled the MicroMux at OFC 2016, held in Anaheim, California in March. ADVA also used the show to detail its Open Optical Line System demonstration with switch vendor, Arista Networks.

Open Optical Line System

The Open Optical Line System is a concept being promoted by the Internet content providers to afford them greater control of their optical networking requirements.

Data centre players typically update their servers and top-of-rack switches every three years yet the optical transport functions such as the amplifiers, multiplexers and ROADMs have an upgrade cycle closer to 15 years.

“When the transponding function is stuck in with something that is replaced every 15 years and they want to replace it every three years, there is a mismatch,” says Theodoras.

Data centre interconnect line cards can be replaced more frequently with newer cards while retaining the chassis. And the CloudConnect product is also designed such that its optical line shelf can take external wavelengths from other products by supporting the Open Optical Line System. This adds flexibility and is done in a way that matches the work practices of the data centre players.

“The key part of the Open Optical Line System is the software,” says Theodoras. “The software lets that optical line shelf be its own separate node; an individual network element.”

The data centre operator can then manage the standalone CloudConnect Open Optical Line System product. Such a product can take coloured wavelength inputs and even provide feedback to the source platform, so that the wavelength is tuned to the correct channel. “It’s an orchestration and a management level thing,” says Theodoras.

Arista recently added a coherent line card to its 7500 spine switch family.

The card supports six CFP2-ACOs that have a reach of up to 2,000km, sufficient for most data centre interconnect applications, says Theodoras. The 7500 also supports the layer-two MACsec security protocol. However, it does not support flexible modulation formats. The CloudConnect does, supporting 100-, 150- and 200-gigabit formats. CloudConnect also has a 3,000km reach.

Source: ADVA Optical Networking

In the Open Optical Line System demonstration, ADVA Optical Networking squeezed the Arista 100-gigabit wavelength into a narrower 37.5GHz channel, sandwiched between two 100 gigabit wavelengths from legacy equipment and two 200 gigabit (PM-16QAM) wavelengths from the CloudConnect Quadplex card. All five wavelengths were sent over a 2,000km link.

Implementing the Open Optical Line System expands a data centre manager’s options. A coherent card can be added to the Arista 7500 and wavelengths sent directly using the CFP2-ACOs, or wavelengths can be sent over more demanding links, or ones that require greater spectral efficiency, by using the CloudConnect. The 7500 chassis could also be used solely for switching and its traffic routed to the CloudConnect platform for off-site transmission.

Spectral efficiency is important for the large-scale data centre players. “The data centre interconnect guys are fibre-poor; they typically only have a single fibre pair going around the country and that is their network,” says Theodoras.
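To put a number on spectral efficiency, the sketch below compares the 37.5GHz channel used in the demonstration with a 50GHz channel. The 50GHz figure and the 4,000GHz of usable spectrum are assumptions chosen for comparison (the latter roughly matches 40 channels at 100GHz spacing); neither comes from the demonstration itself.

```python
def spectral_efficiency(rate_gbps: float, channel_ghz: float) -> float:
    """Bits per second carried per hertz of optical spectrum."""
    return rate_gbps / channel_ghz


def channels_in(spectrum_ghz: float, channel_ghz: float) -> int:
    """How many whole channels of a given width fit in the spectrum."""
    return int(spectrum_ghz // channel_ghz)


SPECTRUM_GHZ = 4000.0  # assumed usable spectrum on one fibre pair

narrow = spectral_efficiency(100, 37.5)  # demonstration channel width
wide = spectral_efficiency(100, 50.0)    # assumed comparison width

print(f"37.5GHz: {narrow:.2f} b/s/Hz, {channels_in(SPECTRUM_GHZ, 37.5)} channels")
print(f"50GHz:   {wide:.2f} b/s/Hz, {channels_in(SPECTRUM_GHZ, 50.0)} channels")
```

On a single fibre pair, the narrower channel lets more 100-gigabit wavelengths share the same spectrum, which is the point Theodoras makes about fibre-poor operators.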

The joint demo shows that the Open Optical Line System concept works, he says: “Two years after Microsoft first talked about the concept at OFC, here we are today fully supporting it.”

ADVA's 100 Terabit data centre interconnect platform

- The FSP 3000 CloudConnect comes in several configurations

- The data centre interconnect platform scales to 100 terabits of throughput

- The chassis use a thin 0.5 RU QuadFlex card with up to 400 Gig transport capacity

- The optical line system has been designed to be open and programmable

ADVA Optical Networking has unveiled its FSP 3000 CloudConnect, a data centre interconnect product designed to cater for the needs of the different data centre players. The company has developed several sized platforms to address the workloads and bandwidth needs of data centre operators such as Internet content providers, communications service providers, enterprises, cloud and colocation players.

Certain Internet content providers want to scale the performance of their computing clusters across their data centres. A cluster is a grouping of distributed computing resources comprising a defined number of virtual machines and processor cores (see Clusters, pods and recipes explained, bottom). Yet there are also data centre operators that only need to share limited data between their sites.

ADVA Optical Networking highlights two internet content providers - Google and Microsoft with its Azure cloud computing and services platform - that want their distributed clusters to act as one giant global cluster.

“The performance of the combined clusters is proportional to the bandwidth of the interconnect,” says Jim Theodoras, senior director, technical marketing at ADVA Optical Networking. “No matter how many CPU cores or servers, you are now limited by the interconnect bandwidth.”

ADVA Optical Networking cites a Google study that involved running an application on different cluster configurations, starting with a single cluster; then two, side-by-side; two clusters in separate buildings through to clusters across continents. Google claimed the distributed clusters only performed at 20 percent capacity due to the limited interconnect bandwidth. “The reason you are hearing these ridiculous amounts of connectivity, in the hundreds of terabits, is only for those customers that want their clusters to behave as a global cluster,” says Theodoras.

Yet other internet content providers have far more modest interconnect demands. ADVA cites one, as large as the two global cluster players, that wants only 1.2 terabit-per-second (Tbps) between its sites. “It is normal duplication/ replication between sites,” says Theodoras. “They want each campus to run as a cluster but they don’t want their networks to behave as a global cluster.”

FSP 3000 CloudConnect

The FSP 3000 CloudConnect has several configurations. The company stresses that it designed CloudConnect as a high-density, self-contained platform that is power-efficient and that comes with advanced data security features.

All the CloudConnect configurations use the QuadFlex card that has an 800 Gigabit throughput: up to 400 Gigabit client-side interfaces and 400 Gigabit line rates.

The QuadFlex card is thin, measuring only a half rack unit (RU). Up to seven can be fitted in ADVA’s four rack-unit (4 RU) platform, dubbed the SH4R, for a line side transport capacity of 2.8 Tbps. The SH4R’s remaining, eighth slot hosts either one or two management controllers.

The QuadFlex line-side interface supports various rates and reaches, from 100 Gigabit ultra long-haul to 400 Gigabit metro/regional, in increments of 100 Gigabit. Two carriers, each using polarisation-multiplexing, 16 quadrature amplitude modulation (PM-16QAM), are used to achieve the 400 Gbps line rate, whereas for 300 Gbps, 8-QAM is used on each of the two carriers.
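The relationship between modulation format and line rate can be sketched as below. The 50 Gbps-per-bit scaling constant is an assumption chosen so that a 16-QAM carrier yields 200 Gbps, and the use of QPSK for the lower rates is likewise assumed rather than stated by ADVA.

```python
# Bits encoded per symbol for each modulation format
BITS_PER_SYMBOL = {"QPSK": 2, "8-QAM": 3, "16-QAM": 4}

# Assumption: each bit per symbol contributes 50 Gbps per carrier
# (polarisation multiplexing included), giving 100/150/200 Gbps carriers.
GBPS_PER_BIT_PER_SYMBOL = 50


def line_rate_gbps(modulation: str, carriers: int = 2) -> int:
    """Line rate for a given modulation format and number of carriers."""
    return carriers * BITS_PER_SYMBOL[modulation] * GBPS_PER_BIT_PER_SYMBOL


for fmt in ("16-QAM", "8-QAM", "QPSK"):
    print(fmt, line_rate_gbps(fmt), "Gbps on two carriers")
print("QPSK", line_rate_gbps("QPSK", carriers=1), "Gbps on one carrier")
```

With two carriers this gives 400, 300 and 200 Gbps, and a single QPSK carrier gives 100 Gbps, matching the 100 Gigabit steps described.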

The advantage of 8-QAM, says Theodoras, is that it is 'almost 400 Gigabit of capacity' yet it can span continents. ADVA is sourcing the line-side optics but uses its own code for the coherent DSP-ASIC and module firmware. The company has not confirmed the supplier but the design matches Acacia's 400 Gigabit coherent module that was announced at OFC 2015.

ADVA says the CloudConnect 4 RU chassis is designed for customers that want a terabit-capacity box. To achieve a terabit link, three QuadFlex cards and an Erbium-doped fibre amplifier (EDFA) can be used. The EDFA is a bidirectional amplifier design that includes an integrated communications channel and enables the 4 RU platform to achieve ultra long-haul reaches. “There is no need to fit into a [separate] big chassis with optical line equipment,” says Theodoras. Equally, data centre operators don’t want to be bothered with mid-stage amplifier sites.

Some data centre operators have already installed 40 dense WDM channels at 100GHz spacing across the C-band which they want to keep. ADVA Optical Networking offers a 14 RU configuration that uses three SH4R units, an EDFA and a DWDM multiplexer, that enables a capacity upgrade. The three SH4R units house a total of 20 QuadFlex cards that fit 200 Gigabit in each of the 40 channels for an overall transport capacity of 8 terabits.

ADVA CloudConnect configuration supporting 25.6 Tbps line side capacity. Source: ADVA Optical Networking

The last CloudConnect chassis configuration is for customers designing a global cluster. Here the chassis has 10 SH4R units housing 64 QuadFlex cards to achieve a total transport capacity of 25.6 Tbps and a throughput of 51.2 Tbps.

Also included are two EDFAs and a 128-channel multiplexer. Two EDFAs are needed because of the optical loss associated with the high number of channels, such that an EDFA is allocated to each group of 64 channels. “For the [14 RU] 40 channels [configuration], you need only one EDFA,” says Theodoras.

The vendor has also produced a similar-sized configuration for the L-band. Combining the two 40 RU chassis delivers 51.2Tbps of transport and 102.4 Tbps of throughput. “This configuration was built specifically for a customer that needed that kind of throughput,” says Theodoras.
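The capacity figures quoted for the various configurations all follow from the QuadFlex card's 400 Gigabit line rate. A minimal sketch, with hypothetical configuration labels, and counting throughput as client side plus line side, as the 800 Gigabit card figure suggests:

```python
CARD_LINE_GBPS = 400  # QuadFlex line-side capacity per card


def transport_tbps(cards: int) -> float:
    """Line-side transport capacity in Tbps for a number of QuadFlex cards."""
    return cards * CARD_LINE_GBPS / 1000


def throughput_tbps(cards: int) -> float:
    """Throughput counts client side plus line side, so twice the transport."""
    return 2 * transport_tbps(cards)


# Card counts taken from the article; the labels are illustrative
CONFIGS = {
    "single SH4R (4 RU)": 7,
    "three SH4R (14 RU)": 20,
    "ten SH4R, C-band": 64,
    "C-band plus L-band": 128,
}

for label, cards in CONFIGS.items():
    print(f"{label}: {transport_tbps(cards)} Tbps transport, "
          f"{throughput_tbps(cards)} Tbps throughput")
```

The four rows reproduce the 2.8, 8, 25.6 and 51.2 Tbps transport figures, with the last doubling to 102.4 Tbps of throughput.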

Other platform features include bulk encryption. ADVA says the encryption does not impact the overall data throughput while adding only a very slight latency hit. “We encrypt the entire payload; just a few framing bytes are hidden in the existing overhead,” says Theodoras.

The security management is separate from the network management. “The security guys have complete control of the security of the data being managed; only they can encrypt and decrypt content,” says Theodoras.

CloudConnect consumes only 0.5W per gigabit. The platform does not use electrical multiplexing of data streams over the backplane. The issue with using such a switched backplane is that power is consumed independent of traffic. The CloudConnect designers have avoided this approach. “The reason we save power is that we don’t have all that switching going on over the backplane.” Instead all the connectivity comes from the front panel of the cards.

The downside of this approach is that the platform does not support any-port to any-port connectivity. “But for this customer set, it turns out that they don’t need or care about that.”

Open hardware and software

ADVA Optical Networking claims its 4 RU basic unit addresses a sweet spot in the marketplace. The CloudConnect also has fewer inventory items for the data centre operators to manage compared to competing designs based on 1 RU or 2 RU pizza boxes, it says.

Theodoras also highlights the system’s open hardware and software design.

“We will let anybody’s hardware or software control our network,” says Theodoras. “You don’t have to talk to our software-defined networking (SDN) controller to control our network.” ADVA was part of a demonstration last year whereby an NEC and a Fujitsu controller oversaw ADVA’s networking elements.

By open hardware, what is meant is that programmers can control the optical line system used to interconnect the data centres. “We have found a way of simplifying it so it can be programmed,” says Theodoras. “We have made it more digital so that they don’t have to do dispersion maps, polarisation mode dispersion maps or worry about [optical] link budgets.” The result is that data centre operators can now access all the line elements.

“At OFC 2015, Microsoft publicly said they will only buy an open optical line system,” says Theodoras. Meanwhile, Google is writing a specification for open optical line systems dubbed OpenConfig. “We will be compliant with Microsoft and Google in making every node completely open.”

General availability of the CloudConnect platforms is expected at the year-end. “The data centre interconnect platforms are now with key partners, companies that we have designed this with,” says Theodoras.

Clusters, pods and recipes explained

A cluster is made up of a number of virtual machines and CPU cores and is defined in software. A cluster is a virtual entity, says Theodoras, unrelated to the way data centre managers define their hardware architectures.

“Clusters vary a lot [between players],” says Theodoras. “That is why we have had to make scalability such a big part of CloudConnect.”

The hardware definition is known as a pod or recipe. “How these guys build the network is that they create recipes,” says Theodoras. “A pod with this number of servers, this number of top-of-rack switches, this amount of end-of-row router-switches and this transport node; that will be one recipe.”

Data centre players update their recipes every 18 months. “Every vendor is always under pressure to have the best thing because you are only designed in for 18 months,” says Theodoras.

Vendors are informed well in advance what the next hardware requirements will be, and by when they will be needed to meet the new recipe requirements.

In summary, pods and recipes refer to how the data centre architecture is built, whereas a cluster is defined at a higher, more abstract layer.