Will AI spur revenue growth for the telcos?

- A global AI survey sponsored by Ciena highlights industry optimism.

- The telcos have unique networking assets that can serve users of AI.

- Much is still to play out and telcos have a history of missed opportunities.

The leading communications service providers have been on a decade-long journey to transform their networks and grow their revenues.

Artificial intelligence (AI) can now be added to the list of technologies the operators have been embracing.

AI is a powerful tool for improving their business efficiency. The technology is also a revenue opportunity and service providers are studying how AI traffic will impact their networks.

“This is the single biggest question that everyone in this industry is struggling with,” says Jürgen Hatheier. “How can the service providers exploit the technology to grow revenues?”

However, some question whether AI will be a telecom opportunity.

“The current hype around AI has very little to do with telcos and is focused on hyperscalers and specifically the intra-data centre traffic driven by AI model training,” says Sterling Perrin, senior principal analyst at Heavy Reading. “There is a lot of speculation that, ultimately, this traffic will spread beyond the data centre to data centre interconnect (DCI) applications. But there are too many unknowns right now.”

AI survey

Hatheier is chief technology officer, international at Ciena. He oversees 30 staff, spanning Dublin to New Zealand, who work with the operators to understand their mid- to long-term goals.

Ciena recently undertook a global survey (see note 1, bottom) about AI, similar to one it conducted two years ago that looked at the Metaverse.

Conducting such surveys complements Ciena’s direct research with the service providers. However, there is only so much time a telco’s chief strategy officer (CSO) or chief technology officer (CTO) can spend with a vendor discussing strategy, vision, and industry trends.

“The survey helps confirm what we are hearing from a smaller set,” says Hatheier.

Surveys also uncover industry and regional nuances. Hatheier cites how it is sometimes the tier-two communications service providers that are the trailblazers.

Lastly, telcos have their own pace. “It takes time to implement new services and change the underlying network architecture,” says Hatheier. “So it is good to plan.”

Findings

The sectors expected to generate the most AI traffic are financial services (46 per cent of those surveyed), media and entertainment (43 per cent), and manufacturing (38 per cent). Hatheier says these industries have already been using the technology for a while, so AI is not new to them.

For financial services, everyday uses of AI include security: detecting fraudulent transactions and monitoring video streams for anomalous behaviour at a site. The amount of traffic AI applications generate can vary greatly, says Hatheier; it is the use that matters here, not the industry.

“I would not break it down by the industries to say, okay, this industry is going to create more traffic than another,” says Hatheier. “For financial services, if it is transaction data, it’s a few lines of text, but if it is video for branch security, the data volumes are far more significant.”

AI is also set to change the media and entertainment sector, challenging the way content is consumed. Video streaming uses content delivery networks (CDNs) to store the most popular video content close to users. But AI promises to enable more personalised video, tailored for the end-user. Such content will make the traffic more dynamic.

Personalised content also extends to marketing and advertising, where such personalisation tends to achieve better results, says Hatheier.

AI is also being applied in the manufacturing sector. Examples include automating supply-chain operations, predictive maintenance, and quality assurance.

Car manufacturers check a vehicle for any blemishes at the end of a production line. This usually involves several staff and takes 10-15 minutes. Now with AI, the inspection can be completed as the car passes by. “This is a potent application that could run on infrastructure within the manufacturing site but use a service provider’s compute assets and connectivity,” says Hatheier.

The example shows how AI produces productivity gains. However, AI also promises unique abilities that staff cannot match.

Traffic trends

If the history of telecoms is anything to go by, the applications that have driven traffic in the network have rarely led to revenue growth for the service providers. Hatheier cites streaming video, gaming, and augmented reality as examples.

However, the operators have assets at the network edge and in the metro that can benefit AI usage. They also have central offices that can act as distributed data centres for the metro and network edge.

Hatheier says users have an advantage if they consume AI applications across a fibre-based broadband network. But certain countries, such as Saudi Arabia and India, mainly use wireless for connectivity.

“AI applications will need to adapt to what is available, and if people want to consume low-latency applications, there is 5G slicing,” says Hatheier. “At the end of the day, there is no way around fibre.”

Optical networking

Government policy regarding AI and regulations to ensure data does not cross borders also play a part.

“It’s an important decision criterion, as we saw in the survey response,” says Hatheier. “So private AI and local computing will be an important decision factor.”

Another critical decision influencing where data centres are built is power. “We see all the gold rush in the Nordics right now with their renewable power and cool climates,” says Hatheier. “You don’t need to cool your servers as much, and it requires a lot of connectivity.”

However, as well as these region-specific data centre builds, there will also be builds in metropolitan areas using smaller distributed data centres.

“Let’s say there are 20 sizable edge or metro compute centres for AI, and you would need three or four to run a big training job,” says Hatheier. “You will not create a permanent end-to-end connection between them because sometimes there will not be four that need to work together, but five, seven, and 11.”

Such a metro network would require reconfigurable optical add-drop multiplexer (ROADM) technology to connect wavelengths between those clusters on demand, keeping sites busy and avoiding expensive AI clusters sitting idle.
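Hatheier's point about not creating permanent end-to-end connections can be illustrated with a little arithmetic. The Python sketch below is a hypothetical illustration, not a Ciena design: it assumes each training job needs a full mesh of wavelength paths between its sites and uses a simple first-fit choice of idle sites. The function names are invented for this example, and real ROADM control planes and cluster schedulers are far more involved.

```python
from itertools import combinations
from typing import List, Optional, Set, Tuple

NUM_SITES = 20  # Hatheier's example of 20 sizable edge or metro compute centres


def mesh_links(num_sites: int) -> int:
    """Site-to-site wavelength paths needed to fully mesh num_sites sites."""
    return num_sites * (num_sites - 1) // 2


def allocate_job(busy: Set[int], size: int) -> Optional[List[int]]:
    """Hypothetical first-fit policy: claim the lowest-numbered idle sites.

    Returns the chosen sites (and marks them busy), or None if too few are idle.
    """
    idle = [site for site in range(NUM_SITES) if site not in busy]
    if len(idle) < size:
        return None
    chosen = idle[:size]
    busy.update(chosen)
    return chosen


def wavelength_paths(sites: List[int]) -> List[Tuple[int, int]]:
    """Site pairs a ROADM layer would light a wavelength between (assumed full mesh)."""
    return list(combinations(sites, 2))


def release_job(busy: Set[int], sites: List[int]) -> None:
    """Tear down a finished job's wavelengths and return its sites to the idle pool."""
    busy.difference_update(sites)


if __name__ == "__main__":
    busy: Set[int] = set()
    jobs = {size: allocate_job(busy, size) for size in (4, 5, 7)}
    for size, sites in jobs.items():
        print(f"{size}-site job on {sites}: {len(wavelength_paths(sites))} wavelength paths")
    print("11-site job fits now?", allocate_job(busy, 11) is not None)
    release_job(busy, jobs[7])
    print("11-site job fits after release?", allocate_job(busy, 11) is not None)
    print("static full mesh of all sites:", mesh_links(NUM_SITES), "paths")
```

Permanently meshing all 20 sites would tie up 190 wavelength paths, whereas a four-site job needs only six, which is why lighting and releasing wavelengths as jobs come and go is attractive.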

These are opportunities for the CSPs. And while much is still to happen, such discussions are taking place between systems vendors and the telcos.

For Heavy Reading’s Perrin, the more telling opportunity is the telcos’ own use of AI rather than the networking opportunity.

“As a vertical industry, telecom is not typically a leading-edge adopter of any new technology due to many factors, including culture, size, legacy infrastructure and processes, and government regulations,” he says. “I don’t believe AI will be any different.”

Hatheier points to the survey’s finding of general optimism that sees AI as an opportunity rather than a challenge or business risk.

“We have seen very little difference between countries,” says Hatheier. “That may have to do with the fact that emerging countries get as much attention for data centre investment as more developed ones.”

OFC 2024 industry reflections: Part 2

Gazettabyte is asking industry figures for their thoughts after attending the recent OFC show in San Diego. Here are the thoughts from Ciena, Celestial AI and Heavy Reading.

Dino DiPerna, Senior Vice President, Global Research and Development at Ciena.

Power efficiency was a key theme at OFC this year. Although it has been a prevalent topic for some time, it stood out more than usual at OFC 2024 as the industry strives to make further improvements.

There was a vast array of presentations focused on power efficiency gains and technological advancements, with sessions highlighting picojoule-per-bit (pJ/b) requirements, high-speed interconnect evolution including co-packaged optics (CPO), linear pluggable optics (LPO), and linear retimer optics (LRO), as well as new materials like thin-film lithium niobate, coherent transceiver evolution, and liquid cooling.

And the list of technologies goes on. The industry is innovating across multiple fronts to support data centre architecture requirements and carbon footprint reduction goals as energy efficiency tops the list of network provider needs.
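Picojoule-per-bit figures convert directly into module power, since power equals energy per bit multiplied by bit rate (1 pJ/bit at 1 Gb/s is 1 mW). A minimal Python sketch, using hypothetical numbers rather than any vendor's specifications:

```python
def module_power_w(energy_pj_per_bit: float, rate_gbps: float) -> float:
    """Power = energy per bit x bit rate; 1 pJ/bit at 1 Gb/s is 1 mW."""
    return energy_pj_per_bit * rate_gbps / 1000.0


def energy_pj_per_bit(power_w: float, rate_gbps: float) -> float:
    """Inverse: the energy per bit implied by a power budget at a given rate."""
    return power_w * 1000.0 / rate_gbps


if __name__ == "__main__":
    # Hypothetical figures, for illustration only.
    print(module_power_w(15.0, 800.0))      # 12.0 W for an 800G module at 15 pJ/bit
    print(module_power_w(15.0, 1600.0))     # 24.0 W: same pJ/bit at twice the rate
    print(energy_pj_per_bit(12.0, 1600.0))  # 7.5 pJ/bit needed to hold 12 W at 1.6T
```

The last line shows why energy per bit must keep falling: holding a module's power flat while doubling its rate means halving its pJ/bit.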

Another hot topic at OFC was overcoming network scale challenges with various explorations in new frequency bands or fibre types.

One surprise from the show was learning of the achievement of less than 0.11dB/km loss for hollow-core optical fibre, which was presented in the post-deadline session. This achievement offers a new avenue to address the challenge of delivering the higher capacities required in future networks. So, it is one to keep an eye on for sure.
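What a lower attenuation buys can be estimated with simple link-budget arithmetic. The sketch assumes 0.20 dB/km for standard single-mode fibre near 1550 nm, a hypothetical 80 km span and a hypothetical 20 dB loss budget, and it counts fibre attenuation only, ignoring splices, connectors and amplifier placement.

```python
HOLLOW_CORE_DB_PER_KM = 0.11  # the sub-0.11 dB/km result reported at OFC 2024 (an upper bound)
SMF_DB_PER_KM = 0.20          # assumption: typical standard single-mode fibre near 1550 nm


def span_loss_db(atten_db_per_km: float, length_km: float) -> float:
    """Fibre attenuation alone; ignores splices, connectors and other losses."""
    return atten_db_per_km * length_km


def fraction_remaining(loss_db: float) -> float:
    """Convert a loss in dB into the fraction of optical power that survives."""
    return 10 ** (-loss_db / 10)


def reach_km(loss_budget_db: float, atten_db_per_km: float) -> float:
    """Unamplified distance that a given loss budget allows."""
    return loss_budget_db / atten_db_per_km


if __name__ == "__main__":
    for name, atten in (("hollow-core", HOLLOW_CORE_DB_PER_KM), ("standard SMF", SMF_DB_PER_KM)):
        loss = span_loss_db(atten, 80.0)
        print(f"{name}: 80 km span loses {loss:.1f} dB, "
              f"{fraction_remaining(loss):.1%} of power remains, "
              f"a 20 dB budget reaches {reach_km(20.0, atten):.0f} km")
```

Because loss in dB translates exponentially into linear power, the gap in surviving power between the two fibres widens quickly with distance.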

Preet Virk, Co-founder and Chief Operating Officer at Celestial AI.

This year’s OFC was all about AI infrastructure. Since it is an optical conference, the focus is on optical connectivity. A common theme was how interconnect bandwidth is the oxygen for AI infrastructure. Celestial AI agrees fully with this and adds the memory capacity issue to deal with the Memory Wall problem.

Traditionally, OFC has focused on inter- and intra-data centre connectivity. This year’s OFC clarified that chip-to-chip connectivity is also a critical bottleneck. We discussed our high-bandwidth, low-latency, and low-power photonic fabric solutions for compute-to-memory and compute-to-compute connectivity, which were well received at the show.

It seemed that we were the only company with optical connectivity that satisfies bandwidths for high-bandwidth memory—HBM3 and the coming HBM4—with our optical chiplet.

Sterling Perrin, Senior Principal Analyst, Heavy Reading.

OFC is the premier global event for the optics industry and the place to go to get up to speed quickly on trends that will drive the optics industry through the year and beyond. There’s always a theme that ties optics into the overall communications industry zeitgeist. This year’s theme, of course, is AI. OFC themes are sometimes a stretch – think connected cars – but this is not the case for the role of optics in AI where the need is immediate. And the role is clear: higher capacities and lower power consumption.

The fact that OFC took place one week after Nvidia’s GTC event, during which President and CEO Jensen Huang unveiled the Grace-Blackwell Superchip, was a perfect catalyst for discussions about the urgency for 800 gigabit and 1.6 terabit connectivity within the data centre.

At a Sunday workshop on linear pluggable optics (LPO), Alibaba’s Chongjin Xie presented a slide comparing LPO and 400 gigabit DR4 that showed 50 per cent reduction in power consumption, a 100 per cent reduction in latency, and a 30 per cent reduction in production cost. But, as Xie and many others noted throughout the conference, LPO feasibility at 200 gigabit per lane remains a major industry challenge that has yet to be solved.

Another topic of intense debate within the data centre is InfiniBand versus Ethernet. InfiniBand delivers high capacity and extremely low latency required for AI training, but it’s expensive, highly complex, and closed. The Ultra Ethernet Consortium aims to build an open, Ethernet-based alternative for AI and high-performance computing. But Nvidia product architect, Ashkan Seyedi, was skeptical about the need for high-performance Ethernet. During a media luncheon, he noted that InfiniBand was developed as a high-performance, low-latency alternative to Ethernet for high-performance computing. Current Ethernet efforts, therefore, are largely trying to re-create InfiniBand, in his view.

The comments above are all about connectivity within the data centre. Outside the data centre, OFC buzz was harder to find. What about AI and data centre interconnect? It’s not here yet. Connectivity between racks and AI clusters is measured in meters for many reasons. There was much talk about building distributed data centres in the future as a means of reducing the demands on individual power grids, but it’s preliminary at this point.

While data centres strive toward 1.6 terabit, 400 gigabit seems to be the data rate of highest interest for most telecom operators (i.e., non-hyperscalers), with pluggable optics as the preferred form factor. I interviewed the OIF’s inimitable Karl Gass, who was dressed in a shiny golden suit, about their impressive coherent demo that included 23 suppliers and demonstrated 400ZR, 400G ZR+, 800ZR, and OpenROADM.

Lastly, quantum safe networking popped up several times at Mobile World Congress this year and the theme continued at OFC. The topic looks poised to move out of academia and into networks, and optical networking has a central role to play. I learned two things.

First, “Q-Day”, when quantum computers can break public encryption keys, may be many years away, but certain entities such as governments and financial institutions want their traffic to be quantum safe well in advance of the elusive Q-Day.

Second, “quantum safe” may not require quantum technology though, like most new areas, there is debate here. In the fighting-quantum-without-quantum camp, Israel-based start-up CyberRidge has developed an approach to transmitting keys and data, safe from quantum computers, that it calls photonic level security.

Heavy Reading’s take on optical module trends

The industry knows what the next-generation 400-gigabit client-side interfaces will look like but uncertainty remains regarding what form factors to use. So says Simon Stanley, who has just authored a report entitled From 25/100G to 400/600G: A Competitive analysis of Optical Modules and Components.

Implementing the desired 400-gigabit module designs is also technically challenging, presenting 200-gigabit modules with a market opportunity should any slip occur at 400 gigabits.

Stanley, analyst-at-large at Heavy Reading and principal consultant at Earlswood Marketing, points to several notable developments that have taken place in the last year. For 400 gigabits, the first CFP8 modules are now available. There are also numerous suppliers of 100-gigabit QSFP28 modules for the CWDM4 and PSM4 multi-source agreements (MSAs). He also highlights the latest 100-gigabit SFP-DD MSA, and how coherent technology for line-side transmission continues to mature.

Routes to 400 gigabit

The first 400-gigabit modules using the CFP8 form factor support the 2km-reach 400GBASE-FR8 and the 10km 400GBASE-LR8, standards defined by the IEEE 802.3bs 400 Gigabit Ethernet Task Force. The 400-gigabit FR8 and LR8 employ eight 50Gbps wavelengths (in each direction) over a single-mode fibre.

But while the CFP8 is the first main form factor to deliver 400-gigabit interfaces, it is not the form factor of choice for the data centre operators. Rather, interest is centred on two emerging modules: the QSFP-DD that supports double the electrical signal lanes and double the signal rates of the QSFP28, and the octal small form factor pluggable (OSFP) MSA.

“There is significant investment going into the QSFP-DD and OSFP modules,” says Stanley. The OSFP is a fresh design, has a larger power envelope - of the order of 15W compared to the 12W of the QSFP-DD - and has a roadmap that supports 800-gigabit data rates. In contrast, the QSFP-DD is backwards compatible with the QSFP and that has significant advantages.

“Developers of semiconductors and modules are hedging their bets which means they have got to develop for the QSFP-DD, so that is where the bulk of the development work is going,” says Stanley. “But you can put the same electronics and optics in an OSFP.”

Given there is no clear winner, both will likely be deployed for a while. “Will QSFP-DD win out in terms of high-volumes?” says Stanley. “Historically, that says that is what is going to happen.”

The technical challenges facing component and module makers are achieving 100-gigabit-per-wavelength for 400 gigabits and fitting them in a power- and volume-constrained optical module.

The IEEE 400 Gigabit Ethernet Task Force has also defined the 400GBASE-DR4, which has an optical interface comprising four single-mode fibres, each carrying 100 gigabits, with a reach of up to 500m.

“The big jump for 100 gigabits was getting 25-gigabit components cost-effectively,” says Stanley. “The big challenge for 400 gigabits is getting 100-gigabit-per-wavelength components cost effectively.” This requires optical components that will work at 50 gigabaud coupled with 4-level pulse-amplitude modulation (PAM-4) that encodes two bits per symbol.

That is what gives 200-gigabit modules an opportunity. Instead of 4x50 gigabaud and PAM-4 for 400 gigabits, a 200-gigabit module can use existing 25-gigabit optics and PAM-4. “You get the benefit of 25-gigabit components and a bit of a cost overhead for PAM-4,” says Stanley. “How big that opportunity is depends on how quickly people execute on 400-gigabit modules.”
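The module rates discussed above all follow from lanes × symbol rate × bits per symbol. A minimal Python sketch using nominal figures; real interfaces run each lane somewhat faster to carry forward-error-correction overhead, and the `LaneConfig` type is a hypothetical helper, not part of any MSA.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LaneConfig:
    name: str
    lanes: int            # parallel wavelengths or fibres
    gbaud: float          # nominal symbol rate per lane, in gigabaud
    bits_per_symbol: int  # 1 for NRZ, 2 for PAM-4

    def rate_gbps(self) -> float:
        """Nominal aggregate rate, ignoring FEC and line-coding overhead."""
        return self.lanes * self.gbaud * self.bits_per_symbol


CONFIGS = [
    LaneConfig("100G CWDM4/PSM4: 4 x 25G NRZ", 4, 25.0, 1),
    LaneConfig("200G: 25G-class optics with PAM-4", 4, 25.0, 2),
    LaneConfig("400GBASE-FR8/LR8: 8 x 50G PAM-4", 8, 25.0, 2),
    LaneConfig("400GBASE-DR4: 4 x 100G at 50 gigabaud PAM-4", 4, 50.0, 2),
]

if __name__ == "__main__":
    for cfg in CONFIGS:
        print(f"{cfg.name}: {cfg.rate_gbps():.0f} Gb/s nominal")
```

The 200-gigabit row makes Stanley's point: it reuses the symbol rate of existing 25-gigabit optics and gets its doubling from PAM-4, whereas 400GBASE-DR4 needs components that run twice as fast.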

The first 200-gigabit modules using the QSFP56 form factor are starting to sample now, he says.

100-Gigabit

A key industry challenge at 100 gigabits is meeting demand, and this is likely to tax the module suppliers for the rest of this year and next. Manufacturing volumes are increasing, in part because the optical module leaders are installing more capacity and in part because many smaller vendors are entering the marketplace.

Traditionally, end users buying a switch populated only some of its ports because of the up-front costs, adding more modules as traffic grew. Now, internet content providers turn on entire data centres filled with equipment that is fully populated with modules. “The hyper-scale guys have completely changed the model,” says Stanley.

The 100-gigabit module market has been coming for several years and has finally reached relatively high volumes. Stanley attributes this not just to the volumes needed by the large-scale data centre operators but also the fact that 100-gigabit modules have reached the right price point. Another indicator of the competitive price of 100-gigabit is the speed at which 40-gigabit technology is starting to be phased out.

Developments such as silicon photonics and smart assembly techniques are helping to reduce the cost of 100-gigabit modules, says Stanley, and this will be helped further with the advent of the new SFP-DD MSA.

SFP-DD

The double-density SFP (SFP-DD) MSA was announced in July. It is the next step after the SFP28, similar to the QSFP-DD being an advance on the QSFP28. And just as the 100-gigabit QSFP28 can be used in breakout mode to interface to four 25-gigabit SFP28s, the 400-gigabit QSFP-DD promises to perform a similar breakout role interfacing to SFP-DD modules.

Stanley sees the SFP-DD as a significant development. “Another way to reduce cost apart from silicon photonics and smart assembly is to cut down the number of lasers,” he says. The number of lasers used for 100 gigabits can be halved, from four to two, using 28-gigabaud signalling and PAM-4. Existing examples of two-wavelength, PAM-4-based 100-gigabit designs are Inphi’s ColorZ module and Luxtera’s CWDM2.

The industry’s embrace of PAM-4 is another notable development of the last year. The debate about the merits of 56-gigabaud non-return-to-zero (NRZ) signalling versus PAM-4, with its need for forward-error correction and its extra latency, has largely disappeared, he says.
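The laser-count and signalling arguments reduce to two relations: an M-level signal carries log2(M) bits per symbol, and each wavelength needs its own laser. A Python sketch with nominal rates that ignores FEC overhead; the function names are hypothetical.

```python
import math


def symbol_rate_gbaud(bit_rate_gbps: float, levels: int) -> float:
    """Symbol rate needed: each symbol of an M-level signal carries log2(M) bits."""
    return bit_rate_gbps / math.log2(levels)


def lasers_needed(total_gbps: float, per_laser_gbps: float) -> int:
    """One laser per wavelength; round up, since a partial wavelength still needs a laser."""
    return math.ceil(total_gbps / per_laser_gbps)


if __name__ == "__main__":
    for label, levels in (("NRZ", 2), ("PAM-4", 4)):
        print(f"56 Gb/s with {label}: {symbol_rate_gbaud(56, levels):.0f} gigabaud")
    print("lasers for 100G at 25G per wavelength (NRZ):", lasers_needed(100, 25))
    print("lasers for 100G at 50G per wavelength (PAM-4):", lasers_needed(100, 50))
```

PAM-4 halves the symbol rate for a given bit rate, which is what lets roughly 28-gigabaud optics carry 50 gigabits per wavelength and so halve the laser count.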

Coming of age

Stanley describes the coherent technology used for line-side transmissions as coming of age. Systems vendors have put much store in owning the technology to enable differentiation but that is now changing. To the well-known merchant coherent digital signal processing (DSP) players, NTT Electronics (NEL) and Inphi, can now be added Ciena, which has made its WaveLogic Ai coherent DSP available to three optical module partners: Lumentum, NeoPhotonics and Oclaro.

CFP2-DCO module designs, where the DSP is integrated within the CFP2 module, are starting to appear. These support 100-gigabit and 200-gigabit line rates for metro and data centre interconnect applications. Meanwhile, the DSP suppliers are working on coherent chips supporting 400 gigabits.

Stanley says the CFP8 and OSFP modules are the candidates for future pluggable coherent module designs.

Meanwhile, the first 400-gigabit QSFP-DD and OSFP client-side modules are expected in a year’s time with volumes starting at the end of 2018 and into 2019.

As for 800-gigabit modules, that is unlikely before 2022.

“At OFC in March, a big data centre player said it wanted 800 Gigabit Ethernet modules by 2020, but it is always a question of when you want it and when you are going to get it,” says Stanley.

Books in 2015 - Final Part

Sterling Perrin, senior analyst, Heavy Reading

My ambitions to read far exceed my actual reading output, and because I have such a backlog of books on my reading list, I generally don’t read the latest.

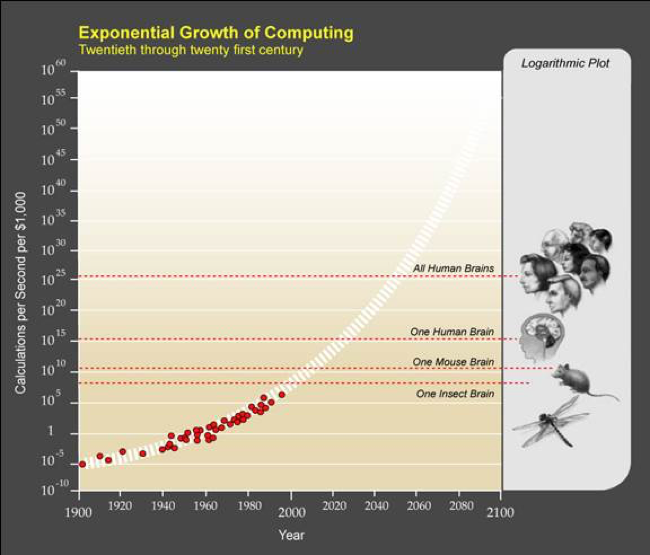

Source: The Age of Spiritual Machines

I have long been fascinated by a graphic from futurist Ray Kurzweil that depicts the exponential growth of computing and plots it against living intelligence. The graphic is from Kurzweil’s 1999 book on artificial intelligence, The Age of Spiritual Machines: When Computers Exceed Human Intelligence, which I read in 2015.

The book contains several predictions, but this one about computer intelligence vastly exceeding collective human intelligence in our own lifetimes interested me most. Kurzweil translates the brain power of living things into computational speeds and storage capacity and plots them against exponentially growing computing power, based on Moore’s law and its expected successors.

He writes that by 2020, a $1,000 personal computer will have enough speed and memory to match the human brain. But science fiction (and beyond) becomes reality quickly because computational power continues to grow exponentially while collective human intelligence continues on its plodding linear progression. The inevitable future, in Kurzweil’s scenario, blends human intelligence and AI to the point where by the end of this century, it’s no longer possible or relevant to distinguish between the two.

There have been many criticisms of Kurzweil’s theory and methodologies on AI evolution, but reading a futures book more than 15 years after publication gives you the ability to validate its predictions. On this score, Kurzweil’s record is quite amazing: self-driving cars, headset-based virtual reality gaming (which I experienced this year at the mall), tablet computing coming of age in 2009, and the coming end of Moore’s law are a few of the predictions in this book that struck me as astoundingly accurate.

Of newer books, I read Yuval Noah Harari’s Sapiens: A Brief History of Humankind (originally published in Hebrew in 2011 but first published in English in 2014). I was attracted to this book because it provides a succinct summary of millions of years of human history and, from its high-level vantage point, is able to draw fascinating conclusions about why our human species of sapiens has been so successful.

Harari’s thesis is that it’s not our thumbs, or the invention of fire, or even our languages that led to our dominance over all animals and other humans but rather the creation of fictional constructs – enabled by our languages – that unified sapiens in collective groups vastly larger than otherwise achievable.

Here, the book can strike some nerves because all religions qualify as fictional constructs, but he’s really talking about all intangible constructs under which humans can massively align themselves, including nations, empires, corporations, money and even capitalism. Without fictional constructs, he writes, it’s hard for humans to form meaningful social organizations beyond 150 people – a number also famously cited by Malcolm Gladwell in The Tipping Point.

In fiction, I completed the fifth and final published installment of George R.R. Martin’s A Song of Ice and Fire series, A Dance with Dragons. I’ve been drawn to this series in large part, I think, because the simpler medieval setting is such a stark contrast to the ultra-high-tech world in which we live and work.

I thought I had timed the reading to coincide with the release of the 6th book, The Winds of Winter, but I’ve heard that the book is delayed again. Fortunately, I’m still two seasons behind on the HBO series.

Aaron Zilkie, vice president of engineering at Rockley Photonics

I recommend the risk assessment principles in the book, Projects at Warp-Speed with QRPD: The Definitive Guidebook to Quality Rapid Product Development by Adam Josephs, Cinda Voegtli, and Orion Moshe Kopelman.

These principles provide a valuable one-stop grounding in technology risk management and prioritisation, an often under-utilised and taken-for-granted engineering practice. This is an important subject for technology and R&D managers in small-to-medium size technology companies to include in their thinking as they perform the challenging task of selecting new technologies for next-generation products and product improvements.

The book Who: The A Method for Hiring by Geoff Smart and Randy Street teaches good practices for focused hiring, to build A-teams in technology companies, a topic of critical importance for the rapid success of start-up companies that is not taught in schools.

Tom Foremski, SiliconValleyWatcher

Return of a King: The Battle for Afghanistan, 1839-42 by William Dalrymple. This is one of the best reads, an amazing story! Only one survivor on an old donkey.

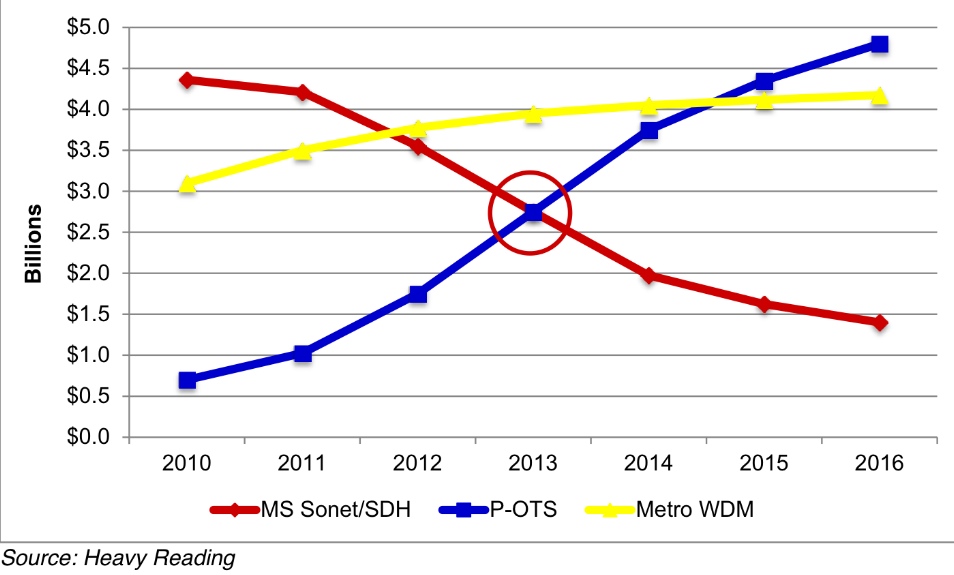

P-OTS 2.0: 60s interview with Heavy Reading's Sterling Perrin

Q: Heavy Reading claims the metro packet optical transport system (P-OTS) market is entering a new phase. What are the characteristics of P-OTS 2.0 and what first-generation platform shortcomings does it address?

A: I would say four things characterise P-OTS 2.0 and separate 2.0 from the 1.0 implementations:

- The focus of packet-optical shifts from time-division multiplexing (TDM) functions to packet functions.

- Pure-packet implementations of P-OTS begin to ramp and, ultimately, dominate.

- Switched OTN (Optical Transport Network) enters the metro, removing the need for SONET/SDH fabrics in new elements.

- 100 Gigabit takes hold in the metro.

The last two points are new functions while the first two address shortcomings of the previous generation. P-OTS 1.0 suffered because its packet side was seen as sub-par relative to Ethernet "pure plays" and also because packet technology in general still had to mature and develop - such as standardising MPLS-TP (Multiprotocol Label Switching - Transport Profile).

Your survey's key findings: What struck Heavy Reading as noteworthy?

The biggest technology surprise was the tremendous interest in adding IP/MPLS functions to transport. There was a lot of debate about this 10 years ago. Then the industry settled on a de facto standard that transport includes layers 0-2 but no higher. Now, it appears that the transport definition must broaden to include up to layer 3.

A second key finding is how quickly SONET/SDH has gone out of favour. Going forward, it is all about packet innovation. We saw this shift in equipment revenues in 2012 as SONET/SDH spend globally dropped more than 20 percent. That is not a one-time hit - it's the new trend for SONET/SDH.

Heavy Reading argues that transport has broadened in terms of the networking embraced - from layers 0 (WDM) and 1 (SONET/SDH and OTN) to now include IP/MPLS. Is the industry converging on one approach for multi-layer transport optimisation? For example, IP over dense WDM? Or OTN, Carrier Ethernet 2.0 and MPLS-TP? Or something else?

We did not uncover a single winning architecture and it's most likely that operators will do different things. Some operators will like OTN and put it everywhere. Others will have nothing to do with OTN. Some will integrate optics on routers to save transponder capital expenditure, but others will keep hardware separate but tightly link IP and optical layers via the control plane. I think it will be very mixed.

You talk about a spike in 100 Gigabit metro starting in 2014. What is the cause? And is it all coherent or is a healthy share going to 100 Gigabit direct detection?

Interest in 100 Gigabit in the metro exceeds interest in OTN in the metro - which is different from the core, where those two technologies are more tightly linked.

Cloud and data centre interconnect are the biggest drivers for interest in metro 100 Gig but there are other uses as well. We did not ask about coherent versus direct in this survey, but based on general industry discussions, I'd say the momentum is clearly around coherent at this stage - even in the metro. It does not seem that direct detect 100 Gig has a strong enough cost proposition to justify a world with two very different flavours of 100 Gig.

What surprised you from the survey's findings?

It was really the level of interest in IP functionality on transport systems that was the most surprising finding.

It opens up the packet-optical transport market to new players that are strongest on IP and also poses a threat to suppliers that were good at lower layers but have no IP expertise - they'll have to do something about that.

Heavy Reading surveyed 114 operators globally. All those surveyed were operators; no system vendors were included. The regional split was North America - 22 percent, Europe - 33 percent, Asia Pacific - 25 percent, and the rest of the world - Latin America mainly - 20 percent.

Optical industry restructuring: The analysts' view

The view that the optical industry is due a shake-up has been aired periodically over the last decade. Yet the industry's structure has remained intact. Now, with the depressed state of the telecom industry, the spectre of impending restructuring is again being raised.

In Part 2, Gazettabyte asked several market research analysts - Heavy Reading's Sterling Perrin, Ovum's Daryl Inniss and Dell'Oro's Jimmy Yu - for their views.

Part II: The analysts' view

"It is just a very slow, grinding process of adjustment; I am not sure that the next five years will be any different to what we've seen"

Sterling Perrin, Heavy Reading

Larry Schwerin, CEO of ROADM subsystem player Capella Intelligent Subsystems, believes optical industry restructuring is inevitable. Optical networking analysts largely agree with Schwerin's analysis. Where they differ is that the analysts say change is already evident and that restructuring will be gradual.

"The industry has not been in good shape for many years," says Sterling Perrin, senior analyst at Heavy Reading. "The operators are the ones with the power [in the supply chain] and they seem to be doing decently but it is not a good situation for the systems players and especially for the component vendors."

Daryl Inniss, practice leader for components at Ovum, highlights the changes taking place at the optical component layer. "There is no one dominant [optical component] supplier driving the industry that you would say: This is undeniably the industry leader," says Inniss.

A typical rule of thumb for an industry is that you need the top three [firms] to own between two thirds and 80 percent of the market, says Inniss: "These are real market leaders that drive the industry; everyone else is a specialist with a niche focus."
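Inniss's rule of thumb is easy to express as a concentration check. The sketch below sums the shares of the three largest suppliers and tests whether the total falls in the two-thirds-to-80-percent band he describes. The function names and the market shares are hypothetical, chosen only to contrast a fragmented market with a concentrated one; they are not Ovum data.

```python
def top_n_share(shares, n=3):
    """Combined market share (percent) of the n largest suppliers."""
    return sum(sorted(shares, reverse=True)[:n])


def has_clear_leaders(shares, low=200 / 3, high=80.0):
    """Rule of thumb: the top three hold between two thirds and 80% of the market."""
    return low <= top_n_share(shares) <= high


# Hypothetical market shares in percent, for illustration only.
fragmented = [12, 10, 9, 8, 7, 6, 5]
concentrated = [35, 25, 15, 10, 8, 7]
print(top_n_share(fragmented), has_clear_leaders(fragmented))
print(top_n_share(concentrated), has_clear_leaders(concentrated))
```

A fragmented component market, as Inniss describes it, fails the check because no group of three suppliers controls enough of the market to set its direction.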

But the absence of such dominant players does not mean the industry is standing still, or that component companies fail to recognise the need to adapt.

"Finisar looks more like an industry leader than we have had before, and its behaviour is that of a market leader," says Inniss. Finisar is building an integrated company to become a one-stop-shop supplier, he says, as is the newly merged Oclaro-Opnext, which is taking similar steps to become a vertically integrated company. Finisar acquired Israeli optical amplifier specialist RED-C Optical Networks in July 2012.

Capella's Schwerin also wonders about the long-term prospects of some of the smaller system vendors. Chinese vendors Huawei and ZTE now account for 30 percent of the market, while Alcatel-Lucent is the only other major vendor with double-digit share.

The rest of the market is split among numerous optical vendors. "If you think about that, if you have 5 percent or less [optical networking] market share, that really is not a sustainable business given the [companies'] overhead expenses," says Schwerin.

However, Jimmy Yu, vice president of optical transport research at Dell’Oro Group, believes there is a role for generalist and specialist systems suppliers, and that market share is not the only indicator of a company's economic health. “You have a few vendors that are healthy and have a good share of the market,” he says. “That said, when I look at some of these [smaller] vendors, I say they are better off.”

Yu cites the likes of ADVA Optical Networking and Transmode. Both are small players with less than 3 percent market share, yet they are among the most profitable system companies, with gross margins typically above 40 percent. “Do I think they are going to be around? Yes. They are both healthy and investing as needed.”

Innovation

Equipment makers are also acquiring specialist component players. Cisco Systems acquired coherent receiver specialist CoreOptics in 2010 and, more recently, silicon photonics player Lightwire. Meanwhile, Huawei acquired photonic integration specialist CIP Technologies in January 2012. "This is to acquire strategic technologies, not for revenues but to differentiate and reduce the cost of their products," says Perrin.

"There is a problem with the rate of innovation coming from the component vendors," adds Inniss. This is not a failing of the component vendors as innovation has to come from the system vendors: a device will only be embraced by equipment vendors if it is needed and available in time.

Inniss also highlights the changing nature of the market where optical networking and the carriers are just one part. This includes enterprises, cloud computing and the growing importance of content service providers such as Google, Facebook and Amazon who buy components and gear. "It is a much bigger picture than just looking at optical networking," says Inniss.

"There is no one dominant [optical component] supplier driving the industry that you would say: This is undeniably the industry leader"

Daryl Inniss, Ovum

Huawei is one system vendor targeting these broader markets, from components to switches, from consumer to the data centre core. Huawei has transformed itself from a follower to a leader in certain areas, while fellow Chinese vendor ZTE is also getting stronger and gaining market share.

Moreover, the rise of these leading Chinese system vendors will fuel the emergence of Chinese optical component players. At present the Chinese optical component players are followers, but Inniss expects this to change over the next 3-5 years, as it has at the system level.

Perrin also notes Huawei's huge emphasis on the enterprise and IT markets but highlights several challenges.

The content service providers may be a market but it is not as big an opportunity as traditional telecom. "It is also tricky for the systems providers to navigate as you really can't build all your product line to fit Google's specs and still expect to sell to a BT or an AT&T," says Perrin. That said, systems companies have to go after every opportunity they can because telecom has slowed globally so significantly, he says.

Inniss expects the big optical component players to start to distance themselves, although this does not mean their figures will improve significantly.

"This market is what it is - they [component players] will continue to have 35 percent gross margins and that is the ceiling," says Inniss. But if players want to improve their margins, they will have to invest and grow their presence in markets outside of telecom.

"I like the idea of a Cisco or a Huawei acquiring technology to use internally as a way to differentiate and innovate, and we are going to see more of that," says Perrin.

Thus the supply chain is changing, say the analysts, albeit in a gradual way; not the radical change that Capella's Schwerin suggests is coming.

"It is just a very slow, grinding process of adjustment; I am not sure that the next five years will be any different to what we've seen," says Perrin. "I just don't see why there is some catalyst that suggests it is going to be different to the past two years."

This is based on an article that appears in Optical Connections magazine for ECOC 2012.

60-second interview with Sterling Perrin

Heavy Reading has published a report Photonic Integration, Super Channels & the March to Terabit Networks. In this 60-second interview, Sterling Perrin, senior analyst at the market research company, talks about the report's findings and the technology's importance for telecom and datacom.

"PICs will be an important part of an ensemble cast, but will not have the starring role. Some may dismiss PICs for this reason, but that would be a mistake – we still need them."

Sterling Perrin, Heavy Reading

Heavy Reading's previous report on optical integration was published in 2008. What has changed?

The biggest change has been the rise of coherent detection, bringing electronics to prominence in the world of optics. This is a big shift - and it has taken some of the burden off photonic integration. Simply put, electronics has taken some of the job away from optics.

How important is optical integration for optical component players and for system vendors?

Until now, photonic integration has not been a ‘must have’ item for systems suppliers. For the most part, there have been other ways to get at lower costs and footprint reductions.

I think we are starting to see photonic integration move into the must-have category for systems suppliers, in certain applications, which means that it becomes a must-have item for the components companies that supply them.

How should one view silicon photonics, and what importance does Heavy Reading attach to Cisco Systems' acquisition of silicon photonics start-up Lightwire?

When we published the last [2008] report, silicon photonics was definitely within the hype cycle. We’ve seen the hype fade quite a bit – it’s now understood that just because a component is made with silicon, it’s not automatically going to be cheaper. Also, few in the industry continue to talk about a Moore’s Law for optics today. That said, there are applications for silicon photonics, particularly in data centre and short-reach applications, and the technology has moved forward.

Cisco’s acquisition of Lightwire is a good testament to how far the technology has come. This is a strategic acquisition, aimed at long-term differentiation, and Cisco believes that silicon photonics will help it get there.

"It will be interesting to watch what other [optical integration] M&A activity occurs, and how this activity affects the components players"

What are the main optical integration market opportunities?

In long haul, we already see applications for photonic integrated circuits (PICs). Certainly, Infinera’s PIC-based DTN and DTN-X systems stand out. But also, the OIF has specified photonic integration in its 100 Gigabit long haul, DWDM (dense wavelength division multiplexing) MSA (multi-source agreement) – it was needed to get the necessary size reduction.

Moving forward, there is opportunity for PICs in client-side modules, as PICs are the best way to reduce module sizes and improve system density. Then, beyond 100G, PICs will play a big role in super-channel-based long-haul systems, as parallel photonic integration will be used to build these super-channels.

Were you surprised by any of the report's findings?

When I start researching a report, I am always hopeful for big black-and-white kinds of findings: this is the biggest thing for the industry, or this is a dud. With photonic integration, we found such a wide array of opinions and viewpoints that, in the end, we had to place photonic integration somewhere in the middle.

It’s clear that system vendors are going to need PICs but it’s also clear that PICs alone won’t solve all the industry’s challenges. PICs will be an important part of an ensemble cast, but will not have the starring role. Some may dismiss PICs for this reason, but that would be a mistake – we still need them.

What optical integration trends and developments should be watched over the next two years?

The year started with two major system suppliers buying PIC companies: Cisco and Lightwire and Huawei and CIP Technologies. With Alcatel-Lucent having in-house abilities, and, of course, Infinera, this should put pressure on other optical suppliers to have a PIC strategy.

It will be interesting to watch what other M&A activity occurs, and how this activity affects the components players.

The editor of Gazettabyte worked with Heavy Reading in researching photonic integration for the report.

Ciena: Changing bandwidth on the fly

Ciena has announced its latest coherent chipset, which will be the foundation for its future optical transmission offerings. The chipset, dubbed WaveLogic 3, will extend the performance of its 100 Gigabit links while introducing transmission flexibility that trades capacity for reach.

Feature: Beyond 100 Gigabit - Part 1

"We are going to be deployed, [with WaveLogic 3] running live traffic in many customers’ networks by the end of the year"

Michael Adams, Ciena

"This is changing bandwidth modulation on the fly," says Ron Kline, principal analyst, network infrastructure group at market research firm, Ovum. “The capability will allow users to dynamically optimise wavelengths to match application performance requirements.”

WaveLogic 3 is Ciena's third-generation coherent chipset that introduces several firsts for the company.

- The chipset supports single-carrier 100 Gigabit-per-second (Gbps) transmission in a 50GHz channel.

- The chipset includes a transmit digital signal processor (DSP) - which can adapt the modulation schemes as well as shape the pulses to increase spectral efficiency. The coherent transmitter DSP is the first announced in the industry.

- WaveLogic 3's second chip, the coherent receiver DSP, also includes soft-decision forward error correction (SD-FEC). SD-FEC is important for high-capacity metro and regional, not just long-haul and trans-Pacific routes, says Ciena.

The two-ASIC chipset is implemented using a 32nm CMOS process. According to Ciena, the receiver DSP chip, which compensates for channel impairments, measures 18 mm sq. and is capable of 75 Tera-operations a second.

Ciena says the chipset supports three modulation formats: dual-polarisation binary phase-shift keying (DP-BPSK), dual-polarisation quadrature phase-shift keying (DP-QPSK) and dual-polarisation 16-QAM (quadrature amplitude modulation). Using a single carrier, these equate to 50Gbps, 100Gbps and 200Gbps data rates. Going to 16-QAM doubles the data rate, and with it the spectral efficiency, to 200Gbps, but it comes at a cost: a much shorter reach.

"This software programmability is critical for today's dynamic, cloud-centric networks," says Michael Adams, Ciena’s vice president of product & technology marketing.

WaveLogic 3 has also been designed to scale to 400Gbps. "This is the first programmable coherent technology scalable to 400 Gig," says Adams. "For 400 Gig, we would be using a dual-carrier, dual-polarisation 16-QAM that would use multiple [WaveLogic 3] chipsets."
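The data rates Ciena quotes follow from simple arithmetic: polarisations times bits per symbol times symbol rate, multiplied again by the number of carriers for a multi-carrier signal. The sketch below assumes a hypothetical net symbol rate of 25 gigabaud, picked only so that DP-QPSK yields 100Gbps; the article does not give WaveLogic 3's actual symbol rate, and real line rates also carry FEC and framing overhead that is ignored here.

```python
# Bits carried per symbol, per polarisation, for each format named by Ciena.
BITS_PER_SYMBOL = {"BPSK": 1, "QPSK": 2, "16-QAM": 4}

POLARISATIONS = 2  # all three formats are dual-polarisation (DP-)

# Hypothetical net symbol rate in gigabaud; FEC/framing overhead is ignored.
NET_SYMBOL_RATE_GBAUD = 25


def carrier_rate_gbps(fmt, baud=NET_SYMBOL_RATE_GBAUD):
    """Net data rate of one carrier: polarisations x bits/symbol x symbol rate."""
    return POLARISATIONS * BITS_PER_SYMBOL[fmt] * baud


def multicarrier_rate_gbps(fmt, carriers):
    """Aggregate rate when several carriers are combined into one signal."""
    return carriers * carrier_rate_gbps(fmt)


for fmt in BITS_PER_SYMBOL:
    print(f"DP-{fmt}: {carrier_rate_gbps(fmt)} Gbps")
print("Dual-carrier DP-16-QAM:", multicarrier_rate_gbps("16-QAM", 2), "Gbps")
```

Under these assumptions, the same symbol rate gives 50, 100 and 200Gbps per carrier, and two DP-16-QAM carriers give the 400Gbps that Adams describes.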

Performance

Ciena stresses that this is a technology not a product announcement. But it is willing to detail that in a terrestrial network, a single carrier 100Gbps link using WaveLogic 3 can achieve a reach of 2,500+ km. "These refer to a full-fill [wavelengths in the C-Band] and average fibre," says Adams. "This is not a hero test with one wavelength and special [low-loss] fibre.”

Metro to trans-Pacific: The different reaches and distances over terrestrial and submarine using Ciena's WaveLogic 3. SC stands for single carrier. Source: Ciena/ Gazettabyte

When the modulation is changed to BPSK, halving the data rate to 50Gbps, the reach is effectively doubled. Ciena also expects a 9,000-10,000km reach on submarine links.

The same single-carrier 50GHz channel, switched to 16-QAM, can transmit a 200Gbps signal over distances of 750-1,000km. "A modulation change [to 16-QAM] and adding a second 100 Gigabit Ethernet transceiver and immediately you get an economic improvement," says Adams.
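The capacity-for-reach trade-off can be sketched as a lookup. Spectral efficiency is simply the data rate divided by the 50GHz channel width, and a programmable transceiver could pick the densest format whose reach still covers a given path. The reach values paraphrase the terrestrial figures quoted above (2,500+km for QPSK, roughly double for BPSK, 750-1,000km for 16-QAM, using the lower bound) and should be read as rough guides. The selection policy and function names are hypothetical, not Ciena's software.

```python
CHANNEL_GHZ = 50  # fixed-grid channel width used throughout the article

# (format, single-carrier rate in Gbps, approximate terrestrial reach in km),
# ordered from highest to lowest data rate. Reach values are rough guides.
FORMATS = [
    ("DP-16-QAM", 200, 750),
    ("DP-QPSK", 100, 2500),
    ("DP-BPSK", 50, 5000),
]


def spectral_efficiency(rate_gbps, channel_ghz=CHANNEL_GHZ):
    """Bits per second per hertz: Gbps divided by GHz."""
    return rate_gbps / channel_ghz


def pick_format(path_km):
    """Hypothetical policy: the highest-capacity format whose reach covers the path."""
    for name, rate, reach in FORMATS:
        if path_km <= reach:
            return name, rate
    return None  # beyond single-carrier reach: regeneration would be needed


for name, rate, _ in FORMATS:
    print(name, spectral_efficiency(rate), "b/s/Hz")
print(pick_format(600), pick_format(1800), pick_format(4000), pick_format(8000))
```

A short metro path gets 200Gbps at 4 b/s/Hz, while a longer route falls back to QPSK or BPSK, giving up capacity to gain distance within the same 50GHz channel.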

For 400Gbps, two carriers, each 16-QAM, are needed and the distances achieved are 'metro regional', says Ciena.

The transmit DSP can also implement spectral shaping. According to Ciena, shaping the transmitted signals can yield a 20-30% bandwidth improvement (capacity increase). However, that feature will only be fully exploited once networks deploy flexible grid ROADMs.

At OFC/NFOEC, Ciena will be showing a prototype card that demonstrates the modulation changing from BPSK to QPSK to 16-QAM. "We are going to be deployed, running live traffic in many customers’ networks by the end of the year," says Adams.

Analysis

Sterling Perrin, senior analyst, Heavy Reading

Heavy Reading believes Ciena's WaveLogic 3 is an impressive development, compared to its current WaveLogic 2 and to other available coherent chipsets. But Perrin thinks the most significant WaveLogic 3 development is Ciena’s single-carrier 100Gbps debut.

Until now, Ciena has used two carriers within a 50GHz channel, each carrying 50Gbps of data.

"The dual carrier approach gave Ciena a first-to-market advantage at 100Gbps, but we have seen the vendor lose ground as Alcatel-Lucent rolled out its single carrier 100Gbps system," says Perrin in a Heavy Reading research note. "We believe that Alcatel-Lucent was the market leader in 100Gbps transport in 2011."

Other suppliers, including Cisco Systems and Huawei, have also announced single-carrier 100Gbps, and more single-wavelength 100Gbps announcements will come throughout 2012.

Heavy Reading believes the ability to scale to 400Gbps is important, as is the use of multiple carriers (or super-channels). But 400 Gigabit and 1 Terabit transport are still years away and 100Gbps transport will be the core networking technology for a long time yet.

"The vendors with the best 100G systems will be best-positioned to capture share over the next five years, we believe," says Perrin.

Ron Kline, principal analyst, network infrastructure group, Ovum

For Ron Kline, Ciena's announcement was less of a surprise, since Ciena showcased WaveLogic 3 to analysts late last year. The challenge with such a technology announcement is understanding its capabilities and how it will be rolled out and used within a product, he says.

"Ciena's WaveLogic 3 is the basis for 400 Gig," says Kline. "They are not out there saying 'we have 400 Gig'." Instead, what the company is stressing is the degree of added capacity, intelligence and flexibility that WaveLogic 3 will deliver. That said, Ciena does have trials planned for 400 Gig this year, he says.

What is noteworthy, says Ovum, is that 400Gbps is within Ciena's grasp whereas there are still some vendors yet to record revenues for 100Gbps.

"Product differentiation has changed - it used to be about coherent," says Kline. "But now that nearly all vendors have coherent, differentiation is going to be determined by who has the best coherent technology."

ROADMs: core role, modest return for component players

Next-generation reconfigurable optical add/ drop multiplexers (ROADMs) will perform an important role in simplifying network operation but optical component vendors making the core component - the wavelength-selective switch (WSS) - on which such ROADMs will be based should expect a limited return for their efforts.

"[Component suppliers] are going to be under extreme constraints on pricing and cost"

Sterling Perrin, Heavy Reading

That is one finding from an upcoming report by market research firm, Heavy Reading, entitled: "The Next-Gen ROADM Opportunity: Forecast & Analysis".

"We do see a growth opportunity [for optical component vendors]," says Sterling Perrin, senior analyst and author of the report. “But in terms of massive pools of money becoming available, it's not going to happen; it is a modest growth in spend that will go to next-generation ROADMs."

That is because operators’ capex spending on optical will grow only in single digits annually while system vendors that supply the next-generation ROADMs will compete fiercely, including using discounting, to win this business. "All of this comes crashing down on the component suppliers, such that they are going to be under extreme constraints on pricing and cost," says Perrin. The report will quantify the market opportunity but Heavy Reading will not discuss numbers until the report is published.

Next-generation ROADMs incorporate such features as colourless (wavelength-independence on an input port), directionless (wavelength routing to any port), contentionless (more than one same-wavelength light path accommodated at a port) and flexible spectrum (variable channel width for signal rates above 100 Gigabit-per-second (Gbps)).
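The first three feature definitions can be made concrete with a toy admission check. The sketch treats a node's add/drop ports as one shared bank: without the colourless feature a port is tied to one wavelength, without the directionless feature it is tied to one line degree, and without the contentionless feature the bank cannot carry the same wavelength twice. This is a deliberate simplification for illustration; the class and function names are hypothetical, and real ROADM architectures differ in where contention arises. Flexible spectrum is not modelled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoadmFeatures:
    colourless: bool = False
    directionless: bool = False
    contentionless: bool = False


@dataclass(frozen=True)
class AddRequest:
    port: int        # add/drop port in the node's shared add/drop bank
    wavelength: int  # channel index on the grid
    direction: str   # line degree the light path must leave on, e.g. "east"


def blocked_requests(features, requests, fixed_wavelength, fixed_direction):
    """List reasons why a set of add requests cannot be served (empty if all fit).

    fixed_wavelength / fixed_direction map each port to its hard-wired
    wavelength and degree; they only matter when the feature is missing.
    """
    problems = []
    in_bank = set()  # wavelengths already present in the shared add/drop bank
    on_line = set()  # (wavelength, direction) pairs already leaving the node
    for r in requests:
        if not features.colourless and fixed_wavelength.get(r.port) != r.wavelength:
            problems.append(f"port {r.port}: wavelength is fixed (not colourless)")
        if not features.directionless and fixed_direction.get(r.port) != r.direction:
            problems.append(f"port {r.port}: direction is fixed (not directionless)")
        if (r.wavelength, r.direction) in on_line:
            # Two identical wavelengths can never share one outgoing fibre.
            problems.append(f"wavelength {r.wavelength} already used towards {r.direction}")
        elif r.wavelength in in_bank and not features.contentionless:
            problems.append(f"wavelength {r.wavelength} clashes in add/drop bank (not contentionless)")
        in_bank.add(r.wavelength)
        on_line.add((r.wavelength, r.direction))
    return problems


# Two light paths on the same wavelength, heading in different directions.
reqs = [AddRequest(1, 5, "east"), AddRequest(2, 5, "west")]
print(blocked_requests(RoadmFeatures(True, True, False), reqs, {}, {}))
print(blocked_requests(RoadmFeatures(True, True, True), reqs, {}, {}))
```

The same pair of requests is blocked by a colourless, directionless node but accepted once the contentionless feature is added.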

Networks using such ROADMs promise to reduce service providers' operational costs. And coupled with the wide deployment of coherent optical transmission technology, next-generation ROADMs are set to finally deliver agile optical networks.

Other findings in the report include that operators have been deploying colourless and directionless ROADMs since 2010, even though implementing such features using current 1x9 WSSs is cumbersome and expensive. Operators wanting these features in their networks have nonetheless built such systems with existing components. "Probably about 10% of the market was using colourless and directionless functions in 2010," says Perrin.

Service providers are requiring ROADMs to support flexible spectrum now, even though networks are unlikely to adopt light paths faster than 100Gbps (400Gbps and beyond) for several years.

The need to implement a flexible spectrum scheme will force optical component vendors with microelectromechanical system (MEMS) technology to adopt liquid crystal technology, and liquid-crystal-on-silicon (LCoS) in particular, for their WSSs. "MEMS WSS technology is great for all the stuff we do today - colourless, directionless and contentionless - but when you move to flexible spectrum it is not capable of doing that function," says Perrin. "The technology they (vendors with MEMS technology) have set their sights on - and which there is pretty much agreement as the right technology for flexible spectrum - is the liquid crystal on silicon." A shift from MEMS to LCoS for next-generation ROADM technology is thus to be expected, he says.

Perrin also highlights how coherent detection technology, now being installed for 100 Gbps optical transmission, can also implement a colourless ROADM by making use of the tunable nature of the coherent receiver. "It knocks out a bunch of WSSs added to the add/ drop," says Perrin. "It is giving a colourless function for free, which is a huge advantage."
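Why a coherent receiver gives "a colourless function for free" can be sketched in a few lines: every wavelength can be delivered to the same drop port, and the receiver recovers only the channel its tunable local oscillator (LO) is set to, so no per-wavelength filter is needed. The sketch assumes the ITU-T G.694.1 50GHz grid anchored at 193.1THz; modelling the receiver's selectivity as a simple frequency tolerance, and the function names, are hypothetical simplifications.

```python
GRID_ANCHOR_THZ = 193.1  # ITU-T G.694.1 anchor frequency
GRID_SPACING_THZ = 0.05  # 50GHz fixed-grid spacing


def channel_frequency_thz(n):
    """Centre frequency of grid channel n, rounded to suppress float noise."""
    return round(GRID_ANCHOR_THZ + n * GRID_SPACING_THZ, 4)


def coherent_drop(incoming, lo_thz, tolerance_thz=0.0125):
    """Recover the payload of the one channel the tunable LO is set to.

    incoming maps channel frequency (THz) to payload. The tolerance is a
    hypothetical stand-in for the receiver's electrical bandwidth.
    """
    for freq, payload in incoming.items():
        if abs(freq - lo_thz) <= tolerance_thz:
            return payload
    return None  # no channel near the LO frequency


# Every wavelength reaches the same drop port; no per-wavelength filter is used.
incoming = {channel_frequency_thz(n): f"ch{n}" for n in range(-2, 3)}
print(coherent_drop(incoming, channel_frequency_thz(1)))   # tune to channel 1
print(coherent_drop(incoming, channel_frequency_thz(-2)))  # retune to channel -2
```

Selecting a different wavelength is just a matter of retuning the LO, which is what removes WSSs from the add/drop path in Perrin's description.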

Perrin views next-gen ROADMs as a money-saving exercise for the operators, not a money-making one. "This is hitting on the capex as well as the opex piece which is absolutely critical," he says. "You see the charts of the hockey stick of bandwidth growth and flat revenue growth; that is what ROADMs hit at."

The Heavy Reading report will be published later this month.

Boosting the 100 Gigabit addressable market

Alcatel-Lucent has enhanced the optical performance of its 100 Gigabit technology with the launch of its extended reach (100G XR) line card. Extending the reach of 100 Gigabit systems helps make the technology more attractive when compared to existing 40 Gigabit optical transport.

"We have built some rather large [data centre to data centre] networks with spans larger than 1,000km in totality"

Sam Bucci, Alcatel-Lucent

Used with the Alcatel-Lucent 1830 Photonic Service Switch, the line card improves optical transmission performance by 30% by fine-tuning the algorithm that runs on its coherent receiver ASIC. The system vendor says the typical optical reach extends to 2,000km.

When Alcatel-Lucent first announced its 100 Gigabit technology in June 2010, it claimed a reach of 1,500-2,000km. Now this upper reach limit is met for most networking scenarios with the extended reach performance.

"By announcing the extended reach, Alcatel-Lucent is able to highlight the 2,000km reach as well as draw attention to the fact that it has many deployments already, and that some of those customers are using 100 Gig in 1,000km+ applications," says Sterling Perrin, senior analyst at Heavy Reading.

Market research firm Ovum views the 100G XR announcement as a specific evolutionary improvement.

"But it is significant in that it makes the case for 100 Gig versus 40 Gig more attractive for terrestrial longer-reach applications," says Dana Cooperson, network infrastructure practice leader at Ovum. “The higher the performance vendors can make 100 Gig for more demanding applications - bad fiber, ultra long-haul and ultimately submarine - the quicker it will eclipse 40 Gig.” That said, Ovum does not expect 40 Gig to be eclipsed anytime soon.

100G XR

The line card's improved optical performance equates to transmission across longer fibre spans before optical regeneration is required. This, says the vendor, saves on equipment cost, power and space.

More complex network topologies can also be implemented such as mesh networks where the signal can encounter varying-length paths based on differing fibre types as well as multiple ROADM stages. Alcatel-Lucent says it has implemented a 1,700km link with 20 amplifiers and seven ROADM stages without the need for signal regeneration.

The improved optical performance of the 100G XR has been achieved without changing the line card's hardware. The card uses the same analogue-to-digital converter, digital signal processor (DSP) ASIC and the same forward error correction scheme used for its existing 100 Gigabit line card.

What has changed is the dispersion compensation algorithm that runs on the DSP, making use of the experience Alcatel-Lucent has gained from existing 100 Gigabit deployments.

"We can tune various parameters, such as power and the way it [the algorithm] deals with impairments," says Sam Bucci, vice president, terrestrial portfolio management at Alcatel-Lucent. In particular the 100G XR has increased tolerance to polarisation mode dispersion and non-linear impairments.

Cooperson says Alcatel-Lucent has adjusted the receiver ASIC performance after 'mining' data from coherent deployments, something the company is used to doing with its wireless networks. She says Alcatel-Lucent has also worked closely with component vendors to achieve the improved performance.

Perrin points out that Alcatel-Lucent's 100 Gig design uses a single laser while Ciena's system is dual laser. "Alcatel-Lucent is saying that over an identical plant the two-laser approach has no distance advantages over the one laser approach," he says. However, other system vendors have announced distances at and beyond 2,000km. "So Alcatel-Lucent's enhanced system is not record-setting," says Perrin.

100 Gigabit Market

Alcatel-Lucent says it has more than 45 deployments comprising over 1,200 100 Gig lines since the launch of its 100 Gigabit system in 2010.

"It appears that Alcatel-Lucent has shipped more 100G line cards than anyone," says Cooperson. "Alcatel-Lucent has a good opportunity to make some serious 100 Gig inroads here, along with Ciena, while everyone else gears up to get their solutions to market in 2012."

Cooperson also says the 100G XR announcement dovetails nicely with Alcatel-Lucent’s recent CloudBand announcement. Indeed, Bucci says that its deployments of 100 Gig include connecting data centres: "We have built some rather large [data centre to data centre] networks with spans larger than 1,000km in totality."

The 100G XR card is being tested by customers and will be generally available starting December 2011.