Books of 2024: Part 3

Gazettabyte is asking industry figures to pick their reads of the year. In the penultimate entry, Prof. Yosef Ben Ezra, Dave Welch, William Webb, and Abdul Rahim share their favourite reads.

Professor Yosef Ben Ezra, PhD, CTO, NewPhotonics

My reading in 2024 continued to augment my technical knowledge with insights on how to bring innovation to the market.

As part of our mission to shift the industry with innovative products, I have been focussing on decision-making as the key to transitioning from technology development to product-market impact and fit. Our company entered a new phase at the beginning of last year, moving from an early-stage technology start-up to a customer-centred growth company. Reading The Lean Startup: How Today’s Entrepreneurs Use Continuous Innovation to Create Radically Successful Businesses by Eric Ries helped me better understand how we must apply evidence-based decision-making even as we establish a more agile environment, where rapid experimentation and learning from customer input take precedence over extensive planning and development cycles. This insight was critical as we moved from research to delivering a product that met market demand.

Another instrumental read in 2024 was Thinking, Fast and Slow by Daniel Kahneman. With our team growing quickly, the company leadership began facing a much broader range of input and decision-making issues that reached beyond engineering. Kahneman’s insights on the interplay between two systems of thinking, the intuitive and the deliberate, gave me an expanded mindset for dealing with the range of cognitive perspectives and biases that influence contextual, practical, and effective decision-making, which is vital to our progress.

The final book I’ll reference proved an essential follow-up to an earlier read that played an instrumental role in starting our company: Blue Ocean Strategy. We strongly identify with that book, so Peter Thiel’s Zero to One: Notes on Startups, or How to Build the Future, was an excellent next read for me. It spotlights the importance of originality and boldness in innovation, and it aligns strongly with our aim to avoid imitation and incremental improvements to connectivity and instead seek transformative advances that offer substantial, long-term value.

I identify strongly with the idea of pursuing a daring, groundbreaking product introduction that reaches new heights, in the spirit of the distinction Thiel draws between horizontal and vertical innovation.

Dave Welch, CEO and Founder, AttoTude

One Summer: America, 1927, by Bill Bryson. A fun read about a similarly fascinating time of technology, politics, and human behavior.

William Webb, Independent Consultant, Board Member and Author

I much prefer fact to fiction and often read books about politics, economics and philosophy. But occasionally Amazon suggests something different and I give it a try. Two such random suggestions this year stood out.

The first is Ingrained: The Making of a Craftsman by Callum Robinson, the true story of a woodworker in Scotland whose small company suddenly has to change tack when a major client cancels a huge order. It’s beautifully written, with a love for woodwork, craftsmanship and friends. It’s not normally my sort of thing, but this is a book you won’t put down, and it will make you think again about what’s important in life.

My second suggestion is completely different – Why Machines Learn: The Elegant Maths Behind Modern AI by Anil Ananthaswamy. The book sets out the mathematics behind how large language models and other AI systems work. It is written for someone with fairly rudimentary mathematical skills. It isn’t a light read, but I found it valuable to understand just how models are trained and the compromises and choices behind it all. AI is so important for the future and now I feel that I’ve got a good handle on how it works.

Abdul Rahim, Ecosystem Manager, PhotonDelta

The book I enjoyed most this year is Overcrowded: Designing Meaningful Products in a World Awash with Ideas, by Roberto Verganti.

The book treats innovation as a gift to its beneficiaries and presents a framework for the innovation of meaning. This framework differs from design thinking, which is geared towards finding solutions to a problem in an empathetic manner. Roberto’s framework requires a sparring partner who challenges, questions and criticises along the journey of innovating meaning. The photonic integrated circuit (PIC) community can learn a lot from this book.

The other book I read – well, listened to – is How to Win Friends and Influence People, by Dale Carnegie. This one needs no explanation.

Agent of change

Dave Welch on how entrepreneurial problem-solving skills can tackle some of society’s biggest challenges

Dave Welch is best known for being the founder and chief innovation officer at Infinera, the optical equipment specialist. But he has a history of involvement in social causes.

In 2012, Welch went to court to fight for the educational rights of children in schools in California, a story covered by newspapers in the US and abroad and featured on the front cover of Time magazine.

“Ultimately, we lost,” says Welch. “But the facts of these [school] practices and their link to a poor educational outcome were confirmed and never disputed.”

Welch recently co-founded NosTerra Ventures, a non-profit organisation tackling challenging social issues. These Grand Challenges, as NosTerra calls them, cover housing, energy and environment, access to healthcare, public education, democracy, and information security, issues more suited to a presidential debate.

“NosTerra aims to identify key societal issues to contribute to, identify differentiated strategies and appropriate entrepreneurs, and help get those launched,” explains Welch. NosTerra has added partners, equivalent to a board of directors, to guide the organisation and ensure its strategies make sense. “We are not a Bill Gates or a Michael Bloomberg, but the object, frankly, is to make an organisation that can have the same influence on how we address these problems,” says Welch.

Welch says his involvement stems from being gifted with various opportunities, creating a responsibility to the greater society. Moreover, these issues define the quality of a society, so it is crucial to address them. “It’s also personally very rewarding to figure out what you can do to help,” says Welch.

Strategy for change

NosTerra works to identify what it must do to contribute to a solution and do it in a differentiated fashion, to make a structural change that improves things over time.

Welch returns to the example of public education and establishing the right to a quality education. “That’s a doable task, and trust me, we will reach out to the Bloombergs and the Gates to ask them for their help,” says Welch.

Energy and the environment is another example. Welch says there is much debate about the topic, which is only right given its impact. But less is discussed about the future direction of energy.

Welch sits on the board of the Natural Resources Defense Council, an important non-profit organisation, and is involved in setting its strategies. He believes NosTerra can pursue various activities, including investing in technologies or creating the opportunity for its partners to invest directly. “In this case, the vehicles of change are that we absolutely need new technologies,” he says, citing examples ranging from fusion at one extreme to shorter-term battery technologies.

NosTerra also believes it can use politics and influence what Welch calls factual prioritisation. Developing solutions to significant problems by 2035 results in a markedly different approach from a 2060 timeframe. “If I’m a government or organisation, where do I want to spend my next pile of money?” says Welch. “What is shocking is that there isn’t a go-to validated model to run such scenarios on.”

Some organisations, such as the US Department of Energy, do have detailed models, but there is no open-source trusted model to see what impact short-term and longer-term investments will have. NosTerra is looking to address this with an open-source energy model so that if the Government is willing to invest $100 billion, it can identify what will give it the best return.

“I applaud the financial attack on the system to convert our energy sources, but I’m also a little appalled at the prioritisation of some of where we spend our money,” says Welch. “We can do some things to help there.”

NosTerra is busy creating a community around these areas to develop solutions and get ‘some of these things done’. Any NosTerra success will not be evident in one or two years but more likely in five or ten.

Welch stresses that such ventures are not new to Silicon Valley. “David Packard and Bill Hewlett, those guys not only ran an incredible company [Hewlett-Packard or HP], but they were plugged into their community and sat on school boards,” he says. “I still find Silicon Valley is made up of people that care and work with the community.”

Welch is still fully involved at Infinera. “My added value [at Infinera] is the 40 years of watching telecom technologies develop and watching markets change,” he says. He provides creative thought, a perspective on technologies and why customers and markets will adopt specific directions. He also helps with prioritising what technologies Infinera should develop that will make a difference.

“I love that area,” he says.

ECOC 2022 Reflections - Part 1

Gazettabyte is asking industry and academic figures for their thoughts after attending ECOC 2022, held in Basel, Switzerland. In particular, what developments and trends they noted, what they learned, and what, if anything, surprised them.

In Part 1, Infinera’s David Welch, Cignal AI’s Scott Wilkinson, University of Cambridge’s Professor Seb Savory, and Huawei’s Maxim Kuschnerov share their thoughts.

David Welch, Chief Innovation Officer and Founder of Infinera

First, we had great meetings. It was exciting to be back to a live, face-to-face industry event. It was also great to see strong attendance from so many European carriers.

Point-to-multipoint developments were a hot topic in our engagements with service providers and component suppliers. It was also evident in the attendance and excitement at the Open XR Forum Symposium, as well as the vendor demos.

We’re seeing that QSFP-DD ZR+ is a book-ended solution for carriers; interoperability requirements are centred on the CFEC (concatenated or cascaded FEC) market; oFEC (Open FEC) is not being deployed.

Management of pluggables in the optical layer is critical to their network deployment, while network efficiency and power reduction are top of mind.

The definition of ZR and ZR+ needs to be subdivided further into ZR – CFEC, ZR+ – oFEC, and ZR+-HP (high performance), which is a book-ended solution.

Scott T. Wilkinson, Lead Analyst, Optical Components, Cignal AI

The show was invaluable, given this was our first ECOC since Cignal AI launched its optical components coverage.

Coherent optics announcements from the show did not follow the usual bigger-faster-stronger pattern, as the success of 400ZR has convinced operators and vendors to look at coherent at the edge and inside the data centre.

100ZR for access, the upcoming 800ZR specifications from the OIF, and coherent LR (coherent designed for 2km-10km) will revolutionise how coherent optics are used in networks.

Alongside the coherent announcements were developments from the direct-detect vendors demonstrating or previewing key technologies for 800 Gigabit Ethernet (GbE) and 1.6 Terabit Ethernet (TbE) modules.

800GbE is nearly ready for prime time, awaiting completion of systems based on the newest 112 gigabit-per-second (Gbps) serialiser-deserialiser (serdes) switches. The technology for 224Gbps serdes is just starting to emerge and looks promising for products in late 2024 or 2025.
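As a rough illustration of why the serdes generation matters, the sketch below counts the electrical lanes a module needs for 800GbE and 1.6TbE. It assumes each 112Gbps serdes lane carries a nominal 100 gigabits of Ethernet payload and each 224Gbps lane a nominal 200 gigabits, the difference being encoding and FEC overhead; these payload figures and the helper name are illustrative assumptions.

```python
import math

# Assumed nominal Ethernet payload per electrical lane, in Gbps: 112G serdes
# carry ~100G of payload and 224G serdes ~200G, the rest being overhead.
PAYLOAD_PER_LANE_GBPS = {112: 100, 224: 200}


def lanes_needed(ethernet_rate_gbps: int, serdes_gbps: int) -> int:
    """Return how many serdes lanes a module needs for a given Ethernet rate."""
    payload = PAYLOAD_PER_LANE_GBPS[serdes_gbps]
    return math.ceil(ethernet_rate_gbps / payload)


for rate in (800, 1600):
    for serdes in (112, 224):
        print(f"{rate}GbE over {serdes}Gbps serdes: {lanes_needed(rate, serdes)} lanes")
```

Halving the lane count for the same Ethernet rate is what makes 224Gbps serdes attractive for 1.6TbE modules.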

While there were no unexpected developments at the show, it was great to compare developments across the industry and understand the impact of supply chain issues, operator deployment plans, and any hints of oversupply.

We came away optimistic about continued growth in optical components shipments and revenue into 2023.

Seb Savory, Professor of Optical Fibre Communication, University of Cambridge

My overwhelming sense from ECOC was it was great to be meeting in person again. I must confess I was looking at logistics as much as content with a view to ECOC 2023 in Glasgow where I will be a technical programme committee chair.

Maxim Kuschnerov, Director of the Optical and Quantum Communications Laboratory at Huawei

In the last 12 months, the industry has gained more technical clarity regarding next-generation 800ZR and 800LR coherent pluggables.

While 800ZR’s use case appears firmly in the ZR+ regime, including 400-gigabit operation covering metro and long-haul, the case for 800LR is less clear.

Some proponents argue that this is a building block toward 1.6TbE and the path of coherent inside the data centre.

Although intensity-modulation direct detection (IMDD) faces technical barriers to scaling wavelength division multiplexing to 8×200 gigabit, the technological options for beyond 800-gigabit coherent aren’t converging either.

In the mix are 4×400 gigabit, 2×800 gigabit and 1×1.6 terabit, making the question of how low-cost and low-power coherent can scale into data centre applications one of the most interesting technical challenges for the coming years.

Arista continues making a case for a pluggable roadmap through the decade based on 200-gigabit serdes.

Module power envelopes of around 40W at the faceplate show the challenge the industry is facing and the case co-packaged optics is trying to make.

However, putting all the power into, or next to, the switching chip doesn’t make the cooling problem any easier. Here, I wonder if Avicena’s microLED technology could benefit next-generation chip-to-chip or die-to-die interconnects by dropping the high-speed serdes altogether, thus avoiding the huge overhead that current input-output (I/O) places on data centre networking.
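Module power is often easier to compare as energy per bit. A rough worked example follows, assuming the roughly 40W module envelope cited above carries 1.6 terabits (eight lanes of 200-gigabit serdes); that capacity is our assumption for illustration, not a figure quoted at the show.

```python
def energy_per_bit_pj(power_w: float, rate_gbps: float) -> float:
    """Energy per bit in picojoules.

    Watts divided by gigabits per second gives nanojoules per bit;
    multiplying by 1,000 converts to picojoules per bit.
    """
    return power_w * 1000 / rate_gbps


# Assumed: a 40W module carrying 1.6 terabits (8 x 200G lanes).
print(f"{energy_per_bit_pj(40, 1600):.1f} pJ/bit")
```

Every picojoule per bit saved at these rates matters because the same 40W must be removed from the faceplate, which is the cooling pressure behind co-packaged optics.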

It was great to see the demo of the 200-gigabit PAM-4 externally modulated laser (EML) at Coherent’s booth delivering high-quality eye diagrams. The technology is getting more mature, and next year will receive much exposure in the broader ecosystem.

As at every conference, we saw the usual presentations on Infinera’s XR Optics. Point-to-multipoint coherent is a great technology looking for a use case, but it is several years too early.

At ECOC’s Market Focus, Dave Welch put up a slide on the XR ecosystem, showing several end users, several system OEMs and a single component vendor – Infinera. I think one can leave it at this for now without further comment.

Is traffic aggregation the next role for coherent?

Ciena and Infinera have each demonstrated the transmission of 800-gigabit wavelengths over near-1,000km distances, continuing coherent's marked progress. But what next for coherent now that high-end optical transmission is approaching the theoretical limit? Can coherent compete over shorter spans and will it find new uses?

Part 1: XR Optics

“I’m going to be a bit of a historian here,” says Dave Welch, when asked about the future of coherent.

Interest in coherent started with the idea of using electronics rather than optics to tackle dispersion in fibre. Using electronics for dispersion compensation made optical link engineering simpler.


Coherent then evolved as a way to improve spectral efficiency and the economics of sending traffic, measured in gigabits-per-dollar.

“By moving up the QAM (quadrature amplitude modulation) scale, you got both these benefits,” says Welch, the chief innovation officer at Infinera.
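To make the QAM point concrete: each step up the QAM scale adds bits per symbol (log2 of the constellation size), so a transceiver carries more traffic at the same symbol rate and spectral width. The minimal sketch below assumes dual-polarisation transmission and ignores FEC and framing overhead; the 64-gigabaud symbol rate is an illustrative assumption.

```python
import math


def raw_line_rate_gbps(baud_gbd: float, qam_order: int, polarisations: int = 2) -> float:
    """Raw line rate: symbol rate x bits per symbol (log2 M) x polarisations."""
    bits_per_symbol = math.log2(qam_order)
    return baud_gbd * bits_per_symbol * polarisations


# Same assumed 64 GBd symbol rate, three points on the QAM scale.
for m in (4, 16, 64):
    print(f"{m}-QAM at 64 GBd: {raw_line_rate_gbps(64, m):.0f} Gb/s raw")
```

The higher-order formats need a cleaner signal, which is why reach falls as the QAM order rises.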

Improving the economics of traffic transmission still drives coherent. Coherent transmission offers predictable performance over a range of distances, while non-coherent optical links have limited spans.

But coherent comes at a cost. The receiver needs a local oscillator - a laser source - and a coherent digital signal processor (DSP).

Infinera believes coherent is now entering a phase that will add value to networking. “This is less about coherent and more about the processor that sits within that DSP,” says Welch.

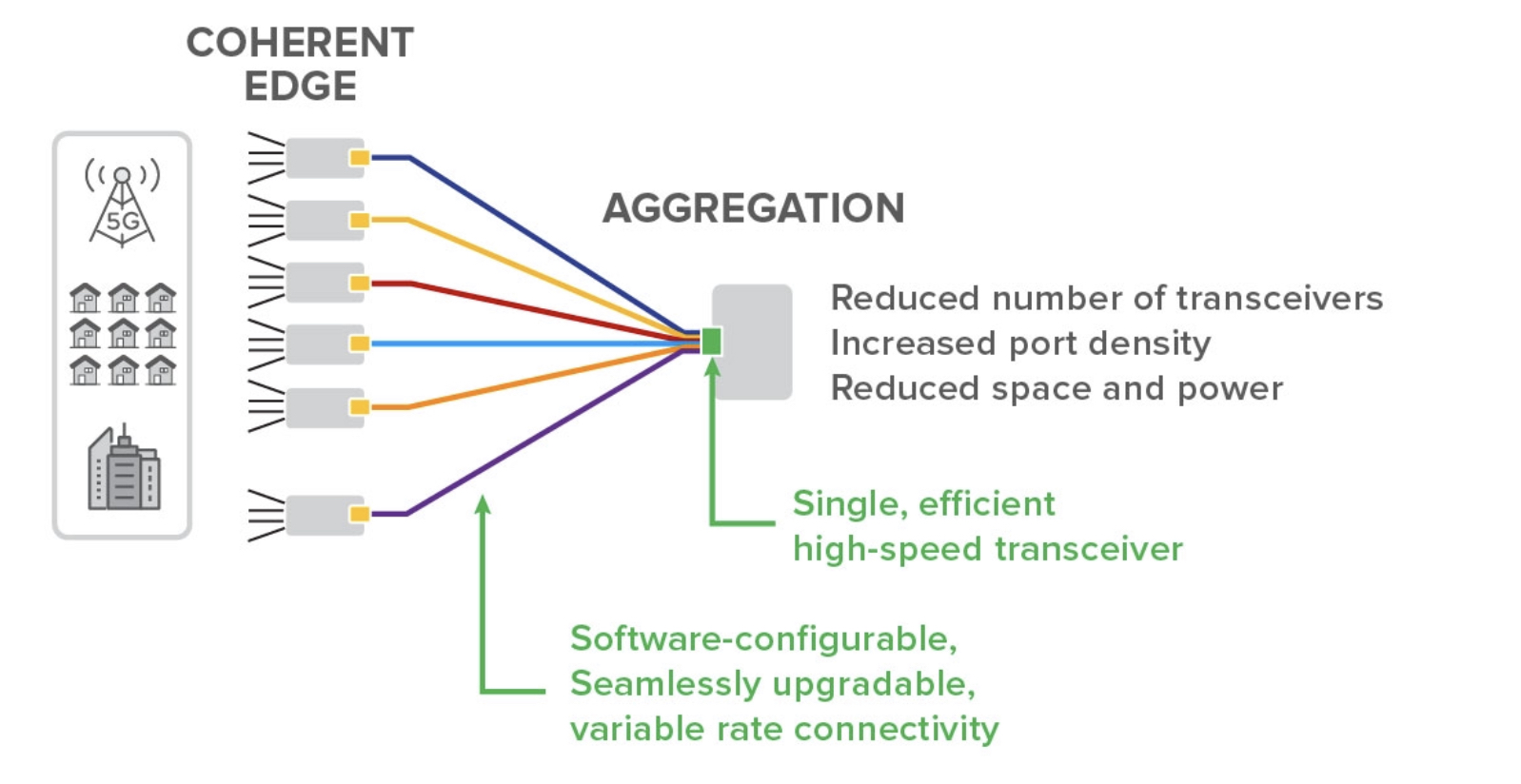

Aggregation

Infinera is developing technology - dubbed XR Optics - that uses coherent for traffic aggregation in the optical domain.

The ‘XR’ label is a play on 400ZR, the 400-gigabit pluggable optics coherent standard. XR will enable point-to-point spans like ZR optics but also point-to-multipoint links.

Infinera, working with network operators, has been assessing XR optics’ role in the network. The studies highlight how traffic aggregation dictates networking costs.

“If you aggregate traffic in the optical realm and avoid going through a digital conversion to aggregate information, your network costs plummet,” says Welch.

Are there network developments that are ripe for such optical aggregation?

“The expansion of bandwidth demand at the network edge,” says Rob Shore, Infinera’s senior vice president of marketing. “It is growing, and it is growing unpredictably.”

XR Optics

XR optics uses coherent technology and Nyquist sub-carriers. Instead of a laser generating a single carrier, pulse-shaping at the optical transmitter is used to create multiple carriers, dubbed Nyquist sub-carriers.

The sub-carriers carry the same information as a single carrier, but each one has a lower symbol rate. The lower symbol rate improves tolerance to non-linear fibre effects and enables the use of lower-speed electronics, which benefits long-distance transmission.
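A back-of-envelope view of the trade: splitting one carrier into N Nyquist sub-carriers divides the symbol rate each must run at by N, while the aggregate symbol rate, and hence capacity, is unchanged. The 64-gigabaud total and the sub-carrier counts below are illustrative assumptions.

```python
def per_subcarrier_baud(total_baud_gbd: float, n_subcarriers: int) -> float:
    """Symbol rate of each sub-carrier when a carrier's symbols are shared equally."""
    if n_subcarriers < 1:
        raise ValueError("need at least one sub-carrier")
    return total_baud_gbd / n_subcarriers


# Assumed 64 GBd aggregate, split into 1, 4 and 16 sub-carriers.
for n in (1, 4, 16):
    print(f"{n:>2} sub-carrier(s): {per_subcarrier_baud(64, n):g} GBd each")
```

Each 4-gigabaud sub-carrier can be handled by slower electronics than a single 64-gigabaud carrier, even though the total traffic is the same.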

But sub-carriers also enable traffic aggregation. Traffic is fanned out over the Nyquist sub-carriers, which lets modules with different capacities communicate, using the sub-carrier as a basic data rate. For example, a 25-gigabit XR module using a single sub-carrier and a 100-gigabit XR module based on four sub-carriers can talk to a 400-gigabit module that supports 16 sub-carriers.
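The compatibility rule reduces to simple arithmetic: if every module's capacity is a whole number of 25-gigabit sub-carriers, a hub module can serve any mix of edge modules whose sub-carrier counts fit within its own. A minimal sketch; the function names are hypothetical and the check ignores reach and optical power budgets.

```python
SUBCARRIER_GBPS = 25  # basic data rate per sub-carrier, per the example above


def subcarriers_for(capacity_gbps: int) -> int:
    """Number of 25-gigabit sub-carriers a module of this capacity uses."""
    if capacity_gbps % SUBCARRIER_GBPS:
        raise ValueError(f"{capacity_gbps}G is not a multiple of {SUBCARRIER_GBPS}G")
    return capacity_gbps // SUBCARRIER_GBPS


def hub_can_serve(hub_gbps: int, edge_gbps: list[int]) -> bool:
    """True if the edge modules' sub-carriers fit within the hub module's."""
    needed = sum(subcarriers_for(c) for c in edge_gbps)
    return needed <= subcarriers_for(hub_gbps)


print(subcarriers_for(25), subcarriers_for(100), subcarriers_for(400))
print(hub_can_serve(400, [100, 100, 25, 25, 25, 25]))  # 12 of 16 sub-carriers
print(hub_can_serve(400, [100] * 5))  # 20 sub-carriers needed, only 16 exist
```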

It means that optics is no longer limited to a fixed point-to-point link but can support point-to-multipoint links where capacities can be changed adaptively.

“You are not using coherent to improve performance but to increase flexibility and allow dynamic reconfigurability,” says Shore.


XR optics makes an intermediate-stage aggregation switch redundant since the higher-capacity XR coherent module aggregates the traffic from the lower-capacity edge modules.

The result is a 70 per cent reduction in networking costs: the transceiver count is halved and platforms can be removed from the network.

XR Optics starts to make economic sense at 10-gigabit data rates, says Shore. “It depends on the rest of the architecture and how much of it you can drive out,” he says. “For 25-gigabit data rates, it becomes a virtual no-brainer.”

There may be a coherent ‘tax’ associated with XR Optics, but it removes so much networking cost that it proves itself much earlier than a 400ZR module, says Shore.

Applications

First uses of XR Optics will include 5G and distributed access architecture (DAA) whereby cable operators bring fibre closer to the network edge.

XR Optics will likely be adopted in two phases. The first is traditional point-to-point links, just as with 400ZR pluggables.

“For mobile backhaul, what is fascinating is that XR Optics dramatically reduces the expense of your router upgrade cost,” says Welch. “With the ZR model I have to upgrade every router on that ring; in XR I only have to upgrade the routers needing more bandwidth.”
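Welch's mobile-backhaul point can be framed as a count of routers touched during an upgrade. The toy model below follows his description: in the ZR model every router on the ring is upgraded, whereas in the XR model only routers whose demand exceeds the current rate are. The site names, demands and the 25-gigabit starting rate are hypothetical.

```python
def routers_to_upgrade(demand_gbps: dict[str, int], current_gbps: int, model: str) -> list[str]:
    """Return the routers that must be upgraded under the 'ZR' or 'XR' model."""
    if model == "ZR":
        # Point-to-point ring: every router's optics move to the new rate.
        return sorted(demand_gbps)
    if model == "XR":
        # Sub-carrier model: only sites whose demand exceeds current capacity.
        return sorted(site for site, d in demand_gbps.items() if d > current_gbps)
    raise ValueError("model must be 'ZR' or 'XR'")


# Hypothetical ring where two of four sites outgrow 25 gigabits.
ring = {"site-a": 25, "site-b": 100, "site-c": 25, "site-d": 50}
print("ZR:", routers_to_upgrade(ring, 25, "ZR"))
print("XR:", routers_to_upgrade(ring, 25, "XR"))
```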

Phase two will be for point-to-multipoint aggregation networks: 5G, followed by cable operators as they expand their fibre footprint.

Aggregation also takes place in the data centre. Does coherent have a role there?

“The intra-data centre application [of XR Optics] is intriguing in how much you can change in that environment but it is far from proven,” says Welch.

Coherent for point-to-point links will not be used inside the data centre as it doesn’t add value, but configurable point-to-multipoint links do have merit.

“It is less about coherent and more about the management of how content is sent to various locations in a point-to-multiple or multipoint-to-multipoint way,” says Welch. “That is where the game can be had.”

Uptake

Infinera is working with leading mobile operators regarding using XR Optics for optical aggregation. Infinera is talking to their network architects and technologists at this stage, says Shore.

Given how bandwidth at the network edge is set to expand, operators are keen to explore approaches that promise cost savings. “The people that build mobile networks or cable have told us they need help,” says Shore.

Infinera is developing the coherent DSPs for XR Optics and has teamed with optical module makers Lumentum and II-VI. Other unnamed partners have also joined Infinera to bring the technology to market.

The company will detail its pluggable module strategy including XR Optics and ZR+ later this year.

The 50th anniversary of light-speed connections at OFC

The 50th anniversary of two key optical developments will be celebrated at the upcoming OFC show to take place in San Diego starting March 8th.

Back in 1970, the first low-loss fibre and the first room-temperature semiconductor laser were demonstrated.

“The low-loss fibre had a loss of 16 decibels-per-kilometre,” says Jun Shan Wey of ZTE and the OFC programme co-chair. “Without such optical fibre, there would be no chance of any long-distance communication.”
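To put 16 decibels-per-kilometre in perspective: loss in decibels grows linearly with distance, so the fraction of optical power surviving a span is 10^(-loss x length / 10). The sketch compares the 1970 fibre with roughly 0.2dB/km, typical of modern single-mode fibre at 1550nm, against an illustrative 30dB link budget; the budget figure is an assumption.

```python
def surviving_fraction(loss_db_per_km: float, length_km: float) -> float:
    """Fraction of launched optical power remaining after length_km of fibre."""
    return 10 ** (-loss_db_per_km * length_km / 10)


def max_reach_km(loss_db_per_km: float, budget_db: float) -> float:
    """Longest span whose fibre loss alone fits within the link budget."""
    return budget_db / loss_db_per_km


# 1970 fibre versus typical modern fibre, with an assumed 30 dB budget.
for loss in (16.0, 0.2):
    print(f"{loss} dB/km: {surviving_fraction(loss, 1):.1%} left after 1 km, "
          f"reach {max_reach_km(loss, 30):.1f} km on a 30 dB budget")
```

At 16dB/km only about 2.5 per cent of the light survives each kilometre, which is why the 1970 result was a starting point rather than a deployable long-haul medium.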

The advent of a semiconductor laser operating at room temperature was another development of key importance, she adds.

The Fiber 50 celebrations and recognition of the invention of the first EDFA

Fiber 50 Keynote

The Fiber 50 celebrations include a series of events assessing the impact of the two optical-communication enablers as well as looking to the future.

Dave Welch, founder and chief innovation officer at Infinera, will open the event with a keynote talk (Tuesday, 10 March 2020, 18:15 – 19:00 Ballroom 20BCD) addressing the impact of optical communications on society over the last half-century and discussing what to expect in the coming years.

According to Welch, advances witnessed in the technology are the result of game-changing innovations such as the erbium-doped fibre amplifier (EDFA), “a critical enabler” for dense wavelength-division multiplexing (DWDM).

Professor Sir David Payne, who led the team that invented the EDFA, is one of this year’s OFC Plenary Session speakers.

“What is so striking is how the world has changed as a consequence of the fibre network,” says Welch. “I do not believe that there has been anything more globally impactful than the fibre-optic network because, without it, the internet could not exist.”

Fibre-optic communication has also transformed the business world.

“If we look at the top ten companies as defined by market valuation and compare the list from 1997 to the list today, it is stunning,” says Welch.

Back then it was energy firms and bricks-and-mortar companies whereas today the list is dominated by companies built on data and the communication of that data.

Welch will also highlight in his keynote what to expect going forward.

“What is highly predictable is the continued expansion of bandwidth consumption and the cost of bandwidth continuing to drop,” says Welch.

The technologies that enable the network will also be highly innovative. “For example, optical communication will draw even more from radio network designs, and from the functional convergence of physical layers of the network to achieve continued expansion,” says Welch.

The networks will also be built with machines in mind, not just humans. “This is where most of the traffic expansion is coming from today and in the future,” concludes Welch.

Vision 2030

Welch’s Fiber 50 keynote talk will be followed by a conference reception (Tuesday 10 March 2020, 19:00 – 20:30 Sails Pavilion) where key individuals in the development of optical communications will be present.

The OFC also has a Special Chair’s Session entitled: Vision 2030: Taking Optical Communications through the Next Decade (Wednesday, 11 March 2020, 14:00-18:30 Room: 6F) where industry luminaries will discuss key topic directions over the next decade.

These include data centres; optical devices such as terabit transmitters, indium phosphide photonic integrated circuits (PICs) and silicon/nanophotonics; undersea communications; optical access, and 5G optical transport.

Wey highlights how new technologies such as machine learning and quantum techniques for optical communications feature widely across this year’s OFC conference sessions.

“My view is that we are seeing a ‘hockey-stick’ of ideas coming in as we enter the next decade,” says Wey.

She cites access networks as an example. In the past decade, the main driver has been increasing access bit rates. This remains important, she says, but other considerations are also driving access.

5G is changing the architectural requirements, not just through the increase in bandwidth but through the use cases it will support. Multi-access edge computing is another aspect.

“Cloud and virtualisation are coming into access, and then there is the challenge of how vendors stay in business,” says Wey.

Exhibits

The OFC has also organised a timeline-of-innovation exhibition on the show floor with items being loaned by companies for the event.

The exhibits will include a 1970 lithium niobate modulator, a 1977 fibre prototype from Corning that carried the first commercial traffic, an early fibre-to-the-home BPON optical transceiver and the first coherent optical transmission system from Ciena (Nortel).

Lastly, there will be an interactive exhibit honouring the recipients of the John Tyndall Award, presented by the IEEE and the OSA. The award has been given to 33 individuals who have made outstanding contributions to optical fibre technology.

“We are very excited and proud in what we have put together,” says Wey. The other OFC programme co-chairs are Professor David Plant of McGill University and Shinji Matsuo of NTT.

Infinera rethinks aggregation with slices of light

An optical architecture for traffic aggregation that promises to deliver networking benefits and cost savings was unveiled by Infinera at this week’s ECOC show, held in Dublin.

Traffic aggregation is used widely in the network for applications such as fixed broadband, cellular networks, fibre-deep cable networks and business services.

Infinera has developed a class of optics, dubbed XR optics, that fits into pluggable modules for traffic aggregation. And while the company is focussing on the network edge for applications such as 5G, the technology could also be used in the data centre.

Optics is inherently a point-to-point communications technology, says Infinera. Yet optics is applied to traffic aggregation, a point-to-multipoint architecture, and that results in inefficiencies.

“The breakthrough here is that, for the first time in optics’ history, we have been able to make optics work to match the needs of an aggregation network,” says Dave Welch, founder and chief innovation officer at Infinera.


XR Optics

Infinera came up with the ‘XR’ label after borrowing from the naming scheme used for 400ZR, the 400-gigabit pluggable optics coherent standard.

“XR can do point-to-point like ZR optics,” says Welch. “But XR allows you to go beyond, to point-to-multipoint; ‘X’ being an ill-defined variable as to exactly how you want to set up your network.”

XR optics uses coherent technology and Nyquist sub-carriers. Instead of using a laser to generate a single carrier, pulse-shaping is used at the transmitter to generate multiple carriers, referred to as Nyquist sub-carriers.

The sub-carriers convey the same information as a single carrier but by using several sub-carriers, a lower symbol rate can be used for each. The lower symbol rate improves the tolerance to non-linear effects in a fibre and enables the use of lower-speed electronics.

Infinera first detailed Nyquist sub-carriers as part of its advanced coherent toolkit, and implemented the technology with its Infinite Capacity Engine 4 (ICE4) used for optical transport.

The company is bringing to market its second-generation Nyquist sub-carrier design with its ICE6 technology that supports 800-gigabit wavelengths.

Now Infinera is proposing coherent sub-carriers for a new class of problem: traffic aggregation. But XR optics will need backing and be multi-sourced if it is to be adopted widely.

Network operators will also need to be convinced of the technology’s merits. Infinera claims XR optics will halve the pluggable modules needed for aggregation and remove the need for intermediate digital aggregation platforms, reducing networking costs by 70 percent.

Aggregation optics

XR optics will be required at both ends of a link. The modules will need to understand a protocol that tells them the nature of the sub-carriers to use: their baud rate (and resulting spectral width) and modulation scheme.

Infinera cites as an example a 4GHz-wide sub-carrier modulated using 16-ary quadrature amplitude modulation (16-QAM) that can carry 25 gigabits per second.
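The figures are consistent with a simple capacity estimate. Assuming a Nyquist-shaped sub-carrier's symbol rate roughly equals its 4GHz width, and dual-polarisation 16-QAM (4 bits per symbol per polarisation), the raw rate is 4 x 4 x 2 = 32 gigabits per second, leaving headroom above the 25-gigabit payload for FEC and framing. The width-equals-baud and dual-polarisation assumptions are ours, not details Infinera has stated.

```python
import math


def subcarrier_raw_gbps(width_ghz: float, qam_order: int, polarisations: int = 2) -> float:
    """Raw bit rate, taking the symbol rate (GBd) as equal to the sub-carrier width."""
    return width_ghz * math.log2(qam_order) * polarisations


raw = subcarrier_raw_gbps(4, 16)  # assumed: 4 GBd, DP-16QAM
payload = 25  # gigabits per second, from the example above
print(f"raw {raw:g} Gb/s, overhead allowance {raw / payload - 1:.0%}")
```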

A larger capacity XR coherent module will be used at the aggregation hub and will talk directly with XR modules at the network edge, “casting out” its sub-carriers to the various pluggable modules at the network edge.

For example, the module at the hub may be a 400-gigabit QSFP-DD supporting sixteen 25-gigabit sub-carriers, or an 800-gigabit QSFP-DD or OSFP module delivering 32 sub-carriers. A mix of lower-speed XR modules will be used at the edge: 100-gigabit QSFP28 XR modules based on four sub-carriers and single-sub-carrier 25-gigabit SFP28s.

“As soon as you have defined that each one of these transceivers is some multiple of that 25-gigabit sub-carrier, they can all talk to each other,” says Welch.

The hub XR module and network-edge modules are linked using optical splitters such that all the sub-channels sent by the hub XR module are seen by each of the edge modules. The hub in effect broadcasts its sub-carriers to all the edge devices, says Welch.

A coding scheme is used such that each edge module’s coherent receiver can pick off its assigned sub-channel(s). In turn, an edge module will send its data using the same frequencies on a separate fibre.

Basing the communications on multiples of sub-carriers means any XR module can talk to any other, irrespective of their overall speeds.

Sub-carriers can also be reassigned.

“In that fashion, today you are a 25-gigabit client module and tomorrow you are 100-gigabit,” says Welch. Reassigning edge-module capacities will not happen often but when undertaken, no truck roll will be needed.
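The broadcast-and-select behaviour described above can be modelled as a lookup table: the hub sends all its sub-carriers through the splitter, every edge module sees all of them, and each receiver keeps only the indices assigned to it. Reassignment is then a change to that table rather than a truck roll. This is a hypothetical software model, not Infinera's protocol; the class, method and module names are invented, and the upstream direction on a separate fibre is not modelled.

```python
from dataclasses import dataclass, field

SUBCARRIER_GBPS = 25  # basic data rate per sub-carrier


@dataclass
class XrHub:
    """Hypothetical hub module sharing a fixed pool of sub-carriers among edges."""

    n_subcarriers: int
    assignments: dict[str, set[int]] = field(default_factory=dict)

    def free(self) -> set[int]:
        """Sub-carrier indices not currently assigned to any edge module."""
        used = set().union(*self.assignments.values())
        return set(range(self.n_subcarriers)) - used

    def assign(self, edge: str, capacity_gbps: int) -> None:
        """(Re)assign enough sub-carriers to give an edge module its capacity."""
        if capacity_gbps % SUBCARRIER_GBPS:
            raise ValueError("capacity must be a multiple of 25 gigabits")
        wanted = capacity_gbps // SUBCARRIER_GBPS
        current = self.assignments.pop(edge, set())
        # Candidates: the edge's current sub-carriers plus any free ones;
        # the lowest-numbered candidates are taken.
        pool = sorted(self.free() | current)
        if wanted > len(pool):
            if current:
                self.assignments[edge] = current  # keep the old assignment
            raise ValueError("not enough free sub-carriers at the hub")
        self.assignments[edge] = set(pool[:wanted])

    def broadcast(self, payloads: dict[int, str]) -> dict[str, dict[int, str]]:
        """All edges see all sub-carriers; each receiver keeps only its own."""
        return {
            edge: {i: payloads[i] for i in sorted(indices)}
            for edge, indices in self.assignments.items()
        }


hub = XrHub(n_subcarriers=16)  # e.g. a 400-gigabit hub module
hub.assign("edge-1", 25)
hub.assign("edge-2", 100)
hub.assign("edge-1", 100)  # 25 gigabits today, 100 gigabits tomorrow
frames = {i: f"data-{i}" for i in range(hub.n_subcarriers)}
received = hub.broadcast(frames)
for edge, data in received.items():
    print(edge, sorted(data))
```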

System benefits

In a conventional aggregation network, the edge transceivers send traffic to an intermediate electrical aggregation switch. The switch’s line-side-facing transceivers then send on the aggregated traffic to the hub.

Using XR optics, the intermediate aggregation switch becomes redundant since the higher-capacity XR coherent module aggregates the traffic from the edge. Removing the switch and its one-to-one edge-facing transceivers accounts for the halving of the overall transceiver count and the overall 70 percent network cost saving (see diagram below).
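The halving of the transceiver count follows from simple counting. The minimal sketch below assumes N edge sites with one transceiver each; conventionally, an intermediate switch adds one edge-facing transceiver per site plus line-side transceivers toward the hub, each matched by a hub transceiver, whereas with XR the edge modules talk straight to hub XR modules. The topology is a hypothetical simplification: it reproduces the transceiver count, not the 70 percent cost figure, which also depends on platform costs.

```python
import math


def conventional_count(n_edge: int, edge_gbps: int, uplink_gbps: int) -> int:
    """Edge optics + switch edge-facing optics + switch line-side and hub optics."""
    uplinks = math.ceil(n_edge * edge_gbps / uplink_gbps)
    return n_edge + n_edge + 2 * uplinks


def xr_count(n_edge: int, edge_gbps: int, hub_gbps: int) -> int:
    """Edge XR modules talking directly to hub XR modules; no intermediate switch."""
    hubs = math.ceil(n_edge * edge_gbps / hub_gbps)
    return n_edge + hubs


# Assumed: sixteen 25-gigabit edge sites and 400-gigabit uplink/hub modules.
before = conventional_count(16, 25, 400)
after = xr_count(16, 25, 400)
print(before, after, f"{1 - after / before:.0%} fewer transceivers")
```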

The disadvantage of getting rid of the intermediate aggregation switch is minor in comparison to the plusses, says Infinera.

“In a network where all the traffic is going left to right, there is always an economic gain,” says Welch. And while a layer-2 aggregation switch enables statistical multiplexing to be applied to the traffic, it is insignificant when compared to the cost-savings XR optics brings, he says.

Challenges

XR transceivers will need to support sub-carriers and coherent signal processing as well as the language that defines the sub-carriers and their assignment codes. Accordingly, module makers will need to make a new class of XR pluggable modules.

“We are working with others,” says Welch. “The object is to bring the technology and a broad-base supply chain to the market.” The fastest way to achieve this, says Welch, is through a series of multi-source agreements (MSAs). Arista Networks and Lumentum were both quoted as part of Infinera’s XR Optics press release.

Another challenge is that a family of coherent digital signal processors (DSPs) will need to be designed that fit within the power constraints of the various slim client-side pluggable form factors.

Infinera stresses it is unveiling a technological development and not a product announcement. That will come later.

However, Welch says that XR optics will support a reach of hundreds of kilometres and even metro-regional distances of over 1,000km.

“We are comfortable we are working with partners to get this out,” says Welch. “We are comfortable we have some key technologies that will enhance these capabilities as well.”

Other applications

Infinera is focussing its XR optics on applications such as 5G, but it says the technology will benefit many network applications.

“If you look at the architecture in the data centre or look at core networks, they are all aggregation networks of one flavour or another,” says Welch. “Any type of power, cost, and operational savings of this magnitude should be evaluated across the board on all networks.”

Books in 2017: Part 2

Dave Welch, founder and chief strategy and technology officer at Infinera

One favourite book I read this year was Alexander Hamilton by Ron Chernow. It is a great history of the making of the US government and financial systems as well as a great biography. Another is The Gene: An Intimate History by Siddhartha Mukherjee, a wonderful discussion about the science and history of genetics.

Yuriy Babenko, senior expert NGN, Deutsche Telekom

As part of my reading in 2017 I selected two technical books, one general life-philosophy title and one strategy book.

Today’s internet infrastructure design is hardly possible without what we refer to as the cloud. Cloud is a very general term but I really like NIST’s definition: cloud computing is a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources that can be rapidly provisioned and released with minimal management effort or service provider interaction.

Cloud Native Infrastructure: Patterns for scalable infrastructure and applications in a dynamic environment by Kris Nova and Justin Garrison helps you understand the necessary characteristics of such cloud infrastructure and defines the capabilities of the service architecture that fits this model. The Cloud Native architecture is not just about ‘lift and shift’ into the cloud; it is the redesign of your services focusing on cloud elasticity, scalability, and security as well as operational models, including but not limited to infrastructure as code. If you have already heard of Kubernetes, Terraform and the Cloud Native Computing Foundation but want to understand how the various technologies and frameworks fit together, this is a great and easy read.

High Performance Browser Networking: What Every Web Developer Should Know About Networking and Web Performance by Ilya Grigorik provides a thorough look into the peculiarities of modern browser networking protocols, their foundations, and the methods and tools that help to optimise and increase the performance of internet sites.

Every serious business today has a web presence. Many services and processes are consumed through the browser, so a look behind the curtains of this infrastructure is informative and useful.

Probably one of the more interesting conclusions is that it is not always bandwidth that is necessary for a site’s successful operation but rather end-to-end latency. The book discusses HTTP, HTTP/2 and SPDY and will be of great interest to anyone who wants to refresh their knowledge of the history of the internet as well as to understand the peculiarities of performance optimisation of (big) internet sites.
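The latency point can be illustrated with a deliberately simple load-time model: total time is the number of round trips multiplied by the round-trip time (RTT), plus the page size divided by bandwidth. The figures (20 round trips, 100 ms RTT, a 500 KB page, 10 Mbit/s) are hypothetical, and the model ignores TCP slow start, parallel connections and caching.

```python
def page_load_ms(round_trips: int, rtt_ms: float, page_kb: float, bandwidth_mbps: float) -> float:
    """Toy model: latency cost of round trips plus raw transfer time."""
    latency_ms = round_trips * rtt_ms
    transfer_ms = page_kb * 8 / bandwidth_mbps  # kilobits / (megabits/s) = milliseconds
    return latency_ms + transfer_ms


base = page_load_ms(20, 100, 500, 10)
double_bw = page_load_ms(20, 100, 500, 20)  # twice the bandwidth
half_rtt = page_load_ms(20, 50, 500, 10)    # half the round-trip time
print(base, double_bw, half_rtt)  # 2400.0 2200.0 1400.0
```

In this example, doubling bandwidth saves 200 ms while halving the round-trip time saves 1,000 ms, because the round trips dominate.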

Principles: Life and Work by Ray Dalio is probably one of the most discussed books of 2017. Mr Dalio is one of the most successful hedge-fund investors of our generation. In this book, he decided to share the main life and business principles that have guided his decisions during the course of his life. The main message which Dalio shares is not to copy or use his particular principles, although you are likely to adopt several of them, but to have your own.

One of Dalio’s key ideas is that everything works as a machine, so if you define the general rules of how the machine (i.e. life in general) works, it will be significantly easier to follow the ups and downs and apply clear thinking in case of difficulties and challenges. He sums it up in an easy-to-comprehend approach that goes as follows: you try things out, reflect on what went well or wrong, log all the problems you face along the way, reflect on them, and formulate and write down the principles. In due course, you will end up with your own version of Principles. It sounds easy, but doing it is the key.

Edge Strategy: A New Mindset for Profitable Growth by Dan McKone and Alan Lewis is about the edges of a business, opportunities sitting comfortably in front of you and your business that can be ‘easily’ tackled and addressed.

Why would you go for a crazy new and risky business idea when there is a bunch of market opportunities just outside of the main door of your core business?

This sounds like “focus and expand” to me and makes a lot of sense. The authors identify three main “edges” which a business can address: product edge, journey edge and enterprise edge.

The book goes into detail about how product edge can be expanded (remember your shiny new iPhone leather case?); a firm can focus more on the complete customer journey (What are the jobs to be done? What problem is the customer really trying to solve? Airbnb service can be a great example); and finally leveraging the enterprise edge (like Amazon renting and selling unused server capacity via its AWS services).

Edge strategies are not new per se, but this book helps to formulate and structure the discussion in an understandable and comprehensive framework.

Books in 2013 - Part 2

Steve Alexander, CTO of Ciena

David and Goliath: Underdogs, Misfits, and the Art of Battling Giants by Malcolm Gladwell.

I’ve enjoyed some of Gladwell’s earlier works such as The Tipping Point and Outliers: The Story of Success. You often have to read his material with a bit of a skeptic's eye since he usually deals with people and events that are at least a standard deviation or two away from whatever is usually termed “normal.” In this case he makes the point that overcoming an adversity (and it can be in many forms) is helpful in achieving extraordinary results. It also reminded me of the many people who were skeptical about Ciena’s initial prospects back in the middle '90s when we first came to market as a “David” in a land of giant competitors. We clearly managed to prosper and have now outlived some of the giants of the day.

Overconnected: The Promise and Threat of the Internet by William Davidow.

I downloaded this to my iPad a while back and finally got to read it on a flight back from South America. On my trip what had I been discussing with customers? Improving network connections of course. I enjoyed it quite a bit because I see some of his observations within my own family. The desire to “connect” whenever something happens and the “positive feedback” that can result from an over-rich set of connections can be both quite amusing as well as a little scary! I don’t believe that all of the events that the author attributes to being overconnected are really as cause-and-effect driven as he may portray, but I found the possibilities for fads, market bubbles, and market collapses entertaining.

For another insight into such extremes see Extraordinary Popular Delusions and the Madness of Crowds by Charles Mackay, first published in the 1840s. We, as a species, have been a bit wacky for a long time.

Shadow Divers: The True Adventure of Two Americans Who Risked Everything to Solve One of the Last Mysteries of World War II by Robert Kurson.

Having grown up in the New York / New Jersey area and having listened to stories from my parents about the fear of sabotage in World War II (Google Operation Pastorius for some background) and from my grandparents, who had experienced the Black Tom Explosion during WW1, this book was a “don’t put it down till done” for me. I found it by accident when browsing a used book store. It’s available on Kindle and is apparently somewhat controversial because another diver has written a rebuttal to at least some of what was described. It is a great example of what it takes to both dive deep and solve a mystery.

David Welch, President, Infinera

Here is my cut. The first three books offer a perspective on how people think and I apply it to business.

- The Talent Code: Greatness Isn't Born. It's Grown. Here's How by Daniel Coyle.

- Mindset: The New Psychology of Success by Carol Dweck

- Moneyball: The Art of Winning an Unfair Game by Michael Lewis.

My non-work related book is Unbroken: A World War II Story of Survival, Resilience, and Redemption by Laura Hillenbrand.

Unfortunately, I rarely get time to read books, so the picking can be thin at times.

Marcus Weldon, President of Bell Labs and CTO, Alcatel-Lucent

I am currently re-reading Jon Gertner's history of Bell Labs, The Idea Factory: Bell Labs and the Great Age of American Innovation, which should be no surprise as I have just inherited the leadership of this phenomenal place. Much of what he observes is still highly relevant today and will inform the future that I am planning.

I joined Bell Labs in 1995 as a post-doctoral researcher in the famous, Nobel-prize winning Physics Division (Div111, as it was known) and so experienced much of this first hand. In particular, I recall being surrounded by the most brilliant, opinionated, odd, inspired, collaborative, competitive, driven and relaxed set of people I had ever met, all with the shared goal of solving the biggest problems in information and telecommunications.

Having recently returned to the 'bosom of bell', I find that, remarkably, much of that environment and pool of talent still remains. And that is hugely exciting as it means that we still have the raw ingredients for the next great era of Bell Labs. My hope is that 10 years from now Gertner will write a second edition or updated version of the tale that includes the renewed success of Bell Labs, and not just the historical successes.

On the personal front, I am reading whatever my kids ask me to read them. Two of the current favourites are Turkey Claus, about a turkey trying to avoid becoming the centrepiece of a Christmas feast by adapting and trying various guises, and Pete the Cat Saves Christmas, about an ailing feline Claus who needs average cat Pete to save the big day.

I am not sure there is a big message here, but perhaps it is that 'any one of us can be called to perform great acts, and can achieve them, and that adaptability is key to success'. And of course, there is some connection in this to the Bell Labs story above, so I will leave it there!


OFC/NFOEC 2012 industry reflections - Part 1

The recent OFC/NFOEC show, held in Los Angeles, had a strong vendor presence. Gazettabyte spoke with Infinera's Dave Welch, chief strategy officer and executive vice president, about his impressions of the show, capacity challenges facing the industry, and the importance of the company's photonic integrated circuit technology in light of recent competitor announcements.


"I need as much fibre capacity as I can get, but I also need reach"

Dave Welch, Infinera

Dave Welch values shows such as OFC/NFOEC: "I view the show's benefit as everyone getting together in one place and hearing the same chatter." This helps identify areas of consensus and subjects where there is less agreement.

And while there were no significant surprises at the show, it did highlight several shifts in how the network is evolving, he says.

"The first [shift] is the realisation that the layers are going to physically converge; the architectural layers may still exist but they are going to sit within a box as opposed to multiple boxes," says Welch.

The implementation of this started with the convergence of the Optical Transport Network (OTN) and dense wavelength division multiplexing (DWDM) layers, and the efficiencies that brings to the network.

That is a big deal, says Welch.

Optical designers have long been making transponders for optical transport. But now the element in the integrated OTN-DWDM layer isn't the transponder; it is the transceiver. “Even that subtlety means quite a bit,” says Welch. “It means that my metrics are no longer 'gray optics in, long-haul optics out', it is 'switch-fabric to fibre'.”

Infinera has its own OTN-DWDM platform convergence with the DTN-X platform, and the trend was reaffirmed at the show by the likes of Huawei and Ciena, says Welch: "Everyone is talking about that integration."

The second layer integration stage involves multi-protocol label switching (MPLS). Instead of transponder point-to-point technology, what is being considered is a common platform with an optical management layer, an OTN layer and, in future, an MPLS layer.

"The drive for that box is that you can't continue to scale the network in terms of bandwidth, power and cost by taking each layer as a silo and reducing it down," says Welch. "You have to gain benefits across silos for the scaling to keep up with bandwidth and economic demands."

Super-channels

Optical transport has always been about increasing the data rates carried over wavelengths. At 100 Gigabit-per-second (Gbps), however, companies now use one or two wavelengths - carriers - onto which data is encoded. As vendors look to the next generation of line-side optical transport, what follows 100Gbps, the use of multiple carriers - super-channels - will continue and this was another show trend.

Infinera's technology uses a 500Gbps super-channel based on dual polarisation, quadrature phase-shift keying (DP-QPSK). The company's transmit and receive photonic integrated circuit pair comprise 10 wavelengths (two 50Gbps carriers per 50GHz band).
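The super-channel arithmetic can be checked in a few lines. The sketch assumes the five 50GHz bands are contiguous and ignores guard bands and coding overhead, so the resulting 2 bit/s/Hz is a nominal spectral efficiency rather than a measured one.

```python
def superchannel(carriers: int, carrier_gbps: float, carriers_per_band: int, band_ghz: float):
    """Return total rate, band count, occupied spectrum and nominal spectral efficiency."""
    total_gbps = carriers * carrier_gbps
    bands = carriers // carriers_per_band
    spectrum_ghz = bands * band_ghz
    return total_gbps, bands, spectrum_ghz, total_gbps / spectrum_ghz


total, bands, spectrum, se = superchannel(10, 50, 2, 50)
print(total, bands, spectrum, se)  # 500 5 250 2.0
```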

Ciena and Alcatel-Lucent detailed their next-generation ASICs at OFC. These chips, to appear later this year, support higher-order modulation schemes such as 16-QAM (quadrature amplitude modulation), which can be carried over multiple wavelengths. Going from DP-QPSK to 16-QAM doubles the data rate of a carrier from 100Gbps to 200Gbps; using two carriers, each at 16-QAM, enables the two vendors to deliver 400Gbps.
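The doubling follows from bits per symbol: QPSK carries 2 bits per symbol on each polarisation and 16-QAM carries 4. The sketch assumes a 25 Gbaud symbol rate and two polarisations and computes nominal rates only; deployed line rates are somewhat higher because they also carry FEC and framing overhead.

```python
BITS_PER_SYMBOL = {"QPSK": 2, "8-QAM": 3, "16-QAM": 4}  # per polarisation


def carrier_gbps(baud_gbd: float, fmt: str, polarisations: int = 2) -> float:
    """Nominal carrier rate = symbol rate x bits per symbol x polarisations."""
    return baud_gbd * BITS_PER_SYMBOL[fmt] * polarisations


qpsk = carrier_gbps(25, "QPSK")     # assumed 25 Gbaud
qam16 = carrier_gbps(25, "16-QAM")
print(qpsk, qam16, 2 * qam16)  # 100 200 400
```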

"The concept of this all having to sit on one wavelength is going by the wayside," says Welch.

Capacity challenges

"Over the next five years there are some difficult trends we are going to have to deal with, where there aren't technical solutions," says Welch.

The industry is already talking about fibre capacities of 24 Terabits using coherent technology. Greater capacity is also starting to be traded against reach. "A lot of the higher QAM rate coherent doesn't go very far," says Welch. "16-QAM in true applications is probably a 500km technology."

This is new for the industry. In the past, a 10Gbps service could be scaled to an 800 Gigabit system using 80 DWDM wavelengths. The same applies to 100Gbps, which scales to 8 Terabits.

"I'm used to having high-capacity services and I'm used to having 80 of them, maybe 50 of them," says Welch. "When I get to a Terabit service - not that far out - we haven't come up with a technology that allows the fibre plant to go to 50-100 Terabit."

This issue is already leading to fundamental research looking at techniques to boost the capacity of fibre.

PICs

However, in the shorter term, the smarts to enable high-speed transmission and higher capacity over the fibre are coming from the next-generation DSP-ASICs.

Is Infinera's monolithic integration expertise, with its 500 Gigabit PIC, becoming a less important element of system design?

"PICs have a greater differentiation now than they did then," says Welch.

Unlike Infinera's 500Gbps super-channel, the recently announced ASICs use two carriers and 16-QAM to deliver 400Gbps. But the issue is the reach that can be achieved with 16-QAM: "The difference is 16-QAM doesn't satisfy any long-haul applications," says Welch.

Infinera argues that a fairer comparison with its 500Gbps PIC is dual-carrier QPSK, each carrier at 100Gbps. Once the ASIC and optics deliver 400Gbps using 16-QAM, it is no longer a valid comparison because of reach, he says.

Three parameters must be considered here, says Welch: dollars/Gigabit, reach and fibre capacity. "I have to satisfy all three for my application," he says.

Long-haul operators are extremely sensitive to fibre capacity. "I need as much fibre capacity as I can get," he says. "But I also need reach."

In data centre applications, for example, reach is becoming an issue. "For the data centre there are fewer on and off ramps and I need to ship truly massive amounts of data from one end of the country to the other, or one end of Europe to the other."

The lower reach of 16-QAM is suited to the metro but Welch argues that is one segment that doesn't need the highest capacity but rather lower cost. Here 16-QAM does reduce cost by delivering more bandwidth from the same hardware.

Meanwhile, Infinera is working on its next-generation PIC that will deliver a Terabit super-channel using DP-QPSK, says Welch. The PIC and the accompanying next-generation ASIC will likely appear in the next two years.

Such a 1 Terabit PIC will reduce the cost of optics further but it remains to be seen how Infinera will increase the overall fibre capacity beyond its current 80x100Gbps. The integrated PIC will double the 100Gbps wavelengths that will make up the super-channel, increasing the long-haul line card density and benefiting the dollars/Gigabit and reach metrics.

In part two, ADVA Optical Networking, Ciena, Cisco Systems and market research firm Ovum reflect on OFC/NFOEC.

Infinera details Terabit PICs, 5x100G devices set for 2012

Infinera has given first details of its terabit coherent detection photonic integrated circuits (PICs). The pair - a transmitter and a receiver PIC - implement a ten-channel 100 Gigabit-per-second (Gbps) link using polarisation multiplexing quadrature phase-shift keying (PM-QPSK). The Infinera development work was detailed at OFC/NFOEC, held in Los Angeles from March 6 to 10.

Infinera has recently demonstrated its 5x100Gbps PIC carrying traffic between Amsterdam and London within Interoute Communications’ pan-European network. The 5x100Gbps PIC-based system will be available commercially in 2012.

“We think we can drive the system from where it is today – 8 Terabits-per-fibre - to around 25 Terabits-per-fibre”

Dave Welch, Infinera

Why is this significant?

The widespread adoption of 100Gbps optical transport technology will be driven by how quickly its cost can be reduced to compete with existing 40Gbps and 10Gbps technologies.

Whereas the industry is developing 100Gbps line cards and optical modules, Infinera has demonstrated a 5x100Gbps coherent PIC based on 50GHz channel spacing while its terabit PICs are in the lab.

If Infinera meets its manufacturing plans, it will have a compelling 100Gbps offering as it takes on established 100Gbps players such as Ciena. Infinera has been late in the 40Gbps market, competing with its 10x10Gbps PIC technology instead.

40 and 100 Gigabit

Infinera views 40Gbps and 100Gbps optical transport in terms of the dynamics of the high-capacity fibre market: in particular, what is the right technology to get the most capacity out of a fibre, and what is the best dollar-per-Gigabit technology at a given moment.

For the long-haul market, Dave Welch, chief strategy officer at Infinera, says 100Gbps provides 8 Terabits (Tb) of capacity using 80 channels versus 3.2Tb using 40Gbps (80x40Gbps). The 40Gbps total capacity can be doubled to 6.4Tb (160x40Gbps) if 25GHz-spaced channels are used, which is Infinera’s approach.

“The economics of 100 Gigabit appear to be able to drive the dollar-per-gigabit down faster than 40 Gigabit technology,” says Welch. If operators need additional capacity now, they will adopt 40Gbps, he says, but if they have spare capacity and can wait until 2012, they can use 100Gbps. “The belief is that they [operators] will get more capacity out of their fibre and at least the same if not better economics per gigabit [using 100Gbps],” says Welch. Indeed, Welch argues that by 2012 100Gbps economics will be superior to 40Gbps coherent, leading to its “rapid adoption”.

For metro applications, achieving terabits of capacity in fibre is less of a concern. What matters is matching speeds with services while achieving the lowest dollar-per-gigabit. And it is here – for sub-1000km networks – where 40Gbps technology is being mostly deployed. “Not for the benefit of maximum fibre capacity but to protect against service interfaces,” says Welch, who adds that 40 Gigabit Ethernet (GbE) rather than 100GbE is the preferred interface within data centres.

Shorter-reach 100Gbps

Companies such as ADVA Optical Networking and chip company MultiPhy highlight the merits of a second 100Gbps technology, alongside coherent, based on direct-detection modulation for metro applications. Direct detection is suited to distances from 80km up to 1000km, to connect data centres for example.

Is this market of interest to Infinera? “This is a great opportunity for us,” says Welch.

The company’s existing 10x10Gbps PIC can address this segment in that it is at least 4x cheaper than emerging 100Gbps coherent solutions over the next 18 months, says Welch, who claims that the company’s 10x10Gbps PIC is making ‘great headway’ in the metro.

“If the market is not trying to get the maximum capacity but best dollar-per-gigabit, it is not clear that full coherent, at least in discrete form, is the right answer,” says Welch. But the cost reduction delivered by coherent PIC technology does make it more competitive for cost-sensitive markets like metro.

A 100Gbps coherent discrete design is relatively costly since it requires two lasers (one acting as a local oscillator, or LO, at the receiver; see Figure 1), sophisticated optics and a power-hungry digital signal processor (DSP). “Once you go to photonic integration the extra lasers and extra optics, while a significant engineering task, are not inhibitors in terms of the optics’ cost.”

Coherent PICs can be used ‘deeper in the network’ (closer to the edge) while shifting the trade-offs between coherent and on-off keying. However, even if the advent of a PIC makes coherent more economical, the DSP’s power dissipation remains a factor in the trade-off between on-off keying and coherent at 100Gbps line rates.

Welch does not dismiss the idea of Infinera developing a metro-centric PIC to reduce costs further. He points out that while such a solution may be of particular interest to internet content companies, their networks are relatively simple point-to-point ones. As such their needs differ greatly from cable operators and telcos, in terms of the services carried and traffic routing.

PIC challenges

Figure 1: Infinera's terabit PM-QPSK coherent receiver PIC architecture


There are several challenges when developing multi-channel 100Gbps PICs. “The most difficult thing going to a coherent technology is you are now dealing with optical phase,” says Welch. This requires highly accurate control of the PIC’s optical path lengths.

The laser wavelength is 1.5 micron, and within the PIC's indium phosphide waveguides this is reduced to about a third, or 0.5 micron. Fine control of the optical path lengths is thus required to tenths of a wavelength, or tens of nanometres (nm).

Achieving a high manufacturing yield of such complex PICs is another challenge. The terabit receiver PIC detailed in the OFC paper integrates 150 optical components, while the 5x100Gbps transmit and receive PIC pair integrate the equivalent of 600 optical components.
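One way to see why component count matters is a simple compound-yield model in which every component must work and defects are independent. The 99.9 percent per-component yield is a hypothetical figure chosen for illustration; real fabs use defect-density models, and the article gives no yield numbers for Infinera's PICs.

```python
def compound_yield(per_component_yield: float, components: int) -> float:
    """Probability that all components work, assuming independent defects."""
    return per_component_yield ** components


for n in (150, 600):
    print(n, "components:", round(compound_yield(0.999, n), 2))
# 150 -> about 0.86, 600 -> about 0.55
```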

Moving from a five-channel (500Gbps) to a ten-channel (terabit) PIC is also a challenge. There are unwanted interactions in terms of the optics and the electronics. “If I turn one laser on adjacent to another laser it has a distortion, while the light going through the waveguides has potential for polarisation scattering,” says Welch. “It is very hard.”

But what the PICs show, he says, is that Infinera’s manufacturing process is like a silicon fab’s. “We know what is predictable and the [engineering] guys can design to that,” says Welch. “Once you have got that design capability, you can envision we are going to do 500Gbps, a terabit, two terabits, four terabits – you can keep on marching as far as the gigabits-per-unit [device] can be accomplished by this technology.”

The OFC post-deadline paper details Infinera's 10-channel transmitter PIC which operates at 10x112Gbps or 1.12Tbps.

Power dissipation

The optical PIC is not what dictates the overall achievable bandwidth but rather the total power dissipation of the DSPs on a line card. This is determined by the CMOS process used to make the DSP ASICs, whether 65nm, 40nm or potentially 28nm.

Infinera has not said what CMOS process it is using. What Infinera has chosen is a compromise between “being aggressive in the industry and what is achievable”, says Welch. Yet Infinera also claims that its coherent solution consumes less power than existing 100Gbps coherent designs, partly because the company has implemented the DSP in a more advanced CMOS node than what is currently being deployed. This suggests that Infinera is using a 40nm process for its coherent receiver ASICs. And power consumption is a key reason why Infinera is entering the market with a 5x100Gbps PIC line card. For the terabit PIC, Infinera will need to move its ASICs to the next-generation process node, he says.

Having an integrated design saves power in terms of the speeds at which Infinera runs its serdes (serialiser/deserialiser) circuitry and the interfaces between blocks. “For someone else to accumulate 500Gbps of bandwidth and get it to a switch, this needs to go over feet of copper cable, and over a backplane when one 100Gbps line card talks to a second one,” says Welch. “That takes power - we don’t; it is all right there within inches of each other.”

Infinera can also trade analogue-to-digital (A/D) sampling speed of its ASIC with wavelength count depending on the capacity required. “Now you have a PIC with a bank of lasers, and FlexCoherent allows me to turn a knob in software so I can go up in spectral efficiency,” he says, trading optical reach with capacity. FlexCoherent is Infinera’s technology that will allow operators to choose what coherent optical modulation format to use on particular routes. The modulation formats supported are polarisation multiplexed binary phase-shift keying (PM-BPSK) and PM-QPSK.
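The software 'knob' can be pictured as a per-route choice of the highest-capacity format whose reach still covers the route. This is a hypothetical sketch, not Infinera's FlexCoherent interface: the function name and the reach limits are invented for illustration. The grounded part is that PM-BPSK carries half the bits per symbol of PM-QPSK and, in exchange, allows longer reach.

```python
from typing import Dict, Optional

# Bits per symbol summed over both polarisations.
BITS_PER_SYMBOL = {"PM-BPSK": 2, "PM-QPSK": 4}


def pick_format(route_km: float, max_reach_km: Dict[str, float]) -> Optional[str]:
    """Choose the highest-capacity format whose reach still covers the route."""
    usable = [fmt for fmt, reach in max_reach_km.items() if reach >= route_km]
    if not usable:
        return None  # route too long for any configured format
    return max(usable, key=lambda fmt: BITS_PER_SYMBOL[fmt])


# Hypothetical reach limits, for illustration only.
reach = {"PM-BPSK": 4000, "PM-QPSK": 2000}
print(pick_format(1500, reach), pick_format(3000, reach), pick_format(5000, reach))
# PM-QPSK PM-BPSK None
```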

Dual polarisation 25Gbaud constellation diagrams


What next?

Infinera says it is an adherent of higher quadrature amplitude modulation (QAM) rates to increase the data rate per channel beyond 100Gbps. As a result, FlexCoherent will in future enable the selection of higher-speed modulation schemes such as 8-QAM and 16-QAM. “We think we can drive the system from where it is today – 8 Terabits-per-fibre - to around 25 Terabits-per-fibre.”

But Welch stresses that at 16-QAM and higher-order schemes, speed must be traded against optical reach. Fibre is different to radio, he says. When radio uses higher QAM rates, it compensates by increasing the launch power. In contrast, there is a limit with fibre. “The nonlinearity of the fibre inhibits higher and higher optical power,” says Welch. “The network will have to figure out how to accommodate that, although there is still significant value in getting to that [25Tbps per fibre],” he says.

The company has said that its 500 Gigabit PIC will move to volume manufacturing in 2012. Infinera is also validating the system platform that will use the PIC, which it says has a five-terabit switching capacity.

Infinera is also offering a 40Gbps coherent (non-PIC-based) design this year. “We are working with third-party support to make a module that will have unique performance for Infinera,” says Welch.

The next challenge is getting the terabit PIC onto the line card. Based on the gap between previous OFC papers and volume manufacturing, the 10x100Gbps PIC can be expected in volume by 2014 if all goes to plan.