The various paths to co-packaged optics

Near package optics has emerged as companies have encountered the complexities of co-packaged optics. It should not be viewed as an alternative to co-packaged optics but rather a pragmatic approach for its implementation.

Co-packaged optics will be one of several hot topics at the upcoming OFC show in March.

Placing optics next to silicon is seen as the only way to meet the future input-output (I/O) requirements of ICs such as Ethernet switches and high-end processors.

For now, pluggable optics do the job of routing traffic between Ethernet switch chips in the data centre. The pluggable modules sit on the switch platform’s front panel at the edge of the printed circuit board (PCB) hosting the switch chip.

But with switch silicon capacity doubling every two years, engineers are being challenged to get data into and out of the chip while ensuring power consumption does not rise.

One way to boost I/O and reduce power is to use on-board optics, bringing the optics onto the PCB nearer the switch chip to shorten the electrical traces linking the two.

The Consortium of On-Board Optics (COBO), set up in 2015, has developed specifications to ensure interoperability between on-board optics products from different vendors.

However, the industry has favoured a still shorter link distance, coupling the optics and ASIC in one package. Such co-packaging is tricky, which explains why yet another approach has emerged: near package optics.

I/O bottleneck

“Everyone is looking for tighter and tighter integration between a switch ASIC, or ‘XPU’ chip, and the optics,” says Brad Booth, president at COBO and principal engineer, Azure hardware architecture at Microsoft. XPU is the generic term for an IC such as a CPU, a graphics processing unit (GPU) or even a data processing unit (DPU).

What kick-started interest in co-packaged optics was the desire to reduce power consumption and cost, says Booth. These remain important considerations but the biggest concern is getting sufficient bandwidth on and off these chips.

“The volume of high-speed signalling is constrained by the beachfront available to us,” he says.

Booth cites the example of a 16-lane PCI Express bus that requires 64 electrical traces for data alone, not including the power and ground signalling. “I can do that with two fibres,” says Booth.
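Booth's trace count can be reproduced with simple arithmetic. The sketch below assumes that each PCIe lane uses one differential pair per direction, with two wires per pair; the function name is illustrative and not taken from any library.

```python
def pcie_data_traces(lanes: int) -> int:
    """Count the data traces of a PCIe link, excluding power and ground."""
    directions = 2      # assumption: separate transmit and receive paths per lane
    wires_per_pair = 2  # assumption: differential signalling, two wires per pair
    return lanes * directions * wires_per_pair


print(pcie_data_traces(16))  # 64
```

The same function gives 16 traces for a 4-lane link, which shows how quickly the electrical beachfront is consumed as lanes are added.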

Near package optics

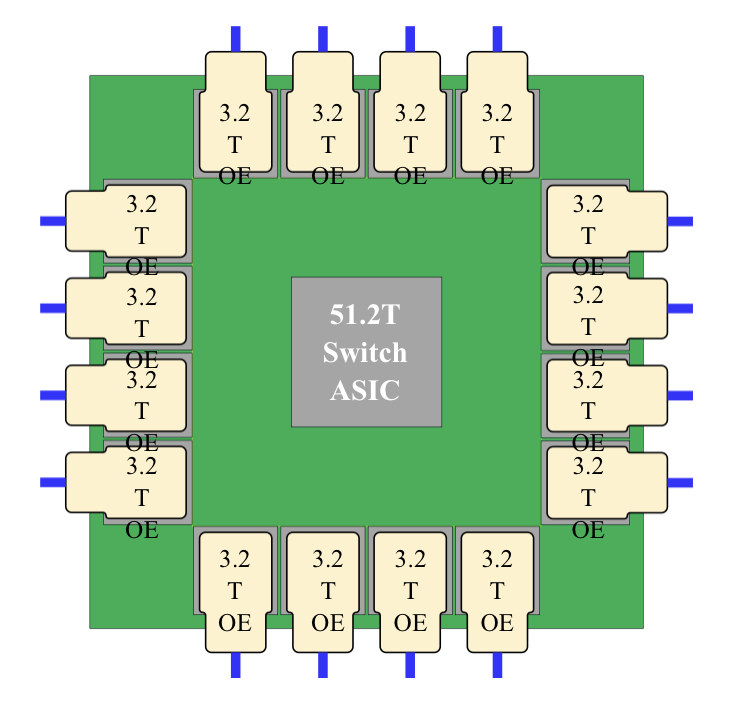

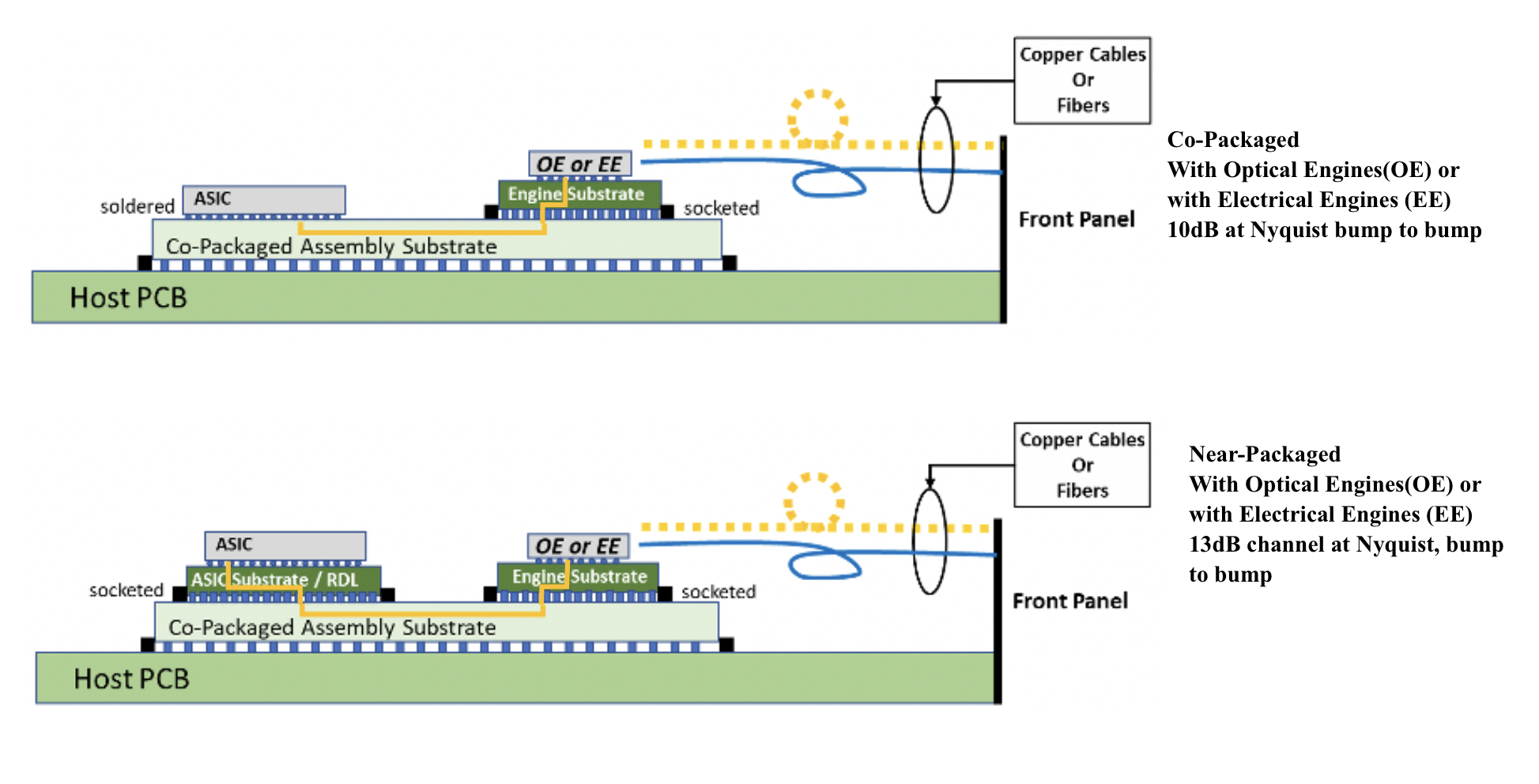

With co-packaged optics, the switch chip is typically surrounded by 16 optical modules, all placed on an organic substrate (see diagram).

“Another name for it is a multi-chip module,” says Nhat Nguyen, senior director, solutions architecture at optical I/O specialist, Ayar Labs.

A 25.6-terabit Ethernet switch chip requires 16, 1.6 terabits-per-second (1.6Tbps) optical modules while upcoming 51.2-terabit switch chips will use 3.2Tbps modules.
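The module rates follow from dividing the switch capacity evenly across the modules. A minimal sketch, assuming the 16-module arrangement described here and an even split of traffic (the function name is hypothetical):

```python
def module_rate_tbps(switch_capacity_tbps: float, modules: int = 16) -> float:
    """Capacity each optical module must carry if the load is split evenly."""
    return switch_capacity_tbps / modules


for capacity in (25.6, 51.2):
    rate = module_rate_tbps(capacity)
    print(f"{capacity}-terabit switch: {rate:.1f}Tbps per module")
```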

“The issue is that the multi-chip module can only be so large,” says Nguyen. “It is challenging with today’s technology to surround the 51.2-terabit ASIC with 16 optical modules.”

Near package optics tackles this by using a high-performance PCB substrate – an interposer – that sits on the host board, in contrast to co-packaged optics where the modules surround the chip on a multi-chip module substrate.

The near package optics’ interposer is more spacious, making the signal routing between the chip and optical modules easier while still meeting signal integrity requirements. Using the interposer means the whole PCB doesn’t need upgrading, which would be extremely costly.

Some co-packaged optics designs will use components from multiple suppliers. One concern is how to service a failed optical engine when testing the design before deployment. “That is one reason why a connector-based solution is being proposed,” says Booth. “And that also impacts the size of the substrate.”

A larger substrate is also needed to support both electrical and optical interfaces from the switch chip.

Platforms will not become all-optical immediately and direct-attached copper cabling will continue to be used in the data centre. However, the issue with electrical signalling, as mentioned, is that it needs more space than fibre.

“We are in a transitional phase: we are not 100 per cent optics, we are not 100 per cent electrical anymore,” says Booth. “How do you make that transition and still build these systems?”

Perspectives

Ayar Labs views near package optics as akin to COBO. “It’s an attempt to bring COBO much closer to the ASIC,” says Hugo Saleh, senior vice president of commercial operations and managing director of Ayar Labs U.K.

However, COBO’s president, Booth, stresses that near package optics is different from COBO’s on-board optics work.

“The big difference is that COBO uses a PCB motherboard to do the connection whereas near package optics uses a substrate,” he says. “It is closer than where COBO can go.”

It means that with near package optics, there is no high-speed data bandwidth going through the PCB.

Booth says near package optics came about once it became obvious that the latest 51.2-terabit designs – the silicon, optics and the interfaces between them – cannot fit on even the largest organic substrates.

“It was beyond the current manufacturing capabilities,” says Booth. “That was the feedback that came back to Microsoft and Facebook (Meta) as part of our Joint Development Foundation.”

Near package optics is thus a pragmatic solution to an engineering challenge, says Booth. The larger substrate remains a form of co-packaging but it has been given a distinct name to highlight that it is different to the early-version approach.

Nathan Tracy of TE Connectivity, who is also the OIF’s vice president of marketing, admits he is frustrated that the industry is using two terms since co-packaged optics and near package optics achieve the same thing. “It’s just a slight difference in implementation,” says Tracy.

The OIF is an industry forum studying the applications and technology issues of co-packaging and this month published its framework Implementation Agreement (IA) document.

COBO is another organisation working on specifications for co-packaged optics, focussing on connectivity issues.

Technical differences

Ayar Labs highlights the power penalty of near package optics, which stems from its longer channel lengths.

For near package optics, lengths between the ASIC and optics can be up to 150mm with the channel loss constrained to 13dB. This is why the OIF is developing the XSR+ electrical interface, to expand the XSR’s reach for near package optics.

In contrast, co-packaged optics confines the modules and host ASIC to 50mm of each other. “The channel loss here is limited to 10dB,” says Nguyen. Co-packaged optics has a lower power consumption because of the shorter spans and 3dB saving.
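To put the 3dB gap between the two loss budgets in perspective, the standard decibel conversion can be applied. This is a sketch of that conversion only; it does not model the actual power consumption of either design, and the variable names are illustrative.

```python
def db_to_power_ratio(db: float) -> float:
    """Convert a decibel value to a linear power ratio."""
    return 10 ** (db / 10)


npo_loss_db = 13.0  # near package optics channel loss limit quoted above
cpo_loss_db = 10.0  # co-packaged optics channel loss limit quoted above

extra_db = npo_loss_db - cpo_loss_db
ratio = db_to_power_ratio(extra_db)
print(f"{extra_db:.0f}dB of extra loss is a {ratio:.2f}x power ratio")
```

A 3dB difference corresponds to roughly a factor of two in signal power, which is why the longer near package channel asks more of the electrical interface.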

Ayar Labs highlights its optical engine technology, the TeraPHY chiplet that combines silicon photonics and electronics in one die. The optical module surrounding the ASIC in a co-packaged design typically comprises three chips: the DSP, electrical interface and photonics.

“We can place the chiplet very close to the ASIC,” says Nguyen. The distance between the ASIC and the chiplet can be as close as 3-5mm. Whether on the same interposer Ayar Labs refers to such a design using a third term: in-package optics.

Ayar Labs says its chiplet can also be used for optical modules as part of a co-packaged design.

The very short distances using the chiplet result in a power efficiency of 5pJ/bit whereas that of an optical module is 15pJ/bit. Using TeraPHY for an optical module co-packaged design, the power efficiency is some 7.5pJ/bit, half that of a 3-chip module.
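These energy-per-bit figures translate into watts once a data rate is chosen. The sketch below assumes a 1.6Tbps interface purely for illustration (the article does not tie the figures to a specific rate), and the function and label names are hypothetical.

```python
def io_power_watts(rate_tbps: float, energy_pj_per_bit: float) -> float:
    """Power is bits per second multiplied by joules per bit."""
    bits_per_second = rate_tbps * 1e12
    joules_per_bit = energy_pj_per_bit * 1e-12
    return bits_per_second * joules_per_bit


RATE_TBPS = 1.6  # assumption: illustrative rate, matching one 1.6Tbps module

designs = {
    "three-chip optical module": 15.0,
    "TeraPHY-based optical module": 7.5,
    "in-package chiplet": 5.0,
}
for name, pj_per_bit in designs.items():
    print(f"{name}: {io_power_watts(RATE_TBPS, pj_per_bit):.0f}W")
```

Under that assumption the three options come to 24W, 12W and 8W respectively, so the ratios between the designs are unchanged whatever rate is chosen.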

A 3-5mm distance also reduces the latency while the bandwidth density of the chiplet, measured in Gigabit/s/mm, is higher than the optical module.

Co-existence

Booth refers to near package optics as ‘CPO Gen-1’, the first generation of co-packaged optics.

“In essence, you have got to use technologies you have in hand to be able to build something,” says Booth. “Especially in the timeline that we want to demonstrate the technology.”

Is Microsoft backing near package optics?

“We are definitely saying yes if this is what it takes to get the first level of specifications developed,” says Booth.

But that does not mean the first products will be exclusively near package optics.

“Both will be available and around the same time,” says Booth. “There will be near package optics solutions that will be multi-vendor and there will be more vertically-integrated designs; like Broadcom, Intel and others can do.”

From an end-user perspective, a multi-vendor capability is desirable, says Booth.

Ayar Labs’ Saleh sees two developing paths.

The first is optical I/O to connect chips in a mesh or as part of memory semantic designs used for high-performance computing and machine learning. Here, the highest bandwidth and lowest power are key design goals.

Ayar Labs has just announced a strategic partnership with high performance computing leader, HPE, to design future silicon photonics solutions for HPE’s Slingshot interconnect that is used for upcoming Exascale supercomputers and also in the data centre.

The second path concerns Ethernet switch chips and here Saleh expects both solutions to co-exist: near package optics will be an interim solution with co-packaged optics dominating longer term. “This will move more slowly as there needs to be interoperability and a wide set of suppliers,” says Saleh.

Booth expects continual design improvements to co-packaged optics. Further out, 2.5D and 3D chip packaging techniques, where silicon is stacked vertically, will be used as part of co-packaged optics designs, he says.

Infinera’s ICE6 crosses the 100-gigabaud threshold

Coherent discourse 3

- The ICE6 Turbo can send two 800-gigabit wavelengths over network spans of 1,100-1,200km using a 100.4 gigabaud (GBd) symbol rate.

- The enhanced reach can reduce the optical transport equipment needed in a network by 25 to 30 per cent.

Infinera has enhanced the optical performance of its ICE6 coherent engine, increasing by up to 30 per cent the reach of its highest-capacity wavelength transmissions.

The ICE6 Turbo coherent optical engine can send 800-gigabit optical wavelengths over 1,100-1,200km compared to the ICE6’s reach of 700-800km.

ICE6 Turbo uses the same coherent digital signal processor (DSP) and optics as the ICE6 but operates at a higher symbol rate of 100.4GBd.

“This is the first time 800 gigabits can hit long-haul distances,” says Ron Johnson, general manager of Infinera’s optical systems & network solutions group.

Baud rates

Infinera’s ICE6 operates at 84-96GBd to transmit two wavelengths, each ranging from 200 to 800 gigabits. This gives a total capacity of 1.6 terabits, able to send 4×400 Gigabit Ethernet (GbE) or 16×100GbE channels, for example.
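The client-side combinations follow from the two-wavelength total. A minimal sketch of the arithmetic, with illustrative names that are not part of any Infinera interface:

```python
WAVELENGTHS = 2
MAX_RATE_PER_WAVELENGTH_GBPS = 800

total_gbps = WAVELENGTHS * MAX_RATE_PER_WAVELENGTH_GBPS


def client_channels(total_capacity_gbps: int, client_rate_gbps: int) -> int:
    """Number of whole client channels that fit in the line capacity."""
    return total_capacity_gbps // client_rate_gbps


print(f"Total capacity: {total_gbps / 1000}Tbps")
for client_rate in (400, 100):
    count = client_channels(total_gbps, client_rate)
    print(f"{count}×{client_rate}GbE")
```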

The ICE6’s coherent DSP uses sub-carriers, and their number and baud rates are tuned to the higher symbol rate.

The bit rate sent is defined using long-codeword probabilistic constellation shaping (LC-PCS) while Infinera also uses soft-decision FEC gain sharing between the DSP’s two channels.

The ICE6 Turbo adds several more operating modes to the DSP that exploit this higher baud rate, says Rob Shore, senior vice president of marketing at Infinera.

Reach

Infinera says that the ICE6 Turbo can also send two 600-gigabit wavelengths over 4,000km.

“This is almost every network in the world except sub-sea,” says Shore, adding that the enhanced reach will reduce the optical transport equipment needed in a network by 25 to 30 per cent.

“One thousand kilometres sending 2×800 gigabits or 4×400GbE is a powerful thing,” adds Johnson. “We’ll see a lot of traction with the content providers with this.”

Increasing symbol rate

Optical transport system designers continue to push the symbol rate. Acacia, part of Cisco, has announced its next 128GBd coherent engine while Infinera’s ICE6 Turbo now exceeds 100GBd.

Increasing the baud rate boosts the capacity of a single coherent transceiver while lowering the cost and power used to transport data. A higher baud rate can also send the same data further, as with the ICE6 Turbo.

“The original ICE6 device was targeted for 84GBd but it had that much overhead in the design to allow for these higher baud rate modes,” says Johnson. “We strived for 84GBd and technically we can go well beyond 100.4GBd.”

This is common, he adds.

The electronics of the coherent design – the silicon germanium modulator drivers, trans-impedance amplifiers, and analogue-to-digital and digital-to-analogue converters – are designed to perform at a certain level and are typically pushed harder and harder over time.

Baud rate versus parallel-channel designs

Shore believes that the industry is fast approaching the point where upping the symbol rate will no longer make sense. Instead, coherent engines will embrace parallel-channel designs.

Already, upping the baud rate no longer improves spectral efficiency. “The industry has lost a vector in which we typically expect improvements generation by generation,” says Shore. “We now only have the vector of lowering cost-per-bit.”

At some point, coherent designs will use multiple DSP cores and wavelengths. What matters will be the capacity of the optical engine rather than the capacity of an individual wavelength, says Shore.

“We have had a lot of discussion about parallelism versus baud rate,” adds Johnson.

Already there is fragmentation with embedded and pluggable coherent optics designs. Embedded designs are optimised for high-performance spectral efficiency while for pluggables cost-per-bit is key.

This highlights that there is more than one optimisation approach, says Johnson: “We have got to develop multiple technologies to hit all those different optimisations.”

Infinera will use 5nm and 3nm CMOS for its future coherent DSPs, optimised for different parts of the network.

Infinera will keep pushing the baud rate but Johnson admits that at some point the cost-per-bit will start to rise.

“At present, it is not clear that doubling the baud rate again is the right answer,” says Johnson. “Maybe it is a combination of a little bit more [symbol rate] and parallelism, or it is moving to 200GBd.”

The key is to explore the options and deliver coherent technology consistently.

“If we put too much risk in one area and drive too hard, it has the potential to push our time-to-market out,” says Johnson.

The ICE6 Turbo will be showcased at the OFC show being held in San Diego in March.

Building the data rate out of smaller baud rates

In the second article addressing the challenges of increasing the symbol rate of coherent optical transport systems, Professor Andrew Lord, BT’s head of optical network research, argues that the time is fast approaching to consider alternative approaches.

Coherent discourse 2

Coherent optical transport systems have advanced considerably in the last decade to cope with the relentless growth of internet traffic.

One-hundred-gigabit wavelengths, long the networking standard, have been replaced by 400-gigabit ones while state-of-the-art networks now use 800 gigabits.

Increasing the data carried by a single wavelength requires advancing the coherent digital signal processor (DSP), electronics and optics.

It also requires faster symbol rates.

Moving from 32 to 64 to 96 gigabaud (GBd) has increased the capacity of coherent transceivers from 100 to 800 gigabits.

Last year, Acacia, now part of Cisco, announced the first 1-terabit-plus wavelength coherent modem that uses a 128GBd symbol rate.

Other vendors will also be detailing their terabit coherent designs, perhaps as soon as the OFC show, to be held in San Diego in March.

The industry consensus is that 240GBd systems will be possible towards the end of this decade although all admit that achieving this target is a huge challenge.

Baud rate

Upping the baud rate delivers several benefits.

A higher baud rate increases the capacity of a single coherent transceiver while lowering the cost and power used to transport data. Simply put, operators get more bits for the buck by upgrading their coherent modems.

But some voices in the industry question the relentless pursuit of higher baud rates. One is Professor Andrew Lord, head of optical network research at BT.

“Higher baud rate isn’t necessarily a panacea,” says Lord. “There is probably a stopping point where there are other ways to crack this problem.”

Parallelism

Lord, who took part in a workshop at ECOC 2021 addressing whether 200+ GBd transmission systems are feasible, says he used his talk to get people to think about this continual thirst for higher and higher baud rates.

“I was asking the community, ‘Are you pushing this high baud rate because it is a competition to see who builds the biggest rate?’ because there are other ways of doing this,” says Lord.

One such approach is to adopt a parallel design, integrating two channels into a transceiver instead of pushing a single channel’s symbol rate.

“What is wrong with putting two lasers next to each other in my pluggable?” says Lord. “Why do I have to have one? Is that much cheaper?”

For an operator, what matters is the capacity rather than how that capacity is achieved.

Lord also argues that having a pluggable with two lasers gives an operator flexibility.

A single-laser transceiver can only go in one direction but with two, networking is possible. “The baud rate stops that, it’s just one laser so I can’t do any of that anymore,” says Lord.

The point is being reached, he says, where having two lasers, each at 100GBd, probably runs better than a single laser at 200GBd.

Excess capacity

Lord cites other issues arising from the use of ever-faster symbol rates.

What about links that don’t require the kind of capacity offered by very high baud rate transceivers?

If the link spans a short distance, it may be possible to use a higher modulation scheme such as 32-ary quadrature amplitude modulation (32-QAM) or even 64-QAM. With a 200GBd symbol rate transceiver, that equates to a 3.2-terabit transceiver. “Yet what if I only need 100 gigabits?” says Lord.

One option is to turn down the data rate using, say, probabilistic constellation shaping. But then the high symbol rate would still require a 200GHz channel. Baud rate equals spectrum, says Lord, and that would be wasting the fibre’s valuable spectrum.

Another solution would be to insert a different transceiver but that causes sparing issues for the operators.

Alternatively, the baud rate could be turned down. “But would operators do that?” says Lord. “If I buy a device capable of 200GBd, wouldn’t I always operate it at its maximum or would I turn it down because I want to save spectrum in some places?”

Turning the baud rate down also requires the freed spectrum to be used and that is an optical network management challenge.

“If I need to have to think about defragmenting the network, I don’t think operators will be very keen to do that,” says Lord.

Pushing electronics

Lord raises another challenge: the coherent DSP’s analogue-to-digital and digital-to-analogue converters.

Operating at a 200+ GBd symbol rate means the analogue-to-digital converters at the coherent receiver must operate at least at 200 giga-samples per second.

“You have to start sampling incredibly fast and that sampling doesn’t work very well,” says Lord. “It’s just hard to make the electronics work together and there will be penalties.”

Lord cites research work at UCL that suggests that the limitations of the electronics – and the converters in particular – are not negligible. Just connecting two transponders over a short piece of fibre shows a penalty.

“There shouldn’t be any penalty but there will be, and the higher the baud rate, you will get a penalty back-to-back because the electronics are not perfect,” he says.

He suspects the penalty is of the order of 1 or 2dB. That is a penalty lost to the system margin of the link before the optical transmission even starts.

Such loss is clearly unacceptable especially when considering how hard engineers are working to enhance algorithms for even a few tenths of a dB gain.

Lord expects that such compromised back-to-back performance will ultimately lead to the use of multiple adjacent carriers.

“Advertising the highest baud rate is obviously good for publicity and shows industry leadership,” he concludes. “But it does feel that we are approaching a limit for this, and then the way forward will be to build aggregate data rates out of smaller baud rates.”

Books read in 2021: Final Part

In this final part of the favoured reads of 2021, the contributors are Daryl Inniss of OFS, Vladimir Kozlov of LightCounting Market Research, and Gazettabyte’s editor.

Daryl Inniss, Director, Business Development at OFS

Four thousand weeks is the average human lifetime.

Oliver Burkeman’s book, Four Thousand Weeks: Time Management For Mortals, is a guide to using the finite duration of our lives.

Burkeman argues that by ignoring the reality of our limited lifetime, we fill our lives with busyness and distractions and fail to achieve the very fullness that we seek.

While the message is sobering, Burkeman presents thought-provoking and amusing examples and stories, and turns them into positive action.

An example is his argument that our lives are insignificant and that, regardless of our accomplishments, the universe continues unperturbed. Setting unrealistic goals is one consequence of our attempt to achieve greatness.

On the other hand, recognising our inability to transform the world should give us enormous freedom to focus on the things we can accomplish.

We can jettison that meaningless job, be fearless in the face of pandemics given that they come and go throughout history, and lower our stresses on financial concerns given they are transitory. What is then left is the freedom to spend time on things that do matter to us.

Defining what’s important is an individual thing. It need not be curing cancer or solving world peace – two of my favourites. It can be something as simple as making a most delicious cookie that your kids enjoy.

It is up to each of us to find those items that make us feel good and make a difference. Burkeman guides us to pursue a level of discomfort as we seek these goals.

I found this book profound and valuable as I enter the final stage of my life.

I continue to search for ways to fulfil my life. This book helps me to reflect and consider how to use my finite time.

Vladimir Kozlov, CEO and Founder of LightCounting Market Research

Intelligence is a fascinating topic. The artificial kind is making all the headlines but alien minds created by nature have yet to be explored.

One of the most bizarre among these is the distributed mind of the octopus. Other Minds: The Octopus, the Sea and the Deep Origins of Consciousness, by Peter Godfrey-Smith, is a perfect introduction to the subject.

The Overstory: A Novel, by Richard Powers takes the concept of alien minds to a new, more emotional level. It is a heavy read. The number of characters rivals that of War and Peace while the density matches the style of Dostoevsky. Yet, it is impossible not to finish the book, even if it takes several months.

It concerns the conflict of “alien minds”. The majority of the aliens are humans, cast from the distant fringes of our world. The trees emerge as a unifying force that keeps the book and the planet together. It is an unforgettable drama.

I have not cut a live tree since reading the book. I cannot stop thinking about just how shallow our understanding of the world is.

The intelligence created by nature is more puzzling than dark matter yet it is shuffled into the ‘Does-not-matter’ drawer of our alien minds.

Roy Rubenstein, Gazettabyte’s editor

Ten per cent of my contacts changed jobs in 2021, according to LinkedIn.

Of these, how many quit their careers after 32 years at one firm? And deliberately downgraded their salaries?

That is what Lucy Kellaway did. The celebrated Financial Times journalist quit her job to become a school teacher.

Kellaway is also a co-founder of Now Teach, a non-profit organisation that helps turn experienced workers in such professions as banking and the law into teachers.

In her book, Re-educated: How I Changed My Job, My Home and My Hair, Kellaway reflects on her career as a journalist and on her life. She notes how privileged she has been in the support she received that helped her correct for mistakes and fulfil her career; something that isn’t available to many of her students.

She also highlights the many challenges of teaching. In one chapter she describes a class and the exchanges with her students that capture this magnificently.

A book I reread after many years was Arthur Miller’s autobiography, Timebends: A Life.

In the mid-1980s on a trip to the UK to promote his book, Miller visited the Royal Exchange Theatre in Manchester. There, I got a signed copy of his book which I prize.

The book starts with his early years in New York, surrounded by eccentric Jewish relatives.

Miller also discusses the political atmosphere during the 1950s, resulting in his being summoned before the House Un-American Activities Committee. The first time I read this, that turbulent period seemed very much a part of history. This time, the reading felt less alien.

Miller is fascinating when explaining the origins of his plays. He also had an acute understanding of human nature, as you would expect of a playwright.

The book I most enjoyed in 2021 is The Power of Strangers: The Benefits of Connecting in a Suspicious World, by Joe Keohane.

The book explores talking to strangers and highlights a variety of people going about it in original ways.

Keohane describes his many interactions that include an immersive 3-day course on how to talk to strangers, held in London, and a train journey between Chicago and Los Angeles; the thinking being that, during a 42-hour trip, what else would you do but interact with strangers.

Keohane learns that, as he improves, there is something infectious about the skill: people start to strike up conversations with him.

The book conveys how interacting with strangers can be life-enriching and can dismantle deep-seated fears and preconceptions.

He describes an organisation that gets Republican and Democrat supporters to talk. At the end of one event, an attendee says: “We’re all relieved that we can actually talk to each other. And we can actually convince the other side to look at something a different way on some subjects.”

If reading novels can be viewed as broadening one’s experiences through the stories of others, then talking to strangers is the non-fiction equivalent.

I loved the book.

Intel sets a course for scalable optical input-output

- Intel is working with several universities to create building-block circuits to address its optical input-output (I/O) needs for the next decade-plus.

- By 2024 the company wants to demonstrate the technologies achieving 4 terabits-per-second (Tbps) over a fibre at 0.25 picojoules-per-bit (pJ/b).

Intel has teamed up with seven universities to address the optical I/O needs for several generations of upcoming products.

The initiative, dubbed the Intel Research Center for Integrated Photonics for Data Centre Interconnects, began six months ago and is a three-year project.

No new location is involved; rather, the research centre is virtual, with Intel funding the research. In setting up the centre, Intel aims to foster collaboration between the research groups.

Motivation

James Jaussi, senior principal engineer and director of the PHY Research Lab in Intel Labs, heads a research team that focuses on chip-to-chip communication involving electrical and optical interfaces.

“My team is primarily focussed on optical communications, taking that technology and bringing it close to high-value silicon,” says Jaussi.

Much of Jaussi’s 20 years at Intel has focussed on electrical I/O. During that time, the end of electrical interfaces has repeatedly been predicted. But copper’s demise has proved overly pessimistic, he says, given the advances made in packaging and printed circuit board (PCB) materials.

But now the limits of copper’s bandwidth and reach are evident and Intel’s research arm wants to ensure that when the transition to optical occurs, the technology has longevity.

“This initiative intends to prolong the [optical I/O] technology so that it has multiple generations of scalability,” says Jaussi. And by a generation, Jaussi means the 3-4 years it takes typically to double the bandwidth of an I/O specification.

Co-packaged optics and optical I/O

Jaussi distinguishes between co-packaged optics and optical I/O.

He describes co-packaged optics as surrounding a switch chip with optics. Given the importance of switch chips in the data centre, it is key to maintain compatibility with specifications, primarily Ethernet.

But that impacts the power consumption of co-packaged optics. “The power envelope you are going to target for co-packaged optics is not necessarily going to meet the needs of what we refer to as optical I/O,” says Jaussi.

Optical I/O involves bringing the optics closer to ICs such as CPUs and graphics processor units (GPUs). Here, the optical I/O need not be aligned with standards.

The aim is to take the core I/O off a CPU or GPU and replace it with optical I/O, says Jaussi.

With optical I/O, non-return-to-zero (NRZ) signalling can be used rather than 4-level pulse amplitude modulation (PAM-4). The data rates are slower using NRZ but multiple optical wavelengths can be used in parallel. “You can power-optimise more efficiently,” says Jaussi.

Ultimately, co-packaged optics and optical I/O will become “stitched together” in some way, he says.

Research directions

One of the research projects involves the work of Professor John Bowers and his team at the University of California, Santa Barbara, on the heterogeneous integration of next-generation lasers based on quantum-dot technology.

Intel’s silicon photonics transceiver products use hybrid silicon quantum well lasers from an earlier collaboration with Professor Bowers.

The research centre work is to enable scalability by using multi-wavelength designs as well as enhancing the laser’s temperature performance to above 100°C. This greater resilience to temperature helps the laser’s integration alongside high-performance silicon.

Another project, that of Professor Arka Majumdar at the University of Washington, is to develop non-volatile reconfigurable optical switching using silicon photonics.

“We view this as a core building block, a capability,” says Jaussi. The switching element will have a low optical loss and will require little energy for its control.

The switch being developed is not meant to be a system but an elemental building block, analogous to a transistor, Intel says, with the research exploring the materials needed to make such a device.

The work of Professor S.J. Ben Yoo at University of California, Davis, is another of the projects.

His team is developing a silicon photonics-based modulator and a photodetector technology to enable 40-terabit transceivers at 150fJ/bit and achieving 16Tb/s/mm I/O density.

“The intent is to show over a few fibres a massive amount of bandwidth,” says Jaussi.

Goals

Intel says each research group has its own research targets that will be tracked.

All the device developments will be needed to enable the building of something far more sophisticated in future, says Jaussi.

At Intel Labs’ day last year, the company spoke about achieving 1Tbps of I/O at 1pJ/b. The research centre’s goals are more ambitious: 4Tbps over a fibre at 0.25pJ/b in the coming three years.
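The two targets can be compared by converting energy per bit into power. The sketch below assumes the Intel Labs day figure is an energy of 1 picojoule per bit; the function and variable names are illustrative.

```python
def link_power_watts(rate_tbps: float, energy_pj_per_bit: float) -> float:
    """Link power: bits per second multiplied by joules per bit."""
    return (rate_tbps * 1e12) * (energy_pj_per_bit * 1e-12)


labs_day_target = link_power_watts(1.0, 1.0)        # assumption: 1pJ per bit
research_centre_goal = link_power_watts(4.0, 0.25)  # 4Tbps at 0.25pJ/b

print(f"Labs day target: {labs_day_target:.2f}W")
print(f"Research centre goal: {research_centre_goal:.2f}W")
```

Both work out to about 1W, so under this reading the research centre's goal amounts to carrying four times the bandwidth within the same power.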

There will be prototype demonstrations showing data transmissions over a fibre or even several fibres. “This will allow us to make that scalable not just for one but two, four, 10, 20, 100 fibres,” he says. “That is where that parallel scalability will come from.”

Intel says it will be years before this technology is used for products but the research goals are aggressive and will set the company’s optical I/O goals.

PCI-SIG releases the next PCI Express bus specification

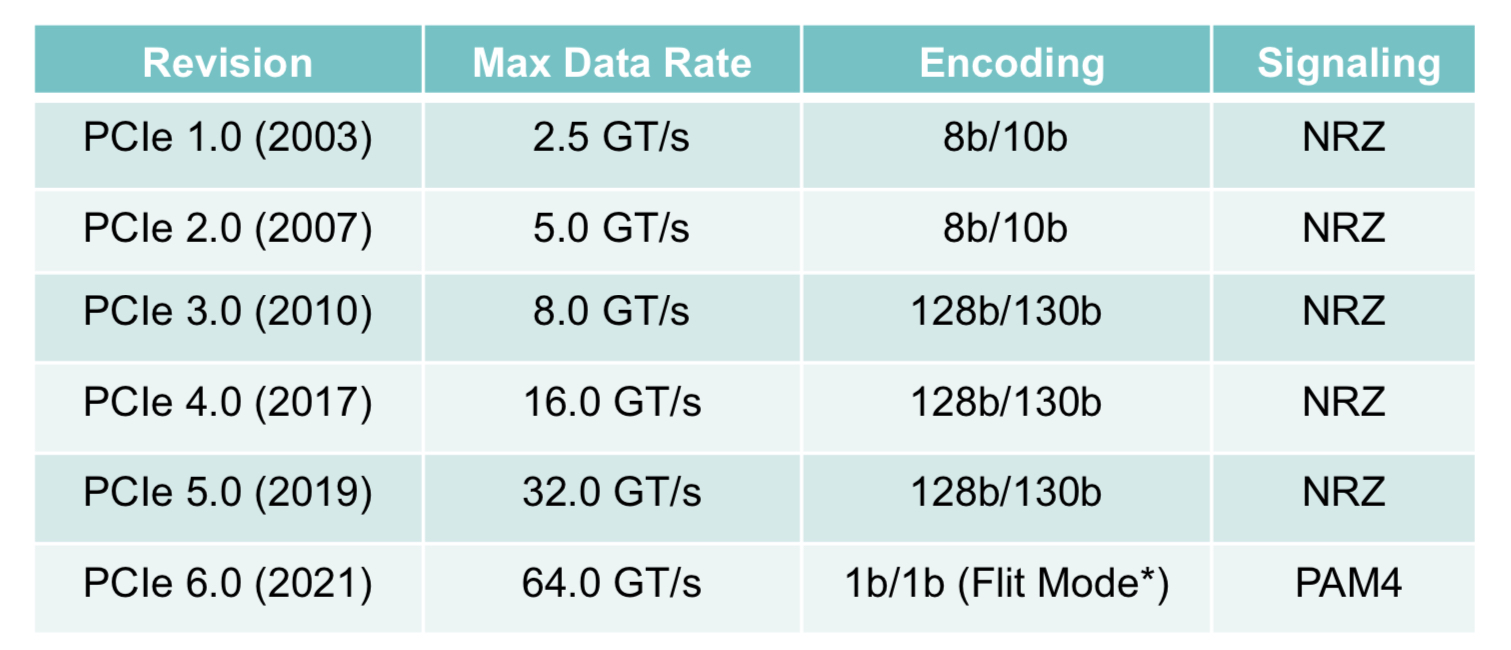

The Peripheral Component Interconnect Express (PCIe) 6.0 specification doubles the data rate to deliver 64 giga-transfers-per-second (GT/s) per lane.

For a 16-lane configuration, the resulting bidirectional data transfer capacity is 256 gigabytes-per-second (GBps).
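The 256GBps figure can be reproduced with simple arithmetic. The sketch below assumes each transfer carries one bit per lane and ignores FLIT and error-correction overhead, so it gives the raw rate; the function name is illustrative.

```python
def pcie_raw_bandwidth_gbytes(gt_per_s: float, lanes: int) -> tuple[float, float]:
    """Return (per-direction, bidirectional) raw bandwidth in gigabytes per second.

    Assumes one bit per transfer per lane and no protocol overhead.
    """
    per_direction = gt_per_s * lanes / 8   # bits to bytes
    return per_direction, 2 * per_direction


one_way, both_ways = pcie_raw_bandwidth_gbytes(64, 16)
print(f"x16 at 64GT/s: {one_way:.0f} GBps each way, {both_ways:.0f} GBps bidirectional")
```

The same helper gives half these values for PCIe 5.0 at 32GT/s.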

“We’ve doubled the I/O bandwidth in two and a half years, and the average pace is now under three years,” says Al Yanes, President of the Peripheral Component Interconnect – Special Interest Group (PCI-SIG).

The significance of the specification’s release is that PCI-SIG members can now plan their products.

Users of FPGA-based accelerators, for example, will know that in 12-18 months there will be motherboards running at such rates, says Yanes.

Applications

The PCIe bus is used widely for such applications as storage, processors, artificial intelligence (AI), the Internet of Things (IoT), mobile, and automotive.

In servers, PCIe has been adopted for storage and by general-purpose processors and specialist devices such as FPGAs, graphics processor units (GPUs) and AI hardware.

The CXL standard enables server disaggregation by interconnecting processors, accelerator devices, memory, and switching, with the protocol sitting on top of the PCIe physical layer. The NVM Express (NVMe) storage standard similarly uses PCIe.

“If you are on those platforms, you know you have a healthy roadmap; this technology has legs,” says Yanes.

A focus area for PCI-SIG is automotive, which accounts for the recent membership growth; the organisation now has 900 members. PCI-SIG has also created a new workgroup addressing automotive.

Yanes attributes the automotive industry’s interest in PCIe to the need for bandwidth and real-time analysis within cars. Advanced driver assistance systems, for example, use a variety of sensors and technologies such as AI.

PCIe 6.0

The PCIe bus uses a dual simplex scheme – serial transmissions in both directions – referred to as a lane. The bus can be configured in several lane configurations: x1, x2, x4, x8, x12, x16 and x32, although x2, x12 and x32 are rarely used.

PCIe 6.0’s 64GT/s per lane is double that of PCIe 5.0, which is already emerging in ICs and products.

IBM’s latest 7nm POWER10 16-core processor, for example, uses the PCIe 5.0 bus as part of its I/O, while the latest data processing units (DPUs) from Marvell (Octeon 10) and Nvidia (BlueField 3) also support PCIe 5.0.

To achieve the 64GT/s transfer rates, the PCIe bus has adopted 4-level pulse amplitude modulation (PAM-4) signalling. This requires forward error correction (FEC) to offset the bit error rates of PAM-4 while minimising the impact on latency. And low latency is key given the PCIe PHY layer is used by such protocols as CXL that carry coherency and memory traffic. (see IEEE Micro article.)

The latest specification also adopts flow control unit (FLIT) encoding. Here, fixed 256-byte packets are sent: 236 bytes of data and 20 bytes of cyclic redundancy check (CRC).

Using fixed-length packets simplifies the encoding, says Yanes. Since the PCIe 3.0 specification, 128b/130b encoding has been used for clock recovery and the aligning of data. Now with the fixed-sized packet of FLIT, no encoding bits are needed. “They know where the data starts and where it ends,” says Yanes.
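To put numbers on the two schemes, the sketch below computes the share of each that carries data, using the packet sizes given above. The two ratios are not a like-for-like comparison: the FLIT's 20 non-data bytes cover error protection, which 128b/130b line coding does not include. The constant names are illustrative.

```python
# FLIT sizes as quoted in the article.
FLIT_BYTES = 256
FLIT_DATA_BYTES = 236

# 128b/130b: every 128 payload bits travel in a 130-bit block.
BLOCK_PAYLOAD_BITS = 128
BLOCK_TOTAL_BITS = 130

flit_data_share = FLIT_DATA_BYTES / FLIT_BYTES
line_code_share = BLOCK_PAYLOAD_BITS / BLOCK_TOTAL_BITS

print(f"FLIT data share:      {flit_data_share:.1%}")
print(f"128b/130b data share: {line_code_share:.1%}")
```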

Silicon designed for PCIe 6.0 will also be able to use FLITs with earlier standard PCIe transfer speeds.

Yanes says power-saving modes have been added with the release. Both ends of a link can agree to make lanes inactive when they are not being used.

Status and developments

IP blocks for PCIe 6.0 already exist while demonstrations and technology validations will occur this year. First products using PCIe 6.0 will appear in 2023.

Yanes expects PCIe 6.0 to be used first in servers with accelerators used for AI and machine learning, and also where 800 Gigabit Ethernet will be needed.

PCI-SIG is also working to develop new cabling for PCIe 5.0 and PCIe 6.0 for sectors such as automotive. This will aid the technology’s adoption, he says.

Meanwhile, work has begun on PCIe 7.0.

“I would be ecstatic if we can double the data rate to 128GT/s in two and a half years,” says Yanes. “We will be investigating that in the next couple of months.”

One challenge with the PCIe standard is that it borrows the underlying technology from telecom and datacom. But the transfer rates it uses are higher than the equivalent rates used in telecom and datacom.

So, while PCIe 6.0 has adopted 64GT/s, the equivalent rate used in telecom is only 56Gbps. The same will apply if PCI-SIG chooses 128GT/s as the next data rate given that telecom uses 112Gbps.

Yanes notes, however, that telecom requires much greater reaches whereas PCIe runs on motherboards, albeit ones using advanced printed circuit board (PCB) materials.

Books read in 2021: Part 4

In Part IV, two more industry figures pick their reads.

Michael Hochberg, a silicon photonics expert and currently at a start-up in stealth mode, discusses classical Greek history, while Professor Laura Lechuga, a biosensor luminary, highlights Michael Lewis’s excellent book about the pandemic, among others.

Michael Hochberg, President of a stealth-mode start-up

One of the primary ways that I mis-spent my youth was by crawling through my father’s library of social science and history books. This activity generally occurred when I was supposed to be asleep, resting up for a full day of stark and abject boredom in school. This resulted in some perverse outcomes, like my tendency to fall asleep in class at an unusually young age.

It’s accepted practice for college students; certainly, many of the students in my classes during my time as an academic got in some excellent naps. I was always sad to see them leave the comfort of their warm beds to nap in a hard, wooden chair while I lectured; I feel like they would have slept better at home.

Of course, the true masters of napping were the faculty, for whom it was a key technique in committee meetings. But this sort of advanced napping is considerably less common in elementary and middle school, and I suspect that some of my teachers took it personally.

Perhaps the most compelling thing I read during those years was Thucydides’ History of the Peloponnesian War. It’s the history (arguably the first historical volume ever written) of the great conflict between Athens and Sparta. If you’ve never read Pericles’ Funeral Oration, I highly recommend it for anyone who has an interest in leadership; it’s quite possibly the greatest political speech ever given. And there’s a new-ish edition that includes necessary maps, references and explanations, which makes reading and understanding the context dramatically easier. This material makes the text accessible to people who aren’t experts and aren’t reading it as part of a course. It’s even out in paperback!

It’s a volume that I’ve returned to twice, because one of the things that amazes me every time I look at it is how little things have changed in the last 2,500 years. I found myself re-reading it this year and thinking about how much more I got out of it than I did ten years ago; the benefit of experience.

The motivations, actions, and behaviors of the people in Thucydides are instantly and intensely familiar. Given all the changes to technology, government, religion, our knowledge of the universe, access to information, communications, our ideas about ourselves, the triumphs of empiricism and the scientific method, et cetera, it’s amazing to see that the bones of how people behave, both as individuals and as groups, really haven’t changed.

People are still motivated by their desire for security, their interests, and their values (including that sometimes-forgotten motive: honour.) Despite the panoply of innovations, we seem to be basically the same. As a technologist, it’s a thought that gives me both pause and comfort.

Thucydides’ History is told primarily from an Athenian perspective. As I dug deeper, I encountered several books over the years that were truly fascinating, and that gave great insight into the leadership and motivations of the Athenians; I’ve developed a keen interest, starting with this reading, in the circumstances under which democratic regimes can emerge and thrive, both historically and in the present day.

Here are a couple of my favorite further readings on the history of Athens:

- Donald Kagan’s biography of Pericles (Pericles of Athens and the Birth of Democracy); Kagan’s other works are also fascinating reading, as are his lectures from his Yale University history course on the history of Ancient Greece, which are on the web.

- John Hale’s biography of Themistocles and his history of the Athenian navy, The Lords of the Sea.

Because this history of Athens is the story of the rise and fall of a great maritime power, it provides only a limited treatment of Sparta, the foremost territorial power of Ancient Greece.

So, what of the Spartans? The world remembers Leonidas and the 300 at Thermopylae. Students of history remember Plataea, and the later alliance between the Spartans and the Persian Empire directed against Athens. But the Spartans, as a people, remained enigmatic after reading Thucydides, at least to me. What motivated them to create their peculiar society? What were the pressures that shaped their thinking? How did they come to be what they were? Why did they make the decisions that they did?

As Thucydides observed: The Athenians built and left behind immense public works. Temples that still stand today. An extensive literature: comedies, tragedies, and philosophies. Art and sculpture. All the trappings of a commercial, vibrant, creative society. They get to explain themselves to us in their own words. But what of the Spartans, who left behind almost nothing of the sort? We remember their military achievements. But they left behind very little that would allow us to understand them.

To quote Thucydides directly, courtesy of Lapham’s Quarterly (possibly my favourite periodical):

“Suppose that the city of Sparta were to become deserted and that only the temples and foundations of buildings remained: I think that future generations would, as time passed, find it very difficult to believe that the place had really been as powerful as it was represented to be. Yet the Spartans occupy two-fifths of the Peloponnese and stand at the head not only of the whole Peloponnese itself but also of numerous allies beyond its frontiers. Since, however, the city is not regularly planned and contains no temples or monuments of great magnificence, but is simply a collection of villages, in the ancient Hellenic way, its appearance would not come up to expectation. If, on the other hand, the same thing were to happen to Athens, one would conjecture from what met the eye that the city had been twice as powerful as in fact it is.”

To address the mystery of the Spartans, Paul Rahe, of Hillsdale College, has written four volumes (thus far) on the history of Spartan strategic thought, and the fifth one will go to press soon.

- The Grand Strategy of Classical Sparta

- The Spartan Regime

- Sparta’s First Attic War

- Sparta’s Second Attic War

These volumes are among the finest books I’ve read. They make the strategic dilemmas and choices of the Spartans as clear as the historical record seems to allow, and where the record is silent, Rahe fills in the blanks remarkably well, with speculation informed by a nuanced understanding of the politics and practices of the day. These books are also utterly readable even to someone like me, who is most decidedly a non-expert in the field.

One of my key takeaways from reading Rahe’s work was the importance of understanding, in detail, the capabilities, motivations, aspirations, and commitments of adversaries, and of having a grand strategy that is simple to articulate, understand and implement.

Only then can a wide range of individuals and organizations coordinate their actions in service of such a grand strategy, which is generally what is required for success, in both business and warfare. The conflict between Athens and Sparta was between a maritime society and a territorial power, and this finds echoes in the current conflicts between the United States and the People’s Republic of China.

In that vein, I’ve been reading Elbridge Colby’s fascinating book (I’m about a third through) on the Strategy of Denial, which I recommend as a thought-provoking work to anyone with an interest in US-China relations. My next reading in this area is Thucydides on Strategy: Grand Strategies in the Peloponnesian War and their Relevance Today.

In recent years, with the revolution in machine learning, we’ve started to develop tools that can do super-human things in areas where humans previously had an absolute monopoly. These tools will allow us to do amazing things that we can barely imagine today, and they will have world-changing economic, military, and social impacts. It’s an incredibly exciting time to be a technologist and to have a chance to participate in this revolution.

Many people believe that these tools will produce fundamental changes in how individual humans behave, in how we organise ourselves into polities, and in how we strategise to enhance our security. Perhaps they’re right. But I don’t think so. The community of technologists have believed that our innovations will change human nature before, and we’ve been wrong before.

I believe that the same motivations that Thucydides articulated so well – Fear, Honor, and Interest – will continue to dominate the landscape. I expect that reading Thucydides will still give deep insight into human nature 500 years from now (assuming of course that anyone is still around to read his work). And 2,500 years of western history seem to suggest, at least to me, that new technology doesn’t fundamentally change human nature, and that to think otherwise is arrogance.

Professor Laura Lechuga, Group Leader NanoBiosensors and Bioanalytical Applications Group, Catalan Institute of Nanoscience and Nanotechnology (ICN2), CSIC, BIST and CIBER-BBN.

I used to read a lot but with our frantic way of living before the pandemic – travelling and working – it was hard to find the time. The pandemic has given me back this habit and I am enjoying it very much.

Here are my best readings of 2021:

The Premonition: A Pandemic Story, by Michael Lewis, is an excellent description of the chaotic US public health system and how fighting for political power has corrupted the organisations that should help society in any emergency. The result: the United States has been one of the worst countries in pandemic management. A must-read book.

Reina Roja, by Juan Gómez-Jurado, a renowned Spanish author. This is a thriller about the world’s smartest woman on the hunt for a serial killer. I especially like how the protagonist interprets, with a female scientific mind, the actions of the murderer and the staging of the crimes. The book grabs your attention from the first page and continues with a frenetic pace that ends up taking your breath away. I’m not going to disclose the identity of the serial killer …

Acoso by Angela Bernardo is the first book that reveals sexual harassment in Spanish science. The book includes testimonies, interviews with specialists in sexual harassment and compiles the scarce data available in Spain regarding this problem.

The author unravels how the structure of Spanish universities and research centres makes it very difficult for female scientists to report and find support when they are victims of sexual harassment or harassment due to gender discrimination.

The Man Who Counted: A Collection of Mathematical Adventures, by Malba Tahan (a pen name), is a well-known classic maths book by the Brazilian writer Júlio César de Mello e Souza. The book describes curious word problems, mathematics puzzles and curiosities. The protagonist is a thirteenth-century Persian scholar of the Islamic Empire. A must-read book!

My last recommendation is Nosotros, los octogésimos (una novela de Mundochenta), by Jesús Zamora Bonilla. This is a Spanish science fiction book describing “Mundochenta”, a planet 140,000 light years from Earth and its inhabitants, the “eightieths”.

A police and scientific thriller, the book takes place in a very distant future but in a society not yet as technologically advanced as ours. “Mundochenta” is dominated by the Empire and the Church, which face the challenge of assimilating the increasing scientific advances.

The book is a parody of how power and religion have always tried to stop scientific advances to maintain their dominance.

Books read in 2021: Part 3

In Part III, two more industry figures pick their reads of the year: Dana Cooperson of Blue Heliotrope Research and ADVA’s Gareth Spence.

My reading traverses different ground from that of other invited analysts to this yearly section. In addition, my ‘avoid new releases’ approach means my picks are not from 2021. And before jumping straight into recommendations, I’ll preface my comments with an homage to communal aspects of reading that have meant so much to me, especially during these two Covid years.

My two book groups managed to meet steadily during the pandemic, sometimes while sitting outside in the snow, covered with blankets and sipping hot tea.

Beyond ensuring a steady stream of titles to read and discuss, the ladies in my book clubs have supported and encouraged each other through births and deaths and all the highs and lows in between. I tried a third, online alumni book club, this year, but meh: what it provided was not even close to the tight-knit book club experience I treasure.

I have also appreciated the annual August in-person ad hoc book club and reading recommendations sessions that grew out of my college experience, and which have been going strong for 40 years now. My daughters and I also exchanged books and discussed them this last year.

The books I most appreciated of the 20 or so I read in 2021 were those that offered interesting, deep, and well-written windows into people, places, cultures, and identities I didn’t know I needed to know more about. Here are my top picks:

My favourite 2021 read was the 2019 Booker Prize winner Girl, Woman, Other, by Bernardine Evaristo. This funny and touching novel spans space and time to weave the stories of twelve mostly female, mostly Black, and mostly British characters and their ancestors. The characters’ narratives intersect in surprising ways that don’t feel at all artificial or manipulative. The book’s unique style and structure add to the storytelling.

A Tree Grows in Brooklyn, by Betty Smith, is a fantastic autobiographical novel published in 1943. It details the hard yet full life of Frances Nolan, who grows up impoverished in Williamsburg to first-generation parents from immigrant families (one Irish, one Austrian) in the early 20th century. The descriptions are so vivid, and the main character so tenacious, determined, and smart, that the book is positive and affirming despite its often tough subject matter (alcoholism, abuse, poverty).

My daughter, who had taken an Asian-American literature class in college, suggested The Sympathizer, a 2016 Pulitzer winner by Viet Thanh Nguyen. Like “A Tree Grows in Brooklyn,” the subject matter (the fall of Saigon, spying, torture, betrayal, being a stranger in a strange land) is not a simple read. But the characters are again so vivid, the narrative so darkly comic and satirical, and the historic subject matter so relevant to today that I found the book riveting. (Note: Nguyen published a sequel in 2021 that I’ve yet to read.)

American Dirt, by Jeanine Cummins, tells the harrowing tale of a group of desperate migrants trying to complete the dangerous trip from Latin America to the US. As I started reading it, a friend who hadn’t read it noted the controversies swirling around the author (she’s not Latinx enough for some) and the plot (lambasted by some as ‘immigrant porn’). Whatever: I read the book and loved it. This gripping novel made the plight of desperate migrants more real to me than any news story had done.

Other book recommendations:

• The Vanishing Half: A Novel, by Brit Bennett, follows two African American sisters from the US South who make very different choices (one passes as white) and how their futures and families are affected by their choices.

• Afterlife, by Julia Alvarez, concerns a retired English professor who is suddenly widowed and trying to figure out how to live her life and deal with her three sisters.

• The Great Believers, by Rebecca Makkai, is about the AIDS crisis in Chicago. It bounces between 1985 and 2015 as it follows a group of gay men and their born and made families. I found the plot (who lives, who dies) a tad manipulative, but the book shined a light on a pandemic and its victims that we should never forget.

• The Miniaturist, by Jessie Burton, which is set in 17th century Amsterdam, is an atmospheric, magical, and suspenseful novel that made the era of the Dutch East India Company come alive for me. You did not want to be poor, female, Black, or gay in 1686 in the Netherlands, so this book is dark.

• Midnight in the Garden of Good and Evil: A Savannah Story, a non-fiction novel by journalist John Berendt, describes a 1980s murder and trial in Savannah, Georgia. Readers will not easily forget the town’s many characters, especially The Lady Chablis.

It seems fitting to end my 2021 recommendations with a recent read, Oscar Wilde’s The Picture of Dorian Gray, about a young man whose moral decay and debauchery are recorded by his painted portrait even while his body retains its unsullied youth and beauty.

Wilde sure had a way with words: his descriptions of 19th century London high society are as sharp as any knife. For example, Lord Fermor was “a genial if somewhat rough-mannered old bachelor, whom the outside world called selfish because it derived no particular benefit from him, but who was considered generous by Society as he fed the people who amused him.”

I’ll close with Wilde’s musing on art from the last epigram in the novel’s preface: “We can forgive a man for making a useful thing as long as he does not admire it. The only excuse for making a useless thing is that one admires it intensely. All art is quite useless.”

Gareth Spence, Senior Director of Digital Marketing and Public Relations at ADVA.

It’s been a grey and wet holiday season in the UK. Ideal conditions for hunkering down in front of the fire and building a reading list for 2022. If you’re doing the same, here are two suggestions for your book pile.

Both recommendations can loosely be filed under the topic of the American Dream. The first one stretches the rules as it’s only available as an audiobook. It’s Miracle and Wonder: Conversations with Paul Simon, by Malcolm Gladwell and Bruce Headlam.

I was reluctant to listen to this book. I’ve grown tired of Gladwell’s writing style and his tendency to reduce human nature to a digestible catchphrase. Still, the opportunity to hear Simon talk about his career proved too compelling.

As a child, I was an avid listener of Simon. His work shaped my early notions of America and the American Dream. In the book, Simon talks extensively about his anthemic tunes. Where the ideas came from, how the songs were shaped and how his relationship with his music has changed during his long career.

It’s fascinating to hear Simon talk openly about his past. If you have any interest in his songs or the musical process, you’ll enjoy this book. Just try your best to overcome Gladwell’s gushing praise of Simon. The man could rob a bank and Gladwell would find artistic merit in it.

My second recommendation is Nomadland: Surviving America in the Twenty-First Century, by Jessica Bruder. This book is a powerful exploration of the flipside of the American Dream. It follows the lives of a growing community of people who have been cast aside by society and forced to find ways to live outside mainstream America.

Many of the people detailed are over 60 and have lost their homes and livelihoods. They now live in recreational vehicles, vans and even cars and spend their time in laborious, menial jobs. When they’re not working, they’re travelling the country, finding ways to embrace freedoms they never had before.

It’s sobering to read Bruder’s book as she spends over a year exploring this nomadic community. It’s hard to imagine that this group won’t continue to expand as life in America becomes ever more challenging.

But as difficult as it is to read, there’s also hope. The people show resourcefulness and resiliency in how they discover a new way to live and rediscover their country.

Compute vendors set to drive optical I/O innovation

Part 2: Data centre and high-performance computing trends

Professor Vladimir Stojanovic has an engaging mix of roles.

When he is not a professor of electrical engineering and computer science at the University of California, Berkeley, he is the chief architect at optical interconnect start-up, Ayar Labs.

Until recently Stojanovic spent four days each week at Ayar Labs. But last year, more of his week was spent at Berkeley.

Stojanovic is a co-author of a 2015 Nature paper that detailed a monolithic electronic-photonics technology. The paper described a technological first: how a RISC-V processor communicated with the outside world using optical rather than electronic interfaces.

It is this technology that led to the founding of Ayar Labs.

Research focus

“We [the paper’s co-authors] always thought we would use this technology in a much broader sense than just optical I/O [input-output],” says Stojanovic.

This is now Stojanovic’s focus as he investigates applications such as sensing and quantum computing. “All sorts of areas where you can use the same technology – the same photonic devices, the same circuits – arranged in different configurations to achieve different goals,” says Stojanovic.

Stojanovic is also looking at longer-term optical interconnect architectures beyond point-to-point links.

Ayar Labs’ chiplet technology provides optical I/O when co-packaged with chips such as an Ethernet switch or an “XPU” – an IC such as a CPU or a GPU (graphics processing unit). The optical I/O can be used to link sockets, each containing an XPU, or even racks of sockets, to form ever-larger compute nodes to achieve “scale-out”.

But Stojanovic is looking beyond that, including optical switching, so that tens of thousands or even hundreds of thousands of nodes can be connected while still maintaining low latency to boost certain computational workloads.

This, he says, will require not just different optical link technologies but also figuring out how applications can use the software protocol stack to manage these connections. “That is also part of my research,” he says.

Optical I/O

Optical I/O has now become a core industry focus given the challenge of meeting the data needs of the latest chip designs. “The more compute you put into silicon, the more data it needs,” says Stojanovic.

Within the packaged chip, there is efficient, dense, high-bandwidth and low-energy connectivity. But outside the package, there is a very sharp drop in performance, and outside the chassis, the performance hit is even greater.

Optical I/O promises a way to exploit that silicon bandwidth to the full, without dropping the data rate anywhere in a system, whether across a shelf or between racks.

This opens the way to building more advanced computing systems, whose performance is already needed today.

Just five years ago, says Stojanovic, artificial intelligence (AI) and machine learning were still in their infancy and so were the associated massively parallel workloads that required all-to-all communications.

Fast forward to today, such requirements are now pervasive in high-performance computing and cloud-based machine-learning systems. “These are workloads that require this strong scaling past the socket,” says Stojanovic.

He cites natural language processing, where the memory required has grown 1,000x within 18 months: from hosting a billion to a trillion parameters.

“AI is going through these phases: computer vision was hot, now it’s recommender models and natural language processing,” says Stojanovic. “Each generation of application is two to three orders of magnitude more complex than the previous one.”

Such computational requirements will only be met using massively parallel systems.

“You can’t develop the capability of a single node fast enough, cramming more transistors and using high-bandwidth memory,” he says. High-bandwidth memory (HBM) refers to stacked memory die that meet the needs of advanced devices such as GPUs.

Co-packaged optics

Yet, if you look at the headlines over the last year, it appears that it is business as usual.

For example, there has been a Multi Source Agreement (MSA) announcement for new 1.6-terabit pluggable optics. And while co-packaged optics for Ethernet switch chips continues to advance, it remains a challenging technology; Microsoft has said it will only be late 2023 when it starts using co-packaged optics in its data centres.

Stojanovic stresses there is no inconsistency here: it comes down to what kind of bandwidth barrier is being solved and for what kind of application.

In the data centre, it is clear where the memory fabric ends and where the networking – implemented using pluggable optics – starts. That said, this boundary is blurring: there is a need for transactions between many sockets and their shared memory. He cites Nvidia’s NVLink and AMD’s Infinity Fabric links as examples.

“These fabrics have very different bandwidth densities and latency needs than the traditional networks of Infiniband and Ethernet,” says Stojanovic. “That is where you look at what physical link hardware answers the bottleneck for each of these areas.”

Co-packaged optics is focussed on continuing the scaling of Ethernet switch chips. It is a more scalable solution than pluggables and even on-board optics because it eliminates long copper traces that need to be electrically driven. That electrical interface has to escape the switch package, and that gives rise to that package-bottleneck problem, he says.

There will be applications where pluggables and on-board optics will continue to be used. But they will still need power-consuming retimer chips and they won’t enable architectures where a chip can talk to any other chip as if they were sharing the same package.

“You can view this as several different generations, each trying to address something but the ultimate answer is optical I/O,” says Stojanovic.

How optical connectivity is used also depends on the application, and it is this diversity of workloads that is challenging the best of the system architects.

Application diversity

Stojanovic cites one machine learning approach for natural language processing that Google uses that scales across many compute nodes, referred to as the ‘mixture of experts’ (MoE) technique.

A processing pipeline is replicated across machines, each performing part of the learning. For the algorithm to work in parallel, each must exchange its data set – its learning – with every other processing pipeline, a stage referred to as all-to-all dispatch and combine.

“As you can imagine, all-to-all communications is very expensive,” says Stojanovic. “There is a lot of data from these complex, very large problems.”

Not surprisingly, as the number of parallel nodes used grows, a greater proportion of the overall time is spent exchanging the data.

Using 1,000 AI processors running 2,000 experiments, a third of the time is required for data exchange. Scaling the hardware to 3,000 to 4,000 AI processors and communications dominate the runtime.

This, says Stojanovic, is a very interesting problem to have: it’s an example where adding more compute simply does not help.

“It is always good to have problems like this,” he says. “You have to look at how you can introduce some new technology that will be able to resolve this to enable further scaling, to 10,000 or 100,000 machines.”
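The scaling trend described above can be illustrated with a toy model. The Python sketch below is an assumption-laden illustration, not a measurement from Stojanovic or Google: it assumes compute time per step shrinks in proportion to the number of processors while the all-to-all exchange time per step stays constant, and it is calibrated only to the one figure quoted in the article (a third of the time spent on data exchange at 1,000 processors). The function name and parameters are hypothetical.

```python
def comm_fraction(n_procs, ref_procs=1000, ref_fraction=1 / 3):
    """Share of runtime spent exchanging data in a toy strong-scaling model.

    Assumptions (not from the article): compute time scales as 1/n_procs
    and all-to-all exchange time per step is constant. The model is
    calibrated so that ref_fraction of the time goes to data exchange
    at ref_procs processors.
    """
    comm = ref_fraction                      # constant exchange time (normalised)
    compute_at_ref = 1.0 - ref_fraction      # compute time at the reference size
    compute = compute_at_ref * ref_procs / n_procs
    return comm / (comm + compute)


if __name__ == "__main__":
    for n in (1000, 2000, 3000, 4000):
        print(f"{n} processors: {comm_fraction(n):.0%} of time spent on data exchange")
```

Under these assumptions the exchange share passes one half at about 2,000 processors and reaches roughly two thirds at 4,000, which is in line with the article's statement that communications dominate at that scale. A real system would not follow such a simple curve, since exchange time itself depends on the network and the message sizes.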

He says such examples highlight how optical engineers must also have an understanding of systems and their workloads and not just focus on ASIC specifications such as bandwidth density, latency and energy.

Because of the diverse workloads, what is needed is a mixture of circuit switching and packet switching interconnect.

Stojanovic says high-radix optical switching can connect up to a thousand nodes and, scaling to two hops, up to a million nodes with sub-microsecond latency. This suits streamed traffic.

But an abundance of I/O bandwidth is also needed to attach to other types of packet switch fabrics. “So that you can also handle cache-line size messages,” says Stojanovic.

These are 64 bytes long and are found with processing tasks such as Graph AI where data searches are required, not just locally but across the whole memory space. Here, transmissions are shorter and involve more random addressing and this is where point-to-point optical I/O plays a role.
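The node counts quoted above for high-radix optical switching follow from simple arithmetic. The Python sketch below assumes an idealised fabric in which every port of a switch leads either to a node or to another switch of the same radix, so the reach grows as the radix raised to the number of hops. The radix of 1,000 is taken from the "up to a thousand nodes" figure; real topologies reserve some ports for uplinks and so reach fewer nodes. The function name is hypothetical.

```python
def reachable_nodes(radix, hops):
    """Upper bound on nodes reachable through `hops` switch stages.

    Idealised assumption: each stage fans out across all `radix` ports,
    with no ports reserved for uplinks or redundancy.
    """
    if radix < 1 or hops < 1:
        raise ValueError("radix and hops must be positive integers")
    return radix ** hops


if __name__ == "__main__":
    print(reachable_nodes(1000, 1))  # one hop: a thousand nodes
    print(reachable_nodes(1000, 2))  # two hops: a million nodes
```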

“It is an art to architect a machine,” says Stojanovic.

Disaggregation

Another data centre trend is server disaggregation, which promises important advantages.

The only memory that meets the GPU requirements is HBM. But it is becoming difficult to realise taller and taller HBM stacks. Stojanovic cites as an example how Nvidia came out with its A100 GPU with 40GB of HBM, which was followed a year later by an 80GB A100 version.

Some customers had to do a complete overhaul of their systems to upgrade to the newer A100 yet welcomed the doubling of memory because of the exponential growth in AI workloads.

By disaggregating a design – decoupling the compute and memory into separate pools – memory can be upgraded independently of the computing. In turn, pooling memory means multiple devices can share the memory and it avoids ‘stranded memory’ where a particular CPU is not using all its private memory. Having a lot of idle memory in a data centre is costly.
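The 'stranded memory' argument can be made concrete with a small sketch. The capacities and demands in the Python below are invented purely for illustration and the function names are hypothetical. It compares four devices that can each use only their own private memory with the same total capacity offered as a shared pool.

```python
def stranded_memory(private_gb, demand_gb):
    """Idle private memory (GB) when each device can use only its own memory."""
    return sum(max(cap - need, 0) for cap, need in zip(private_gb, demand_gb))


def unmet_demand(private_gb, demand_gb):
    """Demand (GB) that cannot be served from each device's private memory."""
    return sum(max(need - cap, 0) for cap, need in zip(private_gb, demand_gb))


def pooled_spare(private_gb, demand_gb):
    """Spare capacity (GB) if the same total memory is shared as one pool."""
    return sum(private_gb) - sum(demand_gb)


if __name__ == "__main__":
    # Hypothetical figures: four devices with 256GB each and uneven demand.
    private = [256, 256, 256, 256]
    demand = [64, 400, 128, 300]

    print("Stranded with private memory:", stranded_memory(private, demand), "GB")
    print("Unmet demand with private memory:", unmet_demand(private, demand), "GB")
    print("Spare capacity when pooled:", pooled_spare(private, demand), "GB")
```

With these made-up numbers, private memory leaves 320GB idle on two devices while the other two fall 188GB short. Pooled, the same 1,024GB covers all the demand with 132GB to spare.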

If the I/O to the pooled memory can be made fast enough, it promises to allow GPUs and CPUs to access common DDR memory.

“This pooling, with the appropriate memory controller design, equalises the playing field of GPUs and CPUs being able to access jointly this resource,” says Stojanovic. “That allows you to provide way more capacity – several orders more capacity of memory – to the GPUs but still be within a DRAM read access time.”

Such access time is 50-60ns overall from the DRAM banks and through an optical I/O. The pooling also means that the CPUs no longer have stranded memory.

“Now something that is physically remote can be logically close to the application,” says Stojanovic.

Challenges

For optical I/O to enable such system advances, what is needed is an ecosystem of companies. Adding an optical chiplet alongside an ASIC is not the issue; chiplets are already used by the chip industry. Instead, the ecosystem is needed to address such practical matters as attaching fibres and producing the lasers needed. This requires collaboration among companies across the optical industry.

“That is why the CW-WDM MSA is so important,” says Stojanovic. The MSA defines the wavelength grids for parallel optical channels and is an example of what is needed to launch an ecosystem and enable what system integrators and ultimately the hyperscalers want to do.

Systems and networking

Stojanovic concludes by highlighting an important distinction.

The XPUs have their own design cycles and, with each generation, new features and interfaces are introduced. “These are the hearts of every platform,” says Stojanovic. Optical I/O needs to be aligned with these devices.

The same applies to switch chips that have their own development cycles. “Synchronising these and working across the ecosystem to be able to find these proper insertion points is key,” he says.

But this also implies that the attention given to the interconnects used within a system (or between several systems, to create a larger node) will be different to that given to the data centre network overall.

“The data centre network has its own bandwidth pace and needs, and co-packaged optics is a solution for that,” says Stojanovic. “But I think a lot more connections get made, and the rules of the game are different, within the node.”

Companies will start building very different machines to differentiate themselves and meet the huge scaling demands of applications.

“There is a lot of motivation from computing companies and accelerator companies to create node platforms, and they are freer to innovate and more quickly adopt new technology than in the broader data centre network environment,” he says.

When will this become evident? In the coming two years, says Stojanovic.