From 8-bit micros to modelling the brain

Part 1: An interview with computer scientist, Professor Steve Furber

Steve Furber is renowned for architecting the 32-bit reduced instruction set computer (RISC) processor from Acorn Computers, which became the founding architecture for Arm.

Arm processors have played a recurring role in Furber’s career. He and his team developed a clockless – asynchronous – version of the Arm, while a specialist Arm design has been the centrepiece building block for a project to develop a massively-parallel neural network computer.

Origins

I arrive at St Pancras International station early enough to have a coffee in the redeveloped St Pancras Renaissance London Hotel, the architecturally striking building dating back to the 19th century that is part of the station.

The train arrives on time at East Midlands Parkway, close to Nottingham, where Professor Steve Furber greets me and takes me to his home.

He apologises for the boxes, having recently moved to be closer to family.

We settle in the living room, and I’m served a welcome cup of tea. I tell Professor Furber that it has been 13 years since I last interviewed him.

Arm architecture

Furber was a key designer at Acorn Computers, which developed the BBC Microcomputer, an early personal computer that spawned a generation of programmers.

The BBC Micro used a commercially available 8-bit microprocessor, but in 1983-84 Acorn’s team, led by Furber, developed a 32-bit RISC architecture.

The decision was bold and had far-reaching consequences: the Acorn RISC Machine, or ARM1, would become the founding processor architecture of Arm.

Nearly 40 years on, firms have shipped over 250 billion Arm-based chips.

Cambridge

Furber’s interest in electronics began with his love of radio-controlled aircraft.

He wasn’t very good at it, and his Physics Master at Manchester Grammar School helped him.

Furber always took a bag with him when flying his model plane, as he often returned with his plane in pieces; he refers to his aircraft as ‘radio-affected’ rather than radio-controlled.

Furber was gifted at maths and went to Cambridge, where he undertook the undergraduate Mathematical Tripos, followed by the Maths Part III. Decades later, the University of Cambridge recognised Maths Part III as equivalent to a Master’s.

“It [choosing to read maths] was very much an exploration,” says Furber. “My career decisions have all been opportunistic rather than long-term planned.”

At Cambridge, he was influenced by the lectures of British mathematician James Lighthill on biofluid dynamics. This led to Furber’s PhD topic, looking at the flight of different animals and insects to see if novel flight motions could benefit jet-engine design.

He continued his love of flight as a student by joining a glider club, but his experience was mixed. When he heard of a fledgling student society building computers, he wondered if he might enjoy using computers for simulated flight rather than actual flying.

“I was one of the first [students] that started building computers,” says Furber. “And those computers then started getting used in my research.”

The first microprocessor he used was the 8-bit Signetics 2650.

Acorn Computers

Furber’s involvement in the Cambridge computer society brought him to the attention of Chris Curry and Hermann Hauser, co-founders of Acorn Computers, a pioneering UK desktop computer company.

Hauser interviewed and recruited Furber in 1977. Furber joined Acorn full-time four years later after completing his research fellowship.

Hauser, who co-founded venture capital firm Amadeus Capital Partners, said Furber was among the smartest people he had met. And having worked in Cambridge, Hauser said he had met a few.

During design meetings, Furber would come out with outstandingly brilliant solutions to complex problems, said Hauser, who led the R&D department at Acorn Computers.

BBC Micro and the ARM1

The BBC’s charter is to educate the public, and it planned to broadcast programmes highlighting microprocessors.

The UK broadcaster wanted to educate in detail about what microprocessors might do and was looking for a computer to provide users with a hands-on perspective via TV programmes.

When the BBC spoke to Acorn, it estimated it would need 12,000 machines. Public demand was such that 1.5 million Acorn units were sold.

The computer’s success led Acorn to consider its next step. Acorn had already added a second processor to the BBC Micro and had expanded its computer portfolio, including the Acorn Cambridge workstation. But by then, microprocessors were moving from 8-bit to 16-bit.

Acorn’s R&D group lab-tested leading 16-bit processors but favoured none.

One issue was that the processors would not be interruptible for relatively long periods – when writing to disk storage, for example – yet the BBC Micro used processor interrupts heavily.

A second factor was that memory chips accounted for much of the computer’s cost.

“The computer’s performance was defined by how much memory bandwidth the processor could access, and those 16-bit processors couldn’t use the available bandwidth; they were slower than the memory,” says Furber. “And that struck us as just wrong.”

While Furber and colleagues were undecided about how to proceed, they began reading academic papers on RISC processors, CPUs designed on principles different to mainstream 16-bit processors.

“To us, designing the processor was a bit of a black art,” says Furber. “So the idea that there was this different approach, which made the job much simpler, resonated with us.”

Hauser was very keen to do ambitious things, says Furber, so when Acorn colleague Sophie Wilson started discussing designing a RISC instruction set, they started work, but solely as an exploration.

Furber would turn Wilson’s processor instructions and architecture designs into microarchitecture.

“It was sketching an architecture on a piece of paper, going through the instructions that Sophie had specified, and colouring it in for what would happen in each phase,” says Furber.

The design was scrapped and started again each time something didn’t work or needed a change.

“In this sort of way, that is how the ARM architecture emerged,” says Furber.

It took 18 months for the first RISC silicon to arrive and another two years to get the remaining three chips that made up Acorn’s Archimedes computer.

The RISC chip worked well, but by then, the IBM PC had emerged as the business computer of choice, confining Acorn to the educational market. This limited Acorn’s growth, making it difficult for the company to keep up technologically.

Furber was looking at business plans to move the Arm activity into a separate company.

“None of the numbers worked,” he says. “If it were going to be a royalty business, you’d have to sell millions of them, and nobody could imagine selling such numbers.”

During this time, a colleague told him how the University of Manchester was looking for an engineering professor. Furber applied and got the position.

Arm was spun out in November 1990, but Furber had become an academic by then.

Asynchronous logic

Unlike most UK computing departments, Manchester’s originated in building machines. Freddie Williams and Tom Kilburn built the first stored-program computer in 1948. Kilburn went on to set up the department.

“The department grew out of engineering; most computing departments grew out of Maths,” says Furber.

Furber picked asynchronous chip design as his first topic for research, motivated by a desire to improve energy efficiency. “It was mainly exploring a different way to design chips and seeing where it went,” says Furber.

Asynchronous or self-timed circuits use energy only when there’s something useful to do. In contrast, clocked circuits burn energy all the time unless they turn their clocks off, a technique that is now increasingly used.

Asynchronous chips also have significant advantages in terms of electromagnetic interference.

“What a clock does on the chip is almost as bad as you can get when it comes to generating electrical interference, locking everything to a particular frequency, and synchronising all the current pulses is exactly that,” says Furber.

The result was the Amulet processor series, asynchronous versions of the Arm, which kept Furber and his team occupied during the 1990s and the early 2000s.

In the late 1990s, Arm moved from making hard-core processors to synthesised ones. The issue was that the electronic design automation (EDA) tools did not synthesise asynchronous designs well.

While Furber and his team learnt how to build chips – the Amulet3 processor was a complete asynchronous system-on-chip – the problem shifted to automating the design process. Even now, asynchronous design EDA tools are lagging, he says.

In the early 2000s, Furber’s interest turned to neuromorphic computing.

The resulting SpiNNaker chip, the programmable building block of Furber’s massively parallel neural network, uses asynchronous techniques, as does Intel’s Loihi neuromorphic processor.

“There’s always been a synergy between neuromorphic and asynchronous,” says Furber.

Implementing a massive neural network using specialised hardware has been Furber’s main interest for the last 20 years, the subject of the second part of the interview.


Further Information:

The Everything Blueprint: The Microchip Design That Changed The World, by James Ashton.

Modelling the Human Brain with specialised CPUs

Part 2: University of Manchester’s Professor Steve Furber discusses the design considerations for developing hardware to mimic the workings of the human brain.

The designed hardware, the Arm-based Spiking Neural Network Architecture (SpiNNaker) chip, is being used to understand the workings of the brain and to implement artificial intelligence (AI) in industrial applications.

Steve Furber has spent his career researching computing systems but his interests have taken him on a path different to the mainstream.

As principal designer at Acorn Computers, he developed a reduced instruction set computing (RISC) processor architecture when microprocessors used a complex instruction set.

The RISC design became the foundational architecture for the processor design company Arm.

As an academic, Furber explored asynchronous logic when the digital logic of commercial chips was all clock-driven.

He then took a turn towards AI during a period when AI research was in the doldrums.

Furber had experienced the rapid progress in microprocessor architectures, yet they could not do things that humans found easy. He became fascinated with the fundamental differences between computer systems and biological brains.

The result was a shift to neuromorphic computing – developing hardware inspired by neurons and synapses found in biological brains.

The neural network work led to the Arm-based SpiNNaker chip and the University of Manchester’s massively parallel computer that uses one million processor cores.

Now, a second-generation SpiNNaker exists, a collaboration between the University of Manchester and the University of Technology Dresden. But it is Germany, rather than the UK, that is exploiting the technology for its industry.

Associative memory

Furber’s interest in neural networks started with his research work on inexact associative memory.

Traditional memory returns a stored value when the address of a specific location in memory is presented to the chip. In contrast, associative memory – also known as content addressable memory – searches all of its store, returning data only when there is an exact match. Associative memory is used for on-chip memory stores for high-speed processors, for example.

Each entry in the associative memory effectively maps to a point in a higher dimensional space, explains Furber: “If you’re on that point, you get an output, and if you’re not on that point, you don’t.”

The idea of inexact associative memory is to soften this exact matching by widening the region that produces an output, from a single point to a space around it.

“If you have many of these points in space that you are sensitive to, then what you want to do is effectively increase the space that gives you an output without overlapping too much,” says Furber. “This is exactly what a neural network looks for.”
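
The difference between exact and inexact matching can be sketched in a few lines of Python. This is an illustrative toy rather than Furber's design: the class name, the 8-bit keys and the use of Hamming distance as the "radius" are all assumptions made for the example.

```python
from typing import Optional


def hamming(a: int, b: int) -> int:
    """Number of bit positions in which two keys differ."""
    return bin(a ^ b).count("1")


class AssociativeMemory:
    """Toy content-addressable store keyed by bit patterns (hypothetical)."""

    def __init__(self, radius: int = 0) -> None:
        # radius 0 reproduces exact matching; a larger radius softens it.
        self.radius = radius
        self.entries: list[tuple[int, str]] = []

    def store(self, key: int, value: str) -> None:
        self.entries.append((key, value))

    def lookup(self, probe: int) -> Optional[str]:
        # Search every entry and keep the closest one inside the radius.
        best: Optional[tuple[int, str]] = None
        for key, value in self.entries:
            distance = hamming(key, probe)
            if distance <= self.radius and (best is None or distance < best[0]):
                best = (distance, value)
        return None if best is None else best[1]


exact = AssociativeMemory(radius=0)
inexact = AssociativeMemory(radius=1)
for memory in (exact, inexact):
    memory.store(0b10110010, "A")
    memory.store(0b01001101, "B")

noisy_probe = 0b10110011  # one bit away from the key stored for "A"
print(exact.lookup(noisy_probe))    # None
print(inexact.lookup(noisy_probe))  # A
```

Keeping the radius small relative to the distance between stored keys is what stops the regions from overlapping too much.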

Biological neural networks

Neurons and synapses are the building blocks making up a biological neural network. A neuron sends electrical signals to a network of such cells, while the synapse acts as a gateway enabling one neuron to talk to another.

When Furber looked at biological neural networks to model them in hardware, he realized that the neural network models kept changing as the understanding of their workings deepened.

So after investigating hardware designs to model biological neural networks, he decided to make the engines software programmable. Twenty years on, the decision proved correct, says Furber, allowing the adaptation of the models run on the hardware.

Furber and his team chose the Arm architecture as the basis for their programmable design, resulting in the SpiNNaker chip.

SpiNNaker was designed with massive scale in mind, and one million SpiNNaker processor cores make up the massively parallel computer that models human brain functions and runs machine learning algorithms.

Neurons, synapses and networking

Neural networks had a low profile 20 years ago. It was around 2005 when academic Geoffrey Hinton had a breakthrough that enabled deep learning to take off. Hinton joined Google in 2013 and recently resigned from the company to allow him to express his concerns about AI.

Furber’s neural network work took time; funding for the SpiNNaker design began in 2005, seven years after the inexact associative memory work began.

Furber started by looking at how to model neural networks in hardware more efficiently: neurons and synapses.

“The synapse is a complex function which, my biological colleagues tell me, has 1,500 proteins; the presence or absence of each affects how it behaves,” says Furber. “So you have very high dimensional space around one synapse in reality.”

Furber and his team tackled such issues as how to encode the relevant equations in hardware and how the chips were to be connected, given the connectivity topology of the human brain is enormous.

A brain neuron typically connects to 10,000 others. Specific cells in the cerebellum, a part of the human brain that controls movement and balance, have up to 250,000 inputs.

“How do they make a sensible judgment, and what’s happening on these quarter of a million impulses is a mystery,” says Furber.

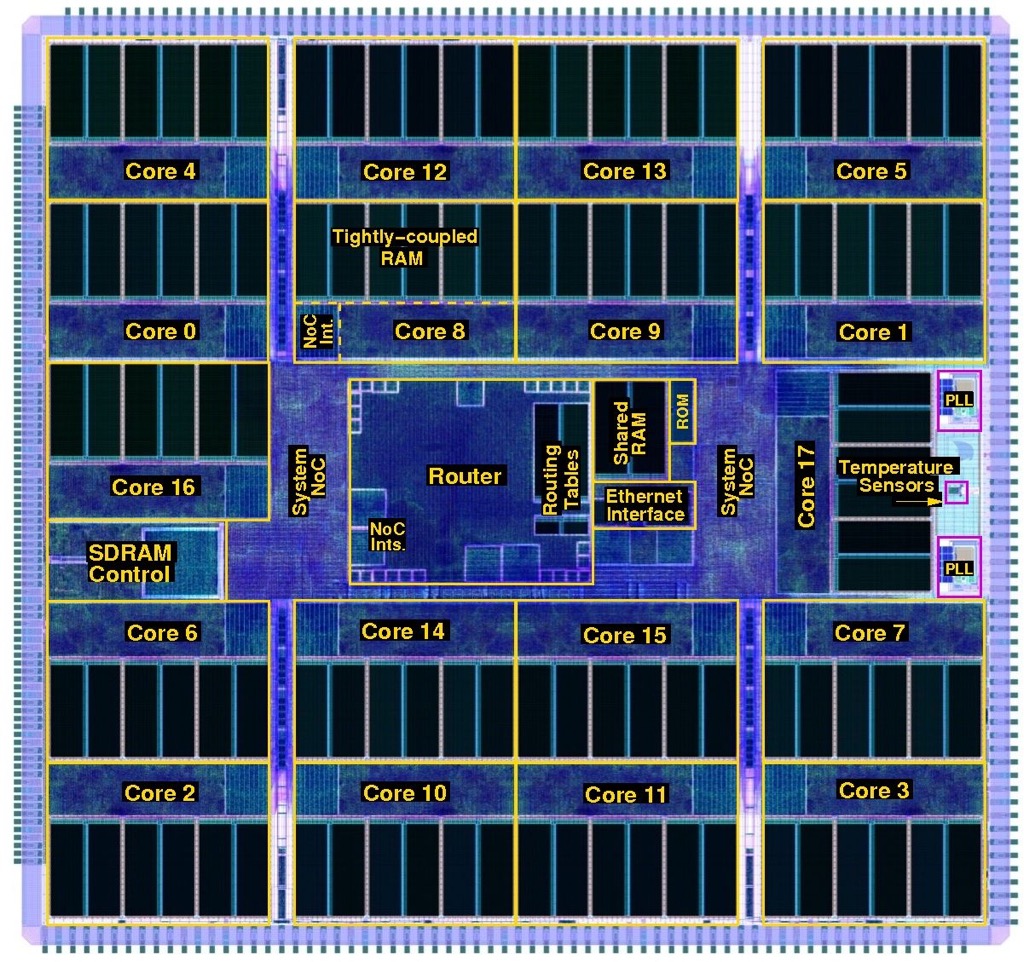

SpiNNaker design

Neurons communicate by sending electrical spikes, asynchronous events that encapsulate information in the firing patterns, so the SpiNNaker would have to model such spiking neurons.

In the human brain, enormous resources are dedicated to communication; 100 billion (10¹¹) neurons are linked by one quadrillion (10¹⁵) connections.

For the chip design, the design considerations included how the inputs and outputs would get into and out of the chip and how the signals would be routed in a multi-chip architecture.

Moreover, each chip would have to be general purpose and scalable so that the computer architecture could implement large brain functions.

Replicating the vast number of brain connections electronically is impractical, so Furber and his team exploited the fact that electronic communication is far faster than the biological equivalent.

This is the basis of SpiNNaker: electrical spikes are encapsulated as packets and whizzed across links. The spikes reach where they need to be in less than a millisecond to match biological timescales.
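
The idea of a spike travelling as a small packet can be sketched as follows. This is a minimal illustration, not SpiNNaker's actual packet format or router: the `SpikePacket` and `Router` names, the integer neuron keys and the core labels are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class SpikePacket:
    """A spike reduced to 'which neuron fired'; the key is a made-up ID."""
    source_key: int


class Router:
    """Toy router: maps a source neuron key to the cores that should hear it."""

    def __init__(self) -> None:
        self.table: dict[int, list[str]] = defaultdict(list)
        self.inboxes: dict[str, list[SpikePacket]] = defaultdict(list)

    def connect(self, source_key: int, target_core: str) -> None:
        self.table[source_key].append(target_core)

    def send(self, packet: SpikePacket) -> int:
        # One packet is copied to every destination listed for its key,
        # so a neuron's many connections do not each need a physical wire.
        targets = self.table.get(packet.source_key, [])
        for core in targets:
            self.inboxes[core].append(packet)
        return len(targets)


router = Router()
router.connect(7, "core_a")
router.connect(7, "core_b")
delivered = router.send(SpikePacket(source_key=7))
print(delivered)  # 2
```

The packet carries only the identity of the neuron that fired; where it goes is decided by the lookup table, which is why the connectivity can be changed in software.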

The neurons and synapses are described using mathematical functions solved on the Arm-based processor using fixed-point arithmetic.
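
As a rough illustration of what solving a neuron model in fixed-point arithmetic looks like, the sketch below steps a simple leaky integrate-and-fire neuron using only integers. The choice of neuron model, the 16 fractional bits and all parameter values are assumptions for the example, not details taken from the SpiNNaker software.

```python
FRACTION_BITS = 16          # assumed fixed-point format, not from the article
ONE = 1 << FRACTION_BITS    # the integer that represents 1.0


def to_fixed(x: float) -> int:
    """Convert a float to the nearest fixed-point integer."""
    return int(round(x * ONE))


def fixed_mul(a: int, b: int) -> int:
    """Multiply two fixed-point values and rescale the result."""
    return (a * b) >> FRACTION_BITS


def lif_step(v: int, current: int, decay: int, threshold: int) -> tuple[int, bool]:
    """One time step of a leaky integrate-and-fire neuron, integers only."""
    v = fixed_mul(v, decay) + current   # leak, then integrate the input
    if v >= threshold:
        return 0, True                  # fire and reset the membrane value
    return v, False


decay = to_fixed(0.9)
threshold = to_fixed(1.0)
current = to_fixed(0.3)

v = 0
spike_steps = []
for step in range(8):
    v, fired = lif_step(v, current, decay, threshold)
    if fired:
        spike_steps.append(step)

print(spike_steps)  # [3, 7]
```

Integer multiplies and shifts of this kind suit a small processor core without floating-point hardware, at the cost of a bounded rounding error on each step.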

SpiNNaker took five years to design. This sounds a long time, especially when the ARM1 took 18 months, until Furber explains the fundamental differences between the two projects.

“Moore’s Law has delivered transistors in exponentially growing abundance,” he says. “The ARM1 had 25,000 transistors, whereas the SpiNNaker has 100 million.”

Also, firms have tens or even hundreds of engineers designing chips; the University of Manchester’s SpiNNaker team numbered five staff.

One critical design decision that had to be made was whether a multi-project wafer run was needed to check SpiNNaker’s workings before committing to production.

“We decided to go for the full chip, and we got away with it,” says Furber. Cutting out the multi-project wafer stage saved 12% of the total system build cost.

The first SpiNNaker chips arrived in 2010. The first test boards had four SpiNNaker chips and were used for software development. Then the full 48-chip boards were made, each connecting to six neighbouring ones.

The first milestone was in 2016 when a half-million processor machine was launched and made available for the European Union’s Human Brain Project. The Human Brain Project came about as an amalgam of two separate projects: modelling of the human brain and neuromorphic computing.

This was followed in 2018 by the full one-million-core SpiNNaker machine.

“The size of the machine was not the major constraint at the time,” says Furber. “No users were troubled by the fact that we only had half a million cores.” The higher priority was improving the quality and reach of the software.

Programming the computer

The Python programming language is used to program the SpiNNaker parallel processor machine, coupled with the Python Neural Network application programming interface (PyNN API).

PyNN allows neuroscientists to describe their networks as neurons with inputs and outputs (populations) and how their outputs act as inputs to the next layer of neurons (projections).

Using this approach, neural networks can be described concisely, even if it is a low-level way to describe them. “You’re not describing the function; you’re describing the physical instantiation of something,” says Furber.
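
The populations-and-projections style of description can be mimicked with a few plain Python classes. The sketch below is deliberately not the PyNN API: the class names echo its vocabulary, but the fields, the all-to-all connectivity and the sizes are assumptions chosen to keep the example self-contained.

```python
from dataclasses import dataclass, field


@dataclass
class Population:
    """A named group of neurons (toy stand-in, not the PyNN class)."""
    label: str
    size: int


@dataclass
class Projection:
    """All-to-all connections from one population to another."""
    pre: Population
    post: Population
    weight: float

    def synapse_count(self) -> int:
        return self.pre.size * self.post.size


@dataclass
class Network:
    populations: list[Population] = field(default_factory=list)
    projections: list[Projection] = field(default_factory=list)

    def add_population(self, label: str, size: int) -> Population:
        population = Population(label, size)
        self.populations.append(population)
        return population

    def connect(self, pre: Population, post: Population, weight: float) -> Projection:
        projection = Projection(pre, post, weight)
        self.projections.append(projection)
        return projection

    def total_synapses(self) -> int:
        return sum(p.synapse_count() for p in self.projections)


net = Network()
inputs = net.add_population("inputs", 100)
hidden = net.add_population("hidden", 50)
outputs = net.add_population("outputs", 10)
net.connect(inputs, hidden, weight=0.5)
net.connect(hidden, outputs, weight=0.2)
print(net.total_synapses())  # 5500
```

Three populations and two projections describe 5,500 synapses, which shows how concise the description is, and also why it is a description of structure rather than of function.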

Simulators are available that run on laptops to allow model development. Once complete, the model can be run on the BrainScaleS machine for speed or the SpiNNaker architecture if scale is required.

BrainScaleS, also part of the Human Brain Project, is a machine based in Heidelberg, Germany, that implements models of neurons and synapses at 1000x biological speeds.

Modelling the brain

The SpiNNaker computer became the first to run a model of a segment of mammalian cortex in real biological time. The model of the cortex was developed by Jülich Research Centre in Germany.

“The cortex is a very important part of the brain and is where most of the higher-level functions are thought to reside,” says Furber.

When the model runs, it reproduces realistic biological spiking in the neural network layers. The problem, says Furber, is that the cortex is poorly understood.

Neuroscientists have a good grasp of the cortex’s physiology – the locations of the neurons and their connections, although not their strengths – and this know-how is encapsulated in the PyNN model.

But neuroscientists don’t know how the inputs are coded or what the outputs mean. Furber describes the cortex as a black box with inputs and outputs that are not understood.

“What we are doing is building a model of the black box and asking if the model is realistic in the sense that it reproduces something we can sensibly measure,” says Furber.

For neuroscientists to progress, the building blocks must be combined to form whole brain models to understand how to test them.

At present, the level of testing is to turn them on and see if they produce realistic spike patterns, says Furber.

SpiNNaker 2

A second-generation SpiNNaker 2 device has been developed, with the first silicon available in late 2022 while the first large SpiNNaker 2 boards are becoming available.

The original SpiNNaker was implemented using a 130nm CMOS process, while SpiNNaker 2 is implemented using a 22nm fully depleted silicon on insulator (FDSOI) process.

SpiNNaker 2 improves processing performance by 50x such that a single SpiNNaker 2 chip exceeds the processing power of the original 48-chip SpiNNaker printed circuit board.

SpiNNaker 2’s design is also more general purpose. A multiply-accumulator engine has been added for deep learning AI. The newer processor also has 152 processor engines compared to SpiNNaker’s 18, and the device includes dynamic power management.

“Each of the 152 processor engines effectively has its dynamic voltage and frequency scaling control,” says Furber. “You can adjust the voltage and frequency and, therefore, the efficiency for each time step, even at the 0.1-millisecond level; you look at the incoming workload and just adjust.”
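
The per-time-step adjustment Furber describes can be sketched as picking the slowest operating point that still finishes the incoming work in time. Everything specific here is an assumption: the three voltage and frequency levels are invented for illustration, and the energy estimate uses only the first-order rule that dynamic switching energy per cycle scales with the square of the supply voltage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PerformanceLevel:
    """A hypothetical voltage/frequency operating point."""
    name: str
    voltage: float        # volts
    frequency_mhz: float  # megahertz


# Illustrative values only; not SpiNNaker 2's actual operating points.
LEVELS = [
    PerformanceLevel("low", 0.5, 100.0),
    PerformanceLevel("mid", 0.6, 200.0),
    PerformanceLevel("high", 0.8, 400.0),
]


def pick_level(cycles_needed: float, time_step_ms: float) -> PerformanceLevel:
    """Choose the slowest level that completes the work within the time step."""
    for level in LEVELS:  # ordered from slowest to fastest
        cycles_available = level.frequency_mhz * 1_000 * time_step_ms
        if cycles_available >= cycles_needed:
            return level
    return LEVELS[-1]  # overloaded: fall back to the fastest level


def relative_energy(level: PerformanceLevel, cycles_needed: float) -> float:
    """First-order estimate: energy per cycle is proportional to V squared."""
    return level.voltage ** 2 * cycles_needed


workloads = [5_000, 15_000, 35_000]  # cycles of work in successive time steps
chosen = [pick_level(cycles, time_step_ms=0.1).name for cycles in workloads]
print(chosen)  # ['low', 'mid', 'high']
```

Under this simple model, running a light time step at the low level rather than the high one cuts its switching energy by roughly the ratio of the squared voltages.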

The University of Technology Dresden has been awarded an €8.8 million grant to build a massively parallel processor using 10 million SpiNNaker 2 devices.

The university is also working with German automotive firms to develop edge-cloud applications using SpiNNaker 2 to process sensor data with millisecond latency.

The device is also ideal for streaming AI applications where radar, video or audio data can be condensed close to where it is generated before being sent for further processing in the cloud.

Furber first met with the University of Technology Dresden’s neuromorphic team via the Human Brain Project.

The teams decided to collaborate, given Dresden’s expertise in industrial chip design complementing Furber and his team’s system expertise.

Takeaways

“We are not there yet,” says Furber, summarizing the brain work in general.

Many practical lessons have been learnt from the team’s research work in developing programmable hardware at a massive scale. The machine runs brain models in real time, demonstrating realistic brain behaviour.

“We’ve built a capability,” he says. “People are using this in different ways: exploring ideas and exploring new learning rules.”

In parallel, there has also been an explosion in industrial AI, and a consensus is emerging that neuromorphic computing and mainstream AI will eventually converge, says Furber.

“Mainstream AI has made these huge advances but at huge cost,” says Furber. Training one of these leading neural networks takes several weeks, consuming vast amounts of power. “Can neuromorphics change that?”

Mainstream AI is well established and supported with compelling tools, unlike the tools for neuromorphic models.

Furber says the SpiNNaker technology is proven and reliable. The Manchester machine is offered as a cloud service and remained running during the pandemic when no one could enter the university.

But Furber admits it has not delivered any radical new brain science insights.

“We’ve generated the capability that has that potential, but no results have been delivered in this area yet, which is a bit disappointing for me,” he says.

Will devices like SpiNNaker impact mainstream AI?

“It’s still an open question,” says Furber. “It has the potential to run some of these big AI applications with much lower power.”

Given such hardware is spike-driven, it only processes when spiking takes place, saving energy. The processing is also sparse: areas of the chip tend to be inactive during spiking, which saves further energy.

Professor Emeritus

Furber is approaching retirement. I ask if he wants to continue working as a Professor Emeritus. “I hope so,” he says. “I will probably carry on at that moment.”

He also has some unfinished business with model aircraft. “I’ve never lost my itch to play with model aeroplanes, maybe I’ll have time for that,” he says.

The last time he flew planes was when he was working at Acorn. “Quite often, the aeroplanes came back in one piece,” he quips.


Further information

Podcast: SpiNNaker 2: Building a Brain with 10 Million CPUs