OFC 2025 industry reflections - Part 2

Gazettabyte is asking industry figures for their thoughts after attending the 50th-anniversary OFC show in San Francisco. In Part 2, the contributions are from BT’s Professor Andrew Lord, Chris Cole, Coherent’s Vipul Bhatt, and Juniper Networks’ Dirk van den Borne.

Professor Andrew Lord, Head of Optical Network Research at BT Group

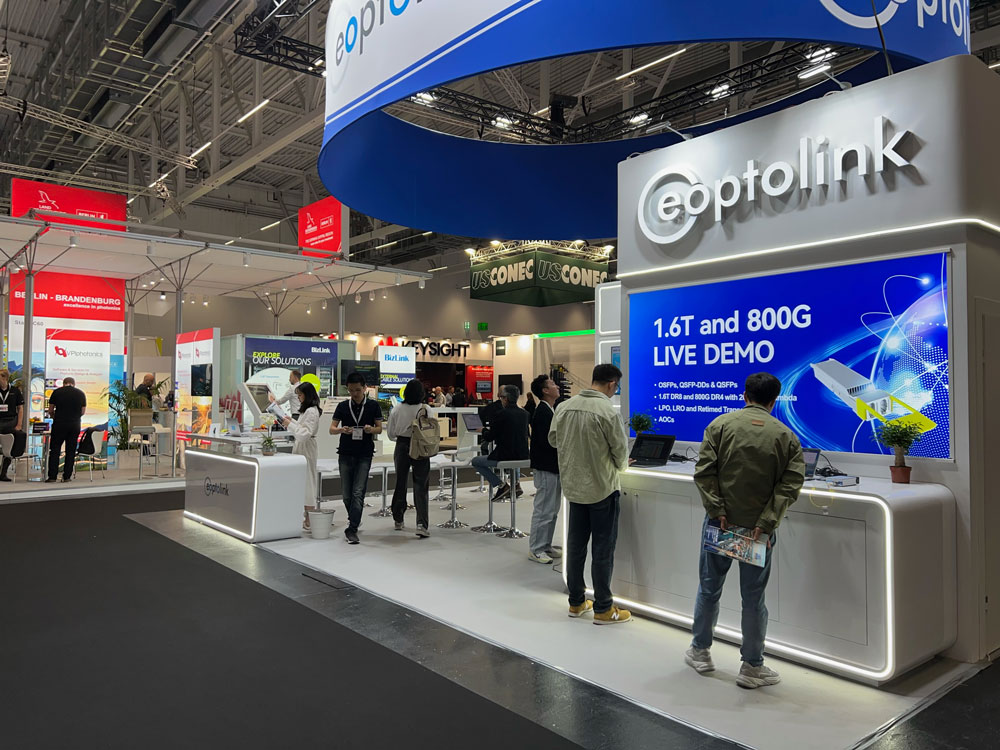

OFC was a highly successful and lively show this year, reflecting a sense of optimism in the optical comms industry. The conference was dominated by the need for optics in data centres to handle the large AI-driven demands. And it was exciting to see the conference at an all-time attendance peak.

From a carrier perspective, I continued to appreciate the maturing of 800-gigabit plugs for core networks and 100GZR plugs (including bidirectional operation for single-fibre working) for the metro-access side.

Hollow-core fibre continues to progress with multiple companies developing products, and evidence for longer lengths of fibre in manufacturing. Though dominated by data centres and low-latency applications such as financial trading, use cases are expected to spread into diverse areas such as subsea cables and 6G xHaul.

There was also a much-increased interest in fibre sensing as an additional revenue generator for telecom operators, although compelling use cases will require more cost-effective technology.

Lastly, there has been another significant increase in quantum technology at OFC. There was an ever-increasing number of Quantum Key Distribution (QKD) protocols on display but with a current focus on Continuous-Variable QKD (CV-QKD), which might be more readily manufacturable and easier to integrate.

Chris Cole, Optical Communications Advisor

For the premier optics conference, the amount of time and floor space devoted to electrical interfaces was astounding.

Even more amazing is that while copper’s death at the merciless hands of optics continues to be reported, the percentage of time devoted to electrical work at OFC is going up. Multiple speakers commented on this throughout the week.

One reason is that as rates increase, the electrical links connecting optical links to ASICs are becoming disproportionately challenging. The traditional Ethernet model, in which the electrical link adds only a small penalty to the overall link, is becoming less valid.

Another reason is the introduction of power-saving interfaces, such as linear and half-retimed, which tightly couple the optical and electrical budgets.

Optics engineers now have to worry about S-parameters and cross-talk of electrical connectors, vias, package balls, copper traces and others.

The biggest buzz in datacom was around co-packaged optics, helped by Nvidia’s switch announcements at GTC in March.

Established companies and start-ups were outbidding each other with claims of the highest bandwidth in the smallest space; typically the more eye-popping the claims, the less actual hard engineering data to back them up. This is for a market that is still approximately zero and faces its toughest hurdles of yield and manufacturability ahead.

To their credit, some companies are playing the long game and doing the slow, hard work to advance the field. For example, I continue to cite Broadcom for publishing extensive characterisation of their co-packaged optics and establishing the bar for what is minimally acceptable for others if they want to claim to be real.

The irony is that, in the meantime, pluggable modules are booming, and it was exciting to see so many suppliers thriving in this space, as demonstrated by the products and traffic in their booths.

The best news for pluggable module suppliers is that if co-packaged optics takes off, it will create more bandwidth demand in the data centre, driving up the need for pluggables.

I may have missed it, but no coherent ZR or other long-range co-packaged optics were announced.

A continued amazement at each OFC is the undying interest and effort in various incarnations of general optical computing.

Despite having no merit as easily shown on first principles, the number of companies and researchers in the field is growing. This is also despite the market holding steady at zero.

The superficiality of the field is best illustrated by a slogan gaining popularity and heard at OFC: computing at the speed of light. This is despite the speed of propagation being similar in copper and optical waveguides. The reported optical computing devices are hundreds of thousands or millions of times larger than equivalent CMOS circuits, resulting in the distance, not the speed, determining the compute time.

Practical optical computing precision is limited to about four bits, unverified claims of higher precision notwithstanding, making it useless in data centre applications.

Vipul Bhatt, Vice President, Corporate Strategic Marketing at Coherent

Three things stood out at OFC:

- The emergence of transceivers based on 200-gigabit VCSELs

- A rising entrepreneurial interest in optical circuit switching

- And an accelerated momentum towards 1.6-terabit transceivers (8×200-gigabit) alongside the push for 400-gigabit lanes due to AI-driven bandwidth expansion.

The conversations about co-packaged optics showed increasing maturity, shifting from ‘pluggable versus co-packaged optics’ to their co-existence. The consensus is now more nuanced: co-packaged optics may find its place, especially if it is socketed, while front-panel pluggables will continue to thrive.

Strikingly, talk of optical interconnects beyond 400-gigabit lanes was almost nonexistent. Even as we develop 400 gigabit-per-lane products, we should be planning the next step: either another speed leap (this industry has never disappointed) or, more likely, a shift to ‘fast-and-wide’, blurring the boundary between scale-out and scale-up by using a high radix.

Considering the fast cadence of bandwidth upgrades, the absence of such a pivotal discussion was unexpected.

Dirk van den Borne, director of system engineering at Juniper Networks

The technology singularity is defined as the merger of man and machine. However, after a week at OFC, I will venture a different definition where we call the “AI singularity” the point when we only talk about AI every waking hour and nothing else. The industry seemed close to this point at OFC 2025.

My primary interest at the show was the industry’s progress around 1.6-terabit optics, from scale-up inside the rack to data centre interconnect and long-haul using ZR/ZR+ optics. The industry here is changing and innovating at an incredible pace, driven by the vast opportunity that AI unlocks for companies across the optics ecosystem.

A highlight was the first demos of 1.6-terabit optics using a 3nm CMOS process DSP, which have started to tape out and bring the power consumption down from a scary 30W to a high but workable 25W. Well beyond the power-saving alone, this difference matters a lot in the design of high-density switches and routers.

It’s equally encouraging to see the first module demos with 200 gigabit-per-lane VCSELs and half-retimed linear-retimed optical (LRO) pluggables. Both approaches can potentially reduce the optics power consumption to 20W and below.
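To put the module power figures above on a common footing, they can be converted to energy per bit. The sketch below is illustrative only: the function name `energy_per_bit_pj` and the row labels are assumptions, and each power figure is treated as that of a module running at the full 1.6 terabits per second.

```python
def energy_per_bit_pj(power_w: float, rate_tbps: float) -> float:
    """Convert module power (W) and line rate (Tbps) to picojoules per bit.

    1 W / (1 Tbit/s) = 1e-12 J/bit = 1 pJ/bit, so the ratio is already in pJ/bit.
    """
    return power_w / rate_tbps


# Power figures quoted in the text for 1.6-terabit modules (labels are illustrative).
figures = (
    ("before the 3nm DSP", 30.0),
    ("with the 3nm DSP", 25.0),
    ("VCSEL or LRO target", 20.0),
)

for label, watts in figures:
    print(f"{label}: {watts:.0f}W -> {energy_per_bit_pj(watts, 1.6):.2f} pJ/bit")
```

On these assumptions, the move from 30W to 25W corresponds to a drop from 18.75 to about 15.6 pJ/bit, and 20W corresponds to 12.5 pJ/bit.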

The 1.6-terabit ecosystem is rapidly taking shape and will be ready for prime time once 1.6-terabit switch ASICs arrive in the marketplace. There’s still a lot of buzz around linear pluggable optics (LPO) and co-packaged optics, but both don’t seem ready yet. LPO mostly appears to be a case of too little, too late. It wasn’t mature enough to be useful at 800 gigabits, and the technology will be highly challenging for 1.6 terabits.

The dream of co-packaged optics will likely have to wait for two more years, though it does seem inevitable. But with 1.6 terabit pluggable optics maturing quickly, I don’t see it having much impact in this generation.

The ZR/ZR+ coherent optics are also progressing rapidly. Here, 800-gigabit is ready, with proven interoperability between modules and DSPs using the OpenROADM probabilistic constellation shaping standard, a critical piece for interoperability in more demanding applications.

The road to 1600ZR coherent optics for data centre interconnect (DCI) is now better understood, and power consumption projections seem reasonable for 2nm DSP designs.

Unfortunately, the 1600ZR+ is more of a question mark to me, as ongoing standardisation is taking this in a different direction and, hence, a different DSP design from 1600ZR.

The most exciting discussions are around “scale-up” and how optics can replace copper for intra-rack connectivity.

This is an area of great debate and speculation, with wildly differing technologies being proposed. However, the goal of around 10 petabit-per-second (Pbps) in cross-sectional bandwidth in a single rack is a terrific industry challenge, one that can spur the development of technologies that might open up new markets for optics well beyond the initial AI cluster application.

ECOC 2024 industry reflections - Part II

Gazettabyte is asking industry figures for their thoughts after attending the recent 50th-anniversary ECOC show in Frankfurt. Here are contributions from Nubis Communications' Dan Harding, imec's Peter Ossieur, and Chris Cole.

Dan Harding, CEO, Nubis Communications

Our biggest takeaway from ECOC is the increased confidence not just in 200-gigabit electrical and optical interfaces but also in 400 gigabit. It is becoming clear that in 2025 and 2026, the industry will broadly launch platforms using a 200 gigabit per lane serdes [serialiser/deserialiser interfaces] that will connect to 200 gigabit per lane optics.

At ECOC, we were shown demonstrations of 400-gigabit serdes. We had several discussions with industry leaders who expressed confidence that serdes can scale to 400 gigabit per lane and that the industry will need optics to support this in the next few years. Different optics approaches were shown at ECOC, but the main takeaway was that serdes speeds continue to advance, and optics needs to figure out a way to keep up.

Our second takeaway is that advancements in materials have significantly reduced loss across printed circuit boards (PCBs), so linear pluggable optics (LPO) at 200 gigabit looks increasingly feasible with vertical line cards and even with traditional ones.

Generally, the 200 gigabit per lane generation will be more similar to the 100-gigabit generation than we thought a year ago. That said, the transition to each new service speed is becoming fuzzy, such that 100 gigabits per lane will have years of overlap with 200 gigabits per lane. The data centre operators and system vendors remain committed to copper for short-reach links, even at 200 gigabit per lane. However, there will be more “active” copper links, so the mix between passive and active copper will shift to more active at 200 gigabit per lane.

As a supplier of optics for AI/machine-learning networks, the third big takeaway for us is that the speed at which new architectures are being deployed puts an extreme focus on delivering a solution that can quickly move from first samples into volume production. That means we must constantly consider our entire development flow to support this.

Lastly, we were encouraged to see progress on a new form factor for pluggable optics to eliminate the “gold fingers.” This will help the optics industry take advantage of silicon photonics and the density it can deliver. Let’s see how quickly this form factor work progresses, but this is the right direction for the optics industry.

Peter Ossieur, Program Manager, High-Speed Transceivers at imec-IDLab

I noted the speed at which the industry has embraced the concept of linear pluggable optics (LPO). But I’m still unsure how linear pluggable optics will play out.

At ECOC, it was evident that linear pluggable optics are now driving rapid adoption of new materials, notably thin-film lithium niobate. This is excellent news for imec, which is putting significant effort into integrating lithium niobate on its 200mm silicon photonics platform.

As for surprises, one is that co-packaged optics continues to struggle. Another is that the industry’s focus is already turning to 400 gigabit per lane.

Chris Cole, Optical Communications Advisor

The overall ECOC impression was like this year’s OFC show; optics are back with a vengeance. The excitement, buzz, and optimism were infectious. Also uplifting was the focus of the technical conference and the exhibition on solving tough engineering challenges and going after new markets rather than eking out a living. This period may yet be a bubble, but it is a fun ride while it lasts.

There were two important technical trends of note. First, parallelism will become increasingly important, including more fibre and wavelengths. Second, reliability must be approached holistically, and today’s data centre paradigm of swapping failed modules is inadequate to support AI/machine-learning growth, especially for training.

Also necessary are significantly lower Failures in Time (FIT) for all parts of an optical link, along with system-level redundancy schemes. Achieving this will likely require a shift to fab process-based integration, replacing current discrete assembly methods. This transition is not just a suggestion but a crucial step towards ensuring the reliability and efficiency of optical systems.

Pluggable optics in need of a makeover

Current pluggable optics have stunted optical innovation for the last decade. So argues Chris Cole, industry veteran and an advisor at start-up Quintessent.

Cole calls for a new form factor supporting hundreds of electrical and optical channels. In a workshop on massively parallel optics held at the recent ECOC conference and exhibition in Frankfurt, he outlined other important specifications such a module should have.

Cole, working with other interested parties in the new form factor, will present their proposal to the OIF industry body at its next meeting in November.

“I’m very optimistic it will be approved,” says Cole.

Limitations

Pluggable optics require improvement in several areas.

One limitation is the small number of large gold-fingered interconnects on the edge of the printed circuit board (PCB) that fits inside the pluggable module. “This technology goes back 30 or 40 years,” says Cole.

The high-speed OSFP (Octal Small Form-factor Pluggable) module has a row of eight transmit-receive pairs of gold-fingered edge interconnects. The OSFP interface supports 800 gigabits per second (Gbps), and 1.6 terabits per second (Tbps) if 200Gbps signalling is used. The industry can also double capacity to 3.2-terabit with 8x400Gbps signals.

In turn, the QSFP-XD has 16 such pairs arranged in two rows. That promises 3.2Tbps capacity using 16x200Gbps signals and 6.4Tbps with 16x400Gbps signals. However, Cole expects huge signal integrity issues using such a design.
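The capacity figures quoted above follow directly from lane count times per-lane rate. The following is a minimal sketch of that arithmetic; the function name `module_capacity_gbps` is illustrative, and the 100Gbps rate for OSFP is inferred from 800Gbps over eight lanes rather than stated in the text.

```python
def module_capacity_gbps(lanes: int, lane_rate_gbps: int) -> int:
    """Aggregate capacity of a module: lane count times per-lane rate."""
    return lanes * lane_rate_gbps


# Lane counts and per-lane rates taken from the text
# (100Gbps for OSFP is inferred from the 800Gbps figure).
form_factors = {
    "OSFP": (8, (100, 200, 400)),
    "QSFP-XD": (16, (200, 400)),
}

for name, (lanes, rates) in form_factors.items():
    for rate in rates:
        tbps = module_capacity_gbps(lanes, rate) / 1000
        print(f"{name}: {lanes} x {rate}Gbps = {tbps:.1f}Tbps")
```

The sketch only reproduces the headline numbers; it says nothing about the signal integrity issues Cole expects at the higher rates.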

Heat dissipation is another challenge with pluggables. Heat is extracted from a pluggable using a metal plate on the top, which Cole says limits power consumption to 50W.

It is the low channel count, however, that is the biggest restriction, says Cole.

Meanwhile, yield and reliability have yet to advance. He cites the significant reliability performance achieved by Intel with its integrated laser technology. “It doesn’t do any good because who cares?” he says. “You have a four-channel module, and something’s wrong; you throw it away.”

Proposed form factor

The high-capacity form factor proposal calls for a dense, high-bandwidth design. Significant numbers of electrical and optical lanes are needed for that: hundreds rather than eight or 16. Moreover, hundreds of electrical interfaces is not a new concept: Cole cites the large 300-pin MSA used for early embedded coherent modems.

The new form factor would have 2D electrical connections with a pitch of 0.5mm or lower. A high-speed 256-lane count is envisaged, which would also enable many optical lanes and wavelength counts. Each electrical lane should have a bandwidth of 200GHz to support 448Gbps signalling. If implemented, the package’s capacity would be 114Tbps.

“[200GHz lanes] is not very hard to do if you have a connection that is almost negligible height,” says Cole.
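As a quick check of the package capacity quoted above, the sketch below multiplies the proposed lane count by the per-lane signalling rate. The function name `package_capacity_tbps` is illustrative, not part of the proposal.

```python
def package_capacity_tbps(lanes: int, lane_rate_gbps: float) -> float:
    """Aggregate electrical capacity in Tbps for a given lane count and rate."""
    return lanes * lane_rate_gbps / 1000.0


# Figures from the text: 256 electrical lanes at 448Gbps each.
capacity = package_capacity_tbps(256, 448)
print(f"256 x 448Gbps = {capacity:.3f}Tbps")
```

The product is 114.688Tbps, which the text gives as 114Tbps.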

Many optical connections are also required, says Cole. He suggests 512, where individual links are supported to ensure a high radix. “We can quibble about the correct number, but it’s not eight or 16,” says Cole. The design should also support liquid cooling to ensure a 100W power consumption.

Optical options

Cole says the design must be optics-agnostic. Nobody can predict the future, he says.

To support 12.8Tbps, for example, it could use 32 optical lanes each at 400Gbps or 16 lanes at 800Gbps, both using a thin-film lithium niobate modulator. However, the design should also support many more slower optical channels.

One such 12.8Tbps optical transmission example is 256 channels, each a 50Gbps non-return-to-zero signal, making use of compact ring-resonator modulators. It could even be 3,200x4Gbps MicroLED channels using an approach favoured by Avicena. It is not out of the question, says Cole.
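The four optical options described above all reach the same aggregate capacity by trading channel count against channel rate. This short sketch confirms that; the option labels and the helper name `total_gbps` are illustrative.

```python
TARGET_GBPS = 12_800  # 12.8Tbps aggregate, as in the text


def total_gbps(channels: int, rate_gbps: int) -> int:
    """Aggregate capacity: number of optical channels times per-channel rate."""
    return channels * rate_gbps


# (label, channel count, per-channel rate in Gbps), all taken from the text.
options = [
    ("thin-film lithium niobate, 400Gbps lanes", 32, 400),
    ("thin-film lithium niobate, 800Gbps lanes", 16, 800),
    ("ring-resonator modulators, 50Gbps NRZ", 256, 50),
    ("MicroLED, 4Gbps channels", 3200, 4),
]

for label, channels, rate in options:
    total = total_gbps(channels, rate)
    status = "matches" if total == TARGET_GBPS else "differs from"
    print(f"{label}: {channels} x {rate}Gbps = {total / 1000:.1f}Tbps ({status} target)")
```

Each option totals 12.8Tbps, which is the sense in which the design can stay optics-agnostic: the electrical side sees the same aggregate capacity whichever optical approach is used.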

Cole stresses that while 12.8-terabit and 25.6-terabit capacities may sound high compared to existing pluggables, the numbers should be viewed as aggregate package capacities. “You would be breaking them out into many channels,” he says.

Retaining features that work

Cole argues that the benefits of pluggable modules must be retained. These include front-plate access, testing, and easy replacement. Equally, the proposed form factor should preserve existing industry business models.

He is also adamant that conventional assembly must be replaced with process-based photonic integration to improve reliability. “The consistency you get in a fab versus what you get in a discrete assembly, there’s an order of magnitude difference there,” he says.

OFC 2024 industry reflections: Final part

Chris Cole, Consultant

OFC and optics were back with a vengeance. The high level of excitement and participation in the technical and exhibit programmes was fuelled by artificial intelligence/machine learning (AI/ML). To moderate this exuberance, a few reality checks are offered.

During the Optica Executive Forum, held on the Monday, one of the panels was with optics industry CEOs. They were asked if AI/ML is a bubble. All five said no. They are right that there is a real, dramatic increase in optics demand driven by AI/ML, with solid projections showing exponential growth.

At the same time, it is a bubble because of the outrageous valuations for anything with an AI/ML label, even on the most mundane products. Many booths in the Exhibit Hall had AI/ML on their panels, for the same product types companies have been showing for years. Some of the start-ups and public companies presenting and exhibiting at OFC have frothy valuations by claiming to solve compute bottlenecks. An example is optically interconnecting memory, which sends investors into a frenzy, as if this has not been considered for decades.

The problem with a bubble is that it misallocates resources to promises of near-term pay off, at the expense of investment into long-term fundamental technology which is the only way to enable a paradigm shift to optical AI/ML interconnect.

I presented a version of the below table at the OFC Executive Forum, pointing out that there have only been two paradigm shifts in optical datacom, and these were enabled by fundamentally new optical components and devices which took decades to develop.

My advice to investors was to be skeptical of any optically-enabled breakthrough claims which simply rearrange or integrate existing components and devices. As with previous bubbles, this one will self-correct, and many of the stratospheric valuations will collapse.

Source: Chris Cole

A second dose of reality was provided by Ashkan Seyedi of Nvidia, in several OFC forums, illustrated by the Today’s Interconnect Details table below (shared with permission).

Source: Ashkan Seyedi, Nvidia

He pointed out that the dominant AI/ML interconnect continues to be copper because it beats optics by integer multiples, or even an order of magnitude, on the metrics of bandwidth density, power, and cost. Existing data centre optical networking technology cannot simply be repackaged as optical compute input-output (I/O), including optical memory interconnect, because that does not beat copper.

A third dose of reality came from Xiang Zhou of Google and Qing Wang of Meta in separate detailed analyses presented at the Future of LPO (Linear Pluggable Optics) Workshop. They showed that not only does linear pluggable optics have no future beyond 112 gigabits per lane, but even at that rate it is highly constrained, making it unsuitable for general data centre deployment.

Yet linear pluggable optics was one of the big stories at OFC 2024, with many highly favourable presentations and more than two dozen booths exhibiting it in some form. This was the culmination of a view that has been advanced for years that optics development is too slow, especially if it involves standards. LPO was moved blazingly fast into prototype hardware without being preceded by extensive analysis. The result was predictable as testing in typical large deployment scenarios found significant problems.

At OFC 2025, there will be few if any linear pluggable optics demos. And it will not be generally deployed in large data centres.

Coincidentally, the OIF announced that it started a project to standardise optics with one digital signal processor (DSP) in the link, located in the transmitter. This was preceded by analysis, including by Google and Meta, showing good margin against the types of impairments found in large data centres. The expectation is that many IC vendors will have DSP on transmit-only chips soon, including likely at OFC 2025.

A saving grace of linear pluggable optics may be the leveraging of related OIF work on linear receiver specification methodology. Another benefit may be the reaffirmation that real progress in optics is hard and requires fundamental understanding. Shortcutting of well-established engineering practices leads to wasted effort.

Real advances require large investment and take many years, which is what is necessary for optical AI/ML compute interconnect. Let’s hope investors realise this.

Hojjat Salemi, Chief Business Development Officer, Ranovus

Hyperscalers are increasingly recognising that scaling AI/ML compute demands extensive optical connectivity, and the conventional approach of using pluggable optical modules is proving inadequate.

The network infrastructure plays a pivotal role in the compute architecture, with various optimisation strategies depending on the workload. Both compute scale-up and scale-out scenarios necessitate substantial connectivity, high-density beach-front, cost-effectiveness, and energy efficiency. These requirements underscore the advantages of co-packaged optics (CPO) in meeting the evolving demands of AI/ML compute scaling.

It is great to see prominent tier-1 vendors like Nvidia, AMD, Broadcom, Marvell, GlobalFoundries, and TSMC embracing co-packaged optics. Their endorsement shows a significant step forward, indicating that the supply chain is gearing up for high-volume manufacturing by 2026. The substantial investments being poured into this technology underscore the collective effort to address the pressing challenge of scaling compute efficiently. This momentum bodes well for the future of AI/ML compute infrastructure and its ability to meet the escalating demands of various applications.

What surprised me was how fast low-power pluggable optics fizzled. While initially shown as a great technology, linear pluggable optics ultimately fell short in meeting some critical requirements of hyperscalers. Although retimed pluggable optical modules have been effective in certain applications and are likely to continue serving those needs for the foreseeable future, the evolving demands of new applications such as scaling compute necessitate innovative solutions like co-packaged optics.

The shift towards co-packaged optics highlights the importance of adapting to emerging technologies that can better address the unique challenges and requirements of rapidly evolving industries like hyperscale computing.

Harald Bock, Vice President Network Architecture, Infinera

I am impressed by the range of topics, excellent scientific work and product innovation each time I attend OFC.

Normally, the show's takeaways differ among the participants that I talk to. This year, most of the attendees I chatted with agreed on the main topics. The memorable items this year ranged from artificial intelligence (AI) data centres and 800 gigabit-per-second (Gbps) pluggables to the Full Spectrum Concert at Infinera’s OFC party that was held on the USS Midway.

AI is becoming the key driver for network capacity. While we are a very technology-driven industry, the interest in different technologies is driven by the business opportunities we expect. This puts AI at the top of the list. It is not the AI use cases in network operations, planning, and analytics, which are all progressing, but rather the impact that deploying AI data centres will have on network capacity and particularly on optical interfaces within and between data centres.

The interest was clearly amplified by the fact that recovery of the telecom networks business is only expected in the year’s second half.

Short term, AI infrastructure creates massive demand for short-reach interconnect within data centres, with longer-reach inter-data centre connectivity also being driven by new buildouts. So, we can expect AI to be the key driver of network bandwidth in the coming years.

It is in this context that linear pluggable optics has become an important candidate technology to provide efficient, low-energy interconnect, and as a result, it generated a huge amount of interest this year, stealing some of the attention that co-packaged optics or similar approaches have received in the past. Overall, AI use cases drove huge interest in 800Gbps pluggable optics products and demonstrations at the show.

Reducing interface and network power consumption has become a key industry objective. In all of these use cases and products, power consumption is now the main optimisation goal in order to drive down overall data centre power or to fit all pluggable optics into the same existing form factors (QSFP-DD and OSFP), even at higher rates such as 1.6Tbps.

I do believe that reducing power consumption, be it per capacity, or per reach x capacity depending on use case, has become our industry’s main objective. Looking at projected capacity growth that will continue at 35 to 40 per cent per year across much of cloud networks, that is what we all should be working on.
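To give a feel for the growth rate quoted above, the sketch below converts an annual growth rate into a doubling time using the standard compound-growth relation, doubling time = ln 2 / ln(1 + g). The function name `doubling_time_years` is illustrative, and the calculation assumes the rate stays constant from year to year.

```python
import math


def doubling_time_years(annual_growth: float) -> float:
    """Years for capacity to double at a constant annual growth rate.

    annual_growth is a fraction, e.g. 0.35 for 35 per cent per year.
    """
    return math.log(2) / math.log(1 + annual_growth)


# Growth range quoted in the text: 35 to 40 per cent per year.
for growth in (0.35, 0.40):
    years = doubling_time_years(growth)
    print(f"{growth:.0%} per year -> capacity doubles in about {years:.1f} years")
```

At these rates capacity doubles roughly every two to two and a half years, so power per unit of capacity would need to fall at a comparable pace just to hold total power flat.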

Another observation is that power consumption and capacity per duct have replaced spectral efficiency as the figure of merit. You could say that this is starting to replace the objective of increasing fibre capacity that our industry has been working under for many years.

We have all discussed the fact that we are no longer going to be able to easily increase spectral efficiency as we are approaching Shannon’s limit. In order to further increase fibre capacity, we have been talking about additional wavelength bands, with products now achieving beyond 10-terabit transmission bandwidth with Super C- and Super L-band and the option to add the S-, O-, and U- bands, as well as about spatial division multiplexing, which today refers to the use of multiple fibre cores to transmit data.

Before OFC, I was puzzled about the steps we, as an industry, would take since all of these require more than a single product from one company. Indeed it is an ecosystem of related components, amplifiers, wavelength handling, even splicing procedures. After OFC, I am now confident that uncoupled multi-core fibre is a good candidate for a next step, with progress on additional wavelength bands not at all out of the picture.

There is one additional point I learned from looking at this topic. In real-world deployments today, multi-core fibre will accelerate a massive increase in parallel fibres that are being deployed in fibre ducts across the world. To me, that means that while we are going to all focus on power consumption as a key measure for innovation, we should really use capacity per duct as an additional figure of merit.

In terms of technological progress, I would like to call out the area of quantum photonics.

We all saw the results from an impressive research push in this area, with complex photonic integration and interesting use cases being explored. The amount of work done in this area makes it difficult for me to keep up to speed. I continue to be fascinated and excited about the work done.

An entirely different category of innovation was shown in the post-deadline session, where Microsoft and the University of Southampton presented hollow-core fibre with a record 0.11 dB/km fibre loss. We have been talking about the great promise of anti-resonant hollow-core fibre for a while: it offers significantly reduced latency, and it reduces signal distortion by removing nonlinearity and offering low dispersion. All that has been shown before, but achieving a fibre loss that is considerably lower than that of all other fibre types is excellent news.

It confirms that hollow-core fibre could change the systems and the networks we build, and I will continue to keep close tabs on the progress in this area.

Overall, OFC 2024 was a great show, with my company launching new products and having a packed booth full of visitors, a large number of customer engagements, and meetings with most of our suppliers.

I left San Diego already looking forward to next year's OFC.

OFC 2023 show preview

- Sunday, March 5 marks the start of the Optical Fiber Communication (OFC) conference in San Diego, California

- The three General Chairs – Ramon Casellas, Chris Cole, and Ming-Jun Li – discuss the upcoming conference

OFC 2023 will be a show of multiple themes. That, at least, is the view of the team overseeing and coordinating this year’s conference and exhibition.

General Chair Ming-Jun Li of Corning, who is also the recipient of the 2023 John Tyndall Award (see profiles, bottom), begins by highlighting the 1,000 paper submissions, suggesting that OFC has returned to pre-pandemic levels.

Ramon Casellas, another General Chair, highlights this year’s emphasis on the social aspects of technology. “We are trying not to forget what we are doing and why we are doing it,” he says.

Casellas highlights the OFC’s Plenary Session speakers (see section, below), an invited talk by Professor Dimitra Simeonidou of the University of Bristol, entitled: Human-Centric Networking and the Road to 6G, and a special event on sustainability.

This year’s OFC has received more submissions on quantum communications, totalling 66 papers.

In the past, papers on quantum communications were submitted across OFC’s tracks addressing networking, subsystems and systems, and devices. However, evaluating them was challenging given that only some reviewers are quantum experts, says Chris Cole, the third General Chair. Now, OFC has a subcommittee dedicated to quantum.

Another first is OFCnet, a production network that will run during the show.

Themes and topics

Machine learning is one notable topic this year. The subject is familiar at OFC, says Casellas, but people are discussing it more.

Casellas highlights one session at OFC 2021 that addressed machine learning for optics and optics for machine learning. “It showed the duality of how you can use photonic components to do machine learning and apply machine learning to optimise networking,” says Casellas.

This year there will be additional aspects of machine learning for networks, transmission, and operations, says Casellas.

Other subjects highlighted by the General Chairs include point-to-multipoint coherent transmission, non-terrestrial and satellite networks, and optical switching and how it benefits networking in the data centre.

Google, for example, is presenting a paper detailing its use of optical switching in its data centres, something the hyperscaler disclosed at the ACM Sigcomm conference in August 2022.

There is also more interest in fibre sensors used in communications networks.

“We see an increasing trend because now if you want smart networks, you need sensors everywhere,” says Li.

“That is another theme that goes across all the tracks, which is a non-traditional optical fibre communication area that we’ve been embracing,” adds Cole.

As examples, Cole cites lidar, radio over fibre, free-space communications, microwave fibre sensing, and optical processing.

OFC has had contributions in these areas, he says, but now these topics have dedicated subcommittee titles.

Plenary session

This year’s three Plenary Session speakers are:

- Patricia Obo-Nai, CEO of Vodafone Ghana, who will discuss Harnessing Digitalization for Effective Social Change,

- Jayshree V. Ullal, president and CEO of Arista Networks, addressing The Road to Petascale Cloud Networking,

- and Wendell P. Weeks, chairman and CEO of Corning, whose talk is entitled Capacity to Transform.

“We thought that having someone who could explain how technology improves society would be very positive,” says Casellas. “I’m proud to have someone who can talk on the benefits of digitisation from the point of view of society, in addition to more technical topics.”

Li highlights how OFC celebrated the 50th anniversary of low-loss fibre two years ago and that last year, OFC celebrated the year of glass, displaying information on panels.

Corning has played an important role in both technologies. “Having a speaker [Wendell Weeks] from a glass company talking about both will be interesting to the OFC audience,” says Li.

Cole highlights the third speaker, Jayshree Ullal, the CEO of Arista. The successful networking player is one of the companies competing in what he describes as a very tough field.

Rump session

This year’s Rump Session tackles silicon photonics, a session moderated by Daniel Kuchta of IBM TJ Watson Research Center and Michael Hochberg of Luminous Computing.

Cole says silicon photonics has received tremendous attention, and the Rump Session is asking some tough questions: “Is silicon photonics for real now? Is it just one of the guys in the toolbox? Or is it being sunsetted or supplemented?”

Cole expects a lively session that not only challenges conventional thinking but also features people representing exciting alternatives that are commercially successful alongside silicon photonics.

Show interests

The Chairs also highlight their interests and what they hope to learn from the show.

For Li, it is high-density fibre and cable trends.

Work on space division multiplexing (SDM) – multicore and multimode – fibre has been an OFC topic for over 15 years. One question Li has is whether systems will use SDM.

“It looks like multicore fibre is close, but we want to learn more from customers,” says Li.

Another interest is an alternative development of reduced coating diameter fibres that promise greater cable density. “I always think this is probably the short-term solution, but we’ll see what people think,” says Li.

AI drives interest in fibre density and latency issues in the data centre. Low latency is attracting interest in hollow-core fibre. Microsoft acquired Lumenisity, a UK hollow-core fibre specialist, late last year.

Li is keen to learn more about quantum communications. “We want to understand, from a fibre component point of view, what to do in this area.”

Until now, the industry’s focus has been on quantum key distribution (QKD), but Li wants to learn about other applications of quantum in telecoms.

The bandwidth challenge facing datacom is Cole’s interest.

As the Rump Session shows, there has been an explosion of technologies to address data challenges, particularly in the data centre. “So I’m looking forward to continuing to see all the great ideas and all the different directions,” says Cole.

Another show interest for Cole is start-ups in components, subsystems and systems, and networking.

At Optica’s Executive Forum, held on Monday, March 6, a session is dedicated to start-ups. Casellas is looking forward to the talks on optical network automation.

Much work has applied machine learning to optical transmission and amplifier optimisation. Casellas wants to see how reinforcement learning is applied to optical network controllers. Telemetry and its use for network monitoring are another of his interests.

“Maybe because I’m an academic and idealistic, but I like everything related to disaggregation and the opening of interfaces,” says Casellas, who also wants to learn more about quantum.

“I have a basic understanding of this, but maybe it is hard to get into something new,” says Casellas. Non-terrestrial and satellite networks are other topics of interest.

Cole concludes with a big-picture view of photonics.

“It’s a great time to be in optics,” he says. “We’re seeing an explosion of creativity in different areas to solve problems.”

Ramon Casellas works at the Centre Tecnològic de Telecomunicacions de Catalunya (CTTC) research institution in Barcelona, Spain. His research focuses on networks – particularly the control plane, operations and management – rather than optical systems and devices.

Ming-Jun Li is a Corporate Fellow at Corning where he has worked for 32 years.

Li is also this year’s winner of the John Tyndall Award, presented by Optica and the IEEE Photonics Society. The award is for Li’s ‘seminal contributions to advances in optical fibre technology.’

“It was a surprise to me and a great honour,” says Li. “The work is not only for myself but for many people working with me at Corning; I cannot achieve without working with meaningful colleagues.”

Chris Cole is a consultant whose background is in datacom optics. He will be representing the company, Coherent, at OFC.

Taking a unique angle to platform design

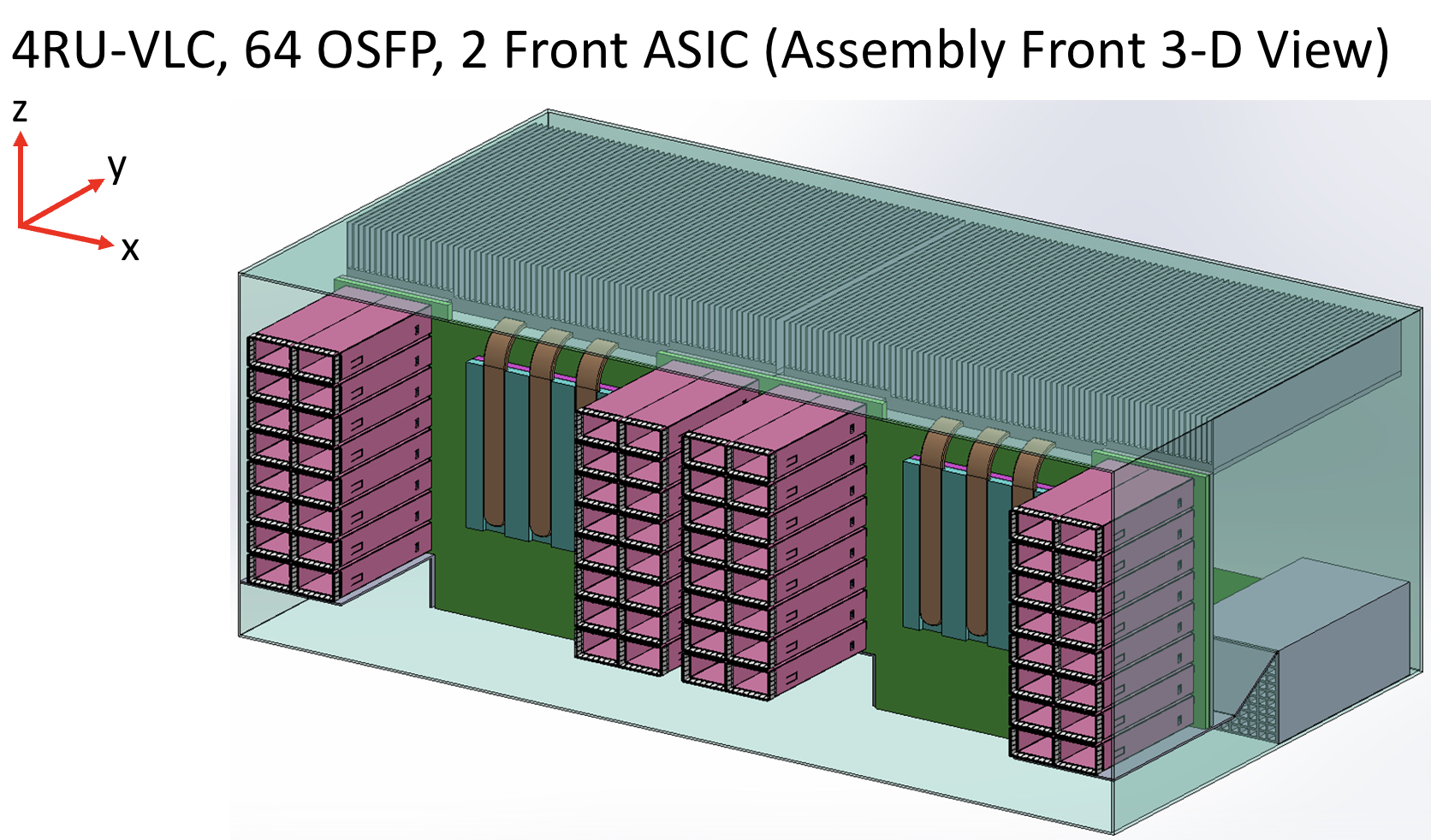

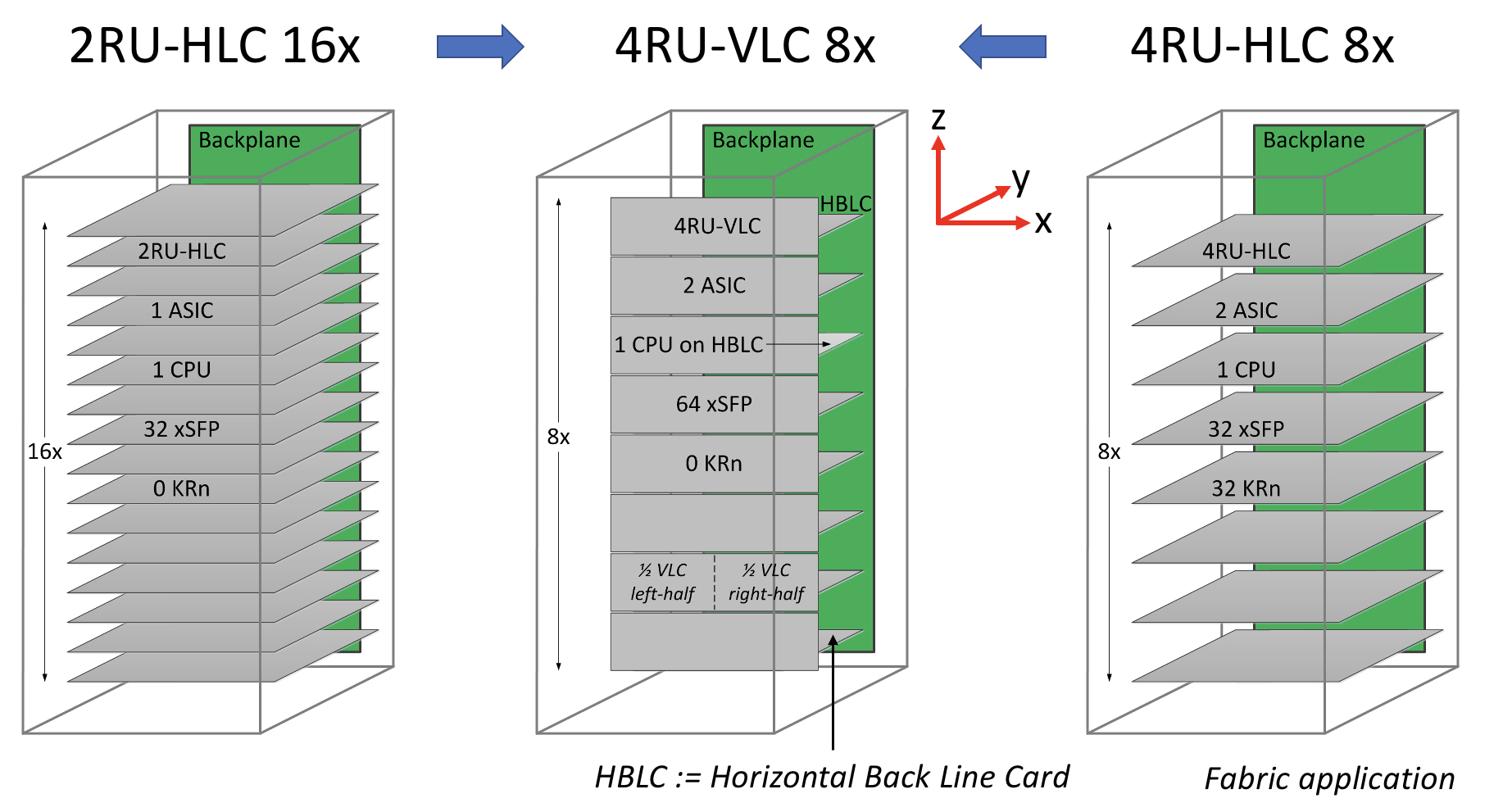

- A novel design based on a vertical line card shortens the trace length between an ASIC and pluggable modules.

- Reducing the trace length improves signal integrity while maintaining the merits of using pluggables.

- The vertical line card design will extend the use of pluggables with Ethernet switches for at least two more generations.

The travelling salesperson problem involves working out the shortest route on a round-trip to multiple cities. It’s a well-known complex optimisation problem.


Systems engineers face their own complex optimisation problem just sending an electrical signal between two points, connecting an Ethernet switch chip to a pluggable optical module, for example.

Sending the high-speed signal over the link with sufficient fidelity for its recovery requires considerable electronic engineering design skills. And with each generation of electrical signalling, link distances are getting shorter.

In a paper presented at the recent ECOC show, held in Basel, consultant Chris Cole, working with Yamaichi Electronics, outlined a novel design that shortens the distance between an Ethernet switch chip and the front-panel optics.

The solution promises headroom for two more generations of high-speed pluggables. “It extends the pluggable paradigm very comfortably through the decade,” says Cole.

Since ECOC, there are plans to standardise the vertical line card technology in one or more multi-source agreements (MSAs), with multiple suppliers participating.

“This will include OSFP pluggable modules as well as QSFP and QSFP-DD modules,” says Cole.

Shortening links

Rather than the platform using stacked horizontal line cards as is common today, Cole and Yamaichi Electronics propose changing the cards’ orientation to the vertical plane.

Vertical line cards also enable the front-panel optical modules to be stacked on top of each other rather than side-by-side. As a result, the pluggables are closer to the switch ASIC; the furthest the high-speed electrical signalling must travel is three inches (7.6cm). The most distant span between the chip and the pluggable with current designs is typically nine inches (22.8cm).

“The reason nine inches is significant is that the loss is high as we reach 200 gigabits-per-second-per-lane and higher,” says Cole.
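To illustrate why the shorter span matters, here is a minimal sketch that assumes trace loss in decibels grows linearly with trace length. Only the three-inch and nine-inch lengths come from the article; the loss-per-inch figure is a hypothetical placeholder, since the real value depends on the PCB material and the signalling frequency.

```python
# Rough comparison of PCB trace loss for the two maximum trace lengths
# quoted in the article. LOSS_DB_PER_INCH is a hypothetical placeholder,
# not a figure from Cole's paper.
LOSS_DB_PER_INCH = 1.5  # assumed, for illustration only


def trace_loss_db(length_inches: float,
                  loss_db_per_inch: float = LOSS_DB_PER_INCH) -> float:
    """Loss in dB, assuming it scales linearly with trace length."""
    return length_inches * loss_db_per_inch


horizontal = trace_loss_db(9.0)  # longest span in current designs
vertical = trace_loss_db(3.0)    # longest span with vertical line cards

print(f"Horizontal card: {horizontal:.1f} dB")
print(f"Vertical card:   {vertical:.1f} dB")
print(f"Reduction:       {horizontal - vertical:.1f} dB")
```

Under the linear assumption, whatever the actual loss per inch, the longest vertical-card trace carries one third of the loss of the longest horizontal-card trace.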

Current input-output proposals

The industry is pursuing several approaches to tackle the issues associated with high-speed electrical signalling and input-output (I/O) bandwidth density.

One is to use twinaxial cabling instead of electrical traces on a printed circuit board (PCB). Such ‘Twinax’ cable has a lower loss, and its use avoids developing costly advanced-material PCBs.

Other approaches involve bringing the optics closer to the Ethernet switch chip, either as near-packaged optics or with the optics and chip co-packaged. These approaches also promise higher bandwidth densities.

Cole’s talk focussed on a solution that continues using pluggable modules. Pluggable modules are a low-cost, mature technology that is easy to use and change.

However, besides the radio frequency (RF) challenges that arise from long electrical traces, the I/O density of pluggables is limited due to the size of the connector, while placing up to 36 pluggables on the 1 rack unit-high (1RU) front panel obstructs the airflow used for cooling.

Platform design

Ethernet switch chips double their capacity every two years. Their power consumption is also rising; Broadcom’s latest Tomahawk 5 consumes 500W.

The power supply a data centre can feed to each platform has an upper limit. It means fewer cards can be added to a platform if the power consumed per card continues to grow.

The average power dissipation per rack is 16kW, and the limit is around 32kW, says Cole. These figures apply to air cooling, not liquid cooling.

He cites some examples.

A rack based on Broadcom’s 12.8-terabit Tomahawk 3 switch chip – with either 32 1RU cards or 16 2RU cards with two chips per card – and the associated pluggable optics consumes over 30kW.

A 25.6-terabit Tomahawk 4-based chassis supports 16 line cards and consumes 28kW. However, using the recently announced Tomahawk 5, only eight cards can be supported, consuming 27kW.

“The takeaway is that rack densities are limited by power dissipation rather than the line card’s rack unit [measure],” says Cole.
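Cole's chassis examples can be turned into a quick calculation. The sketch below uses only the card counts and chassis power figures quoted above, plus the roughly 32kW air-cooling limit; the per-card value is simply the chassis total divided by the number of cards, so it lumps in optics and everything else in the chassis.

```python
# Chassis figures quoted by Cole for air-cooled racks.
RACK_LIMIT_KW = 32.0

chassis = {
    "Tomahawk 4": {"cards": 16, "power_kw": 28.0},
    "Tomahawk 5": {"cards": 8, "power_kw": 27.0},
}


def power_per_card_kw(cards: int, power_kw: float) -> float:
    """Average chassis power per line card (optics and overheads included)."""
    return power_kw / cards


for name, figures in chassis.items():
    per_card = power_per_card_kw(figures["cards"], figures["power_kw"])
    headroom = RACK_LIMIT_KW - figures["power_kw"]
    print(f"{name}: {per_card:.3f} kW per card, "
          f"{headroom:.1f} kW below the rack limit")
```

Halving the card count while total power stays almost flat means the average power per card roughly doubles, which is the sense in which power rather than rack units sets the density.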

Vertical line card

The vertical line card design is 4RU high. Each card supports two ASICs on one side and 64 cages for the OSFP modules on the other.

A 32RU chassis can thus support eight vertical cards or 16 ASICs, equivalent to the chassis with 16 horizontal 2RU line cards.
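The chassis arithmetic can be checked with a short sketch. The card height, ASICs per card and cage count come from the article; the 800Gbps-per-OSFP figure (eight lanes at 100Gbps) is an assumption added here to show how the cages might map onto ASIC capacity.

```python
# Figures from the article: a 32RU chassis of 4RU vertical cards, each
# carrying two ASICs and 64 OSFP cages.
CHASSIS_HEIGHT_RU = 32
CARD_HEIGHT_RU = 4
ASICS_PER_CARD = 2
OSFP_CAGES_PER_CARD = 64

# Assumption (not stated for this design): each OSFP carries eight
# lanes at 100Gbps, i.e. 800Gbps per module.
ASSUMED_OSFP_GBPS = 8 * 100

cards = CHASSIS_HEIGHT_RU // CARD_HEIGHT_RU
asics = cards * ASICS_PER_CARD
cages = cards * OSFP_CAGES_PER_CARD
cages_per_asic = OSFP_CAGES_PER_CARD // ASICS_PER_CARD
gbps_per_asic = cages_per_asic * ASSUMED_OSFP_GBPS

print(f"Vertical cards per chassis: {cards}")
print(f"ASICs per chassis:          {asics}")
print(f"OSFP cages per chassis:     {cages}")
print(f"Front-panel Gbps per ASIC:  {gbps_per_asic}")
```

Under the assumed module rate, the 32 cages per ASIC would carry 25.6 terabits, which lines up with the Tomahawk 4 capacity quoted earlier.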

The airflow for the ASICs is improved, enabling more moderate air fans to be used compared to 1RU or 2RU horizontal card chassis designs. There is also airflow across the modules.

“The key change in the architecture is the change from a horizontal card to a vertical card while maintaining the pluggable orientation,” says Cole.

As stated, the maximum distance between an ASIC and the pluggables is reduced to three inches, but Cole says the modules can be arranged around the ASIC to minimise the length to 2.5 inches.

Alternatively, if the height of the vertical card is an issue, a 3RU card can be used instead, which results in a maximum trace length of 3.5 inches. “[In this case], we don’t have dedicated air intakes for the CPU,” notes Cole.

Cole also mentioned the option of a 3RU vertical card that houses one ASIC and 64 OSFP modules. This would be suitable for the Tomahawk 5. However, here the maximum trace length is five inches.

Vertical connectors

Yamaichi Electronics has developed the vertical connectors needed to enable the design.

Cole points out that, unlike a horizontal connector, a vertical one uses equal-length contacts. In a horizontal connector, one set of contacts has to turn and is therefore longer, which degrades performance.

Cole showed the simulated insertion loss of an OSFP vertical connector for frequencies up to 70GHz.

“The loss up to 70GHz demonstrates the vertical connector advantage because it is low and flat for all the leads,” says Cole. “So this [design] is 200-gigabit ready.”

He also showed a vertical connector for the OSFP-XD with a similar insertion loss performance.

Also shown was a comparison with results published for Twinax cables. Cole says this indicates that the loss of a three-inch PCB trace is less than the loss of the cable.

“We’ve dramatically reduced the RF maximum length, so we had solved the RF roadblock problem, and we maintain the cost-benefit of horizontal line cards,” says Cole.

The I/O densities may be unchanged, but the design preserves the mature technology’s benefits. “And then we get a dramatic improvement in cooling because there are no obstructions to airflow,” says Cole.

Vladimir Kozlov, CEO of the market research firm, LightCounting, wondered in a research note whether the vertical design is a distraction for the industry gearing up for co-packaged optics.

“Possibly, but all approaches for reducing power consumption on next-generation switches deserve to be tested now,” said Kozlov, adding that adopting co-packaged optics for Ethernet switches will take the rest of the decade.

“There is still time to look at the problem from all angles, literally,” said Kozlov.

ECOC '22 Reflections - Part 2

Gazettabyte is asking industry and academic figures for their thoughts after attending ECOC 2022, held in Basel, Switzerland. In particular, what developments and trends they noted, what they learned, and what, if anything, surprised them.

In Part 2, Broadcom’s Rajiv Pancholy, optical communications advisor, Chris Cole, LightCounting’s Vladimir Kozlov, Ciena’s Helen Xenos, and Synopsys’ Twan Korthorst share their thoughts.

Rajiv Pancholy, Director of Hyperscale Strategy and Products Optical Systems Division, Broadcom

The buzz at the show reminded me of 2017 when we were in Gothenburg pre-pandemic, and that felt nice.

Back then, COBO (Consortium for On-Board Optics) was in full swing, the CWDM8 multi-source agreement (MSA) was just announced, and 400-gigabit optical module developments were the priority.

This year, I was pleased to see the show focused on lower power and see co-packaged optics filter into all things ECOC.

Broadcom has been working on integrating a trans-impedance amplifier (TIA) into our CMOS digital signal processor (DSP), and the 400-gigabit module demonstration on the show floor confirmed the power savings integration can offer.

Integration impacts power and cost but it does not stop there. It’s also about what comes after 2nm [CMOS], what happens when you run out of beach-front area, and what happens when the maximum power in your rack is not enough to get all of its bandwidth out.

It is the idea of fewer things and more efficient things that draws everyone to co-packaged optics.

The OIF booth showcased some of the excitement behind this technology that is no longer a proof-of-concept.

Moving away from networking and quoting some of the ideas presented this year at the AI Hardware Summit by Alexis Bjorlin, our industry needs to understand how we will use AI, how we will develop AI, and how we will enable AI.

These were in the deeper levels of discussions at ECOC, where we as an industry need to continue to innovate, disagree, and collaborate.

Chris Cole, Optical Communications Advisor

I don’t have many substantive comments because my ECOC was filled with presentations and meetings, and I missed most of the technical talks and market focus presentations.

It was great to see a full ECOC conference. This is a good sign for OFC.

Here is an observation of what I didn’t see. There were no great new silicon photonics products, despite continued talk about how great it is and the many impressive research and development results.

Silicon photonics remains a technology of the future. Meanwhile, other material systems continue to dominate in their use in products.

Vladimir Kozlov, CEO of LightCounting

I am surprised by the progress made by thin-film lithium niobate technology. There are five suppliers of these devices now: AFR, Fujitsu, Hyperlight, Liobate, and Ori-chip.

Many vendors also showed transceivers with thin-film lithium niobate modulators inside.

Helen Xenos, senior director of portfolio marketing at Ciena

One key area to watch right now is what technology will win for the next Ethernet rates inside the data centre: intensity-modulation direct detection (IMDD) or coherent.

There is a lot of debate and discussion happening, and several sessions were devoted to this topic during the ECOC Market Focus.

Twan Korthorst, Group Director Photonic Solutions at Synopsys

My main observations are from the exhibition floor; I didn’t attend the technical conference.

ECOC was well attended, better than previous shows in Dublin and Valencia and, of course, much better than Bordeaux (the first in-person ECOC in the Covid era).

I spent three days talking with partners, customers and potential customers, and I am pleased about that.

I didn’t see the same vibe around co-packaged optics as at OFC; not a lot of new things there.

There is a feeling of uncertainty about what will happen with the semiconductor/datacom industry. Will we get a downturn? How will it look? In other words, I noticed some concerns.

On the other hand, foundries are excited about the prospects for photonic ICs and continue to invest and set ambitious goals.

The future of optical I/O is more parallel links

Chris Cole has a lofty vantage point regarding how optical interfaces will likely evolve.

As well as being an adviser to the firm II-VI, Cole is Chair of the Continuous Wave-Wavelength Division Multiplexing (CW-WDM) multi-source agreement (MSA).

The CW-WDM MSA recently published its first specification document defining the wavelength grids for emerging applications that require eight, 16 or even 32 optical channels.

And if that wasn’t enough, Cole is also the Co-Chair of the OSFP MSA, which will standardise the OSFP-XD (XD standing for extra dense) 1.6-terabit pluggable form factor that will initially use 16, 100 gigabits-per-second (Gbps) electrical lanes. And when 200Gbps electrical input-output (I/O) technology is developed, OSFP-XD will become a 3.2-terabit module.

Directly interfacing with 100Gbps ASIC serialiser/deserialiser (serdes) lanes means the 1.6-terabit module can support 51.2-terabit single rack unit (1RU) Ethernet switches without needing the 200Gbps ASIC serdes required by eight-lane modules like the OSFP.
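The lane arithmetic behind these figures is easy to check. The sketch below uses the lane counts and lane rates given above; the helper names are illustrative, not part of any MSA document.

```python
def module_capacity_gbps(lanes: int, lane_rate_gbps: int) -> int:
    """Module capacity as electrical lanes times the per-lane rate."""
    return lanes * lane_rate_gbps


def modules_needed(switch_gbps: int, module_gbps: int) -> int:
    """Modules required to carry the full switch capacity (rounded up)."""
    return -(-switch_gbps // module_gbps)  # ceiling division


SWITCH_GBPS = 51_200  # 51.2-terabit switch

osfp_xd_100g = module_capacity_gbps(16, 100)  # OSFP-XD with 100Gbps lanes
osfp_xd_200g = module_capacity_gbps(16, 200)  # OSFP-XD with 200Gbps lanes
osfp_100g = module_capacity_gbps(8, 100)      # eight-lane OSFP, 100Gbps lanes
osfp_200g = module_capacity_gbps(8, 200)      # eight-lane OSFP, 200Gbps lanes

for label, capacity in [
    ("OSFP-XD, 16 x 100G", osfp_xd_100g),
    ("OSFP-XD, 16 x 200G", osfp_xd_200g),
    ("OSFP, 8 x 100G", osfp_100g),
    ("OSFP, 8 x 200G", osfp_200g),
]:
    count = modules_needed(SWITCH_GBPS, capacity)
    print(f"{label}: {capacity} Gbps per module, {count} modules for 51.2T")
```

An eight-lane module only matches the 16-lane OSFP-XD's module count at 51.2 terabits once its lanes run at 200Gbps, which is the dependency on 200Gbps serdes that the OSFP-XD avoids.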

“You might argue that it [the OSFP-XD] is just postponing what the CW-WDM MSA is doing,” says Cole. “But I’d argue the opposite: if you fundamentally want to solve problems, you have to go parallel.”

CW-WDM specification

The CW-WDM MSA is tasked with specifying laser sources and the wavelength grids for use by higher wavelength count optical interfaces.

The lasers will operate in a subset of the O-band (1280nm-1320nm) building on work already done by the ITU-T and IEEE standards bodies for datacom optics.

In just over a year since its launch, the MSA has published Revision 1.0 of its technical specification document that defines the grids of eight, 16 and 32 channels.

The importance of specifying the wavelengths is that lasers are the longest lead items, says Cole: “You have to qualify them, and it is expensive to develop more colors.”

In the last year, the MSA has confirmed there is indeed industry consensus regarding the wavelength grids chosen. The MSA has 11 promoter members that helped write the specification document and 35 observer status members.

“The aim was to get as many people on board as possible to make sure we are not doing something stupid,” says Cole.

As well as the wavelengths, the document addresses such issues as total power and wavelength accuracy.

Another issue raised is four-wavelength mixing, more commonly known as four-wave mixing. As the channel count increases, the wavelengths are spaced closer together, and closely spaced wavelengths can interact in the fibre to generate new optical frequencies that interfere with the signal channels, degrading the link’s optical performance. It is a well-known effect in dense WDM transport systems where wavelengths are closely spaced but is less commonly encountered in datacom.

“The first standard is not a link budget specification, which would have included how much penalty you need to allocate, but we did flag the issue,” says Cole. “If we ever publish a link specification, it will include four-wavelength mixing penalty; it is one of those things that must be done correctly.”
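The mixing effect Cole flags, generally known as four-wave mixing, generates new optical frequencies of the form f_i + f_j - f_k from any three channel frequencies. The sketch below is an illustration only, not taken from the MSA specification: it assumes an ideal, equally spaced grid and counts how many of those products land exactly on an existing channel, without modelling how strong they are.

```python
from itertools import product


def fwm_products_on_grid(num_channels: int) -> int:
    """Count mixing products f_i + f_j - f_k that fall on a channel.

    Channels sit at integer positions 0..N-1 in units of the grid
    spacing (an idealised, equally spaced grid). Products with k equal
    to i or j are skipped because they coincide with an input channel,
    and each unordered (i, j) pair is counted once.
    """
    hits = 0
    for i, j, k in product(range(num_channels), repeat=3):
        if i > j:
            continue  # (i, j) and (j, i) give the same product
        if k == i or k == j:
            continue
        if 0 <= i + j - k < num_channels:
            hits += 1
    return hits


for n in (8, 16, 32):
    print(f"{n} channels: {fwm_products_on_grid(n)} in-band mixing products")
```

The count grows much faster than the channel count itself, which gives a feel for why the effect becomes a concern as grids move from eight to 32 wavelengths.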

Innovation

The MSA’s specification work is incomplete, and this is deliberate, says Cole.

“We are at the beginning of the technology, there are a lot of great ideas, but we are going to resist the temptation to write a complete standard,” he says.

Instead, the MSA will wait to see how the industry develops the technology and how the specification is used. Once there is greater clarity, more specification work will follow.

“It is a tricky balance,” says Cole. “If you don’t do enough, what is the value of it? But if you do too much, you inhibit innovation.”

“The key aspect of the MSA is to help drive compliance in an emerging market,” says Matt Sysak of Ayar Labs and editor of the MSA’s technical specification. “This is not yet standardised, so it is important to have a standard for any new technology, even if it is a loose one.”

The MSA wants to see what people build. “See which one of the grids gain traction,” says Sysak.

Ayar Labs’ SuperNova remote light source for co-packaged optics is one of the first products that is compliant with the CW-WDM MSA.

Sysak notes that co-packaged optics is a hot topic at recent conferences, but what is evident is that the discussion is still more of a debate than a consensus.

“The fact that the debate doesn’t seem to coagulate around particular specification definitions and industry standards is indicative of the fact that the entire industry is struggling here,” says Sysak.

This is why the CW-WDM MSA is important: it helps promote the economies of scale that will advance co-packaged optics.

Applications

Cole notes that, if anything, the industry has become more entrenched in the last year.

The Ethernet community is fixed on four-wavelength module designs. To be able to support such designs as module speeds increase, higher-order modulation schemes and more complex digital signal processors (DSPs) are needed.

“The problem right now is that all the money is going into signal processing: the analogue-to-digital converters and more powerful DSPs,” says Cole.

His belief is that parallelism is the right way to go, both in terms of more wavelengths and more fibres (physical channels).

“This won’t come from Ethernet but emerging applications like machine learning that are not tied to backward compatibility issues,” says Cole. “It is emerging applications that will drive innovation here.”

Cole adds that there is hyperscaler interest in optical channel parallelism. “There is absolutely a groundswell interest here,” says Cole. “This is not their main business right now, but they are looking at their long-term strategy.”

The likelihood is that laser companies will step in to develop the laser sources and then other companies will develop the communications gear.

“It will be driven by requirements of emerging applications,” says Cole. “This is where you will see the first deployments.”

CW-WDM MSA charts a parallel path for optics

Artificial intelligence (AI) and machine learning have become an integral part of the businesses of the webscale players.

The mega data centre players apply machine learning to the treasure trove of data collected from users to improve services and target advertising.


They can also use their data centres to offer cloud-based AI services.

Training neural networks with data sets is so intensive that it is driving new processor and networking requirements.

It is also impacting optics. Optical interfaces will need to become faster to cope with the amount of data, and that means interfaces with more parallel channels.

Anticipating these trends, a group of companies has formed the Continuous-Wave Wavelength Division Multiplexing (CW-WDM) multi-source agreement (MSA).

The CW-WDM MSA will specify laser sources and the wavelength grids they use. The lasers will operate in the O-band (1260nm-1360nm) used for datacom optics.

The MSA is defining eight, 16 and 32 channels and will build on work done by the ITU-T and the IEEE.

This is good news for the laser manufacturers, says Chris Cole, Chair of CW-WDM MSA (pictured), given they have already shipped millions of lasers for datacom.

“In general, lasers are typically the hardest thing,” he says.

Wavelength count

The majority of datacom pluggable modules deployed today use either one or four optical channels. “When I started in optics 20 years ago it was all about single wavelengths,” says Cole.

Four channels were first used successfully for 40-gigabit interfaces. “That is when we introduced coarse wavelength-division multiplexing (CWDM),” says Cole.

Four wavelengths are the standard approach for 100, 200 and 400-gigabit optical modules. Spreading data across four channels simplifies the design of the electrical and optical interfaces.

“But we are ready to move on because the ability to increase parallel channels - be it parallel fibres or wavelengths - is much greater than the ability to push speed,” says Cole. “If all we do is rely on a four-wavelength paradigm and we keep pushing speed, we will run into a brick wall.”

Integration

Adopting more parallel channels will have two consequences on the optics, says Cole.

One is that photonic integration will become the only practical way to build multi-channel designs. Eight-channel designs are possible using discrete components, but that approach won’t be cost-competitive for designs of 16 or more channels.

“It has to be photonic integration because as you get to eight and later, 16 and 32 wavelengths, it is not supportable in a small size with conventional approaches,” says Cole.

The MSA favours silicon photonics integration but indium phosphide or polymer integration platforms could be used.

The MSA will also cause wavelengths to be packed far more closely than the 20nm used for CWDM. Techniques now exist that enable tighter wavelength spacings without needing dedicated cooling.

One approach is separating the laser from the silicon chip - a switch chip or processor - that generates a lot of heat. Here, light from the source is fed to the optics over a fibre such that temperature control is more straightforward because the laser and chip are separated.

Cole also highlights the athermal silicon photonics of Juniper Networks that controls wavelength drift on the grid without requiring a thermo-electric cooler. Juniper gained the technology with its Aurrion acquisition in 2016.

Specification work

“Using the O-band has a lot of advantages,” says Cole. “That is where all the datacom optics are.”

The optical loss in the O-band may be double that of the C-band but this is not an issue for datacom’s short spans.
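A rough calculation shows why the higher loss matters little over short spans. In the sketch below, the C-band attenuation is an assumed typical figure for single-mode fibre rather than a number from the article, the O-band value is set to double it as stated above, and the span lengths are illustrative datacom reaches.

```python
# Assumed attenuation figures, for illustration only.
C_BAND_DB_PER_KM = 0.2                   # assumed typical value
O_BAND_DB_PER_KM = 2 * C_BAND_DB_PER_KM  # "double", as the article puts it


def fibre_loss_db(length_km: float, db_per_km: float) -> float:
    """Fibre attenuation in dB for a span of the given length."""
    return length_km * db_per_km


for length_km in (0.5, 2.0, 10.0):  # illustrative datacom span lengths
    o_band = fibre_loss_db(length_km, O_BAND_DB_PER_KM)
    c_band = fibre_loss_db(length_km, C_BAND_DB_PER_KM)
    print(f"{length_km:>4} km: O-band {o_band:.2f} dB, "
          f"C-band {c_band:.2f} dB, extra {o_band - c_band:.2f} dB")
```

With these assumed values, the extra loss stays at a fraction of a decibel for spans of a couple of kilometres.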

The MSA is to define a technology roadmap rather than a specific product, says Cole. First-generation products will use eight wavelengths followed by 16- and then 32-wavelength designs. Sixty-four and even 128 channel counts will be specified once the technology is established.

“Initially we did [specify 64 and 128 channels] but we took it out,” says Cole. “We’ll know a lot more if we are successful over three generations; we’ll figure out what we need to do when we get to that point.”

The MSA is proposing two bands, one 18nm wide (1291nm-1309nm) and the other 36nm wide (1282nm-1318nm). Eight, 16 and 32 wavelengths are assigned across both bands.

“It’s smack in the middle of the CWDM4 grid which is the largest shipping laser grid ever, and it is smack on top of the LWDM4 grid [used by -LR4 modules] which is the next highest grid to ship in volume,” says Cole.
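To get a feel for how tightly the proposed grids pack wavelengths compared with the 20nm spacing of CWDM, here is a minimal sketch. It assumes the channels share each band evenly, which is a simplification: the article does not give the actual channel positions, and the MSA document should be consulted for the real grids.

```python
CWDM_SPACING_NM = 20.0  # CWDM spacing cited in the article

# The two bands proposed by the MSA, as (low, high) edges in nm.
bands_nm = {
    "18nm band": (1291.0, 1309.0),
    "36nm band": (1282.0, 1318.0),
}


def average_spacing_nm(band: tuple[float, float], channels: int) -> float:
    """Average band width per channel, assuming channels share it evenly."""
    low, high = band
    return (high - low) / channels


for name, band in bands_nm.items():
    for channels in (8, 16, 32):
        spacing = average_spacing_nm(band, channels)
        ratio = CWDM_SPACING_NM / spacing
        print(f"{name}, {channels} channels: about {spacing:.3f} nm each "
              f"({ratio:.0f}x tighter than CWDM)")
```

Even the loosest case in this sketch, eight channels across the 36nm band, comes out several times tighter than CWDM.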

The MSA will also specify continuous-wave laser parameters such as the output power, spectral width, variation in power between the wavelengths, and allowable wavelength shift.

Members

Cole started work on the CW-WDM MSA in collaboration with Ayar Labs while he was still at II-VI. Now at Luminous Computing, Cole, along with MSA editor Matt Sysak of Ayar Labs, and associate editor Dave Lewis of Lumentum, are preparing the first MSA draft and have solicited comments from members as to what to include in the specifications.

The MSA has 11 promoter members: Arista, Ayar Labs, CST Global, imec, Intel, Lumentum, Luminous Computing, MACOM, Quintessent, Sumitomo Electric, and II-VI.

The MSA has created a new observer member status to get input from companies that otherwise would be put off joining an MSA due to the associated legal requirements.

“So we have an observer category that if someone is serious and they want to see a subset of the material the MSA is working on and provide feedback, we welcome that,” says Cole.

The observer members are AMF, Axalume, Broadcom, Coherent Solutions, Furukawa Electric, GlobalFoundries, Keysight Technologies, NeoPhotonics, NVIDIA, Samtec, Scintil Photonics, and Tektronix.

“This MSA is meant to be inclusive, and it is meant to foster innovation and foster as broad an industry contribution as possible,” concludes Cole.

Further information

The CW-WDM MSA has several documents and technical papers on its website. The first document is the CW-WDM MSA grid proposal while the rest are technical papers addressing developments and applications driving the need for high-channel-count optical interfaces.