A Terabit network processor by 2015?

Given that 100 Gigabit merchant silicon network processors will only appear this year, it may sound premature to discuss Terabit devices. But Alcatel-Lucent's latest core router family uses the 400 Gigabit FP3 packet-processing chipset, and one Terabit is the next obvious step.

Source: Gazettabyte


Core routers achieved Terabit scale a while back. Alcatel-Lucent's recently announced IP core router family includes the high-end 32 Terabit 7950 XRS-40, expected in the first half of 2013. The platform has 40 slots and will support up to 160 100-Gigabit Ethernet interfaces.

Its FP3 400 Gigabit network processor chipset, announced in 2011, is already used in Alcatel-Lucent's edge routers, but the 7950 is the company's first router platform family to fully exploit the chipset's capability.

The 7950 family comes with a selection of 10, 40 and 100 Gigabit Ethernet interfaces. Alcatel-Lucent has designed the router hardware so that the card-level control functions are separate from the Ethernet interfaces and the FP3 chipset, which both sit on the line card. The aim of the redesign is to preserve the service provider's investment: the carrier modules can be upgraded independently of the media line cards, which make up the bulk of the line card investment.

The 7950 XRS-20 platform, in trials now, has 20 slots which take the interface modules - dubbed XMAs (media adapters) - that house the various Ethernet interface options and the FP3 chipset. What Alcatel-Lucent calls the card-level control complex is the carrier module (XCM), of which there are up to 10 in the system. The XCM, which includes control processing, interfaces to the router's system fabric and holds up to two XMAs.

There are two XCM types used with the 7950 family router members. The 800 Gigabit-per-second (Gbps) XCM supports a pair of 400Gbps XMAs or 200Gbps XMAs, while the 400Gbps XCM supports a single 400Gbps XMA or a pair of 200Gbps XMAs.
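To make the pairing rules concrete, here is a minimal Python sketch that encodes only the XCM/XMA combinations named above. The table, the function names and the choice to reject any unlisted mix (for example one 400Gbps plus one 200Gbps XMA, which the text does not mention) are illustrative assumptions, not Alcatel-Lucent configuration logic.

```python
# Hypothetical model of the XCM/XMA pairing rules quoted in the text.
# Only the combinations the text names are listed; anything else is treated as unsupported.
SUPPORTED_XMA_SETS = {
    800: {(400, 400), (200, 200)},  # 800Gbps XCM: a pair of 400Gbps or 200Gbps XMAs
    400: {(400,), (200, 200)},      # 400Gbps XCM: one 400Gbps XMA or a pair of 200Gbps XMAs
}


def is_supported(xcm_gbps, xma_gbps):
    """True if this list of XMA rates matches a combination listed for the XCM type."""
    combo = tuple(sorted(xma_gbps, reverse=True))
    return combo in SUPPORTED_XMA_SETS.get(xcm_gbps, set())


def carried_gbps(xcm_gbps, xma_gbps):
    """Aggregate XMA capacity behind one XCM, rejecting unsupported combinations."""
    if not is_supported(xcm_gbps, xma_gbps):
        raise ValueError(f"{xma_gbps} is not a listed option for a {xcm_gbps}Gbps XCM")
    return sum(xma_gbps)


print(carried_gbps(800, [400, 400]))  # 800
print(is_supported(400, [400, 400]))  # False
```

Keying the table by XCM rate keeps the rules as data, so a future carrier module type would be one more dictionary entry.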

The slots that host the XCMs can scale to 2 Terabits, suggesting that the platforms are already designed with the next packet processor architecture in mind.

FP3 chipset

The FP3 chipset, like the previous generation 100Gbps FP2, comprises three devices: the P-chip network processor, a Q-chip traffic manager and the T-chip that interfaces to the router fabric.

The P-chip inspects packets and performs the lookups that determine where the packets should be forwarded. The P-chip determines a packet's class and the quality of service it requires, and tells the Q-chip traffic manager in which queue the packet is to be placed. The Q-chip handles the packet flows and decides how packets should be dealt with, especially when congestion occurs.
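The division of labour between the two chips can be sketched in a few lines of Python. This is a toy model under stated assumptions: the forwarding table, the DSCP-to-queue map and the tail-drop policy are all hypothetical stand-ins, since the text does not describe the FP3's actual lookup structures or congestion algorithms.

```python
import ipaddress
from collections import deque

# Hypothetical forwarding table (prefix -> egress port) and class map (DSCP -> queue).
FIB = {
    ipaddress.ip_network("10.0.0.0/8"): "port-1",
    ipaddress.ip_network("10.1.0.0/16"): "port-2",
}
DSCP_TO_QUEUE = {46: "voice", 0: "best-effort"}


def p_chip(dst, dscp):
    """P-chip role: longest-prefix lookup for the egress port, then pick a queue by class."""
    addr = ipaddress.ip_address(dst)
    matches = [net for net in FIB if addr in net]
    if not matches:
        return None, None  # no route
    best = max(matches, key=lambda net: net.prefixlen)
    return FIB[best], DSCP_TO_QUEUE.get(dscp, "best-effort")


class QChip:
    """Q-chip role: per-queue buffering with simple tail drop once a queue is full."""

    def __init__(self, depth):
        self.depth = depth
        self.queues = {}

    def enqueue(self, queue, packet):
        q = self.queues.setdefault(queue, deque())
        if len(q) >= self.depth:
            return False  # congestion: drop the arriving packet
        q.append(packet)
        return True


port, queue = p_chip("10.1.2.3", dscp=46)
print(port, queue)  # port-2 voice
```

The P-chip only decides *where* and *which class*; everything about buffering and dropping lives in the Q-chip object, mirroring the split the text describes.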

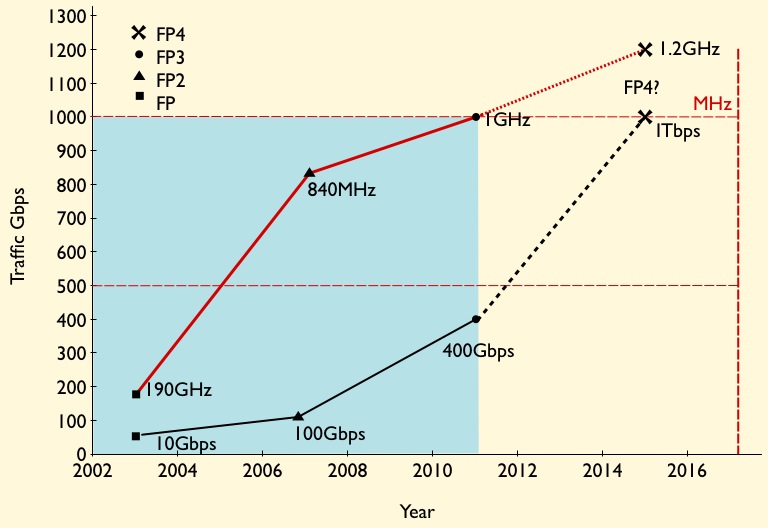

The basic metrics of the 100Gbps FP2 P-chip are a clock speed of 840MHz and 112 micro-coded programmable cores, arranged as 16 rows by 7 columns. To scale to 400Gbps, the FP3 P-chip is clocked at 1GHz (x1.2) and has 288 cores arranged as a 32x9 matrix (x2.6). The cores in the FP3 have also been re-architected so that two instructions can be executed per clock cycle. However, this yields a 30-35% performance improvement rather than 2x, since data dependencies mean it is not always possible to execute instructions in parallel. Collectively, the FP3 enhancements provide the 4x improvement needed to achieve 400Gbps packet processing.
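The 4x figure follows from multiplying the three gains quoted above. A minimal Python check, assuming (as the text implicitly does) that clock speed, core count and dual-issue gains compound multiplicatively:

```python
# Figures quoted above for the FP2 and FP3 P-chips.
FP2_CLOCK_MHZ, FP2_CORES = 840, 16 * 7    # 112 cores
FP3_CLOCK_MHZ, FP3_CORES = 1000, 32 * 9   # 288 cores


def fp3_speedup(dual_issue_gain):
    """Clock gain x core-count gain x per-core gain from dual instruction issue."""
    clock_gain = FP3_CLOCK_MHZ / FP2_CLOCK_MHZ  # ~1.19
    core_gain = FP3_CORES / FP2_CORES          # ~2.57
    return clock_gain * core_gain * dual_issue_gain


for gain in (1.30, 1.35):  # the quoted 30-35% dual-issue improvement
    print(f"{fp3_speedup(gain):.2f}x")
# 3.98x
# 4.13x
```

Real packet-processing throughput also depends on memory and I/O, so this is a first-order sanity check rather than a performance model.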

The FP3's traffic manager Q-chip retains the FP2's four RISC cores, but the instruction set has been enhanced and the cores are now clocked at 900MHz.

Terabit packet processing

Alcatel-Lucent has kept the same line card configuration of two P-chips for each Q-chip. The second P-chip is viewed as an inexpensive way to add spare processing in case operators need to support more complex service mixes in future. However, Alcatel-Lucent says that on FP2-based line cards the second P-chip's capability has rarely been used.

Having the second P-chip certainly boosts the overall packet processing on the line card but at some point Alcatel-Lucent will develop the FP4 and the next obvious speed hike is 1 Terabit.

Moving to a 28nm or an even more advanced CMOS process will enable the P-chip's clock speed to be increased, but probably not by much. Even with a 1.2GHz clock, the number of cores would have to more than double again to achieve the overall 2.5x speed-up to a 1 Terabit FP4, assuming Alcatel-Lucent doesn't also boost processing performance elsewhere.
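The "more than doubling" can be checked with a short Python sketch. The 1.2GHz clock and 2.5x target come from the text; the `other_gain` parameter is a hypothetical knob for any per-core improvement (such as the FP3's dual-issue change), and the model again assumes the gains multiply.

```python
import math

FP3_CORES = 288
FP3_CLOCK_MHZ = 1000
FP4_CLOCK_MHZ = 1200      # the 1.2GHz clock considered in the text
TARGET_SPEEDUP = 2.5      # 400Gbps -> 1Tbps


def fp4_cores_needed(other_gain=1.0):
    """Cores needed if, after the clock increase and any other per-core gain,
    the rest of the 2.5x speed-up must come from adding cores."""
    cores = FP3_CORES * TARGET_SPEEDUP * FP3_CLOCK_MHZ / (FP4_CLOCK_MHZ * other_gain)
    return math.ceil(cores)


print(fp4_cores_needed())  # 600, i.e. about 2.08x the FP3's 288 cores
```

Any gain that `other_gain` represents directly reduces the core count required, which is the trade-off the text leaves open.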

However, there are two obvious hurdles to be overcome to achieve a Terabit network processor: electrical interface speeds and memory.

Alcatel-Lucent settled on 10Gbps SERDES to carry traffic between the chips and for the T-chip's interfaces, believing the technology to be the most viable and sufficiently mature when the design was undertaken. A Terabit FP4 will likely adopt 25Gbps interfaces to provide the required 2.5x I/O boost.
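One way to see why 25Gbps SERDES matter is to count lanes. The Python sketch below assumes lanes per direction simply equal throughput divided by lane rate, ignoring line-coding and protocol overhead; it is an illustration, not the FP3's actual I/O budget.

```python
import math


def serdes_lanes(throughput_gbps, lane_gbps):
    """Minimum lanes per direction, ignoring line-coding and protocol overhead."""
    return math.ceil(throughput_gbps / lane_gbps)


for label, throughput, lane in [("FP3 @ 10G", 400, 10),
                                ("1T @ 10G", 1000, 10),
                                ("1T @ 25G", 1000, 25)]:
    print(label, serdes_lanes(throughput, lane))
# FP3 @ 10G 40
# 1T @ 10G 100
# 1T @ 25G 40
```

Under this simple model, 25Gbps lanes let a Terabit device keep roughly the same lane count as the 400Gbps FP3, whereas staying at 10Gbps would multiply it by 2.5.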

Another even more significant challenge is the memory speed improvement needed for the look up tables and for packet buffering. Alcatel-Lucent worked with the leading memory vendors when designing the FP3 and will do the same for its next-generation design.

Alcatel-Lucent, not surprisingly, will not comment on devices it has yet to announce. But the company did say that none of the identified design challenges for the next chipset are insurmountable.


OFC/NFOEC 2012 industry reflections - Part 1

The recent OFC/NFOEC show, held in Los Angeles, had a strong vendor presence. Gazettabyte spoke with Infinera's Dave Welch, chief strategy officer and executive vice president, about his impressions of the show, capacity challenges facing the industry, and the importance of the company's photonic integrated circuit technology in light of recent competitor announcements.


"I need as much fibre capacity as I can get, but I also need reach"

Dave Welch, Infinera

Dave Welch values shows such as OFC/NFOEC: "I view the show's benefit as everyone getting together in one place and hearing the same chatter." This helps identify areas of consensus and subjects where there is less agreement.

And while there were no significant surprises at the show, it did highlight several shifts in how the network is evolving, he says.

"The first [shift] is the realisation that the layers are going to physically converge; the architectural layers may still exist but they are going to sit within a box as opposed to multiple boxes," says Welch.

The implementation of this started with the convergence of the Optical Transport Network (OTN) and dense wavelength division multiplexing (DWDM) layers, and the efficiencies that brings to the network.

That is a big deal, says Welch.

Optical designers have long been making transponders for optical transport. But in the integrated OTN-DWDM layer the element is no longer the transponder; it is the transceiver. "Even that subtlety means quite a bit," says Welch. "It means that my metrics are no longer 'gray optics in, long-haul optics out', it is 'switch-fabric to fibre'."

Infinera has its own OTN-DWDM platform convergence with the DTN-X platform, and the trend was reaffirmed at the show by the likes of Huawei and Ciena, says Welch: "Everyone is talking about that integration."

The second layer integration stage involves multi-protocol label switching (MPLS). Instead of transponder point-to-point technology, what is being considered is a common platform with an optical management layer, an OTN layer and, in future, an MPLS layer.

"The drive for that box is that you can't continue to scale the network in terms of bandwidth, power and cost by taking each layer as a silo and reducing it down," says Welch. "You have to gain benefits across silos for the scaling to keep up with bandwidth and economic demands."

Super-channels

Optical transport has always been about increasing the data rates carried over wavelengths. At 100 Gigabit-per-second (Gbps), however, companies now use one or two wavelengths - carriers - onto which data is encoded. As vendors look to the next generation of line-side optical transport beyond 100Gbps, the use of multiple carriers - super-channels - will continue, and this was another show trend.

Infinera's technology uses a 500Gbps super-channel based on dual polarisation, quadrature phase-shift keying (DP-QPSK). The company's transmit and receive photonic integrated circuit pair comprises 10 wavelengths (two 50Gbps carriers per 50GHz band).

Ciena and Alcatel-Lucent detailed their next-generation ASICs at OFC. These chips, due later this year, support higher-order modulation schemes such as 16-QAM (quadrature amplitude modulation), which can be carried over multiple wavelengths. Going from DP-QPSK to 16-QAM doubles a carrier's data rate from 100Gbps to 200Gbps, and using two 16-QAM carriers enables the two vendors to deliver 400Gbps.

"The concept of this all having to sit on one wavelength is going by the wayside," says Welch.

Capacity challenges

"Over the next five years there are some difficult trends we are going to have to deal with, where there aren't technical solutions," says Welch.

The industry is already talking about fibre capacities of 24 Terabits using coherent technology. Greater capacity is also starting to be traded against reach. "A lot of the higher QAM rate coherent doesn't go very far," says Welch. "16-QAM in true applications is probably a 500km technology."

This is new for the industry. In the past, a 10Gbps service could be scaled to an 800 Gigabit system using 80 DWDM wavelengths. The same applies to 100Gbps, which scales to 8 Terabits.

"I'm used to having high-capacity services and I'm used to having 80 of them, maybe 50 of them," says Welch. "When I get to a Terabit service - not that far out - we haven't come up with a technology that allows the fibre plant to go to 50-100 Terabit."

This issue is already leading to fundamental research looking at techniques to boost the capacity of fibre.
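Welch's scaling argument is simple multiplication. The Python sketch below assumes each DWDM wavelength carries exactly one service; the 80 and 50 wavelength counts are the ones he quotes.

```python
def fibre_capacity_tbps(service_gbps, wavelengths):
    """Total fibre capacity when every DWDM wavelength carries one service."""
    return service_gbps * wavelengths / 1000


for service in (10, 100, 1000):
    print(f"{service}Gbps x 80 = {fibre_capacity_tbps(service, 80):g}Tbps")
print(f"1Tbps x 50 = {fibre_capacity_tbps(1000, 50):g}Tbps")
# 10Gbps x 80 = 0.8Tbps
# 100Gbps x 80 = 8Tbps
# 1000Gbps x 80 = 80Tbps
# 1Tbps x 50 = 50Tbps
```

Keeping the familiar 50 to 80 services per fibre at a Terabit each lands in the 50-100 Terabit range that, as Welch notes, no current fibre technology delivers.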

PICs

However, in the shorter term, the smarts to enable high-speed transmission and higher capacity over the fibre are coming from the next-generation DSP-ASICs.

Is Infinera's monolithic integration expertise, with its 500 Gigabit PIC, becoming a less important element of system design?

"PICs have a greater differentiation now than they did then," says Welch.

Unlike Infinera's 500Gbps super-channel, the recently announced ASICs use two carriers and 16-QAM to deliver 400Gbps. But the issue is the reach that can be achieved with 16-QAM: "The difference is 16-QAM doesn't satisfy any long-haul applications," says Welch.

Infinera argues that a fairer comparison with its 500Gbps PIC is dual-carrier QPSK, each carrier at 100Gbps. Once the ASIC and optics deliver 400Gbps using 16-QAM, it is no longer a valid comparison because of reach, he says.

Three parameters must be considered here, says Welch: dollars/Gigabit, reach and fibre capacity. "I have to satisfy all three for my application," he says.

Long-haul operators are extremely sensitive to fibre capacity. "I need as much fibre capacity as I can get," he says. "But I also need reach."

In data centre applications, for example, reach is becoming an issue. "For the data centre there are fewer on and off ramps and I need to ship truly massive amounts of data from one end of the country to the other, or one end of Europe to the other."

The lower reach of 16-QAM is suited to the metro but Welch argues that is one segment that doesn't need the highest capacity but rather lower cost. Here 16-QAM does reduce cost by delivering more bandwidth from the same hardware.

Meanwhile, Infinera is working on its next-generation PIC that will deliver a Terabit super-channel using DP-QPSK, says Welch. The PIC and the accompanying next-generation ASIC will likely appear in the next two years.

Such a 1 Terabit PIC will reduce the cost of optics further, but it remains to be seen how Infinera will increase the overall fibre capacity beyond its current 80x100Gbps. The integrated PIC will double the number of 100Gbps wavelengths that make up the super-channel, increasing long-haul line card density and benefiting the dollars/Gigabit and reach metrics.

In part two, ADVA Optical Networking, Ciena, Cisco Systems and market research firm Ovum reflect on OFC/NFOEC.

The post-100 Gigabit era

Feature: Beyond 100G - Part 4

The latest coherent ASICs from Ciena and Alcatel-Lucent, coupled with announcements from Cisco and Huawei, highlight where the industry is heading with regard to high-speed optical transport. But the announcements also raise questions.


Observations and queries

- Optical transport has had a clear roadmap: 10 to 40 to 100 Gigabit-per-second (Gbps). 100Gbps optical transport will be the last of the fixed line-side speeds.

- After 100Gbps will come flexible speed-reach deployments. Line-side optics will be able to implement 50Gbps, 100Gbps, 200Gbps or even faster speeds with super-channels, tailored to the particular link.

- Variable speed-reach designs will blur the lines between metro and ultra long-haul. Does a traditional metro platform become a trans-Pacific submarine system simply by adding a new line card with the latest coherent ASIC boasting transmit and receive digital signal processors (DSPs), flexible modulation and soft-decision forward error correction?


- The cleverness of optical transport has shifted towards electronics and digital signal processing and away from photonics. Optical system engineers are being taxed as never before as they try to extend the reach of 100, 200 and 400Gbps to match that of 10 and 40Gbps but what is key for platform differentiation is the DSP algorithms and ASIC design.

- Optical is the new radio. This is evident in the addition of a coherent transmit DSP that supports the various modulation schemes and allows spectral shaping, bunching carriers closer together to make best use of the fibre's bandwidth.

- The radio analogy is fitting because fibre bandwidth is becoming a scarce resource. Usable fibre capacity has more than doubled with these latest ASIC announcements. Moving to 400Gbps doubles overall capacity to some 18 Terabits, and spectral shaping boosts that further to over 23 Terabits. Only last week, 8.8 Terabits (88x100Gbps) was impressive.

- Maximising fibre capacity is why implementing single-carrier 100Gbps signals in 50GHz channels is now important.

- Super-channels, combining multiple carriers, have a lot of operational merits (see the super-channel section in the Cisco story). Infinera announced its 500Gbps super-channel over 250GHz last year. Now Ciena and Alcatel-Lucent highlight how a dual-carrier, dual-polarisation 16-QAM approach in 100GHz implements a 400Gbps signal.

- Despite all the talk of 16-QAM and 400Gbps wavelengths, 100Gbps is still in its infancy and will remain a key technology for years to come. Alcatel-Lucent, one of the early leaders in 100Gbps, has deployed 1,450 100 Gig line units since it launched its system in June 2010.

- Photonic integration for coherent will remain of key importance, not so much for making optical structures yet more complex than those at 100Gbps but for shrinking what has already been done.

- Is there a next speed after 100Gbps? Is it 200Gbps until 400Gbps becomes established? Is it 500Gbps as Infinera argues? The answer is that it no longer matters. But then what exactly will operators use to assess the merits of the different vendors' platforms? Reach, power, platform density, spectral efficiency and line speeds are all key performance parameters but assessing each vendor's platform has clearly got harder.

- It is the system vendors, not the merchant chip makers, that are driving coherent ASIC innovation. The market for 100Gbps coherent merchant chips will remain an important opportunity given the early status of the market, but how will coherent merchant chip vendors, several of them startups, compete with the system vendors' deeper pockets and sophisticated ASIC designs?

- Optical transponder vendors at least have more scope for differentiation but it is now also harder. Will one or two of the larger module makers even acquire a coherent ASSP maker?

- Infinera announced its 100G coherent system last year. Clearly it is already working on its next-generation ASIC. And while its DTN-X platform boasts a 500Gbps super-channel photonic chip, its overall system capacity is 8 Terabit (160x50Gbps, each in 25GHz channels). How will Infinera respond, not only with its next ASIC but also its next-generation PIC, to these latest announcements from Ciena and Alcatel-Lucent?
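The capacity comparisons above come down to spectral efficiency: line rate divided by occupied spectrum. A minimal Python sketch using the figures quoted in these observations; it ignores guard bands and FEC overhead, so the numbers are nominal.

```python
# (approach, line rate in Gbps, occupied spectrum in GHz), using figures quoted above.
APPROACHES = [
    ("single-carrier 100G in a 50GHz channel", 100, 50),
    ("Infinera DTN-X 50G carrier in a 25GHz channel", 50, 25),
    ("Infinera 500G super-channel", 500, 250),
    ("dual-carrier DP-16QAM 400G", 400, 100),
]


def spectral_efficiency(rate_gbps, spectrum_ghz):
    """Spectral efficiency in bit/s/Hz (Gbps divided by GHz)."""
    return rate_gbps / spectrum_ghz


for name, rate, width in APPROACHES:
    print(f"{name}: {spectral_efficiency(rate, width):.1f} b/s/Hz")
```

On this measure the 500Gbps super-channel and single-carrier 100Gbps in 50GHz are equal at 2 b/s/Hz, while dual-carrier 16-QAM reaches 4 b/s/Hz, which is why it doubles fibre capacity (at the cost of reach).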

Latest coherent ASICs set the bar for the optical industry

Feature: Beyond 100G - Part 3

Alcatel-Lucent has detailed its next-generation coherent ASIC that supports multiple modulation schemes and allows signals to scale to 400 Gigabit-per-second (Gbps).

The announcement follows Ciena's WaveLogic 3 coherent chipset that also trades capacity and reach by changing the modulation scheme.

"They [Ciena and Alcatel-Lucent] have set the bar for the rest of the industry," says Ron Kline, principal analyst for Ovum’s network infrastructure group.

"We will employ [the PSE] for all new solutions on 100 Gigabit"

Kevin Drury, Alcatel-Lucent

Photonic service engine

Dubbed the photonic service engine (PSE), Alcatel-Lucent's latest ASIC will be used in 100Gbps line cards that will come to market in the second half of 2012.

The PSE comprises coherent transmitter and receiver digital signal processors (DSPs) as well as soft-decision forward error correction (SD-FEC). The transmit DSP generates the various modulation schemes and can perform waveform shaping to improve spectral efficiency. The coherent receiver DSP is used to compensate for fibre distortions and for signal recovery.

The PSE follows Alcatel-Lucent's extended reach (XR) line card announced in December 2011 that extends its 100Gbps reach from 1,500 to 2,000km. "This [PSE] will be the chipset we will employ for all new solutions on 100 Gigabit," says Kevin Drury, director of optical marketing at Alcatel-Lucent. The PSE will extend 100Gbps reach to over 3,000km.

Ciena's WaveLogic 3 is a two-device chipset. Alcatel-Lucent has crammed the functionality onto a single device. But while the device is referred to as the 400 Gigabit PSE, two PSE ASICs are needed to implement a 400Gbps signal.

"They [Ciena and Alcatel-Lucent] have set the bar for the rest of the industry"

Ron Kline, Ovum

"There are customers that are curious and interested in trialling 400Gbps but we see equal, if not higher, importance in pushing 100Gbps limits," says Manish Gulyani, vice president, product marketing for Alcatel-Lucent's networks group.

In particular, the equipment maker has improved 100Gbps system density with a card that requires two slots instead of three, and extends reach by 1.5x using the PSE.

Performance

Alcatel-Lucent makes several claims about the performance enhancements using the PSE:

- Reach: The reach is extended by 1.5x.

- Line card density: At 100Gbps the improvement is 1.5x. The current 100Gbps muxponder (10x10Gbps client input) and transponder (100Gbps client) line card designs occupy three slots whereas the PSE design will occupy two slots only. Density will be improved by 4x by adopting a 400Gbps muxponder that occupies three slots.

- Power consumption: By going to a more advanced CMOS process and by enhancing the design of the chip architecture, the PSE consumes a third less power per Gigabit of transport: from 650mW/Gbps to 425mW/Gbps. Alcatel-Lucent is not saying what CMOS process technology is used for the PSE. The company's current 100Gbps silicon uses a 65nm process and analysts believe the PSE uses a 40nm process.

- System capacity: The channel width occupied by the signal can be reduced by a quarter: a 50GHz 100Gbps wavelength can be compressed to occupy 37.5GHz. This would improve overall 100Gbps system capacity by a third, from 8.8 Terabit-per-second (Tbps) to 11.7Tbps. Going from 88 100Gbps ports to 44 400Gbps interfaces doubles system capacity to 17.6Tbps. Using waveform shaping, this is improved by a further third, to more than 23Tbps.
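The capacity, density and power claims above can be reproduced with a short Python sketch. It assumes the usable spectrum is the 88 x 50GHz grid implied by the 8.8Tbps figure (4,400GHz) and that only whole channels fit; the 75GHz width for a shaped 400Gbps signal is the figure Alcatel-Lucent gives for its 400Gbps channel spacing with waveform shaping.

```python
import math

GRID_GHZ = 88 * 50  # assumption: usable spectrum = the 88 x 50GHz grid quoted above


def capacity_tbps(channel_ghz, rate_gbps, spectrum_ghz=GRID_GHZ):
    """Whole channels that fit in the spectrum, times the per-channel rate."""
    channels = math.floor(spectrum_ghz / channel_ghz)
    return channels * rate_gbps / 1000


def gbps_per_slot(rate_gbps, slots):
    return rate_gbps / slots


print(capacity_tbps(50, 100))    # 88 x 100G
print(capacity_tbps(37.5, 100))  # 117 x 100G with 37.5GHz channels
print(capacity_tbps(100, 400))   # 44 x 400G
print(capacity_tbps(75, 400))    # 58 x 400G, assuming shaping trims 100GHz to 75GHz

# Density: three-slot 100G card -> two-slot PSE card -> three-slot 400G muxponder.
base = gbps_per_slot(100, 3)
print(f"{gbps_per_slot(100, 2) / base:.1f}x, {gbps_per_slot(400, 3) / base:.1f}x")

# Power per Gigabit: 650mW/Gbps -> 425mW/Gbps.
print(f"{1 - 425 / 650:.0%} less power per Gbps")
```

The results (8.8, 11.7, 17.6 and 23.2Tbps; 1.5x and 4x density; about 35% less power) line up with the company's quoted figures, which suggests those figures rest on the same whole-channel arithmetic.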

"We are not saying we are breaking the 50GHz channel spacing today and going to a flexible grid, super-channel-type construct," says Drury. "But this chip is capable of doing just that." Alcatel-Lucent will at least double network capacity when its system adopts 44 wavelengths, each at 400Gbps.

400 Gigabit

To implement a 400Gbps signal, a dual-carrier, dual-polarisation 16-QAM coherent wavelength is used that occupies 100GHz (two 50GHz channels). Alcatel-Lucent says that should it commercialise 400Gbps using waveform shaping, the channel spacing would reduce to 75GHz. But this more efficient grid spacing only works alongside a flexible grid colourless, directionless and contentionless (CDC) ROADM architecture.

A 400Gbps PSE card showing four 100 Gigabit Ethernet client signals going out as a 400Gbps wavelength. The three-slot card comprises three daughter boards. Source: Alcatel-Lucent.

Alcatel-Lucent is not ready to disclose the reach performance it can achieve with the PSE using the various modulation schemes. But it does say the PSE supports dual-polarisation binary phase-shift keying (DP-BPSK) for the longest-reach spans, as well as dual-polarisation quadrature phase-shift keying (DP-QPSK) and 16-QAM (quadrature amplitude modulation).
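The capacity side of the trade-off follows from bits per symbol. A minimal Python sketch, assuming a fixed net symbol rate of 25GBaud (chosen only so that DP-QPSK gives 100Gbps; real coherent systems run faster to carry FEC overhead). It says nothing about reach, which the text notes falls as more bits are packed into each symbol.

```python
BITS_PER_SYMBOL = {"BPSK": 1, "QPSK": 2, "16-QAM": 4}
POLARISATIONS = 2


def carrier_rate_gbps(modulation, symbol_rate_gbaud):
    """Net carrier rate: bits per symbol x two polarisations x symbol rate.
    FEC and framing overhead are ignored."""
    return BITS_PER_SYMBOL[modulation] * POLARISATIONS * symbol_rate_gbaud


SYMBOL_RATE = 25  # assumption: net GBaud that makes DP-QPSK a 100Gbps carrier
for mod in BITS_PER_SYMBOL:
    print(f"DP-{mod}: {carrier_rate_gbps(mod, SYMBOL_RATE)}Gbps")
print("2 x DP-16-QAM:", 2 * carrier_rate_gbps("16-QAM", SYMBOL_RATE), "Gbps")
```

At a fixed symbol rate, each step from DP-BPSK to DP-QPSK to DP-16-QAM doubles the carrier rate, and two 16-QAM carriers give the 400Gbps signal described above.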

"[This ability] to go distances or to sacrifice reach to increase bandwidth, to go from 400km metro to trans-Pacific by tuning software, that is a big advantage," says Ovum's Kline. "You don't then need as many line cards and that reduces inventory."

Market status

Alcatel-Lucent says that it has 55 customers that have deployed over 1,450 100Gbps transponders.

A software release later this year for Alcatel-Lucent's 1830 Photonic Service Switch will enable the platform to support 100Gbps PSE cards.

A 400Gbps card will also be available this year for operators to trial.

2012: A year of unique change

The third and final part on what CEOs, executives and industry analysts expect during the new year, and their reflections on 2011.

Karen Liu, principal analyst, components telecoms, Ovum @girlgeekanalyst

"We’ve entered the next decade for real: the mobile world is unified around LTE and moving to LTE Advanced, complete with small cells and heterogenous networks including Wi-Fi."

Last year was a long one. Looking back, it is hard to believe that only one year has elapsed between January 2011 and now.

In fact, looking back it is hard to remember how things looked a year ago: natural disasters were considered rare occurrences. WiMAX’s role was still being discussed, and some viewed TDD LTE as a Chinese peculiarity. For that matter, cloud-RAN was another weird Chinese idea. But no matter: China could do anything, given its immunity to economics and to the need for a return on investment.

Femtocells were consumer electronics for the occasional indoor coverage fix, and Wi-Fi was not for carriers.

Only optical could do 100Mbps to the subscriber, who, by the way, was moving on to 10 Gig PON in short order. Flexible spectrum ROADMs meant only Finisar could play, and high port-count wavelength-selective switches had come and gone. 100 Gigabit DWDM took several slots, hadn’t shipped for real, and even the client-side interface was a problem.

As for modules, 40 Gigabit Ethernet (GbE) client was CFP-sized, and high-density 100GbE looked so far away that the non-standard 10x10 MSA was welcomed.

NeoPhotonics was a private company, doing that wacky planar integration thing that works OK for passives but not actives.

Now it feels like we’ve entered the next decade for real: the mobile world is unified around LTE and moving to LTE Advanced, complete with small cells and heterogenous networks including Wi-Fi.

Optical is one of several ways to do backhaul or PC peripherals. 40GbE, even single-mode, comes in a QSFP package, tunable comes in an SFP — both of which, by the way, use optical integration.

Most optical transport vendors, even metro specialists, have 100 Gigabit coherent in trial stage at least. Thousands of 100 Gig ports and tens of thousands of 40 Gig have shipped.

Flexible spectrum is being standardised and CoAdna went public. The tunable laser start-up phase concluded with Santur finding a home in NeoPhotonics, now a public company.

But we also have a new feeling of vulnerability.

Optical components revenues and margins slid back down. Bad luck can strike twice, with Opnext taking the hit from both the spring earthquake and the fall floods. China turns out not to be immune after all, and time hasn’t automatically healed Europe.

What will happen this year? At this rate, I think we’ll see a lot of news at OFC in a couple of months' time. By then I’ll probably think: "Was it as recently as January when the world looked so different?"

Brian Protiva, CEO of ADVA Optical Networking @ADVAOpticalNews

Last year was an incredible year for networks. In many respects it was a watershed moment. Optical transport took a huge step forward with the genuine availability of 100 Gigabit technologies.

What's even more incredible is that 100 Gigabit emerged in more than the core: we saw 100 Gig metro solutions enter the marketplace. This means that for the first time enterprises and service providers have the opportunity to deploy 100 Gig solutions that fit their needs. Thanks to the development of direct-detection 100 Gig technology, cost is becoming less and less of an issue. This is a game changer.

In 2012, 100 Gig deployments will continue to be a key topic, with more available choices and maturing systems. However, I firmly believe the central focus of 2012 will be automation and multi-layer network intelligence.

"We need to see networks that can effectively govern and optimise themselves."

Talking to our customers and the industry, it is clear that more needs to be done to develop true network automation. There are very few companies that have successfully addressed this issue.

We need to see networks that can effectively govern and optimise themselves. That can automatically deliver bandwidth on demand, monitor and resolve problems before they become service disrupting, and drive dramatically increased efficiency.

The future of our networks is all about simplicity. The continued fierce bandwidth growth can no longer be supported by today's complex operational inefficiencies. Streamlined operations are essential if operators are to drive for further profitable growth.

I'm excited about helping to make this happen.

Arie Melamed, head of marketing, ECI Telecom @ecitelecom

The existing momentum of major traffic growth with no proportional revenue increase has continued - even intensified - in 2011. This means that operators have to invest in their networks without being able to generate the proportional revenue increase from this investment. We expect to see new business models crop up as operators cope with over-the-top (OTT) services.

To differentiate themselves from competition, operators must make the network a core part of the end-customer experience. To do so, we expect operators to introduce application-awareness in the network – optimising service delivery to avoid network expansions and introduce new revenues.

We also expect operators to offer quality-of-service assurance to end users and content application providers, turning a lose-lose situation around.

Larry Schwerin, CEO of Capella Intelligent Subsystems @CapellaPhotonic

Over 2011, we witnessed the demand for broadband access increase at an accelerated rate. Much of this has been fueled by the continuation of mass deployments of broadband access - PON/FTTH, wireless LTE, HFC, to name a few - as well as the ever-increasing implementation of cloud computing, requiring instantaneous broadband access. Video and rich media are a small but growing piece of this equation.

The ultimate effect of this is yet to be felt, as people start to draw more narrowcast rather than broadcast content. The final element will come when upstream content, via appliances similar to Sling Media as well as the various forms of video conferencing, becomes more widespread. This will lead to more symmetrical bandwidth from an upstream perspective.

'Change is definitely in order for the optical ecosystem. The question is how and when?'

Along with this, the issue of falling revenue-per-bit is forcing network operators to develop more cost-effective ways for managing this traffic.

All of the aforementioned is driving demand for higher capacity and more flexible support at the fundamental optical layer.

I believe this will translate into more bits-per-wavelength, more wavelengths-per-fibre and, finally, more flexibility for network operators, who will be able to manage traffic at the optical layer more easily. This points to good news for transponder, tunable and ROADM/WSS suppliers.

2011 also pointed out certain issues within the optical communications sector. Most notably, entering 2011, the financial marketplace was bullish on the optical sector following rapid quarter-on-quarter growth of certain larger optical players. Then, the “Ides of March” came and optical stocks lost as much as 40% of their value when it was deemed there was a pull back in demand by a very few, but nonetheless important players in the sector.

Later in the year came the flooding in Thailand, which hampered the production capabilities of many of the optical components players.

Overall margins in the sector remain at unacceptable levels furthering the speculation that things need to change in order for a more robust environment to exist.

What will 2012 bring?

I believe demand for bandwidth will continue to grow. Data centres will gain more focus as cloud computing continues to gain traction. This will lead to more demand for fundamental technologies in the area of optical transmission and management.

The next phase of wavelength management solutions will start to emerge - both at the high port count (1x20) as well as low-port count (1x2, 1x4) for edge applications. More emphasis will be placed on monitoring and control as more complex optical networks are built.

Change is definitely in order for the optical ecosystem. The question is how and when? Will it simply be consolidation? How will vertical integration take shape? How will new technologies influence potential outcomes?

2012 should be a year of unique change.

Terry Unter, president and general manager, optical networks solutions, Oclaro

Discussion and progress on defining next-generation ROADM network architectures was a very important development in 2011. In particular, consensus on feature requirements and technology choices to enable a more cost-efficient optical network layer was generally agreed amongst the major network equipment manufacturers. Colourless, directionless and, to a significant degree, contentionless are clear goals, while we continue to drive down the cost of the network.

"We expect to see a host of system manufacturers making decisions on 100 Gig supply partners. This should be an exciting year."

Coherent detection transponder technology is a critical piece of the puzzle ensuring scalability of network capacity while leveraging a common technology platform. We succeeded in volume production shipments of a 40 Gig coherent transponder and we announced our 100 Gig transponder.

2012 will be an important year for 100 Gig. The availability of 100 Gig transponder modules for deployment will enable a much wider list of system manufacturers to offer their customers more spectrally-efficient network solutions. The interest is universal from metro applications to the long haul and ultra-long haul market segments.

While there is much discussion about 400 Gig and higher rates, standards are in very early stages. The industry as a whole expects 100 Gig to be a key line rate for several years.

As we enter 2012, we expect to see a host of system manufacturers making decisions on 100 Gig supply partners. This should be an exciting year.
