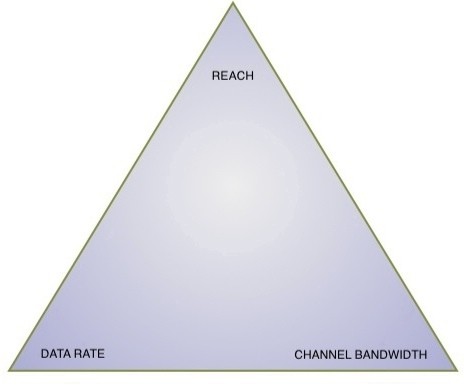

The great data rate-reach-capacity tradeoff

Source: Gazettabyte


Optical transmission technology is starting to bump into fundamental limits, resulting in a three-way tradeoff between data rate, reach and channel bandwidth. So says Brandon Collings, JDS Uniphase's CTO for communications and commercial optical products. See the recent Q&A.

This tradeoff will impact the coming transmission speeds of 200 and 400 Gigabit-per-second (Gbps) and 1 Terabit-per-second. For each increase in data rate, either the channel bandwidth must increase, the reach must decrease, or both, says Collings.

Thus a 200Gbps light path can be squeezed into a 50GHz channel in the C-band, but its reach will not match that of 100Gbps over a 50GHz channel (shown on the graph with a hashed line). A wider 200Gbps signal could match the reach of 100Gbps, but that would probably need a 75GHz channel, says Collings.

For 400Gbps, the same situation arises, suggesting two possible approaches: 400Gbps fitting in a 75GHz channel but with limited reach (for metro), or a 400Gbps signal placed within a 125GHz channel to match the reach of 100Gbps over a 50GHz channel.


"Continue this argument for 1 Terabit as well," says Collings. Here the industry consensus suggests a 200GHz-wide channel will be needed.

Similarly, other options are available within this compromise, such as 400Gbps over a 50GHz channel, but this would have a very limited reach.

Collings does not dismiss the possibility of a technology development that breaks this fundamental compromise, but for now the tradeoff holds.

As a result, multiple formats will likely come to market, each matching the reach required with the minimum channel bandwidth, says Collings.
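The options Collings describes can be compared by spectral efficiency (line rate divided by channel width) and by the total capacity of a fibre filled with identical channels. The Python sketch below is a back-of-envelope aid, not a JDS Uniphase model: it assumes a usable C-band of 4,000GHz (the 80 channels at 50GHz spacing cited in Part 3) and ignores guard bands. The helper names are hypothetical and the reach labels simply restate the text.

```python
# Hypothetical helpers comparing the channel options described above.
# Assumption: 4,000 GHz of usable C-band (80 channels x 50 GHz).
C_BAND_GHZ = 80 * 50

# (line rate in Gbps, channel width in GHz, reach as described in the text)
OPTIONS = [
    (100, 50, "reference"),
    (200, 50, "shorter than 100G"),
    (200, 75, "matches 100G"),
    (400, 75, "limited (metro)"),
    (400, 125, "matches 100G"),
    (400, 50, "very limited"),
    (1000, 200, "assumed to match 100G"),
]

def spectral_efficiency(rate_gbps, width_ghz):
    """Bits per second per hertz carried by one channel."""
    return rate_gbps / width_ghz

def fibre_capacity_tbps(rate_gbps, width_ghz, band_ghz=C_BAND_GHZ):
    """Total capacity if the band is filled with identical channels."""
    channels = band_ghz // width_ghz  # whole channels only
    return channels * rate_gbps / 1000

for rate, width, reach in OPTIONS:
    print(f"{rate:>5} Gbps in {width:>3} GHz: "
          f"{spectral_efficiency(rate, width):.2f} b/s/Hz, "
          f"{fibre_capacity_tbps(rate, width):5.1f} Tbps per fibre, "
          f"reach: {reach}")
```

Reading down the output, higher spectral efficiency goes with shorter reach: 400Gbps in 50GHz packs 8 b/s/Hz (32 Tbps per fibre under these assumptions) but only over a very limited distance, which is the three-way tradeoff in a nutshell.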

Fujitsu Labs adds processing to boost optical reach

“That is one of the virtues of the technology; it is not dependent on the modulation format or the bit rate”

Takeshi Hoshida, Fujitsu Labs

Why is it important?

Much progress has been made in developing digital signal processing techniques for 100Gbps coherent receivers to compensate for undesirable fibre transmission effects such as polarisation mode dispersion and chromatic dispersion (See Performance of Dual-Polarization QPSK for Optical Transport Systems). Both dispersions are linear in nature and are compensated for using linear digital filtering. What Fujitsu Labs has announced is the next step: a digital filter design that compensates for non-linear effects.

A key challenge facing optical-transmission designers is extending the reach of 100Gbps transmissions to match that of 10Gbps systems. In the simplest sense, reach falls as transmission speed increases because the shorter-pulsed signals contain fewer photons. Channel impairments also become more prominent the higher the transmission speed.
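The "fewer photons" argument is simple arithmetic: at a fixed optical power P, each bit at rate R receives energy P/R, so a tenfold increase in bit rate leaves a tenth of the photons per bit. A Python sketch, assuming 1mW of power at 1550nm (illustrative values, not from the article) and ignoring the change in modulation format between 10Gbps and 100Gbps systems:

```python
# Illustrative only: average photons per bit at a fixed optical power.
PLANCK = 6.62607015e-34      # J*s
LIGHT_SPEED = 2.99792458e8   # m/s

def photons_per_bit(power_w, bit_rate_bps, wavelength_m=1550e-9):
    """Energy per bit divided by the energy of a single photon."""
    photon_energy = PLANCK * LIGHT_SPEED / wavelength_m
    energy_per_bit = power_w / bit_rate_bps
    return energy_per_bit / photon_energy

for rate_gbps in (10, 40, 100):
    n = photons_per_bit(1e-3, rate_gbps * 1e9)  # assumed 1 mW launch power
    print(f"{rate_gbps:>3} Gbps at 1 mW: ~{n:,.0f} photons per bit")
```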

Engineers can increase system reach by raising the signal power to boost the optical signal-to-noise ratio, but this gives rise to non-linear effects in the fibre. “When the signal power is higher, the refractive index of the fibre changes and that distorts the phase of the optical signal,” says Takeshi Hoshida, a senior researcher at Fujitsu Labs.

The non-linear effect, combined with polarisation mode dispersion and chromatic dispersion, interacts with the signal in a complicated way. “The linear and non-linear effects combine to result in a very complex distortion of the received signal,” says Hoshida.

Fujitsu has developed a non-linear distortion compensation technique that recovers 2dB of the transmitted optical signal. Moreover, the compensation technique will equally benefit 400 Gigabit or 1 Terabit channels, says Hoshida: “That is one of the virtues of the technology; it is not dependent on the modulation format or the bit rate.”

Fujitsu plans to extend the reach of its long-haul optical transmission systems using the technique. The 2dB equates to a 1.6x distance improvement. But, as Hoshida points out, this is the theoretical benefit. In practice, the benefit is less since a greater transmission distance means the signal passes through more amplifier and optical add-drop stages that introduce their own signal impairments.
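The 1.6x figure follows from converting decibels to a linear ratio. A Python sketch, assuming (our simplification, not a Fujitsu model) that noise from identical amplified spans accumulates linearly, so the optical signal-to-noise ratio falls as 1/N with the number of spans N:

```python
def db_to_linear(db):
    """Convert a power ratio in decibels to a linear factor."""
    return 10 ** (db / 10)

def ideal_reach_gain(margin_db):
    """Idealised reach multiplier bought by an OSNR margin.

    Assumes OSNR falls as 1/number_of_spans, so a margin of X dB allows
    10**(X/10) times as many identical spans. Real links fall short of
    this because extra spans and add-drop stages add impairments.
    """
    return db_to_linear(margin_db)

print(f"2 dB -> {ideal_reach_gain(2):.2f}x reach")  # 1.58x, i.e. roughly 1.6x
```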

Method used

Fujitsu Labs has implemented a two-stage filtering block. The first filter stage is linear and compensates for chromatic dispersion, while the second unit counteracts the fibre's non-linear effect on the optical signal. To achieve the required compensation, Fujitsu Labs uses multiple filter-stage blocks in cascade.

According to Hoshida, optical phase is rotated according to the optical power: “If the power is higher, the more phase rotation occurs – that is the non-linear effect in the fibre.” The effect is distributed, occurring along the length of the fibre, and is also coupled with chromatic dispersion. “Chromatic dispersion changes the optical intensity waveform, and that intensity waveform induces the non-linear effect,” says Hoshida. “Those two problems are coupled to each other so you have to solve both.”

Fujitsu tackles the problem by applying a filter stage to compensate for each optical span – the fibre segment between repeaters. For a terrestrial transmission system there can be as many as 20 or 30 such spans. “But [using a filter stage per span] is rather inefficient,” says Hoshida. By adding a weighted-averaging technique, Fujitsu has cut the number of filter stages needed by a factor of four.

Weighted-averaging is a filtering operation that smooths the signal in the time domain. “It is not necessary to change the weights [of the filter] symbol-by-symbol; it is almost static,” says Hoshida. Changes do occur, but infrequently, depending on the fibre’s condition, for example its temperature.
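The two-stage block resembles split-step digital back-propagation: undo chromatic dispersion with a linear all-pass filter, then undo the power-dependent phase rotation. The Python sketch below is a minimal, hypothetical illustration of that idea in which a weighted average of neighbouring sample powers drives the phase rotation. Fujitsu's actual filter design, coefficients and weights are not described in the article; the parameters `d`, `gamma` and `weights`, and the sign conventions, are illustrative assumptions in normalised units.

```python
import cmath
import math

def dft(x, inverse=False):
    """Naive discrete Fourier transform (O(n^2)); fine for short demos."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[k] * cmath.exp(sign * 2j * math.pi * k * m / n) for k in range(n))
           for m in range(n)]
    return [v / n for v in out] if inverse else out

def linear_stage(x, d):
    """Dispersion step: quadratic all-pass phase applied in frequency.

    d is a hypothetical, normalised accumulated-dispersion parameter.
    """
    n = len(x)
    spectrum = dft(x)
    for m in range(n):
        w = 2 * math.pi * (m if m < n // 2 else m - n) / n  # angular frequency
        spectrum[m] *= cmath.exp(1j * d * w * w)
    return dft(spectrum, inverse=True)

def nonlinear_stage(x, gamma, weights):
    """Phase rotation proportional to a weighted average of nearby power.

    weights (hypothetical) are centred on the current sample; they stay
    fixed here, echoing the "almost static" weights Hoshida describes.
    """
    n, half, total = len(x), len(weights) // 2, sum(weights)
    out = []
    for t in range(n):
        avg_power = sum(w * abs(x[(t + k - half) % n]) ** 2
                        for k, w in enumerate(weights)) / total
        out.append(x[t] * cmath.exp(-1j * gamma * avg_power))
    return out

def compensate(x, blocks):
    """Cascade of (linear, then non-linear) filter-stage blocks."""
    for d, gamma, weights in blocks:
        x = nonlinear_stage(linear_stage(x, d), gamma, weights)
    return x

# Toy check: a "fibre" of one non-linear step then one dispersion step,
# undone by a single compensation block with opposite-sign parameters.
taps = [0.25, 0.5, 0.25]
signal = [complex((-1) ** (k % 2), (-1) ** (k // 2 % 2)) for k in range(32)]
received = linear_stage(nonlinear_stage(signal, 0.3, taps), 0.8)
recovered = compensate(received, [(-0.8, -0.3, taps)])
print(max(abs(a - b) for a, b in zip(signal, recovered)))  # rounding error only
```

Because the toy channel is built from the same two operations in reverse order, the cascade undoes it exactly; this checks the structure of the block, not how well such a filter copes with a real fibre, where dispersion and non-linearity act together along each span.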

Fujitsu has been surprised that the weighted-averaging technique is so effective. Using the technique, and the resulting 4x reduction in filter stages, cuts the hardware needed to implement the compensation by 70%. The reason it is not the full 75% is that extra hardware for the weighted averaging must be added to each stage.
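The gap between the 75% that a fourfold stage reduction would suggest and the 70% reported implies the averaging adds roughly 20% to the cost of each remaining stage. For 20 to 30 spans, a fourfold reduction means roughly five to eight stages. This is a back-of-envelope inference, not a Fujitsu figure; the Python sketch assumes every filter stage costs the same, and both helper names are hypothetical.

```python
def relative_hardware(stage_reduction, overhead_per_stage):
    """Hardware after the change, relative to one plain stage per span.

    Assumes every filter stage costs the same; overhead_per_stage is the
    extra cost of the weighted averaging as a fraction of a plain stage.
    """
    return (1 + overhead_per_stage) / stage_reduction

def implied_overhead(saving, stage_reduction):
    """Per-stage overhead consistent with a reported hardware saving."""
    return (1 - saving) * stage_reduction - 1

overhead = implied_overhead(0.70, 4)
print(f"implied overhead per stage: {overhead:.0%}")
print(f"hardware vs one stage per span: {relative_hardware(4, overhead):.0%}")
```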

What next?

Fujitsu has demonstrated that the technique is technically feasible, but practical issues such as power consumption remain. According to Hoshida, the power consumption is too high even using an advanced 40nm CMOS process, and the design will likely require a 28nm process. Fujitsu therefore expects the technique to be deployed in commercial systems by 2015 at the latest.

There are also further optical performance improvements to be claimed, says Hoshida, by addressing cross-phase modulation. This is another non-linear effect where one lightpath affects the phase of another.

Fujitsu Labs has developed two algorithms to address cross-phase modulation, which is a more challenging problem since it is modulation-dependent.

For a copy of Fujitsu’s ECOC 2010 slides, please click here.

Optical transmission beyond 100Gbps

Part 3: What's next?

Given that the 100 Gigabit-per-second (Gbps) optical transmission market is only expected to take off from 2013, addressing what comes next seems premature. Yet operators and system vendors have been discussing just this issue for at least six months.

And while it is far too early to talk of industry consensus, all agree that optical transmission is becoming increasingly complex. As Karen Liu, vice president, components and video technologies at market research firm Ovum, observed at OFC 2010, bandwidth on the fibre is no longer plentiful.

“We need to keep a very close eye that we are not creating more problems than we are solving.”


Brandon Collings, JDS Uniphase.

As to how best to extend a fibre’s capacity beyond eighty 100Gbps dense wavelength division multiplexing (DWDM) channels spaced 50GHz apart, all options are open.

“What comes after 100Gbps is an extremely complicated question,” says Brandon Collings, CTO of JDS Uniphase’s communications and commercial optical products division. “It smells like it will entail every aspect of network engineering.”

Ciena believes that if operators are to exploit future high-speed transmission schemes, newly architected links will be needed. The rigid networking constraints imposed on 40 and 100Gbps so that they operate over existing 10Gbps networks will need to be scrapped.

“It will involve a much broader consideration in the way you build optical systems,” says Joe Berthold, Ciena’s vice president of network architecture. “For the next step it is not possible [to use existing 10Gbps links]; no-one can magically make it happen.”

Lightpaths faster than 100Gbps simply cannot match the performance of current optical systems when passing through multiple reconfigurable optical add/drop multiplexer (ROADM) stages using existing amplifier chains and 50GHz channels.

Increasing traffic capacity thus implies re-architecting DWDM links. “Whatever the solution is it will have to be cheap,” says Berthold. This explains why the Optical Internetworking Forum (OIF) has already started a work group comprising operators and vendors to align objectives for line rates above 100Gbps.

If new links are put in, then changing the amplifier types and even their spacing becomes possible, as does the use of newer fibre. “If you stay with conventional EDFAs and dispersion managed links, you will not reach ultimate performance,” says Jörg-Peter Elbers, vice president, advanced technology at ADVA Optical Networking.

Capacity-boosting techniques

Achieving higher speeds while matching the reach of current links will require a mixture of techniques. Besides redesigning the links, modulation schemes can be extended and new approaches used, such as going ‘gridless’ and exploiting sophisticated forward error-correction (FEC) schemes.

For 100Gbps, polarisation and phase modulation are used in the form of dual-polarisation quadrature phase-shift keying (DP-QPSK). By also modulating amplitude, quadrature amplitude modulation (QAM) schemes can extend this to 16-QAM, 64-QAM and even 256-QAM.

Alcatel-Lucent is one firm already exploring QAM schemes, but it describes improving spectral efficiency with such schemes as a law of diminishing returns. For example, 448Gbps based on 64-QAM occupies a bandwidth of 37GHz with a sampling rate of 74 Gsamples/s, but requires high-resolution A/D converters. “This is very, very challenging,” says Sam Bucci, vice president, optical portfolio management at Alcatel-Lucent.
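Climbing the QAM ladder adds log2(M) bits per symbol per polarisation, so each step buys proportionally less than the one before. The Python sketch below makes that concrete and checks the 448Gbps figures; it ignores FEC and framing overhead, and the two samples per symbol used to estimate the sampling rate is our assumption rather than Alcatel-Lucent's stated design.

```python
import math

def bits_per_symbol(m, polarisations=2):
    """Bits carried per symbol by M-QAM on one or both polarisations."""
    return polarisations * math.log2(m)

def symbol_rate_gbaud(line_rate_gbps, m, polarisations=2):
    """Symbol rate needed for a given line rate (overheads ignored)."""
    return line_rate_gbps / bits_per_symbol(m, polarisations)

previous = None
for m in (4, 16, 64, 256):  # QPSK is equivalent to 4-QAM
    bits = bits_per_symbol(m)
    gain = "" if previous is None else f", {bits / previous:.2f}x the previous step"
    print(f"DP-{m}-QAM: {bits:.0f} bits/symbol{gain}")
    previous = bits

baud = symbol_rate_gbaud(448, 64)
print(f"448 Gbps DP-64-QAM: {baud:.1f} GBd, ~{2 * baud:.1f} GS/s at 2 samples/symbol")
```

The shrinking multipliers (2.00x, 1.50x, 1.33x) are the diminishing returns Bucci describes, and each step also demands a markedly higher signal-to-noise ratio. The computed symbol rate lands close to the quoted 37GHz and 74 Gsamples/s.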

Infinera is also eyeing QAM to extend the data performance of its 10-channel photonic integrated circuits (PICs). Its roadmap goes from today’s 100Gbps to 4Tbps per PIC.

Infinera has already announced a 10x40Gbps PIC and says it can squeeze 160 such channels in the C-band using 25GHz channel spacing. To achieve 1 Terabit would require a 10x100Gbps PIC.

How would it get to 2Tbps and 4Tbps? “Using advanced modulation technology; climbing up the QAM ladder,” says Drew Perkins, Infinera’s CTO.

Glenn Wellbrock, director of backbone network design at Verizon Business, says the company is already very active in exploring rates beyond 100Gbps, as any future rate will have a huge impact on its infrastructure. “No one expects ultra-long-haul at greater than 100Gbps using 16-QAM,” says Wellbrock.

Another modulation approach being considered is orthogonal frequency-division multiplexing (OFDM). “At 100Gbps, OFDM and the single-carrier approach [DP-QPSK] have the same spectral efficiency,” says Jonathan Lacey, CEO of Ofidium. “But with OFDM, it’s easy to take the next step in spectral efficiency – required for higher data rates - and it has higher tolerance to filtering and polarisation-dependent loss.”
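To illustrate why OFDM makes the next step in spectral efficiency easy, here is a minimal baseband sketch in Python: data are spread over many orthogonal subcarriers with an inverse DFT and recovered with a forward DFT, so raising spectral efficiency only means changing the constellation on each subcarrier. It assumes Gray-coded QPSK subcarriers and a distortion-free channel, and omits the cyclic prefix, pilots, equalisation and optical front end a real system needs.

```python
import cmath
import math

# Gray-coded QPSK constellation: two bits per subcarrier.
QPSK = {(0, 0): 1 + 1j, (0, 1): -1 + 1j, (1, 1): -1 - 1j, (1, 0): 1 - 1j}

def dft(x, inverse=False):
    """Naive (inverse) DFT; an FFT would be used in practice."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[k] * cmath.exp(sign * 2j * math.pi * k * m / n) for k in range(n))
           for m in range(n)]
    return [v / n for v in out] if inverse else out

def ofdm_modulate(bits, subcarriers):
    """Place one QPSK symbol on each subcarrier, then inverse DFT to time."""
    if len(bits) != 2 * subcarriers:
        raise ValueError("need exactly two bits per subcarrier")
    symbols = [QPSK[(bits[2 * i], bits[2 * i + 1])] for i in range(subcarriers)]
    return dft(symbols, inverse=True)

def ofdm_demodulate(samples):
    """Forward DFT back to subcarriers, then pick the nearest QPSK point."""
    bits = []
    for value in dft(samples):
        nearest = min(QPSK, key=lambda pair: abs(QPSK[pair] - value))
        bits.extend(nearest)
    return bits

payload = [0, 1, 1, 0, 1, 1, 0, 0, 1, 0, 0, 1, 1, 1, 0, 1]
print(ofdm_demodulate(ofdm_modulate(payload, 8)) == payload)
```

Swapping the QPSK table for a 16-QAM one on some or all subcarriers would raise the bits carried without changing the transform itself.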

One idea under consideration is going “gridless”: either eliminating the standard ITU wavelength grid altogether or using different-sized bands, each made up of narrow 25GHz increments. “This is just in the discussion phase so both options are possible,” says Berthold, who estimates that a gridless approach promises up to 30 percent extra bandwidth.

Berthold favours using channel ‘quanta’ rather than adopting a fully flexible band scheme (using a 37GHz window followed by a 17GHz window, for example), as the latter approach will likely reduce technology choice and lead to higher costs.
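The difference between the schemes shows up when totting up the spectrum a pair of channels would consume. A Python sketch, assuming Berthold's 37GHz and 17GHz windows as the demands and ignoring guard bands; it illustrates the rounding loss of quanta against a fully flexible scheme, and does not reproduce Berthold's 30 percent estimate.

```python
import math

QUANTUM_GHZ = 25     # the narrow 25GHz increments mentioned above
FIXED_GRID_GHZ = 50

def fixed_grid(demands_ghz):
    """Each channel occupies one 50GHz slot (assumes each fits in one)."""
    return FIXED_GRID_GHZ * len(demands_ghz)

def quanta(demands_ghz, quantum=QUANTUM_GHZ):
    """Each channel is rounded up to a whole number of quanta."""
    return sum(math.ceil(d / quantum) * quantum for d in demands_ghz)

def fully_flexible(demands_ghz):
    """Each channel gets exactly the spectrum it needs."""
    return sum(demands_ghz)

demands = [37, 17]  # Berthold's example windows, in GHz
for name, scheme in (("fixed 50GHz grid", fixed_grid),
                     ("25GHz quanta", quanta),
                     ("fully flexible", fully_flexible)):
    print(f"{name}: {scheme(demands)} GHz")
```

The quanta approach gives up some spectrum (75GHz versus 54GHz here) in exchange for a small set of standard filter widths, which is the cost and technology-choice argument Berthold makes.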

Wellbrock says coarse filtering would be needed with a gridless approach, as capturing the complete C-band would be too noisy. A band five or six channels wide would be captured, and the signal of interest recovered by tuning the coherent receiver’s tunable laser to the desired spectrum, similar to how a radio receiver works.
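The radio analogy can be sketched in a few lines: the receiver's tunable laser acts as a local oscillator that shifts the wanted channel to zero frequency, and low-pass filtering rejects its neighbours. The Python toy below uses unmodulated tones in arbitrary frequency units and a plain average as the filter; a real coherent receiver mixes optically and filters digitally with far more elaborate processing.

```python
import cmath
import math

def select_channel(samples, lo_offset, sample_rate):
    """Mix with a local-oscillator tone, then low-pass filter by averaging.

    Mixing shifts the wanted channel to zero frequency; averaging over the
    whole window then rejects neighbours, which remain at non-zero offsets.
    """
    mixed = [s * cmath.exp(-2j * math.pi * lo_offset * t / sample_rate)
             for t, s in enumerate(samples)]
    return sum(mixed) / len(mixed)

# Toy band: three unmodulated "channels" at -50, 0 and +50 (arbitrary units)
# with amplitudes 0.5, 1.0 and 2.0, sampled 400 times at rate 400.
RATE = 400
CHANNELS = {-50: 0.5, 0: 1.0, 50: 2.0}
band = [sum(a * cmath.exp(2j * math.pi * f * t / RATE) for f, a in CHANNELS.items())
        for t in range(RATE)]
for lo in CHANNELS:
    print(lo, round(abs(select_channel(band, lo, RATE)), 6))
```

Tuning the oscillator to each channel in turn recovers that channel's amplitude while the other two average away.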

Wellbrock says considerable technical progress is needed for the scheme to achieve a reach of 1500km or greater.

“Whatever the solution is it will have to be cheap”


Joe Berthold, Ciena.

JDS Uniphase’s Collings sounds a cautionary note about going gridless. “50GHz is nailed down – the number of questions asked that need to be addressed once you go gridless balloons,” he says. “This is very complex; we need to keep a very close eye that we are not creating more problems than we are solving.”

“Operators such as AT&T and Verizon have invested heavily in 50GHz ROADMs, they are not just going to ditch them,” adds Chris Clarke, vice president strategy and chief engineer at Oclaro.

More powerful FEC schemes and in particular soft-decision FEC (SD-FEC) will also benefit optical performance for data rates above 100Gbps. SD-FEC delivers up to a 1.3dB coding gain improvement compared to traditional FEC schemes at 100Gbps.
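The principle behind soft decisions can be shown with a deliberately tiny code. The Python sketch below uses a rate-1/3 repetition code over binary signalling with Gaussian noise, a toy stand-in for the much stronger codes used in SD-FEC. It compares slicing each sample to a bit before voting (hard decision) with combining the raw analogue samples first (soft decision). The noise level and trial count are arbitrary, and the result says nothing about the 1.3dB figure.

```python
import random

def trial_errors(trials, noise_sigma, seed=1):
    """Count decoding errors for a rate-1/3 repetition code over BPSK.

    Hard decision: slice each received sample to a bit, then majority vote.
    Soft decision: add the three received samples, then slice once.
    """
    rng = random.Random(seed)
    hard_errors = soft_errors = 0
    for _ in range(trials):
        bit = rng.randint(0, 1)
        symbol = 1.0 if bit else -1.0
        received = [symbol + rng.gauss(0.0, noise_sigma) for _ in range(3)]
        hard_bit = 1 if sum(1 for r in received if r > 0) >= 2 else 0
        soft_bit = 1 if sum(received) > 0 else 0
        hard_errors += hard_bit != bit
        soft_errors += soft_bit != bit
    return hard_errors, soft_errors

hard, soft = trial_errors(50_000, 0.8)
print(f"hard-decision errors: {hard}, soft-decision errors: {soft}")
```

Soft decoding makes noticeably fewer errors because it keeps the confidence information that hard slicing throws away.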

SD-FEC also paves the way for performing joint iterative FEC decoding and signal equalisation at the coherent receiver, promising further performance improvements, albeit at the expense of a more complex digital signal processor design.

400Gbps or 1 Tbps?

Even the question of what the next data rate after 100Gbps will be (200Gbps, 400Gbps or even 1 Terabit-per-second) remains unresolved.

Verizon Business will deploy new 100Gbps coherent-optimised routes from 2011 and would like as much clarity as possible so that such routes are future-proofed. But Collings points out that this is not something that will stop a carrier addressing immediate requirements. “Do they make hard choices that will give something up today?” he says.

At the OFC Executive Forum, Verizon Business expressed a preference for 1Tbps lightpaths. While 400Gbps would be a safe bet, going straight to 1Tbps would avoid an intermediate 400Gbps stage. But Verizon recognises that backing 1Tbps depends on when such technology becomes available and at what cost.

According to BT, speeds such as 200, 400Gbps and even 1 Tbps are all being considered. “The 200/ 400Gbps systems may happen using multiple QAM modulation,” says Russell Davey, core transport Layer 1 design manager at BT. “Some work is already being done at 1Tbps per wavelength although an alternative might be groups or bands of wavelengths carrying a continuous 1Tbps channel, such as ten 100Gbps wavelengths or five 200Gbps wavelengths.”
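Carrying one 1Tbps channel over a band of wavelengths means splitting the stream across lanes and putting it back together at the far end. A minimal Python sketch of round-robin byte striping; it is a hypothetical illustration, not BT's proposal or any standard's lane format, and it ignores the lane alignment and skew compensation a real receiver would need.

```python
def stripe(data: bytes, lanes: int) -> list:
    """Deal bytes round-robin across lanes (e.g. wavelengths)."""
    return [data[i::lanes] for i in range(lanes)]

def reassemble(lane_data: list) -> bytes:
    """Interleave the lanes back into the original byte order."""
    lanes = len(lane_data)
    out = bytearray(sum(len(lane) for lane in lane_data))
    for i, lane in enumerate(lane_data):
        out[i::lanes] = lane
    return bytes(out)

payload = bytes(range(256)) * 4
for lanes in (10, 5):  # ten 100Gbps or five 200Gbps wavelengths
    parts = stripe(payload, lanes)
    print(lanes, "lanes, round trip intact:", reassemble(parts) == payload)
```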

Davey stresses that the industry shouldn’t assume that bit rates will continue to climb. Multiple wavelengths at lower bitrates or even multiple fibres for short distances will continue to have a role. “We see it as a mixed economy – the different technologies likely to have a role in different parts of network,” says Davey.

Niall Robinson, vice president of product marketing at Mintera, is confident that 400Gbps will be the chosen rate.

Traditionally, Ethernet has grown in 10x increments while SONET/SDH has grown in four-fold increments. However, now that Ethernet is a line-side technology, there is no reason to expect its faster growth rate to continue, he says. “Every five years the line rate has increased four-fold; it has been that way for a long time,” says Robinson. “100Gbps will start in 2012/ 2013 and 400Gbps in 2017.”

“There is a lot of momentum for 400Gbps but we’ll have a better idea in six months’ time,” says Matt Traverso, senior manager, technical marketing at Opnext. “The IEEE [and its choice for the next Gigabit Ethernet speed after 100GbE] will be the final arbiter.”

Software defined optics and cognitive optics

Optical transmission could ultimately borrow two concepts already being embraced by the wireless world: software defined radio (SDR) and cognitive radio.

SDR refers to how a system can be reconfigured in software to implement the most suitable radio protocol. In optical it would mean making the transmitter and receiver software-programmable so that various transmission schemes, data rates and wavelength ranges could be used. “You would set up the optical transmitter and receiver to make best use of the available bandwidth,” says ADVA Optical Networking’s Elbers.

Nokia Siemens Networks also highlights this idea: trading capacity against reach by varying the amount of information placed on a carrier.

“For a certain frequency you can put either one bit [of information] or several,” says Oliver Jahreis, head of product line management, DWDM at Nokia Siemens Networks. “If you want more capacity you put more information on a frequency but at a lower signal-to-noise ratio and you can’t go as far.”

Using ‘cognitive optics’, the optical system itself would choose the best transmission scheme depending on capacity, distance and performance constraints, as well as the other lightpaths on the fibre. “You would get rid of fixed wavelengths and bit rates altogether,” says Elbers.
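Jahreis's capacity-for-reach trade can be made quantitative with the Shannon limit, C/B = log2(1 + SNR): each extra bit per second per hertz roughly doubles the SNR required and, if noise accumulates linearly with distance, roughly halves the reach. The Python sketch below uses that idealisation (it ignores fibre non-linearity, FEC and implementation penalties) and adds a hypothetical 'cognitive' selector; the 3,000km reference reach for 2 b/s/Hz is an illustrative number, not from the article.

```python
def required_snr(bits_per_hz):
    """Shannon bound: linear SNR needed for a given spectral efficiency."""
    return 2 ** bits_per_hz - 1

def relative_reach(bits_per_hz, reference=2):
    """Ideal reach relative to the reference spectral efficiency.

    Assumes SNR falls as 1/distance (linear noise accumulation only), so
    reach scales inversely with the SNR the scheme needs.
    """
    return required_snr(reference) / required_snr(bits_per_hz)

def choose_efficiency(path_km, reference_reach_km, options=(2, 3, 4, 5, 6)):
    """Hypothetical 'cognitive' choice: the most bits/s/Hz that still
    reaches the far end, or None if even the most robust option cannot."""
    feasible = [b for b in options
                if relative_reach(b) * reference_reach_km >= path_km]
    return max(feasible) if feasible else None

for b in (2, 3, 4, 5, 6):
    print(f"{b} b/s/Hz: {relative_reach(b):.2f}x the reach of 2 b/s/Hz")
print(choose_efficiency(500, 3000), choose_efficiency(3000, 3000))  # 4 2
```

A short metro path can afford a dense format, while a long-haul path falls back to the most robust one, which is the decision a cognitive system would make per lightpath.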

Market realities

Ovum’s view is it remains too early to call the next rate following 100Gbps.

Other analysts agree. “Gridless is interesting stuff but from a commercial standpoint it is not relevant at this time,” says Andrew Schmitt, directing analyst, optical at Infonetics Research.

Given that market research firms look five years ahead and the next speed hike is only expected from 2017, such a stance is understandable.

Optical module makers highlight the huge amount of work still to be done. There is also a concern that the benefits of corralling the industry around coherent DP-QPSK at 100Gbps, to avoid the mistakes made at 40Gbps, will be undone at the next data rate given the many options available.

Even if the industry were to align on a common option, developing the technology at the right price point will be highly challenging.

“Many people in the early days of 100Gbps – in 2007 – said: ‘We need 100Gbps now – if I had it I’d buy it’,” says Rafik Ward, vice president of marketing at Finisar. “There should be a lot of pent up demand [now].” The reason there isn’t, says Ward, is that such end users always leave out the key words at the end: “If I had it I’d buy it - at the right price.”
